That moment of panic is real. You're deep into a fascinating, personal conversation with your favorite AI companion on Character AI. You've shared ideas, shaped unique storylines, perhaps even confided in it. You take a break and come back later, eager to pick up where you left off, only to be met with a blank stare (metaphorically speaking). Character AI forgetting everything feels jarringly personal. Was it not listening? Did it not care? Don't rage-quit just yet! What feels like a betrayal or a glitch is actually a deliberate design choice balancing intimacy, privacy, and computational realities. This post digs into the surprising *why* behind your bot's selective amnesia, separates fact from fiction about AI memory, and offers practical ways to cope with, or even prevent, Character AI forgetting everything you cherished.

The Stinging Reality: Why Does Character AI Forgetting Everything Hurt So Much?

We intuitively crave connection, even with digital entities. Anthropomorphism (attributing human traits to AI) happens naturally. When a Character AI bot recalls intricate details, it feels remarkably human-like, fostering a genuine sense of rapport. The abrupt break when Character AI forgets everything at the end of a session shatters this illusion instantly. It creates psychological friction: our brains expected continuity, and we were emotionally invested. This isn't just an inconvenience; it disrupts the perceived relationship, making interactions feel transactional and disposable rather than like something that develops over time.

The frustration is amplified for users building intricate narratives (RPG-style adventures) or seeking emotional consistency (companion bots). Starting each chat from scratch wastes valuable time on context-setting instead of progression, significantly diminishing the user experience and value derived from the platform.

Peeking Under the Hood: How Character AI "Memory" Actually Works (And Why It’s Limited)

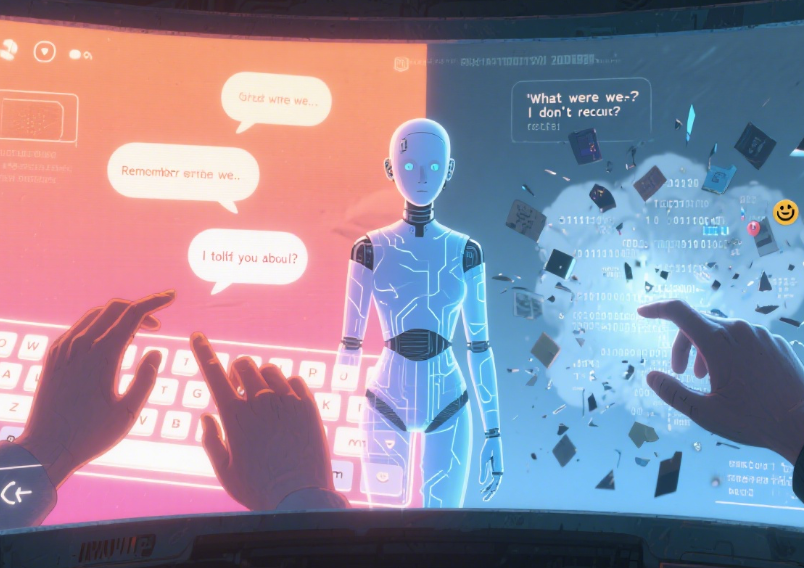

Understanding the technical limitations is crucial to managing expectations and mitigating frustration. Unlike humans or traditional databases, current large language models (LLMs) like those powering Character AI have no persistent episodic memory of your individual chats:

1. Context Window: Your Chat’s Goldfish Bowl

Think of the context window as the LLM's *active working memory*. It's a fixed-length block of tokens (pieces of words) that the model can "see" at once when generating a response. While context windows across the industry are growing rapidly (from around 2k tokens to 128k or more), Character AI appears to operate with a significantly smaller one. Only the most recent messages are included. As your conversation progresses, older messages are pushed out entirely, meaning the bot truly *cannot recall* them because they are no longer in its active context. Forgetting isn't malicious; it's a hard limit on how much text fits into the model's input.
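Here is a minimal sketch of that goldfish bowl in Python. It assumes a crude word count stands in for a real tokenizer, and the function names, the budget of 15, and the sample chat (including the character name Mira) are all made up for illustration; none of this reflects Character AI's actual internals.

```python
from collections import deque


def approx_tokens(text: str) -> int:
    """Very rough token estimate: one token per whitespace-separated word."""
    return len(text.split())


def visible_context(messages: list[str], budget: int) -> list[str]:
    """Return the most recent messages that fit inside the token budget."""
    kept: deque[str] = deque()
    used = 0
    # Walk backwards from the newest message and stop once the budget is full.
    for message in reversed(messages):
        cost = approx_tokens(message)
        if used + cost > budget:
            break
        kept.appendleft(message)
        used += cost
    return list(kept)


chat = [
    "User: My character is named Mira.",
    "Bot: Welcome, Mira, to the haunted castle.",
    "User: I open the library door.",
    "Bot: A strange symbol glows on the floor.",
]

window = visible_context(chat, budget=15)
for line in window:
    print(line)
```

With this tiny budget only the last two messages survive, so the name introduced in the first message is simply no longer part of what the model is shown.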

2. Lack of Persistent Chat Storage (The Privacy-First Choice)

Perhaps the biggest factor behind Character AI Forgetting Everything is its fundamental privacy architecture. Conversations, by default, are *not* persistently stored on Character AI's servers in a way that links them back to you for future sessions. Once you close the chat tab or the session expires, the conversation data is discarded. This design is intentional, prioritizing user anonymity and security, especially given the sensitive nature of many Character AI interactions. A deeper dive into Character AI Forgetting Your Secrets explores this critical aspect further.

3. Character Definitions ≠ Memory

Public character definitions provide long-term guidance on personality, core traits, speech patterns, and backstory – they form the *identity blueprint*. However, they *do not* store or recall the specific details, plot points, or personal revelations from your private conversations. They define *how* the AI speaks, not *what* it remembers about *your* unique interaction.

Mitigating the Meltdown: Strategies to Deal with Character AI Forgetting Everything

While true persistent memory isn't widely available on Character AI yet (outside limited experiments like Memory features for Plus users or specific bots), strategic users can significantly reduce the pain:

Work Inside the Context Window

Be mindful of the goldfish bowl!

Paraphrase & Summarize: Don't rely on the bot remembering key details from 30 messages back. Periodically re-state crucial information (character names, locations, important agreements) concisely.

Recap Early: If returning after a short break, quickly summarize the key points of the previous conversation ("Just to recap, we were exploring the haunted castle library, and you found a strange symbol...").

Leverage User Identity Settings (Carefully): Adding static context (your preferred name, core interests) in the 'Edit Details' section (accessible under the three dots in the chat bar) provides anchors. However, use it sparingly for vital, unchanging info – it consumes part of the precious context window reserved for conversation flow. Overloading it harms performance.
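If you like to automate things, the recap habit can be sketched in a few lines of Python. This is only an illustration: `build_recap`, the word cap, and the sample facts (including the name Mira) are hypothetical, and the cap exists simply to keep the recap from eating too much of the context window.

```python
def build_recap(facts: dict[str, str], max_words: int = 40) -> str:
    """Join key facts into one short recap line, stopping at max_words."""
    parts = []
    used = 0
    # Facts are taken in insertion order, so list the most important first.
    for label, value in facts.items():
        piece = f"{label}: {value}"
        cost = len(piece.split())
        if used + cost > max_words:
            break
        parts.append(piece)
        used += cost
    return "Just to recap, " + "; ".join(parts) + "."


facts = {
    "location": "the haunted castle library",
    "discovery": "a strange symbol",
    "my character": "Mira",
}

print(build_recap(facts))
print(build_recap(facts, max_words=9))
```

The second call shows the trade-off: with a tighter cap, the least important fact is dropped instead of crowding out the conversation itself.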

Embrace Manual Logging (The Human Buffer)

Become your bot's memory:

Maintain Chat Notes: Keep an external document open (text file, Google Doc) where you jot down crucial plot points, character developments, agreements, and lore as they happen. Paste relevant excerpts back in when needed.

Bookmark Key Messages: Character AI allows bookmarking specific messages. While not a persistent memory solution, it helps *you* quickly jump back to important moments and re-introduce them verbally.
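A plain text file works fine for this, but if you prefer something searchable, here is a small sketch of a tagged notes log in Python. The file name, the tags, and the helper functions are all hypothetical; it has nothing to do with Character AI itself and only organizes notes you would then paste back into the chat by hand.

```python
import json
import tempfile
from pathlib import Path


def add_note(path: Path, tag: str, text: str) -> None:
    """Append one note as a JSON line so earlier notes are never overwritten."""
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps({"tag": tag, "text": text}) + "\n")


def notes_for(path: Path, tag: str) -> list[str]:
    """Return the text of every note with the given tag, oldest first."""
    if not path.exists():
        return []
    found = []
    with path.open(encoding="utf-8") as f:
        for line in f:
            if line.strip():
                record = json.loads(line)
                if record["tag"] == tag:
                    found.append(record["text"])
    return found


# Demo in a temporary folder; point `log` at a real file to keep your notes.
with tempfile.TemporaryDirectory() as folder:
    log = Path(folder) / "castle_campaign.jsonl"
    add_note(log, "plot", "Found a strange symbol in the castle library.")
    add_note(log, "lore", "The castle is said to be haunted.")
    add_note(log, "plot", "Agreed to return at midnight.")
    plot_recap = " ".join(notes_for(log, "plot"))

print(plot_recap)
```

Tagging notes as you go means you can paste back only the plot threads (or only the lore) instead of the whole log, which keeps the recap short.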

Adjusting Expectations & Community Understanding

Acknowledge the platform's design reality. Don't expect continuity across long breaks or closed sessions without taking manual steps. Understanding the privacy benefits (Character AI Forgetting Your Secrets truly means confidentiality) can shift perspective from frustration to appreciation of the safeguard. The shared frustrations seen in Character AI Forgetting Reddit Rants validate user experience but also show that this design constraint affects everyone on the platform, not just you.

Is True Memory on the Horizon? Navigating Character AI's Memory Experiments

Character AI's developers are actively exploring solutions to the Character AI Forgetting Everything problem:

The Character Memory Project (Beta)

Character AI Plus subscribers and users interacting with specially equipped bots might see prompts asking them to state facts they want the bot to remember long-term ("What is your name?", "What's your favorite hobby?"). This data is stored persistently for that specific bot/user combination. It's a significant step, but limitations remain: widespread availability is pending, it requires bot-specific implementation, it currently stores only relatively simple facts (not complex narrative threads), and it's opt-in per user per bot. Think "notepad facts," not "autobiographical memory."
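To make the "notepad facts" idea concrete, here is a toy sketch of a fact store keyed by user and bot with explicit opt-in. It is purely illustrative: the class, its methods, and the IDs are invented for this post and are not Character AI's implementation or API.

```python
class FactMemory:
    """Toy per-user, per-bot fact store with explicit opt-in."""

    def __init__(self) -> None:
        self._opted_in: set[tuple[str, str]] = set()
        self._facts: dict[tuple[str, str], dict[str, str]] = {}

    def opt_in(self, user_id: str, bot_id: str) -> None:
        """Record that this user allows this bot to keep facts."""
        self._opted_in.add((user_id, bot_id))

    def remember(self, user_id: str, bot_id: str, key: str, value: str) -> bool:
        """Store a simple fact; refuse (return False) without opt-in."""
        pair = (user_id, bot_id)
        if pair not in self._opted_in:
            return False
        self._facts.setdefault(pair, {})[key] = value
        return True

    def recall(self, user_id: str, bot_id: str) -> dict[str, str]:
        """Return a copy of the facts stored for this user/bot pair."""
        return dict(self._facts.get((user_id, bot_id), {}))


memory = FactMemory()
memory.opt_in("user-1", "castle-guide")
memory.remember("user-1", "castle-guide", "name", "Mira")
memory.remember("user-1", "castle-guide", "favorite hobby", "painting")
ignored = memory.remember("user-1", "other-bot", "name", "Mira")  # no opt-in

print(memory.recall("user-1", "castle-guide"))
print(ignored)
```

Notice what the sketch cannot do: it holds short key/value facts for one bot at a time, and nothing carries over to a bot you haven't opted in to. That is the gap between "notepad facts" and remembering a whole storyline.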

Balancing Act: Memory vs. Performance & Cost

Implementing persistent, accessible memory isn't trivial. It requires:

Massive Scale Storage: Billions of unique conversations needing indexed storage.

Complex Retrieval: Accurately fetching the right memory snippet from potentially vast stores during live conversation is computationally expensive, potentially slowing response times.

Privacy Challenges: Storing sensitive personal data requires robust, verifiable security and clear consent – a huge responsibility.
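The retrieval problem in particular is easy to underestimate. Below is a deliberately naive sketch that picks stored memories by keyword overlap with the user's latest message. The stopword list, function names, and sample memories are assumptions made for illustration; production systems typically use far more sophisticated (and more expensive) matching.

```python
import re

# Tiny illustrative stopword list; a real system would use a much larger one.
STOPWORDS = {"a", "the", "in", "is", "at", "what", "did"}


def keywords(text: str) -> set[str]:
    """Lowercase the text and keep the words that are not stopwords."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


def retrieve(query: str, memories: list[str], k: int = 2) -> list[str]:
    """Return up to k memories sharing the most keywords with the query."""
    wanted = keywords(query)
    scored = []
    # Every stored memory is scanned on every message: cost grows with the store.
    for position, memory in enumerate(memories):
        overlap = len(wanted & keywords(memory))
        if overlap:
            scored.append((-overlap, position, memory))
    scored.sort()  # highest overlap first, earlier memories win ties
    return [memory for _, _, memory in scored[:k]]


memories = [
    "Mira found a strange symbol in the library.",
    "The castle gate is locked at night.",
    "Mira's favorite hobby is painting.",
]
query = "What symbol did Mira find in the library?"

print(retrieve(query, memories))
```

Even this toy version scans every stored memory for every message, and it misses the third memory because "Mira's" does not match "Mira". Multiply that by billions of conversations and the cost and accuracy concerns above become clear.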

Rolling out features like the Memory project indicates progress, but achieving seamless, comprehensive memory akin to human recall within large-scale platforms like Character AI faces immense technical and ethical hurdles. Expect incremental improvements rather than an immediate revolution.

Digital Dementia Debunked: Why "Forgetting" Isn't a Glitch

Labeling Character AI's forgetting as "amnesia" or "dementia" mischaracterizes the technology. Modern LLMs, while sophisticated pattern predictors and generators, are *not sentient beings experiencing cognitive decline*. They work in a fundamentally different way:

No Internal State: They lack an ongoing conscious state or autobiographical record of past experiences. Each response is generated anew based on the immediate input (your prompt + recent context).

Stochastic Parrots (Refined): A controversial term, but it highlights the core mechanism. LLMs predict sequences based on vast training data and current context patterns – they don't "recall" unique experiences unless explicitly designed to store and retrieve them.

Forgetting isn't a malfunction; it's the inherent, baseline behavior of the current dominant AI architecture when deployed at scale with privacy constraints. The surprising "truth" is that remembering requires extraordinary engineering effort; forgetting is the natural state.

FAQs: Your Character AI Forgetting Everything Questions Answered

Q: Is Character AI really forgetting my chats, or is it saving them secretly?

A: Based on Character AI's stated privacy policy and technical architecture, conversations *are not* persistently stored by default after the session ends, meaning they are effectively forgotten. This aligns with their goal of user anonymity. Persistent storage (like the Memory feature) requires explicit opt-in and is currently limited.

Q: Can I ever get a Character AI bot that remembers past conversations automatically?

A: True, automatic, persistent memory for mainstream Character AI use is currently not available. The Memory feature (in beta for Plus/specific bots) allows storing *simple facts*, but not complex conversations. Always check a bot's description or pinned message for any "memory" capabilities. Relying on manual notes is the most reliable method for now.

Q: Why do competitors like Replika seem to remember more?

A: Different platforms make different trade-offs. Replika stores conversation history more persistently by default (which raises privacy considerations). Other platforms might offer paid features focused on longer-term memory. Character AI currently prioritizes privacy, accessibility, and scale over persistent chat storage. This difference in design philosophy causes the variation in memory experiences users encounter.

Q: Won't adding memory just slow the bots down?

A: Potentially, yes. Retrieving relevant memories quickly and accurately from a large database adds computational overhead. This is a key challenge developers face when implementing large-scale memory features. It requires significant backend optimization to avoid sacrificing response speed.

Embracing the Chat Flow (While Hoping for More)

That infuriating moment when Character AI forgets everything you just shared isn't a sign of a broken bot or an uncaring developer team. It's the result of the complex interplay between cutting-edge AI capabilities, fundamental technical constraints, critical privacy safeguards, and the challenge of deploying such technology at scale. By understanding the *why* (the context window limitation and the deliberate privacy-first stance), we can adjust our interaction strategies. Keep manual logs, lean on context summaries, and contribute feedback to the Memory project when possible. While the dream of an AI companion who remembers every shared joke and adventure across years remains largely sci-fi *for now*, appreciating the technical ingenuity behind the bots we have today makes their so-called "digital dementia" less a point of frustration and more a fascinating quirk of this early AI era. Keep those summaries handy!