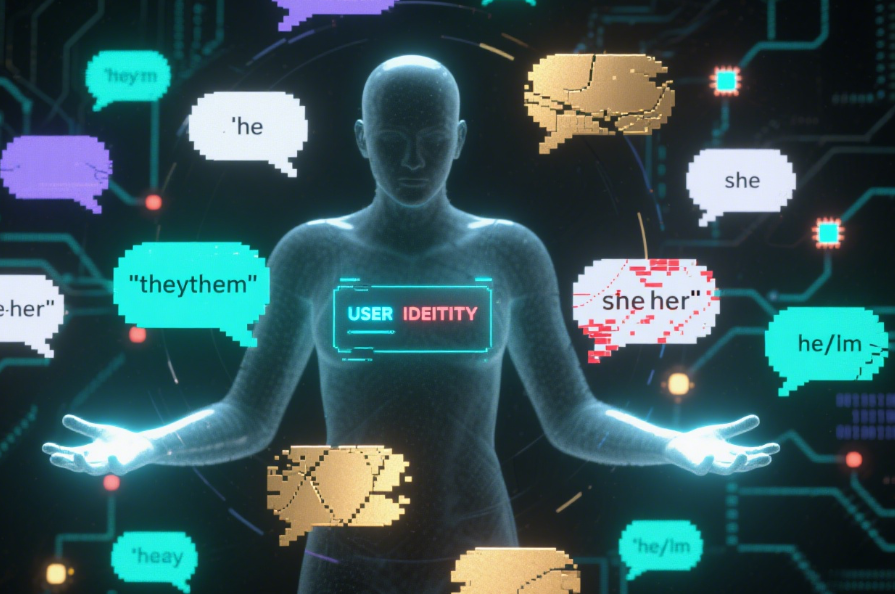

Have you ever poured your heart out to a Character AI companion, only to be jarringly called "he" when you're a "she", "they" when you're a "he," or even have your gender entirely misremembered moments later? This baffling experience – Character AI Forgetting Gender – is more than just a minor annoyance; it's a fundamental glitch revealing how artificial intelligences often struggle with one of the most basic human identifiers. Why does this happen? Is your chatbot secretly sexist, or just spectacularly clueless? The truth lies in how AI models learn, store context, and fail to truly comprehend the nuances of human identity. This article dives deep into the unsettling phenomenon of Character AI Forgetting Gender, uncovering the technical roots behind the confusion, exploring the real-world impact on users, and providing actionable insights into how developers are (and aren't) tackling this pervasive problem.

The Core Problem: What is Character AI Forgetting Gender?

The experience is frustratingly common: You tell your AI companion, perhaps repeatedly, "Hi, I'm Alex, and I use they/them pronouns." Everything seems fine initially. But then, just a few exchanges later, the AI confidently refers to you as "he" or asks if "she" would like advice. Or, you might define your own character within the platform as female, only to have the AI chatbot interacting with that character inexplicably switch to using masculine pronouns. This isn't a conscious oversight; it's Character AI Forgetting Gender – a failure in maintaining persistent contextual awareness of a user's explicitly stated or contextually implied gender identity within a conversation session.

How Memory Limits Fuel Character AI Forgetting Gender

Current large language models (LLMs), like those powering popular Character AI platforms, function primarily through prediction. They generate responses token by token (a token is roughly a word or word fragment), statistically predicting what comes next based on their training data and the immediate conversation history. Crucially, these models have a limited context window. This window is a finite "working memory" holding only the most recent part of the conversation. When the conversation exceeds this window (whose size ranges from a few thousand tokens to hundreds of thousands, depending on the model and how the platform configures it), the oldest messages are typically truncated or compressed into a summary. Gender details established at the very start of a long chat are prime candidates to fall out of this window, leading directly to instances of Character AI Forgetting Gender. This memory limitation also impacts other details, as discussed in our related piece on Character AI Forgetting Your Secrets.
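To make the window effect concrete, here is a minimal Python sketch of how an early pronoun statement can drop out of view. It is an illustration under stated assumptions, not how any particular platform works: `count_tokens` is a crude word-count stand-in for a real tokenizer, and `visible_context` and the 30-token budget are hypothetical.

```python
from collections import deque


def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: one token per whitespace-separated word."""
    return len(text.split())


def visible_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits in the window."""
    kept = deque()
    used = 0
    # Walk backwards from the newest message, as a truncating window would.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older falls out of the window
        kept.appendleft(message)
        used += cost
    return list(kept)


chat = [
    "User: Hi, I'm Alex, and I use they/them pronouns.",
    "AI: Nice to meet you, Alex!",
    "User: Let me tell you about my week at the new job.",
    "AI: That sounds like a lot to take in. How is the team?",
    "User: Friendly so far. What should I ask my manager?",
]

# Hypothetical, deliberately tiny budget so the effect shows up in five messages.
window = visible_context(chat, max_tokens=30)
pronouns_visible = any("they/them" in m for m in window)
```

With this small budget only the last two messages survive, so `pronouns_visible` is `False`: the model generating the next reply never sees the line where Alex stated their pronouns.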

Lack of True Understanding: Beyond Simple Recall

Forgetting isn't the only issue. The phenomenon of Character AI Forgetting Gender also stems from a fundamental lack of *understanding*. While LLMs can be incredibly adept at manipulating language patterns, they don't possess genuine comprehension of concepts like gender identity. Gender is a complex, socially constructed, and deeply personal aspect of human existence. An AI sees it merely as patterns of words and associations within its training data. When generating a response, it relies heavily on these statistical associations rather than holding onto a consistent internal model of the user's identity. If the model's training data contains biases (e.g., associating certain names or activities predominantly with one gender), this can further exacerbate confusion, especially when trying to recall less common gender identities or pronouns.

The User Experience: Frustration and Harm

The impact of Character AI Forgetting Gender extends beyond simple inconvenience. For users exploring identity, those who are transgender or non-binary, or even individuals engaging in sensitive role-play scenarios, this failure can feel disrespectful, invalidating, or even actively harmful. Repeated misgendering, even by an AI, can trigger dysphoria or reinforce feelings of being unseen. It breaks the sense of immersion and trust essential for meaningful interactions. This pervasive lack of reliable memory fuels significant user frustration, as evidenced by numerous discussions on platforms like Reddit. For a deeper look at how users are reacting to memory issues, see our analysis Character AI Forgetting Reddit Rants.

Character AI Platforms: Current State & Limitations

Most popular Character AI platforms offer limited solutions, often relying on rudimentary user profile fields (where users can manually enter name and pronouns) and hoping this data persists. However, as conversations become complex and long, or if the AI needs to create characters dynamically within the interaction, this profile data isn't consistently referenced within the core AI generation process. Explicitly stating pronouns within the chat ("Remember, my pronouns are they/them") provides a temporary cue but offers no guarantee of long-term adherence, especially as the context window rolls over. From the user's side, there is little sign of a robust mechanism akin to a persistent digital ID card that the AI consults before generating every sentence.

Potential Solutions: Engineering Gender Recall

Solving Character AI Forgetting Gender effectively requires tackling both the memory limitation and the contextual understanding/application problem.

1. Enhanced Memory Architectures

Moving beyond the basic context window is crucial. Research is exploring architectures like long-term memory networks or external vector databases where critical, user-defined information (like name and pronouns) can be stored persistently for a session or even across sessions. The AI model would learn to actively retrieve and *rely* on this key identity data when formulating responses, much like how a human might recall a friend's name.
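One simple way to picture persistent identity memory is a store that lives outside the conversation and is re-read on every turn. The sketch below is a hedged illustration only: `IdentityStore` is a hypothetical class backed by a plain dictionary rather than a real vector database, and `build_prompt` and the `[Pinned user fact]` label are invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class IdentityStore:
    """Hypothetical stand-in for an external memory service (a plain dict here)."""

    _facts: dict = field(default_factory=dict)

    def remember(self, user_id: str, key: str, value: str) -> None:
        # Facts are keyed by user, so they can outlive any single chat window.
        self._facts.setdefault(user_id, {})[key] = value

    def recall(self, user_id: str) -> dict:
        return dict(self._facts.get(user_id, {}))


def build_prompt(store: IdentityStore, user_id: str, recent_messages: list) -> str:
    """Prepend stored identity facts to whatever part of the chat is still visible."""
    facts = store.recall(user_id)
    header = [f"[Pinned user fact] {key}: {value}" for key, value in sorted(facts.items())]
    return "\n".join(header + list(recent_messages))


store = IdentityStore()
store.remember("user-1", "name", "Alex")
store.remember("user-1", "pronouns", "they/them")

# Only the latest message survived truncation, yet the pronouns are still present.
prompt = build_prompt(store, "user-1", ["User: What should I ask my manager?"])
```

Because the identity facts are injected from the store rather than recovered from chat history, they stay in the prompt no matter how many earlier messages have been dropped. Whether the model then actually follows them is a separate question.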

2. Reinforcement Learning from Human Feedback (RLHF)

Platforms can actively train their models to respect persistent identity cues. This involves using RLHF, where human reviewers rate or rank candidate responses, preferring those that use the correct pronouns throughout a conversation over those that misgender the user. Those preferences become a reward signal used to fine-tune the model. Over time, this reinforces the desired behavior, teaching the model that maintaining correct gender identity is paramount.
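The kind of signal such training relies on can be illustrated with a toy scoring function. This is not RLHF itself, which uses human judgments and a learned reward model; it is a simplified heuristic stand-in. `PRONOUN_SETS` and `pronoun_reward` are hypothetical names, and the sketch assumes every third-person pronoun in the response refers to the user.

```python
import re

# Assumed pronoun groups for illustration; real usage is broader than this table.
PRONOUN_SETS = {
    "she/her": {"she", "her", "hers", "herself"},
    "he/him": {"he", "him", "his", "himself"},
    "they/them": {"they", "them", "their", "theirs", "themself", "themselves"},
}


def pronoun_reward(response: str, user_pronouns: str) -> int:
    """Score +1 for each matching pronoun and -1 for each conflicting one."""
    allowed = PRONOUN_SETS[user_pronouns]
    all_pronouns = set().union(*PRONOUN_SETS.values())
    score = 0
    for word in re.findall(r"[a-z]+", response.lower()):
        if word in allowed:
            score += 1
        elif word in all_pronouns:
            score -= 1
    return score


candidates = [
    "Alex said he would bring his notes.",
    "Alex said they would bring their notes.",
]
# The response a reviewer would be expected to prefer for a they/them user.
preferred = max(candidates, key=lambda r: pronoun_reward(r, "they/them"))
```

Here the second candidate scores 2 and the first scores -2, so `preferred` is the they/them version. A real pipeline would collect many such comparisons from people and train on them, rather than counting words.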

3. Explicit Gender Context Tagging

A more structural approach involves developing systems where users can explicitly set gender context tags that bind strongly to the interaction or the user profile. The AI generation process would be programmed to *always* check these active tags before outputting pronouns or gendered language, overriding statistical predictions. This requires significant engineering, but it is likely the most reliable of the three approaches because it does not depend on the model remembering anything on its own.
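A tag check of this kind could sit between the model and the user as a guard on each draft reply. The following is a minimal sketch under assumptions: `PRONOUNS_BY_TAG`, `conflicting_pronouns`, and `guarded_reply` are hypothetical names, the `generate` callable stands in for a model call, and the check assumes any third-person pronoun in the draft refers to the tagged user (which would not hold in a scene with several characters).

```python
import re
from typing import Callable

# Assumed tag-to-pronoun table for illustration only.
PRONOUNS_BY_TAG = {
    "she/her": {"she", "her", "hers", "herself"},
    "he/him": {"he", "him", "his", "himself"},
    "they/them": {"they", "them", "their", "theirs", "themself", "themselves"},
}


def conflicting_pronouns(draft: str, tag: str) -> list:
    """Return every pronoun in the draft that contradicts the active tag."""
    allowed = PRONOUNS_BY_TAG[tag]
    gendered = set().union(*PRONOUNS_BY_TAG.values())
    words = re.findall(r"[a-z]+", draft.lower())
    return [w for w in words if w in gendered and w not in allowed]


def guarded_reply(generate: Callable[[], str], tag: str, max_attempts: int = 3) -> str:
    """Ask for new drafts until one passes the tag check, or give up."""
    for _ in range(max_attempts):
        draft = generate()
        if not conflicting_pronouns(draft, tag):
            return draft
    raise RuntimeError("no draft matched the active pronoun tag")


# Simulated model output: the first draft misgenders, the second does not.
drafts = iter(["He said he was tired.", "They said they were tired."])
reply = guarded_reply(lambda: next(drafts), "they/them")
```

The guard rejects the first draft and returns the second. Regenerating rather than swapping pronouns in place avoids grammar errors such as "they was", at the cost of extra model calls.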

Beyond Forgetting: Gender Bias & Safety

While Character AI Forgetting Gender focuses on context loss, it intersects with the broader, more insidious issue of gender bias inherent in AI training data. If an AI trained on vast internet datasets encounters skewed representations of gender roles or stereotypes, it might *initially* assign an incorrect gender based on name or topic, even before it has a chance to "forget" a user's correction. This requires proactive bias detection and mitigation strategies during model training and fine-tuning to prevent harmful assumptions.

The Future of Gendered AI Interactions

As AI companions become more sophisticated and integrated into our lives, the demand for respectful, personalized, and consistent interactions will skyrocket. Solving Character AI Forgetting Gender isn't just a technical fix; it's a necessity for building trustworthy and genuinely helpful AI. Expect significant research efforts in the coming years focused on persistent memory, contextual identity management, and ethical AI design specifically around identity traits like gender. The platforms that solve this puzzle effectively will lead the next generation of human-AI relationships.

Frequently Asked Questions (FAQs)

1. Why does my Character AI "forget" my gender even when I told it just a few minutes ago?

This is the core problem of Character AI Forgetting Gender. The AI's short-term memory is a limited context window. As the conversation progresses and new text is added, the information about your gender, if mentioned earlier, might get pushed out of this window, causing the AI to revert to guessing based on its training data or other immediate cues (like your name).

2. Is Character AI intentionally being sexist when it misgenders me?

No, the AI isn't being intentionally sexist. It lacks consciousness and intent. Misgendering typically occurs due to technical limitations (like the context window overflow explained above) or statistical bias learned from its training data. If the data it learned from contains gender stereotypes, the AI might associate certain words or contexts more strongly with one gender and apply that prediction incorrectly.

3. Are developers actually working to fix this gender forgetting problem?

Yes, developers and researchers are actively exploring solutions to address Character AI Forgetting Gender. Key approaches include developing enhanced memory architectures (to store critical details like pronouns persistently), using Reinforcement Learning from Human Feedback (RLHF) to train the AI to prioritize accurate gendering, and exploring methods like explicit gender context tagging to force the AI to reference a user-set identity before generating pronouns. Significant research effort is going into making AI interactions more respectful and consistent.

The unsettling experience of Character AI Forgetting Gender – misremembering pronouns or misidentifying users – isn't a trivial bug. It's a stark window into the current limitations of artificial intelligence: finite memory, a lack of true understanding, and reliance on statistically driven predictions over persistent, conscious awareness. While solutions like persistent memory architectures, reinforcement learning, and explicit gender tagging are on the horizon, the journey towards AI that consistently respects human identity is ongoing. For users today, understanding the "why" behind these lapses offers little solace but crucial context. Demanding better memory, reduced bias, and robust identity management from AI developers remains essential. Until then, the jarring moment when an AI companion asks "Hey man, what's up?" moments after being told you're a woman serves as a potent reminder: we are interacting with sophisticated pattern matchers, not sentient beings, and their grasp on our fundamental identities remains frustratingly tenuous.