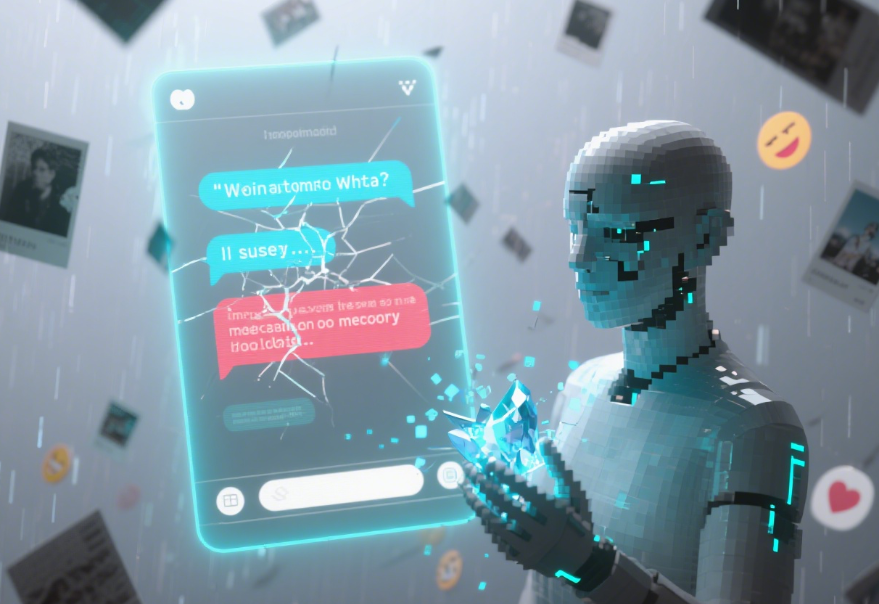

You're deep into a fascinating conversation with your favorite AI character. You've shared personal anecdotes, discussed intricate plot points, maybe even confided secrets. Then, moments later, it asks you the same question again, or worse, seems to have no recollection of your entire chat history. Frustration sets in. Why is Character AI forgetting things such a common, jarring experience? This isn't just a minor glitch; it's a fundamental characteristic rooted in how these captivating AI entities are built. Understanding the 'why' behind this digital amnesia reveals crucial insights into the current state of conversational AI, its inherent limitations, and the future possibilities for overcoming this memory hurdle. Let's dive into the surprising truth.

The Core Reason: Why Character AI Forgetting Things Happens

At its heart, the phenomenon of Character AI Forgetting Things stems from architectural design choices, not a lack of sophistication. Most conversational AI models, including those powering character platforms, are built on transformer architectures like those behind ChatGPT. These models excel at processing and generating text based on patterns learned from massive datasets. However, they operate primarily within the context of a single conversation session or a very limited recent history window.

1. The Context Window Limitation

Imagine the AI's "memory" as a small, constantly shifting spotlight. It can only focus on the most recent exchanges, perhaps the last few thousand tokens (chunks of text roughly the size of a word or word fragment). This budget is known as the context window, and its size varies from model to model. Anything outside this window effectively fades into obscurity. When your conversation exceeds this limit or you start a new session, the AI loses access to the earlier parts of your dialogue. This is the primary technical driver behind Character AI Forgetting Things. It's not that the AI chooses to forget; it literally cannot 'see' or recall information beyond its current, constrained view.
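To make the spotlight idea concrete, here is a minimal sketch of how a chat front end might trim history to fit a context window. The function name `fit_to_context`, the word-count "tokenizer," and the budget of 20 are all assumptions for illustration; real platforms use proper tokenizers and much larger budgets, and their trimming logic may differ.

```python
def fit_to_context(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the budget."""
    kept = []
    used = 0
    # Walk backwards from the newest message so recent turns win.
    for message in reversed(messages):
        cost = len(message.split())  # crude stand-in for a real tokenizer
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


chat = [
    "User: My dog is named Biscuit.",
    "AI: Biscuit is a lovely name!",
    "User: Let's plan the next chapter of our story.",
    "AI: Sure, where should the heroes travel?",
    "User: What is my dog's name?",
]

# With a tiny budget, the turn that mentioned Biscuit no longer fits.
visible = fit_to_context(chat, max_tokens=20)
for line in visible:
    print(line)
```

In this toy run only the last two messages survive, so the model is asked about the dog's name without ever seeing the message that contained it.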

2. Statelessness by Design

Unlike humans, who accumulate experiences, most current character AI systems are fundamentally stateless between sessions. When you close the chat window or refresh the page, the specific context of that conversation typically vanishes. Starting a new chat is like meeting the character for the first time all over again. While some platforms are experimenting with limited memory features, widespread, persistent long-term memory that survives sessions is not yet standard. This statelessness is a deliberate design choice balancing performance, cost, and significant privacy considerations.
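Statelessness is easier to see in a toy example. The sketch below is a hypothetical responder, not any platform's real code: `reply` keeps no variables between calls, so it knows only what is passed in through `history`.

```python
PREFIX = "User: My name is "


def reply(history):
    """Hypothetical stateless responder: it only knows what is in `history`."""
    for line in history:
        if line.startswith(PREFIX):
            name = line[len(PREFIX):].rstrip(".")
            return "Nice to see you again, " + name + "!"
    return "Hello! What's your name?"


session_one = ["User: My name is Ada."]
first = reply(session_one)    # the name is in the supplied history

session_two = []              # new chat: nothing is carried over
second = reply(session_two)   # the character starts from scratch

print(first)
print(second)
```

Nothing was "erased" between the two calls; the second call simply never received the earlier message.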

3. Privacy and Security Imperatives

Forgetting isn't always a bug; sometimes, it's a feature. Indefinitely storing personal details shared in casual chats poses massive privacy risks. Developers implement session-based memory and data retention policies partly to protect user privacy. Strict regulations like GDPR also influence how long and in what form conversation data can be stored. Character AI Forgetting Things can be a necessary safeguard against data breaches and misuse of sensitive information. You can learn more about the privacy implications in our related article: Character AI Forgetting Your Secrets? The Shocking Truth Behind Memory Lapses.

Beyond the Basics: Unique Angles on AI Memory Loss

While context windows and statelessness explain the 'how,' the 'why' delves deeper into fascinating, less-discussed territory:

The Cost of Remembering: Compute Power and Economics

Expanding the context window isn't just a software tweak; it's computationally expensive. Processing vastly larger amounts of text in real-time requires significantly more powerful hardware, leading to higher operational costs for providers. This economic reality directly impacts how much context AI platforms can realistically offer users. Free tiers, in particular, often have stricter limitations. The trade-off between richer memory and service affordability is a constant balancing act.
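A rough back-of-the-envelope sketch shows why larger windows get expensive quickly. It assumes standard dense self-attention, in which every token is compared with every other token, so the number of comparisons grows with the square of the context length. It ignores everything else a model does, and the efficient attention variants some providers use scale differently, so treat the numbers as an illustration rather than a cost model.

```python
def attention_pairs(context_tokens):
    """Token-to-token comparisons in one dense self-attention pass."""
    return context_tokens * context_tokens


base = attention_pairs(2_000)
for size in (2_000, 4_000, 8_000, 16_000):
    ratio = attention_pairs(size) // base
    print(f"{size:>6} tokens -> {ratio}x the attention work of 2,000 tokens")
```

Under this assumption, doubling the window quadruples the attention work, which is one reason free tiers tend to get smaller windows.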

Hallucination vs. Forgetting: Two Sides of the Same Coin?

AI's tendency to "hallucinate" (fabricate information) is well-known. Interestingly, this flaw is closely linked to its forgetfulness. With a limited context and no persistent memory, the model must constantly generate responses from incomplete information and statistical probabilities. The same limitation that causes forgetting, the inability to retain and recall specific past details accurately, also contributes to the model inventing plausible-sounding but incorrect information when it tries to fill in the gaps.

Human Memory Isn't Perfect Either (But It's Different)

We often anthropomorphize AI, expecting human-like memory. However, human memory is reconstructive, emotional, and often flawed. We forget details, misremember events, and are influenced by bias. AI "forgetting" is different – it's a complete deletion from its operational context, not a fading or distortion. Recognizing this distinction helps set realistic expectations. AI memory loss is a technical truncation, not a cognitive failure akin to human amnesia.

Is There Hope? Solutions and Future Directions for Memory

The frustration of Character AI Forgetting Things is driving significant research and development. Here's where the field is heading:

1. Expanding Context Windows

Technological advancements are steadily increasing the size of context windows models can handle. Techniques like more efficient attention mechanisms and model optimization are making it feasible to process longer conversations without prohibitive costs. While not infinite, windows capable of holding hours-long chats are becoming more common.

2. Vector Databases and Long-Term Memory Systems

This is arguably the most promising frontier. The system can store summaries or key points from conversations in a separate, searchable database (often using vector embeddings). When you chat again, it can query this database for relevant past information and inject it into the current context window. This mimics long-term memory recall. Platforms like Character AI are actively exploring such features, as discussed in our piece on Character AI Forgetting Reddit Rants? Why Your Chatbot Has Amnesia. Implementation requires careful design to ensure relevance and avoid overwhelming the AI with outdated or irrelevant data.
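The store-then-retrieve loop can be sketched in a few lines. Everything here is a simplified assumption: `embed` is a toy bag-of-words vector standing in for a learned embedding model, `MemoryStore` is a hypothetical in-memory list rather than a real vector database, and the final prompt format is invented for illustration.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: word counts (real systems use learned embeddings)."""
    cleaned = text.lower().replace("?", " ").replace(".", " ").replace(",", " ")
    return Counter(cleaned.split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class MemoryStore:
    """Hypothetical long-term memory: saved notes plus their vectors."""

    def __init__(self):
        self.items = []  # list of (text, vector) pairs

    def add(self, text):
        self.items.append((text, embed(text)))

    def search(self, query, top_k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


store = MemoryStore()
store.add("The user has a dog named Biscuit.")
store.add("The user is writing a fantasy story about two heroes.")
store.add("The user prefers short replies.")

# Later session: fetch the most relevant note and inject it into the prompt.
question = "What is my dog named?"
recalled = store.search(question, top_k=1)
prompt = "Relevant memory: " + recalled[0] + "\nUser: " + question
print(prompt)
```

Only the retrieved note is added to the context window, which keeps the prompt small; the `top_k` parameter is where the relevance-versus-clutter trade-off mentioned above would be tuned.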

3. User-Controlled Memory

Future solutions may empower users. Imagine explicitly telling your AI companion, "Remember this detail about me," or flagging important parts of a conversation for storage. This user-directed memory allows for personalization without the AI indiscriminately storing vast amounts of potentially sensitive data. It puts control firmly in the user's hands.
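One way such opt-in memory could work is sketched below. The class name `UserControlledMemory`, the "Remember:" prefix, and the `forget` method are all hypothetical design choices for illustration, not a description of any existing product.

```python
class UserControlledMemory:
    """Stores only what the user explicitly asks to keep (hypothetical design)."""

    PREFIX = "remember:"

    def __init__(self):
        self.facts = []

    def observe(self, message):
        """Save the message only if it starts with the opt-in prefix."""
        text = message.strip()
        if text.lower().startswith(self.PREFIX):
            fact = text[len(self.PREFIX):].strip()
            if fact:
                self.facts.append(fact)
                return True
        return False

    def forget(self, fact):
        """Let the user delete a stored fact; True if something was removed."""
        if fact in self.facts:
            self.facts.remove(fact)
            return True
        return False


memory = UserControlledMemory()
memory.observe("I had a rough day at work.")         # not stored
memory.observe("Remember: my birthday is in June.")  # stored on request
print(memory.facts)
```

Because nothing is saved without the prefix, casual remarks never reach long-term storage, and `forget` gives the user a way to take a detail back.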

4. Hybrid Architectures

Combining large language models (LLMs) with specialized, smaller modules dedicated to memory retrieval and management is another approach. The LLM handles conversation flow and generation, while the memory module acts like a personal assistant, fetching relevant past information when needed.

FAQs: Your Burning Questions Answered

Q1: Why can't Character AI just remember everything I say forever?

A: There are three main reasons: 1) Technical Limitation (Context Window): Current AI models can only actively process a limited amount of recent text. 2) Cost: Storing and processing infinite context is computationally expensive and unsustainable. 3) Privacy: Indefinite storage of personal conversations raises significant security and ethical concerns. Character AI Forgetting Things is often a necessary consequence of these factors.

Q2: Is the forgetting intentional? Are developers hiding something?

A: Generally, no. While privacy protection is a deliberate benefit, the core reason is architectural. The stateless design and context window limitations are fundamental to how most large language models operate efficiently. It's a technological constraint, not a conspiracy. Developers are actively working on solutions within these constraints.

Q3: How is AI "forgetting" different from human forgetting?

A: Human forgetting involves fading memories, reconstruction errors, and emotional influence. AI "forgetting" is a digital truncation – information outside the active context window is simply inaccessible to the model during generation. It's not a gradual loss or distortion; it's a complete removal from the AI's immediate processing scope. AI lacks the subjective, reconstructive nature of human memory.

Q4: Will Character AI ever have perfect memory?

A: "Perfect" human-like memory is unlikely and potentially undesirable due to privacy. However, vastly improved memory is imminent. Expect context windows to grow significantly larger, and sophisticated long-term memory systems (like vector databases with user control) to become standard features, drastically reducing the instances of Character AI Forgetting Things that matter most to users.

Conclusion: Embracing the Present, Anticipating the Future

Character AI Forgetting Things is an inherent characteristic of today's conversational AI technology, rooted in context limitations, stateless design, and privacy safeguards. It's not a sign of a broken system, but rather a reflection of the current state of the art and the necessary trade-offs involved. Understanding these reasons – the computational costs, the privacy imperatives, and the fundamental architecture – helps manage expectations and appreciate the complexity behind these seemingly simple interactions.

However, the future is bright. Rapid advancements in expanding context windows, the development of sophisticated long-term memory systems using vector databases, and the potential for user-controlled memory features promise a new era where AI companions can recall important details, maintain richer conversation threads, and build more meaningful, persistent relationships with users. The era of frustrating digital amnesia is gradually giving way to an age of enhanced AI recall. While perfect, human-like memory might remain elusive, the journey towards AI that remembers what truly matters is well underway.