That whirring sound isn't just from your hard drive – it's the future coming to life in workshops, garages, and labs worldwide. Gone are the days when Building Robots was solely the domain of mega-corporations with billion-dollar budgets. Today, driven by accessible hardware and revolutionary Artificial Intelligence (AI), creating intelligent machines is more achievable than ever. Whether you dream of a household helper, an exploring rover, or a solution to a specific challenge, this guide demystifies the journey of Building Robots in the AI age, revealing not just the how, but the transformative *why* behind this exhilarating pursuit.

What Building Robots Really Means Today

Building Robots has undergone a fundamental shift. Historically focused intensely on complex mechanics and intricate control circuits, the process now pivots on seamlessly integrating sophisticated AI. It’s no longer just about constructing a physical shell that moves; it’s about creating an entity capable of sensing, reasoning, learning, and adapting within its environment. Building Robots today means embedding intelligence: the ability to process data from cameras and sensors, make decisions based on that data, potentially learn from experience, and execute tasks with varying degrees of autonomy.

Your Blueprint: The Step-by-Step Journey of Building Robots

While every project is unique, the core process of Building Robots follows these fundamental steps:

1. Define the 'Why' & Scope

What problem should your robot solve? What task will it perform? Be specific (e.g., "A robot to autonomously water my houseplants based on soil moisture," not just "a cool robot"). Defining the goal dictates every subsequent design choice.

2. Conceptual Design & Simulation

Sketch out your robot's form and function. What sensors will it need (cameras, LiDAR, ultrasonic, temperature)? How will it move (wheels, legs, tracks)? Choose actuators (motors, servos) accordingly. Utilize free tools like Tinkercad or Gazebo for basic virtual prototyping to test concepts before cutting metal or printing plastic.

3. Selecting the Nervous System: Hardware Platform

This is the robot's brain and nerves, the computing hardware that everything else connects to:

Microcontroller (MCU): The basic brain (e.g., Arduino, ESP32) – great for simpler tasks with predefined logic.

Single-Board Computer (SBC): The powerhouse brain (e.g., Raspberry Pi, NVIDIA Jetson Nano/Orin) – essential for running complex AI algorithms like computer vision or deep learning. The Jetson platform, for instance, is practically purpose-built for AI in robotics.

AI Focus: For robots requiring significant AI processing (real-time vision, complex decision trees, learning), an SBC, especially one with a capable GPU like the Jetson series, is often non-negotiable.

4. Sensors & Actuators: Inputs and Outputs

Select sensors based on the environmental data your robot needs to perceive (e.g., camera for vision, ultrasonic for distance, IMU for orientation). Choose actuators powerful enough to move the robot as needed. Balance functionality with power consumption and weight.
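
As a small illustration of turning a raw sensor reading into usable data, here is a minimal sketch of the arithmetic behind an ultrasonic distance sensor. It assumes the sensor reports a round-trip echo time in seconds; the function name `echo_to_distance_m` and the fixed speed-of-sound constant are illustrative choices, not part of any particular sensor library.

```python
# Speed of sound in dry air at roughly 20 °C (an assumed constant;
# the real value drifts with temperature and humidity).
SPEED_OF_SOUND_M_S = 343.0


def echo_to_distance_m(echo_seconds: float) -> float:
    """Convert a round-trip echo time into a one-way distance in metres."""
    if echo_seconds < 0:
        raise ValueError("echo time cannot be negative")
    # The pulse travels to the obstacle and back, so halve the path length.
    return SPEED_OF_SOUND_M_S * echo_seconds / 2.0
```

A 10 ms echo, for example, corresponds to an obstacle a little under two metres away.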

5. The AI Engine: Software & Intelligence

This is the *soul* of modern robot building:

Operating System: Linux, most commonly Ubuntu (the primary platform ROS targets), reigns supreme for flexibility and AI support.

Middleware: ROS (Robot Operating System) is the de facto standard, providing libraries, tools, and communication protocols crucial for complex robot development, *especially* when integrating AI modules.

AI Frameworks: Leverage libraries like TensorFlow Lite, PyTorch Mobile, or ONNX Runtime to run pre-trained machine learning models on your robot's SBC. Models can handle image recognition (What object is this?), speech recognition (What did the user say?), navigation path planning (How do I get there?), or anomaly detection.

Programming: Python is the lingua franca for AI integration due to its vast ecosystem (NumPy, SciPy, OpenCV). C++ is critical for performance-intensive tasks.
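
To make the middleware idea above more concrete, here is a toy publish/subscribe bus in plain Python. It is only a sketch of the pattern that middleware such as ROS provides, not the ROS API: the `MessageBus` class, the `"detections"` topic name, and the message contents are all hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable


class MessageBus:
    """A toy in-process publish/subscribe bus (illustrative only, not ROS)."""

    def __init__(self) -> None:
        # Maps a topic name to the list of callbacks subscribed to it.
        self._subscribers = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        """Register a callback to be invoked for every message on a topic."""
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        """Deliver a message to every subscriber of the topic, in order."""
        for callback in list(self._subscribers.get(topic, [])):
            callback(message)


if __name__ == "__main__":
    bus = MessageBus()
    # A hypothetical motor module listens for detections from a vision module.
    bus.subscribe("detections", lambda msg: print("motor module received:", msg))
    bus.publish("detections", {"label": "plant", "confidence": 0.91})
```

The point of the pattern is decoupling: the vision module does not need to know which other modules consume its output, which is what makes it practical to swap AI components in and out.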

6. Power Up: Battery & Management

Choose batteries (LiPo, Li-Ion) with sufficient capacity (mAh) and the right voltage for your motors and SBC. A battery management system (BMS), along with appropriate voltage regulation, is critical for safety and longevity.
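
A rough runtime estimate helps when sizing the battery. The sketch below divides usable capacity by average current draw; the 80% usable fraction is an assumed safety margin rather than a specification, and `estimate_runtime_hours` is a hypothetical helper name.

```python
def estimate_runtime_hours(
    capacity_mah: float,
    avg_current_a: float,
    usable_fraction: float = 0.8,  # assumed margin to avoid deep discharge
) -> float:
    """Estimate runtime in hours from capacity (mAh) and average draw (A)."""
    if capacity_mah <= 0 or avg_current_a <= 0:
        raise ValueError("capacity and current must be positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    capacity_ah = capacity_mah / 1000.0
    return capacity_ah * usable_fraction / avg_current_a
```

For instance, a 5000 mAh pack feeding a robot that averages 2 A would last roughly two hours under these assumptions. Real runtime also depends on peak motor loads, temperature, and battery age.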

7. Integration, Testing, Iteration

Assemble components. Flash firmware onto the MCU/SBC. Write and integrate software modules (e.g., sensor reading, motor control, AI decision logic). Test relentlessly. Expect things to break! Refine mechanics, code, and AI models based on real-world performance. This iterative loop is core to successful robot building.
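
One way to keep integration testable is to structure the software as a sense-decide-act loop whose hardware access is passed in as plain functions. The sketch below uses the plant-watering example from step 1. The thresholds, the function names, and the idea of moisture as a 0-to-1 fraction are all assumptions made for illustration.

```python
from typing import Callable, List


def decide_pump(moisture: float, pump_on: bool,
                low: float = 0.30, high: float = 0.45) -> bool:
    """Hysteresis rule: start below `low`, stop above `high`, else hold state."""
    if moisture < low:
        return True
    if moisture > high:
        return False
    return pump_on


def run_loop(read_moisture: Callable[[], float],
             set_pump: Callable[[bool], None],
             steps: int) -> List[bool]:
    """Run a fixed number of sense-decide-act cycles and return the commands."""
    pump_on = False
    history = []
    for _ in range(steps):
        moisture = read_moisture()                # sense
        pump_on = decide_pump(moisture, pump_on)  # decide
        set_pump(pump_on)                         # act
        history.append(pump_on)
    return history
```

Because the sensor and actuator are injected, the same loop can run against canned readings on a laptop before it ever touches real hardware. The gap between the two thresholds keeps the pump from rapidly toggling when readings hover near a single cutoff.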

AI: The Game Changer in Building Robots

AI has fundamentally reshaped what's possible and practical:

Computer Vision: AI algorithms enable robots to "see" and understand their surroundings: recognizing objects and people, avoiding obstacles, and reading signs. These tasks are impractical to hand-code with traditional rule-based programming alone.

Sensor Fusion & Perception: AI excels at combining data from multiple sensors (camera + LiDAR + IMU) to build a more accurate and robust understanding of the environment.

Adaptive Behavior: Machine Learning (ML), particularly Reinforcement Learning (RL), allows robots to learn optimal behaviors through trial and error in simulated or controlled real-world environments. Think a robot arm learning the most efficient grasp for an unfamiliar object.

Natural Interaction: Large Language Models (LLMs), while computationally heavy, are starting to enable more natural voice control and contextual understanding in human-robot interaction.

Predictive Maintenance: AI models can analyze sensor data (motor vibration, temperature) to predict hardware failures before they occur.
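
The predictive-maintenance idea above can be approximated, as a starting point, with a simple statistical check rather than a trained model. This sketch flags a motor-vibration reading that sits far from a baseline of known-good readings. The z-score threshold of 3 and the function name `is_anomalous` are assumptions, and a production system would likely use a learned model instead.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(baseline: Sequence[float], reading: float,
                 threshold: float = 3.0) -> bool:
    """Return True if `reading` is more than `threshold` standard
    deviations away from the mean of the baseline readings."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline readings")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return reading != mu
    return abs(reading - mu) / sigma > threshold
```

A baseline collected while the robot is known to be healthy gives the check something to compare against; readings that drift well outside that range are a cue to inspect the hardware.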

Building Robots in Action: Real-World AI Case Studies

AI isn't theory; it's making tangible differences:

Logistics Automation: AI-powered mobile robots in warehouses use real-time computer vision and dynamic pathfinding algorithms to navigate unpredictable human traffic and optimize package sorting/delivery, increasing throughput by 30-50% compared to older automated systems (Source: Multiple industry reports, e.g., Interact Analysis).

Precision Agriculture: Robots equipped with multispectral cameras and AI analyze crop health plant-by-plant, enabling targeted pesticide/herbicide application, reducing chemical usage by up to 90% (Source: Research trials, e.g., University projects & startups like FarmWise).

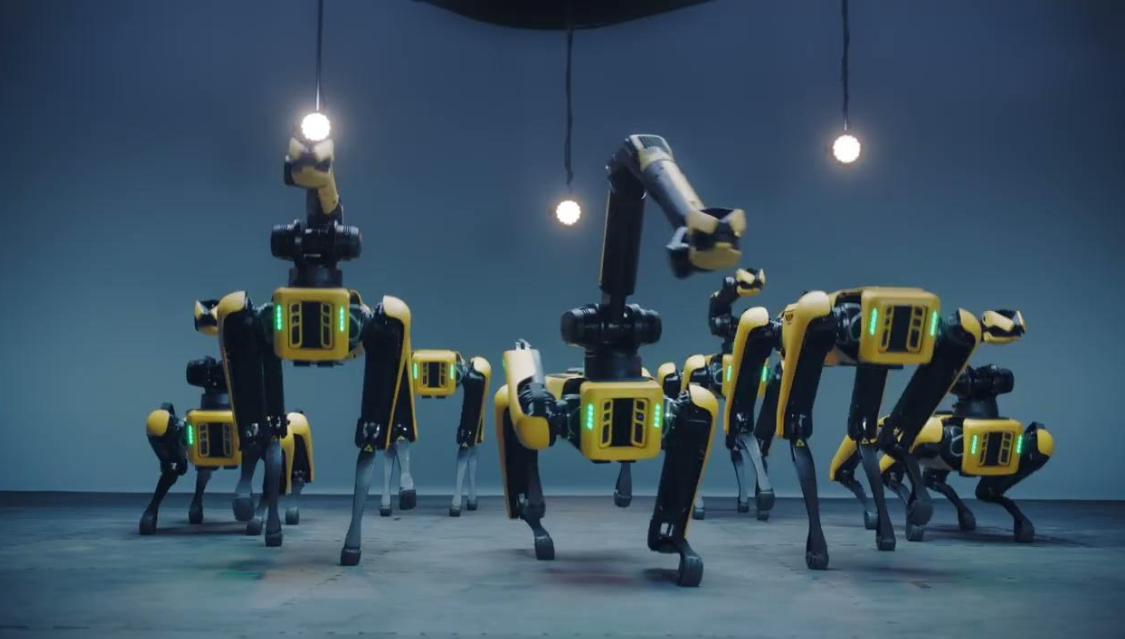

Assistive Robotics: Advanced prosthetics and exoskeletons use AI algorithms, often running on compact embedded systems like the Jetson Orin Nano, to interpret muscle signals (EMG) and sensor data for more intuitive, adaptive movement. In a separate example from mobile robotics, Boston Dynamics' move to AI-powered navigation for Spot is reported to have increased its autonomy in complex environments compared to purely state-machine-driven versions.

The Future (and Ethics) of Building Robots

The trajectory is clear: robots will become more intelligent, capable, and intertwined with AI. Key trends include:

Edge AI Dominance: Processing AI locally on the robot (edge computing) instead of relying solely on the cloud is essential for real-time responsiveness, reliability (works offline), and privacy. Hardware platforms optimized for this (like NVIDIA's Jetson Orin series) are becoming more powerful and accessible.

Multi-Agent Systems & Swarms: AI enabling coordination between multiple robots for collaborative tasks (e.g., disaster response, large-scale construction).

Learning from Less Data: Advancements in few-shot learning and transfer learning making AI-powered robots more adaptable without requiring massive datasets specific to every new task.

The Critical Ethical Dimension

Building Robots with advanced AI compels us to consider profound questions: Who is responsible when an AI-powered robot makes an error? How do we prevent algorithmic bias from being built into robotic decisions? How do we ensure transparency and explainability in increasingly complex AI systems? Addressing these proactively is not optional; it's fundamental to building a responsible robotic future.

Getting Started: Your First Steps in Building Robots

Ready to begin your journey? Here’s how to dive in:

Start Small: Begin with a kit! Robot kits (like TurtleBot3 for ROS, LEGO Mindstorms EV3 (now retired) or SPIKE Prime, and Arduino Starter Kits) provide a structured introduction to hardware, basic sensors/actuators, and programming. Prices range widely, so pick one that fits your budget.

Learn the Fundamentals: Solidify your understanding of electronics, programming (Python is key), and basic mechanics. Online courses (Coursera, edX, Udacity) and communities (ROS Discourse, Arduino Forum, Hackster.io) are invaluable.

Embrace Simulation: Tools like Gazebo, Webots, or NVIDIA Isaac Sim allow you to build and test sophisticated virtual robots, learning complex concepts like sensor simulation and AI model deployment without hardware costs or risks.

Experiment with AI: Utilize cloud-based AI APIs initially (e.g., Google Cloud Vision, Azure AI services, formerly Azure Cognitive Services) for experimentation. Progress to deploying lightweight pre-trained models on a Raspberry Pi or Jetson Nano.

The world of Building Robots is vast, challenging, and incredibly rewarding. With AI as your co-pilot, the potential to create machines that genuinely perceive, learn, and interact with the world is within your grasp. Start building!

Frequently Asked Questions (FAQs) on Building Robots

Q: Do I need a formal engineering education to start building robots?

A: While formal education is beneficial, it's absolutely not a prerequisite! Many successful robot builders are passionate hobbyists and self-learners. Strong dedication, a willingness to learn continuously, and hands-on practice with kits, online tutorials (many on ROS and AI integration), and community resources are the most critical ingredients. Start simple and build your skills progressively.

Q: How much does it cost to build a robot with AI capabilities?

A: Costs vary tremendously. Simple wheeled robots using an Arduino and basic sensors can start under $50. Incorporating an SBC like Raspberry Pi ($35-$75) allows for basic computer vision ($10-$50+ for a decent camera module) using OpenCV. Stepping into dedicated AI hardware like NVIDIA's Jetson Nano (starting around $150) enables significantly more complex models. Full-blown development platforms for advanced robots can cost hundreds to thousands. The key is starting within your budget and scaling up as needed. Simulation is also a very cost-effective way to learn complex AI-robotics principles.

Q: Is ROS necessary for building robots?

A: Not strictly necessary for *all*, but it's highly recommended, especially for complex robots or those heavily relying on AI. ROS provides standardized communication between sensors, actuators, and AI software modules, offers powerful tools for visualization (RViz) and simulation (Gazebo), and boasts a massive ecosystem of pre-existing packages for navigation, perception, manipulation, and integration with frameworks like TensorFlow and PyTorch. While simpler robots can function without it, mastering ROS significantly accelerates development and capability when Building Robots with sophisticated intelligence.

Q: What is the hardest part of building an AI-powered robot?

A: Often, it's the "integration gap." Understanding hardware (sensors, motors), software (firmware, control logic), and AI (model training, deployment) individually is challenging enough. Bridging these worlds seamlessly – getting sensor data efficiently to the AI model, processing it in real-time, and translating the AI's output into meaningful motor commands – is frequently the steepest learning curve. Focusing on good system architecture (leveraging ROS helps immensely here) and starting with well-documented examples are crucial strategies to overcome this.
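
As one concrete piece of that last step, here is a minimal sketch of translating a high-level velocity command into wheel speeds for a two-wheeled differential-drive robot. It assumes the AI or planner outputs a forward speed and a turn rate; the function name and the wheel-base parameter are hypothetical, and a real robot would also need to clamp the results to its motor limits.

```python
from typing import Tuple


def twist_to_wheel_speeds(linear_m_s: float, angular_rad_s: float,
                          wheel_base_m: float) -> Tuple[float, float]:
    """Convert (forward speed, turn rate) into (left, right) wheel speeds.

    Positive angular velocity means a counter-clockwise (left) turn,
    so the right wheel must move faster than the left one.
    """
    if wheel_base_m <= 0:
        raise ValueError("wheel base must be positive")
    half_base = wheel_base_m / 2.0
    left = linear_m_s - angular_rad_s * half_base
    right = linear_m_s + angular_rad_s * half_base
    return left, right
```

Keeping this conversion in its own small function means the decision logic can stay in terms of "go forward" and "turn", while the hardware-specific geometry lives in one place.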