For decades, the image of a fluidly moving, intelligently conversing machine, a true Walking Talking Robot, existed purely within the realm of science fiction. Think C-3PO or Rosie the Robot Maid. Today, however, thanks to astonishing leaps in Artificial Intelligence (AI), robotics, sensor technology, and natural language processing (NLP), this iconic vision is rapidly moving out of research labs and into real-world environments. This convergence isn't just about building machines; it's about creating dynamic, interactive systems capable of navigating our physical world and communicating with us meaningfully. This article examines the technology powering modern Walking Talking Robots, explores their most promising applications, and considers the implications of integrating them into our daily lives.

Demystifying the Modern Walking Talking Robot

A Walking Talking Robot is far more complex than simply strapping a voice assistant onto a mobile base. It integrates two demanding domains of robotics and AI:

1. The "Walking": Mastering Locomotion and Navigation

Legged locomotion, especially bipedal (two-legged) walking, is incredibly challenging. Robots must dynamically balance, adapt to uneven terrain, avoid obstacles, and plan efficient paths. Key technologies enabling this include:

Advanced Actuators & Mechanics: Highly responsive motors (e.g., brushless DC, or BLDC, motors), harmonic drives, and lightweight, strong materials (such as carbon-fiber composites) provide the physical capability.

Sensor Fusion: Integrating data from LiDAR, depth cameras (RGB-D), stereo vision, IMUs (Inertial Measurement Units), and force/torque sensors in the feet gives the robot a real-time estimate of its own body state (proprioception) and of its surroundings (exteroception).

AI-Powered Control Systems: Deep reinforcement learning trains control policies in simulation so that robots can master complex gaits, recover from slips, and move dynamically. Real-time pathfinding algorithms (such as A* and its more advanced variants) use sensory data to chart safe courses. Organizations such as Boston Dynamics (Atlas), Agility Robotics (Digit), and the Toyota Research Institute have driven major advances.
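As a deliberately simple example of sensor fusion, the sketch below estimates a robot's torso pitch by blending an IMU's gyroscope (smooth but prone to drift) with its accelerometer (drift-free but noisy). This is a complementary filter, not the Kalman-filter-style state estimators that full-size humanoids often rely on; the axis convention and the blending weight `alpha` are assumptions chosen for illustration.

```python
import math

def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch angle (rad) implied by the gravity direction the accelerometer measures."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))

def complementary_filter(pitch_prev: float, gyro_rate: float,
                         accel: tuple, dt: float, alpha: float = 0.98) -> float:
    """One fusion step: trust the gyro short-term and the accelerometer long-term.

    pitch_prev: previous pitch estimate (rad)
    gyro_rate:  pitch angular velocity from the gyroscope (rad/s)
    accel:      (ax, ay, az) accelerometer reading (m/s^2)
    alpha:      blending weight (assumed value)
    """
    gyro_pitch = pitch_prev + gyro_rate * dt   # smooth, but errors accumulate
    accel_pitch_now = accel_pitch(*accel)      # drift-free, but noisy while walking
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch_now

# A level, stationary IMU should pull an initially wrong estimate back toward zero.
pitch = 0.1
for _ in range(500):
    pitch = complementary_filter(pitch, gyro_rate=0.0, accel=(0.0, 0.0, 9.81), dt=0.01)
print(f"{pitch:.6f}")
```

A larger `alpha` rejects more accelerometer noise but corrects drift more slowly; tuning that trade-off is exactly the kind of problem more sophisticated estimators handle automatically.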

2. The "Talking": Enabling Meaningful Dialogue

Beyond simple voice commands, true conversational ability requires several AI components working together:

Natural Language Understanding (NLU): Interprets the intent behind spoken words, along with their context and nuance. Transformer-based models (e.g., BERT and GPT-series models) are central to this.

Natural Language Generation (NLG): Crafts contextually appropriate, coherent, and often personalized spoken responses.

Speech Recognition (ASR): Accurately converts spoken language into text for processing, even in noisy environments.

Speech Synthesis (Text-to-Speech, TTS): Converts generated text responses back into natural-sounding, expressive speech using neural network models.

Real-time Processing: Minimizing latency between hearing, understanding, formulating, and speaking a response is vital for natural flow.
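One common way to reduce perceived latency is streaming: the robot starts speaking the first finished sentence while the rest of its reply is still being generated. The back-of-the-envelope model below assumes a single language model handles both understanding and generation, and every stage timing in it is made up purely for illustration.

```python
from dataclasses import dataclass

@dataclass
class StageLatencies:
    """Per-stage delays in milliseconds. The values used below are hypothetical."""
    asr_final_ms: float           # user stops speaking -> final transcript available
    llm_first_sentence_ms: float  # transcript -> first complete sentence of the reply
    llm_full_reply_ms: float      # transcript -> entire reply generated
    tts_first_audio_ms: float     # text handed to TTS -> first audio chunk plays

def time_to_first_audio(lat: StageLatencies, streaming: bool) -> float:
    """Delay the user perceives before the robot starts answering.

    Without streaming, TTS waits for the whole reply; with streaming, TTS
    starts on the first finished sentence while generation continues.
    """
    text_ready_ms = lat.llm_first_sentence_ms if streaming else lat.llm_full_reply_ms
    return lat.asr_final_ms + text_ready_ms + lat.tts_first_audio_ms

lat = StageLatencies(asr_final_ms=300, llm_first_sentence_ms=400,
                     llm_full_reply_ms=1500, tts_first_audio_ms=150)
print(time_to_first_audio(lat, streaming=False))  # 1950
print(time_to_first_audio(lat, streaming=True))   # 850
```

Under these assumed numbers, streaming cuts the silent gap by more than half without making any individual stage faster.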

The real magic lies in seamlessly integrating these locomotion and communication systems. The robot must be able to stop walking to focus on a conversation if needed, gesture appropriately while speaking, understand commands related to movement ("Go to the kitchen and tell John dinner is ready"), or describe its actions or surroundings verbally.
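A toy version of the "Go to the kitchen and tell John dinner is ready" example: split the utterance into clauses, then map each clause to primitive actions. The action names (`navigate`, `find_person`, `say`) and the two regular-expression patterns are hypothetical; a more capable system would use a learned semantic parser or an LLM for this step, but the output, an ordered plan that mixes movement and speech, has the same shape.

```python
import re

# Hypothetical action vocabulary: each pattern maps one clause to primitive actions.
PATTERNS = [
    (re.compile(r"^go to (?:the )?(?P<place>.+)$", re.IGNORECASE),
     lambda m: [("navigate", m["place"])]),
    (re.compile(r"^tell (?P<person>\w+) (?:that )?(?P<msg>.+)$", re.IGNORECASE),
     lambda m: [("find_person", m["person"]), ("say", m["msg"])]),
]

def parse_command(utterance: str) -> list[tuple[str, str]]:
    """Split a compound command on 'and' and translate each clause into actions."""
    plan = []
    for clause in re.split(r"\s+and\s+", utterance.strip().rstrip(".!")):
        for pattern, to_actions in PATTERNS:
            match = pattern.match(clause)
            if match:
                plan.extend(to_actions(match))
                break
        else:
            # No pattern matched: a real robot should ask for clarification here.
            raise ValueError(f"Unrecognized instruction: {clause!r}")
    return plan

print(parse_command("Go to the kitchen and tell John dinner is ready"))
```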

Beyond Novelty: Transformative Applications

The potential uses for sophisticated Walking Talking Robots span numerous sectors, fundamentally changing how services are delivered and interactions occur:

Elder & Special Needs Care

A major application lies in assisted living. A true Walking Talking Robot could:

Physically navigate a home to check on individuals.

Provide medication reminders verbally and physically retrieve medication.

Offer conversation to combat loneliness, adapting its topics based on user interaction.

Detect falls via sensors and initiate communication to assess the situation ("Are you hurt? Can you move?") and immediately alert emergency services or caregivers.

Assist with mobility, offering stable support during walking.

This isn't science fiction. Robots like Toyota's Human Support Robot (HSR), a wheeled mobile manipulator, and ongoing research projects already target these critical functions.
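For the fall-detection function, a classic accelerometer heuristic looks for a brief free-fall dip (total acceleration well below 1 g) followed shortly by an impact spike. The sketch assumes the person wears an accelerometer that streams readings to the robot (vision-based detection is another option), and its thresholds are illustrative assumptions, not validated values. A positive result is what would prompt the robot to approach and ask "Are you hurt?".

```python
import math

G = 9.81  # standard gravity, m/s^2

def detect_fall(samples, rate_hz, free_fall_g=0.4, impact_g=2.5, window_s=1.0):
    """Flag a fall when a free-fall dip is followed by an impact spike within window_s.

    samples: sequence of (ax, ay, az) accelerometer readings in m/s^2.
    Thresholds are illustrative assumptions, not validated values.
    """
    window = int(window_s * rate_hz)
    magnitudes_g = [math.sqrt(ax * ax + ay * ay + az * az) / G for ax, ay, az in samples]
    for i, m in enumerate(magnitudes_g):
        if m < free_fall_g and any(x > impact_g for x in magnitudes_g[i + 1 : i + 1 + window]):
            return True
    return False

rate = 50  # Hz
standing = [(0.0, 0.0, 9.81)] * rate
falling = [(0.0, 0.0, 1.0)] * 10   # ~0.1 g: body briefly in free fall
impact = [(0.0, 0.0, 30.0)] * 2    # ~3 g: hitting the floor
print(detect_fall(standing + falling + impact + standing, rate))  # True
print(detect_fall(standing * 2, rate))                            # False
```

Requiring both phases, rather than a single spike, helps avoid false alarms from a dropped object or someone sitting down hard.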

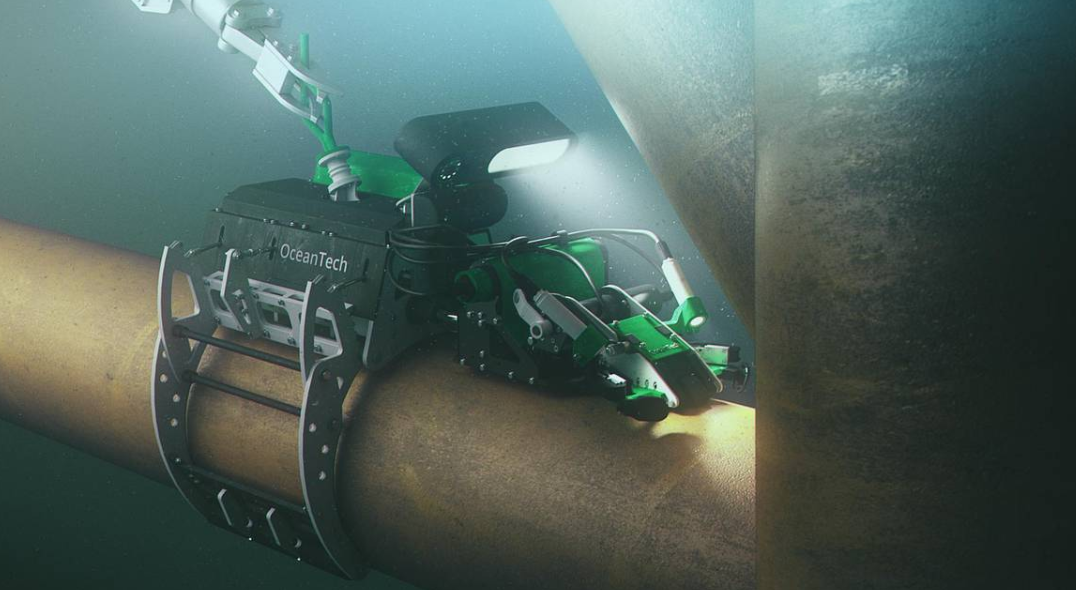

Hazardous Environments & Emergency Response

Deploying robots into dangerous situations significantly enhances human safety:

Disaster Zones: A Walking Talking Robot could navigate debris after earthquakes or floods, locate survivors, relay their status and location to rescue teams through two-way communication ("I'm trapped under rubble, my leg is injured"), and deliver small emergency supplies. Research groups such as MIT's CSAIL, along with DARPA-funded efforts such as the DARPA Robotics Challenge, have explored this kind of disaster-response capability.

Chemical/Nuclear Sites: Performing inspections or maintenance, verbally reporting findings directly to operators outside the danger zone.

Explosive Ordnance Disposal (EOD): Providing situational awareness and communication capabilities that could go beyond those of current wheeled or tracked platforms.

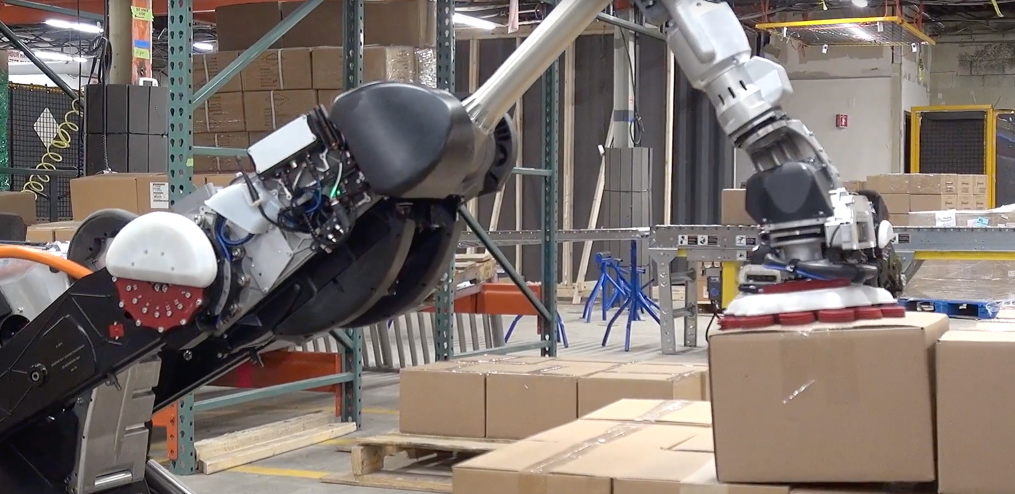

Logistics & Warehouse Management

Beyond simple automated guided vehicles (AGVs), robots that combine mobility, manipulation, and communication could excel. Imagine a robot that can:

Navigate dynamic warehouse floors shared with humans.

Verbally coordinate tasks or alert human workers ("Approaching aisle 7 with a heavy load").

Respond to spoken inquiries about inventory locations ("Where are the spare parts for Model X?").

Agility Robotics' Digit is a prime example targeting this space.

The Future of Interactive Companionship

Beyond pure function, the social and interactive aspect is evolving rapidly. AI-driven companions capable of physical movement introduce a new dimension to human-robot relationships. They can greet you at the door, follow you to another room to continue a conversation, or express non-verbal cues through movement alongside speech.

The Cutting Edge and Unique Perspectives

Here's where our exploration goes deeper than standard overviews:

The Proprioceptive Communication Challenge

One fascinating, rarely discussed frontier is enabling robots to *verbally describe their own physical state and sensations using sensor data in real-time.* Imagine a robot on a rescue mission saying: "I'm detecting instability in the floor structure ahead, recommend caution," or a companion robot stating, "My battery level is low, I need to recharge soon." This requires translating complex sensor telemetry (motor currents, joint angles, battery voltage, thermal readings) into concise, meaningful natural language – a significant NLP challenge.
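A common first step toward this is template-based generation: reduce raw telemetry to a few discrete facts, then phrase each one. The sketch below does exactly that; the `Telemetry` fields, joint names, and thresholds are hypothetical, and an LLM could later replace the fixed templates with more natural phrasing while still being fed the same extracted facts.

```python
from dataclasses import dataclass

@dataclass
class Telemetry:
    battery_pct: float
    motor_temps_c: dict[str, float]   # hypothetical joint name -> motor temperature
    foot_slip_detected: bool

# Hypothetical thresholds, chosen only for illustration.
LOW_BATTERY_PCT = 20.0
MOTOR_HOT_C = 70.0

def describe_state(t: Telemetry) -> list[str]:
    """Turn raw telemetry into short status sentences, most urgent first."""
    messages = []
    if t.foot_slip_detected:
        messages.append("I'm detecting slippage underfoot, so I'm slowing down.")
    hot = sorted(name for name, temp in t.motor_temps_c.items() if temp >= MOTOR_HOT_C)
    if hot:
        joints = " and ".join(j.replace("_", " ") for j in hot)
        messages.append(f"My {joints} motor{'s are' if len(hot) > 1 else ' is'} running hot.")
    if t.battery_pct < LOW_BATTERY_PCT:
        messages.append(f"My battery is at {t.battery_pct:.0f} percent, so I need to recharge soon.")
    return messages

print(describe_state(Telemetry(15.0, {"left_knee": 75.0, "right_knee": 40.0}, False)))
```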

Learning Social Navigation Through Observation

Beyond just avoiding obstacles, robots navigating human spaces must understand social norms. Leading research involves using AI to teach robots these rules by observing human crowds. How close is too close? When to yield? How to move politely? Training robots through massive datasets of human movement videos allows them to learn implicit social navigation protocols crucial for a socially intelligent Walking Talking Robot.
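Learned social-navigation models are too large to show here, but a simple hand-designed alternative conveys the underlying idea: add a cost for passing through people's personal space, then prefer paths that keep a polite distance. The Gaussian penalty below is a stand-in for what a learned model would provide, not the learned approach itself; `sigma_m` and `weight` are assumed values, not figures derived from any dataset.

```python
import math

def social_cost(point, people, sigma_m=0.6, weight=5.0):
    """Penalty for standing at `point`: a Gaussian 'personal space' bump around each person."""
    px, py = point
    return sum(
        weight * math.exp(-((px - hx) ** 2 + (py - hy) ** 2) / (2 * sigma_m ** 2))
        for hx, hy in people
    )

def path_cost(path, people):
    """Geometric path length plus the social penalty accumulated at each waypoint."""
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    return length + sum(social_cost(p, people) for p in path)

person = [(1.0, 0.0)]
straight = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]   # walks right through the person
detour = [(0.0, 0.0), (1.0, 1.5), (2.0, 0.0)]     # longer, but keeps its distance
print(path_cost(straight, person), path_cost(detour, person))
```

With these weights the detour wins even though it is about 80% longer, which is the behavior a socially aware planner should show.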

The "Explainable Action" Imperative

As these robots undertake complex autonomous tasks, a unique requirement arises: the ability to explain their own actions verbally. If a robot suddenly changes direction, a person nearby might ask, "Why did you stop?" The robot needs to instantly generate a truthful explanation based on its internal state ("I detected a small object on the path I couldn't identify," or "My obstacle avoidance sensor triggered a safety stop"). This blend of real-time plan introspection and natural language generation is critical for trust and transparency.
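One way to keep explanations truthful is to have the planner log a machine-readable cause at the moment it acts, and to build answers only from that log rather than generating a plausible story after the fact. The trigger codes, phrasings, and class names below are hypothetical.

```python
import time
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Decision:
    action: str       # e.g. "stop", "reroute"
    cause: str        # machine-readable trigger recorded by the planner
    timestamp: float

# Hypothetical mapping from internal trigger codes to spoken explanations.
REASONS = {
    "unknown_object": "I detected a small object on the path that I couldn't identify.",
    "safety_stop": "My obstacle-avoidance sensor triggered a safety stop.",
    "person_crossing": "Someone was crossing in front of me, so I yielded.",
}

@dataclass
class DecisionLog:
    entries: list = field(default_factory=list)

    def record(self, action: str, cause: str, timestamp: Optional[float] = None) -> None:
        """Called by the planner at the moment it changes behavior."""
        self.entries.append(Decision(action, cause, time.time() if timestamp is None else timestamp))

    def explain(self, action: str) -> str:
        """Answer 'Why did you <action>?' from the most recent matching log entry."""
        for d in reversed(self.entries):
            if d.action == action:
                # Fall back to the raw cause code rather than inventing a reason.
                return REASONS.get(d.cause, f"My planner recorded the reason '{d.cause}'.")
        return f"I don't have a record of a recent {action}."

log = DecisionLog()
log.record("stop", "unknown_object", timestamp=1.0)
log.record("reroute", "person_crossing", timestamp=2.0)
log.record("stop", "safety_stop", timestamp=3.0)
print(log.explain("stop"))  # uses the latest stop, i.e. the safety stop
```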

Ethical & Societal Considerations

The advent of sophisticated Walking Talking Robots brings complex questions:

Privacy: Because these robots are equipped with sensors and cameras, data security and user privacy become paramount. Clear regulations are needed.

Job Displacement: Roles in logistics, security, and potentially care sectors might evolve or be displaced, requiring workforce adaptation.

Emotional Dependency & Deception: Highly empathetic AI could foster dependency, especially in vulnerable populations. How human-like should their responses be? Is it ethical for a robot to simulate empathy it doesn't "feel"?

Safety & Control: Rigorous safety protocols, physical safeguards (limiting force/speed), and secure communication channels are essential to prevent accidents or malicious use.

Accessibility & Equity: Ensuring these potentially expensive technologies benefit a broad population, not just the privileged, is crucial.
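As a sketch of the "limiting force/speed" safeguard under Safety & Control, a robot can cap its walking speed according to how close the nearest person is: stop inside a minimum distance and ramp back up to full speed as people move away. This is loosely modeled on the speed-and-separation-monitoring idea used for industrial collaborative robots; every parameter value is an illustrative assumption, not a certified safety limit.

```python
def allowed_speed(distances_m, v_max=1.5, stop_dist_m=0.5, slow_dist_m=2.0):
    """Maximum walking speed (m/s) given distances (m) to nearby people.

    Stops inside stop_dist_m and ramps linearly up to v_max at slow_dist_m.
    All parameter values are illustrative assumptions, not certified limits.
    """
    nearest = min(distances_m, default=float("inf"))  # nobody nearby -> full speed
    if nearest <= stop_dist_m:
        return 0.0
    if nearest >= slow_dist_m:
        return v_max
    return v_max * (nearest - stop_dist_m) / (slow_dist_m - stop_dist_m)

print(allowed_speed([3.0, 1.25]))  # 0.75
```

A linear ramp is the simplest choice; a real system would derive the distances from the robot's stopping distance and sensor latency.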

The Trajectory: Where Next for Walking Talking Robots?

The field is advancing at a breakneck pace. Key future developments include:

Enhanced Dexterity & Manipulation: Combining locomotion and communication with fine motor skills to perform complex tasks while interacting (e.g., assisting with cooking while explaining steps).

Long-term Autonomy: Robots operating reliably for extended periods with minimal human intervention, managing self-maintenance like recharging.

Truly Multi-Party Interaction: Robots seamlessly engaging in natural conversations with multiple people simultaneously, understanding group dynamics.

Personalization & Continual Learning: Robots adapting their communication style, movement patterns, and knowledge base deeply to individual users over time.

Cost Reduction & Commercialization: Making this technology viable for wider deployment beyond research labs and niche applications.
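The self-recharging behavior mentioned under Long-term Autonomy often reduces to a simple energy check: head back to the dock once the remaining charge barely covers the trip plus a reserve. The sketch below assumes a constant energy cost per meter and a hypothetical safety `margin`; real robots would estimate both from their own consumption history.

```python
def should_return_to_dock(battery_wh, dist_to_dock_m, wh_per_m, reserve_wh, margin=1.25):
    """Decide whether to head back to the charger now.

    battery_wh:     remaining energy (watt-hours)
    dist_to_dock_m: planned path length back to the dock (meters)
    wh_per_m:       average energy cost of walking one meter (assumed constant)
    reserve_wh:     energy that must remain on arrival, e.g. for docking
    margin:         multiplier covering detours and rough terrain (assumed)
    """
    trip_wh = dist_to_dock_m * wh_per_m * margin
    return battery_wh <= trip_wh + reserve_wh

print(should_return_to_dock(100, 200, 0.1, reserve_wh=50))  # False: needs about 75 Wh
print(should_return_to_dock(70, 200, 0.1, reserve_wh=50))   # True
```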

We are standing at the threshold of a new era where sophisticated physical AI agents become integrated parts of our work, homes, and society.

Frequently Asked Questions (FAQs)

Can today's Walking Talking Robots walk completely autonomously in complex environments?

While significant progress has been made (e.g., robots navigating labs, controlled warehouses, or known homes), true autonomy in completely novel, cluttered, and unpredictable real-world environments remains a major challenge. Current robots often rely heavily on pre-mapped environments or operate within specific constrained parameters. Researchers are making strides with AI trained on vast datasets of simulated terrains, but real-world adaptation still requires careful monitoring for safety. Advancements in dynamic obstacle detection, real-time path replanning, and AI models that can predict terrain stability from sensory input are key to overcoming this hurdle.
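Real-time path replanning can be as simple as rerunning a search such as A* whenever the map changes. The minimal sketch below assumes perception has already been reduced to a 2-D occupancy grid of free (`.`) and blocked (`#`) cells with uniform step costs; real legged planners also reason about footholds, slopes, and moving obstacles.

```python
import heapq
from typing import Optional

def astar(grid: list, start: tuple, goal: tuple) -> Optional[list]:
    """Shortest 4-connected path on an occupancy grid; '#' cells are obstacles."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: tuple) -> int:
        # Manhattan distance never overestimates unit-cost 4-connected moves,
        # so A* with this heuristic returns an optimal path.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}

    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:            # follow parent links back to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue                           # stale entry; a cheaper route was found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < best_g.get(nxt, float("inf")):
                    best_g[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_g + heuristic(nxt), new_g, nxt))
    return None  # goal unreachable

floor = [
    "....",
    ".##.",
    "....",
]
route = astar(floor, (0, 0), (2, 3))
# A person steps into the top corridor: update the map and replan.
floor[0] = "..#."
new_route = astar(floor, (0, 0), (2, 3))
print(route)
print(new_route)
```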

How sophisticated is the conversation capability of current Walking Talking Robots?

The sophistication varies greatly. Demo units often showcase voice interfaces powered by advanced cloud-based Large Language Models (LLMs), enabling surprisingly fluent and contextually relevant conversation for specific tasks or within predefined domains. However, integrating this smoothly with real-time physical actions remains complex. Truly understanding context that includes *both* conversation history *and* the current physical situation (e.g., "Grab the *red* cup *next to you*") is still an active area of research. Challenges include managing cognitive load (simultaneously navigating and conversing), dealing with real-world ambiguity, background noise, and generating truly embodied communication (gestures aligned with speech). Many existing robots primarily handle scripted responses or simple command/response cycles during complex locomotion tasks.
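To illustrate what "understanding the physical situation" involves, here is a minimal grounding step for "Grab the red cup next to you". It assumes a perception module already outputs labeled, colored objects in the robot's frame (the `DetectedObject` type is hypothetical) and interprets "next to you" simply as "closest to the robot"; real systems must handle far vaguer spatial language.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass
class DetectedObject:
    """Hypothetical perception output: a labeled object in the robot's frame."""
    label: str
    color: str
    x: float  # meters ahead of the robot
    y: float  # meters to the robot's left

def resolve_reference(objects, label, color=None, robot_xy=(0.0, 0.0)) -> Optional[DetectedObject]:
    """Ground a phrase like "the red cup next to you": filter by the spoken
    attributes, then treat "next to you" as "closest to the robot"."""
    candidates = [o for o in objects
                  if o.label == label and (color is None or o.color == color)]
    if not candidates:
        return None  # nothing matches; the robot should ask a clarifying question
    return min(candidates, key=lambda o: math.dist((o.x, o.y), robot_xy))

scene = [
    DetectedObject("cup", "red", 2.0, 0.0),
    DetectedObject("cup", "blue", 0.5, 0.0),
    DetectedObject("cup", "red", 0.6, 0.2),
    DetectedObject("plate", "red", 0.1, 0.0),
]
print(resolve_reference(scene, "cup", color="red"))
```

Note that dropping the word "red" changes the answer to the nearer blue cup, which is why both the conversational and the physical context matter.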

When can we expect Walking Talking Robots to be commonly available?

Widespread availability comparable to smartphones is likely still a decade or more away, primarily because of technology maturity and cost. You'll see these robots deployed in specific niches much sooner. Look for early adoption in:

Industrial Settings: Warehouses and logistics centers within controlled environments (e.g., Agility Robotics' Digit pilot programs).

High-End Healthcare/Assisted Living: Selected hospitals or premium care facilities offering advanced support services.

Specialized Security/Inspection Roles: Patrolling secure facilities or hazardous sites.

Rapid progress is being made, but achieving safety certification, ensuring reliability in diverse environments, and significantly reducing production costs (especially for bipedal platforms) are critical steps before these robots become mainstream household appliances or companions. Companion-focused models with less advanced mobility might appear in homes earlier than fully fledged bipedal Walking Talking Robots.