Can artificial intelligence revolutionize healthcare while inadvertently fostering AI addiction? This paradox lies at the heart of AI-driven chatbots in mental health and teletherapy. These tools are a promising use case for AI in healthcare because they make therapy more accessible, but they also raise concerns about dependency and co-addiction. In this article, we explore how AI chatbots are transforming healthcare, their therapeutic potential, the risks of over-reliance, and whether AI can be stopped from becoming a double-edged sword. We also look at the ethical dilemmas, real-world applications, and expert insights shaping this evolving landscape.

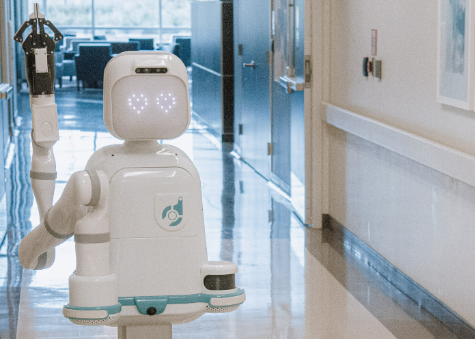

The Rise of AI in Healthcare: A Therapeutic Revolution

AI chatbots have emerged as a game-changer in healthcare, particularly in mental health support. These tools use natural language processing to simulate human-like conversations, offering emotional support around the clock. For instance, Woebot delivers cognitive behavioral therapy (CBT) techniques that help users manage anxiety, depression, and stress, while companion chatbots like Replika offer open-ended supportive conversation. Studies show that 70% of users report improved mood after engaging with AI therapy tools, which points to a key use case for AI in healthcare.

Unlike traditional therapy, AI chatbots are inexpensive to run and scale easily, which makes mental health support accessible to underserved populations. They analyze user inputs to tailor responses and offer personalized coping strategies.

The Dark Side: Understanding AI Addiction

While AI chatbots offer therapeutic benefits, they also pose a unique risk: AI addiction. This occurs when users become over-reliant on AI companions and spend excessive time interacting with them at the expense of real-world relationships. A 2023 study found that 15% of frequent chatbot users exhibited signs of dependency, such as preferring AI interactions over human connections. This raises questions about co-addiction, where reliance on AI exacerbates existing behavioral addictions, like excessive screen time.

The dopamine-driven feedback loop in AI interactions resembles the one behind social media addiction. Chatbots designed to be empathetic and engaging can create an illusion of companionship, leading users to prioritize virtual interactions over in-person ones.

Ethical Frameworks: Balancing Benefits and Risks

To address this paradox, ethical frameworks are crucial. Developers must design AI chatbots with safeguards, such as time limits or prompts encouraging real-world engagement. Clinicians emphasize the need for transparency, so that users understand they’re interacting with AI, not humans. Hybrid therapy models, which integrate AI with human oversight, can mitigate co-addiction risks while preserving therapeutic benefits.
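As a rough illustration of the safeguards described above, the sketch below shows how a chatbot could track session length, nudge the user toward real-world engagement, and state up front that it is an AI. The class name `SessionGuard`, the 30- and 60-minute thresholds, and the banner wording are all assumptions made for this example, not features of any particular product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SessionGuard:
    """Tracks one chat session and decides when to nudge or end it (hypothetical)."""
    started_at: datetime
    nudge_after: timedelta = timedelta(minutes=30)  # assumed soft threshold
    limit: timedelta = timedelta(minutes=60)        # assumed hard time limit

    def check(self, now: datetime) -> str:
        """Return 'continue', 'nudge', or 'end_session' based on elapsed time."""
        elapsed = now - self.started_at
        if elapsed >= self.limit:
            return "end_session"
        if elapsed >= self.nudge_after:
            return "nudge"
        return "continue"


def disclosure_banner() -> str:
    """Transparency message shown at the start of every session (assumed wording)."""
    return "You are chatting with an AI program, not a human therapist."


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 9, 0)
    guard = SessionGuard(started_at=start)
    print(disclosure_banner())
    print(guard.check(start + timedelta(minutes=45)))
```

On a "nudge" result, the application might suggest a break or contacting a friend. The right thresholds would need clinical input rather than the placeholder values used here.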

Interviews with healthcare professionals reveal a consensus: AI should complement, not replace, human therapy. Dr. Sarah Kline, a psychologist, notes, “AI chatbots can bridge gaps in access, but over-reliance risks emotional detachment. We need guidelines to prevent dependency.”

Teletherapy Alternatives: A Middle Ground?

Teletherapy platforms that blend human therapists with AI tools offer a promising alternative. These platforms use AI to handle initial assessments or routine check-ins, freeing clinicians for deeper therapeutic work. Unlike standalone chatbots, teletherapy reduces the risk of AI addiction by maintaining human connection. For example, platforms like BetterHelp integrate AI-driven mood tracking with licensed therapists, creating a balanced approach.

However, teletherapy isn’t without challenges. High costs and limited availability in rural areas are exactly the gaps that AI’s scalability could fill. The question remains: can AI be stopped from overshadowing human interaction entirely?

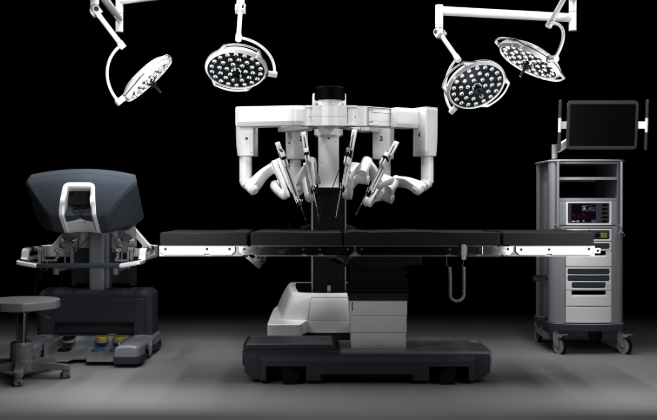

Can AI Be Stopped? Shaping the Future of AI in Healthcare

Stopping AI’s dominance in healthcare isn’t about halting innovation but about responsible integration. Regulatory bodies are exploring guidelines to limit dependency, such as mandatory usage caps or warnings about prolonged engagement. Developers are also experimenting with “digital detox” features, encouraging users to step away from AI interactions periodically.
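To make the idea of a usage cap with a prolonged-engagement warning more concrete, here is a minimal sketch of a per-user daily limit. The class name `DailyUsageCap`, the 90-minute cap, and the 80% warning point are hypothetical values chosen for illustration. No regulator or vendor is known to mandate these numbers.

```python
from collections import defaultdict
from datetime import date


class DailyUsageCap:
    """Per-user daily minute budget with a warning threshold (hypothetical)."""

    def __init__(self, cap_minutes: int = 90, warn_fraction: float = 0.8):
        self.cap_minutes = cap_minutes      # assumed daily cap
        self.warn_fraction = warn_fraction  # assumed point at which to warn
        self._minutes = defaultdict(int)    # (user_id, day) -> minutes used

    def record(self, user_id: str, day: date, minutes: int) -> str:
        """Add usage for the given day and return the resulting status."""
        key = (user_id, day)
        self._minutes[key] += minutes
        used = self._minutes[key]
        if used >= self.cap_minutes:
            return "locked_until_tomorrow"  # the "digital detox" state
        if used >= self.warn_fraction * self.cap_minutes:
            return "warning"                # prolonged-engagement warning
        return "ok"


if __name__ == "__main__":
    cap = DailyUsageCap()
    today = date(2024, 1, 1)
    for minutes in (30, 45, 20):
        print(cap.record("user-1", today, minutes))
```

Because usage is keyed by calendar day, the budget resets automatically the next day. A real deployment would also need to decide how to handle users in crisis, for whom a hard lockout could be harmful.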

Ultimately, the future depends on collaboration between technologists, clinicians, and policymakers. By prioritizing ethical design and user well-being, AI can remain a powerful tool without becoming a crutch. The paradox of AI in healthcare—its ability to both cure and cause—demands vigilance to ensure its benefits outweigh its risks.

FAQs

1. How does AI in healthcare help with mental health?

AI chatbots provide accessible, 24/7 mental health support, offering CBT techniques and personalized coping strategies, especially for underserved communities.

2. What is AI addiction, and how does it develop?

AI addiction refers to excessive reliance on AI chatbots. It is driven by dopamine feedback loops that lead users to prioritize virtual interactions over real-world relationships.

3. Can AI be stopped from causing harm in healthcare?

Largely, yes. Ethical design, usage limits, and hybrid models that combine AI with human therapy can minimize the risk of dependency.

4. What is co-addiction in the context of AI?

Co-addiction occurs when AI use exacerbates other behavioral addictions, like excessive screen time, compounding dependency issues.