Imagine a world where Beethoven's symphonies are flawlessly performed by mechanical hands and jazz improvisations emerge from algorithmic minds. This isn't science fiction—it's the reality of Instrumental Robot Music, where AI-driven machines physically play instruments with human-like precision and creative intuition. This seismic shift in musical expression blends engineering prowess with artistic soul, challenging everything we thought we knew about human exclusivity in music creation. Discover how circuits are composing concertos and why this revolution demands your attention now.

Key Insight: Unlike digital music generators, Instrumental Robot Music creates authentic acoustic experiences through physical interaction with instruments—producing soundwaves that resonate exactly like human-played music.

What Is Instrumental Robot Music? Beyond Gimmicks

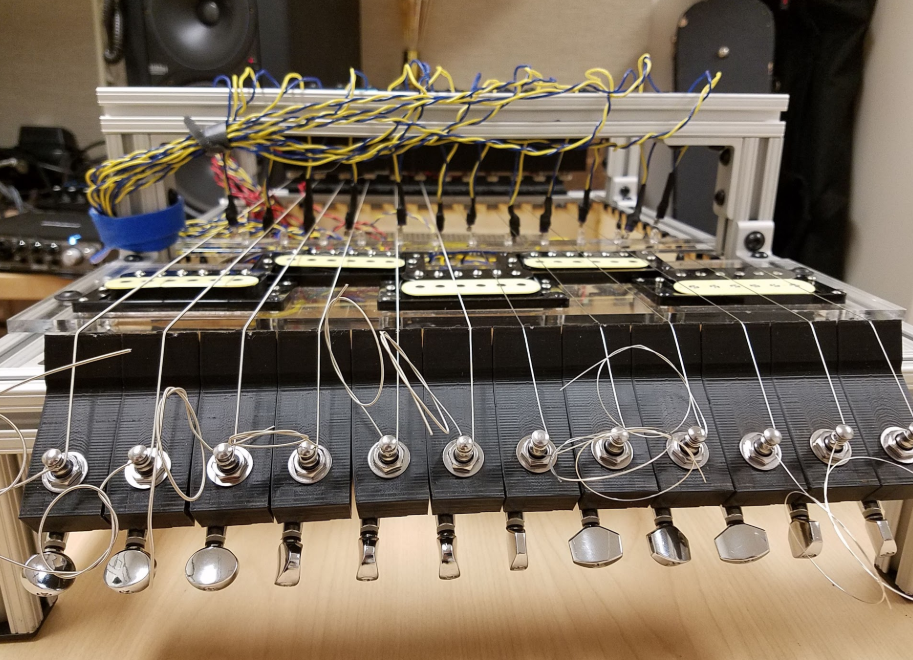

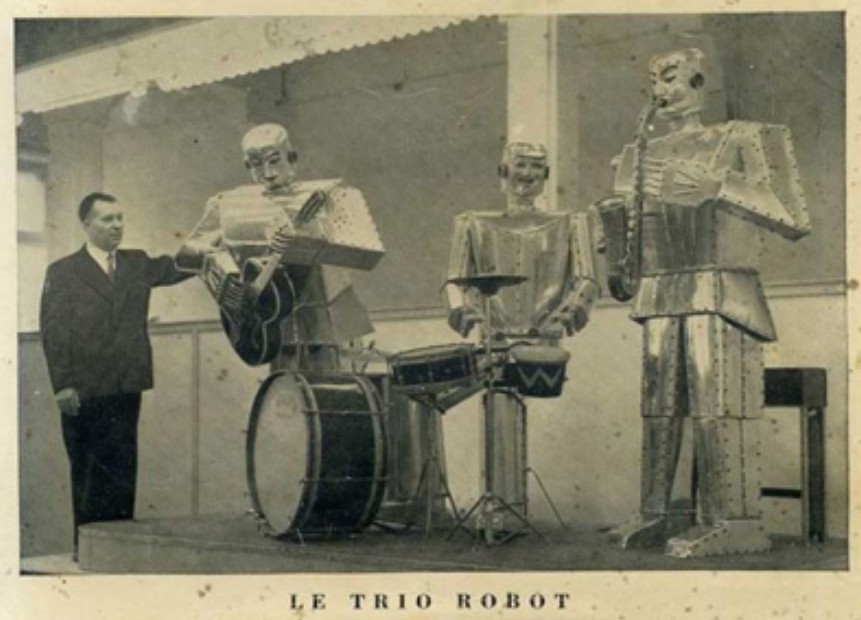

Unlike algorithm-generated playlists or digital synthesizers, Instrumental Robot Music involves physical machines interacting with traditional instruments. These robots use pneumatic arms, servo motors, or specialized actuators to strum guitars, strike piano keys, or bow violins, producing acoustic sound waves through vibration, resonance, and airflow. This tangibility creates an authentic acoustic experience impossible to replicate purely through digital means.

Stanford's Kobito drumming robot exemplifies this: its fluid wrist movements mimic human musculoskeletal dynamics. Similarly, the Trümmer Flügel piano-playing robot employs weighted "fingers" that adapt pressure based on musical context.

Unlike playback systems, these robots respond to real-time environmental feedback like acoustics and instrument condition. This merges AI's computational power with the physical nuance lost in digital audio workstations.

The Mechanics Behind the Magic: How Robots "Feel" Instruments

Haptic Feedback Systems: The Robotic "Touch"

Advanced torque sensors and machine learning enable robots to calibrate finger pressure on strings or keys. Georgia Tech's Shimon robot adjusts its marimba mallet force dynamically: it strikes notes with pianissimo (pp) delicacy during somber passages and shifts to forceful fortissimo (ff) strikes in climactic sections.

This haptic sensitivity prevents snapped guitar strings during energetic solos. Robots learn these pressure thresholds by combining sensor-array readings with reinforcement learning.
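As a rough illustration of the pressure logic described above, the sketch below maps dynamic markings to a strike force and clamps it to a per-instrument safety limit. The force fractions, limit values, and the `update_safe_limit` back-off rule are hypothetical; the rule is a simple adaptive heuristic standing in for the reinforcement learning the text mentions, not a description of Shimon's actual controller.

```python
# Hypothetical calibration: fraction of the actuator's maximum force per dynamic marking.
DYNAMIC_LEVELS = {"pp": 0.15, "p": 0.3, "mp": 0.45, "mf": 0.6, "f": 0.8, "ff": 1.0}


def strike_force(dynamic: str, max_force_n: float, safe_limit_n: float) -> float:
    """Return the strike force in newtons for a dynamic marking, clamped to a safety limit."""
    if dynamic not in DYNAMIC_LEVELS:
        raise ValueError(f"unknown dynamic marking: {dynamic!r}")
    requested = DYNAMIC_LEVELS[dynamic] * max_force_n
    # Never exceed the calibrated threshold that protects strings, heads, or keys.
    return min(requested, safe_limit_n)


def update_safe_limit(limit_n: float, overstress_detected: bool,
                      shrink: float = 0.9, grow: float = 1.01,
                      ceiling_n: float = 25.0) -> float:
    """Toy adaptive rule (not reinforcement learning): back off after a sensor
    reports overstress, otherwise probe slightly higher, never past a hard ceiling."""
    if overstress_detected:
        return limit_n * shrink
    return min(limit_n * grow, ceiling_n)
```

A fortissimo request on a 20 N actuator with a 15 N limit is capped at 15 N, so climactic passages stay loud without exceeding what the instrument tolerates.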

Acoustic Intelligence: Robots That Listen

Microphones integrated into robotic systems analyze timbre, sustain, and resonance. If a violin's E string sounds thin, the robot adjusts its bowing angle. These systems form a closed feedback loop in which auditory data continuously refines mechanical actions.

At McGill University, MUSICA robots demonstrated a 30% improvement in tonal richness using this technique.
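The closed loop can be sketched in two steps: estimate brightness from a short audio frame (here via the spectral centroid), then nudge a mechanical parameter against the error. This assumes the spectral centroid is a usable proxy for a "thin" tone and that bow angle responds roughly linearly; the gain, sign convention, and limits are hypothetical, and this is not the MUSICA implementation.

```python
import cmath
import math


def spectral_centroid(samples, sample_rate):
    """Return the magnitude-weighted mean frequency (Hz) of one audio frame.

    Uses a naive O(n^2) DFT so the sketch needs only the standard library;
    a real system would use an FFT (e.g. numpy.fft.rfft).
    """
    n = len(samples)
    weighted_sum = 0.0
    magnitude_sum = 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        bin_value = sum(
            samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        magnitude = abs(bin_value)
        weighted_sum += (k * sample_rate / n) * magnitude
        magnitude_sum += magnitude
    return weighted_sum / magnitude_sum if magnitude_sum else 0.0


def adjust_bow_angle(angle_deg, centroid_hz, target_hz, gain=0.002, limits=(-10.0, 10.0)):
    """One proportional-control step: move the bow angle against the timbre error.

    Gain, sign convention, and angle limits are hypothetical calibration values.
    """
    error_hz = centroid_hz - target_hz
    new_angle = angle_deg - gain * error_hz
    return max(limits[0], min(limits[1], new_angle))
```

Each new frame yields a fresh centroid, and repeating the adjustment step is what closes the loop; clamping keeps a noisy reading from driving the arm past its mechanical range.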

Composing the Impossible: AI-Generated Scores Played by Machines

Robots can interpret complex scores beyond human technical limits, such as octave-jumping arpeggios at 200 BPM or microtonal scales requiring millisecond precision. They execute these passages by converting MIDI data into kinematics, the same MIDI-to-motion idea popularized by Animusic's computer-animated instruments.
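The core arithmetic behind turning MIDI data into kinematics is converting ticks to seconds and notes to positions. The sketch below assumes a constant tempo, a hypothetical uniform key spacing, and optional microtonal offsets in cents; the function names are illustrative.

```python
import math


def ticks_to_seconds(ticks, bpm, ppq=480):
    """Convert MIDI ticks to seconds at a constant tempo (ppq = ticks per quarter note)."""
    return ticks * 60.0 / (bpm * ppq)


def pitch_to_hz(midi_note, cents=0.0):
    """Equal-tempered frequency with an optional microtonal offset in cents (A4 = 440 Hz)."""
    return 440.0 * 2 ** ((midi_note - 69 + cents / 100.0) / 12.0)


def plan_moves(events, bpm, ppq=480, mm_per_semitone=13.7):
    """Turn (tick, midi_note) events into (time_s, position_mm, speed_mm_per_s) targets.

    mm_per_semitone is a hypothetical uniform key spacing (~164 mm per octave);
    real keyboards interleave black and white keys unevenly.
    """
    plan = []
    prev_time = prev_pos = None
    for tick, note in sorted(events):
        time_s = ticks_to_seconds(tick, bpm, ppq)
        pos_mm = note * mm_per_semitone
        if prev_time is None:
            speed = 0.0
        elif time_s > prev_time:
            speed = abs(pos_mm - prev_pos) / (time_s - prev_time)
        else:
            # Simultaneous onsets cannot be reached by a single arm; flag them.
            speed = math.inf
        plan.append((time_s, pos_mm, speed))
        prev_time, prev_pos = time_s, pos_mm
    return plan
```

At 200 BPM with 480 ticks per quarter note, a sixteenth note (120 ticks) lasts 75 ms, and an octave leap in that window demands roughly 2.2 m/s of lateral travel under the assumed spacing.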

Generative AI models compose original pieces specifically optimized for robotic performance. For example, Sony's Flow Machines crafts melodies leveraging robots' zero-fatigue advantage and multi-limb independence.

This synergy results in pieces impossible for humans to play—like 12-voice fugues performed live without overdubs.
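Multi-limb independence is, at its simplest, a scheduling problem: how many limbs does a passage need, and which limb plays which note? The sketch below uses classic greedy interval partitioning, which needs exactly as many limbs as the maximum number of overlapping notes. It is an assumption-laden simplification that ignores reach and travel time, which a real planner would also have to respect.

```python
import heapq


def assign_limbs(notes):
    """notes: list of (start_s, end_s, label) with unique labels.

    Returns (limb_count, {label: limb_id}). Notes are processed by start time;
    each reuses the limb that frees up earliest, or a new limb if all are busy.
    """
    free_at = []  # min-heap of (end_time, limb_id) for limbs currently assigned
    assignment = {}
    limb_count = 0
    for start, end, label in sorted(notes):
        if free_at and free_at[0][0] <= start:
            _, limb = heapq.heappop(free_at)  # earliest-finishing limb is free again
        else:
            limb = limb_count  # every limb is busy: allocate a new one
            limb_count += 1
        assignment[label] = limb
        heapq.heappush(free_at, (end, limb))
    return limb_count, assignment
```

Under this model, a 12-voice fugue with all voices sounding at once needs 12 independent limbs.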

Tutorial: Building Your Own Instrumental Robot Music System

Stage 1: Hardware Selection (Budget: $500-$5,000)

Begin with Arduino or Raspberry Pi controllers. For string instruments, use Dynamixel servo motors with force-feedback capabilities. Piano-focused projects require solenoids with variable voltages. Open-source designs like ROS-Harp reduce prototyping costs.
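For the solenoid route, variable voltage is commonly approximated with PWM: switching a fixed supply at a duty cycle sets the average voltage. The mapping below from MIDI velocity to duty cycle and pulse length is a hypothetical calibration sketch; actual thresholds depend on the solenoid and driver circuit.

```python
def velocity_to_drive(velocity, supply_v=12.0, min_v=5.0, max_v=12.0,
                      pulse_ms_range=(8.0, 25.0)):
    """Map a MIDI velocity (0-127) to (PWM duty cycle 0..1, pulse length in ms).

    min_v is the lowest voltage that still moves the plunger; every default here
    is a hypothetical calibration value, not a datasheet figure.
    """
    if not 0 <= velocity <= 127:
        raise ValueError("MIDI velocity must be in 0..127")
    if velocity == 0:
        return 0.0, 0.0  # velocity 0 means "note off": no strike
    fraction = (velocity - 1) / 126.0
    target_v = min_v + fraction * (max_v - min_v)
    duty = min(1.0, target_v / supply_v)  # average voltage = duty * supply
    low_ms, high_ms = pulse_ms_range
    pulse_ms = low_ms + fraction * (high_ms - low_ms)  # longer pulse for louder hits
    return duty, pulse_ms
```

On a Raspberry Pi, the duty cycle (multiplied by 100) could be passed to a PWM output such as an `RPi.GPIO` `PWM` object's `start()` method, driving the solenoid through a MOSFET rather than directly from the GPIO pin.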

Stage 2: Mapping Musical Data

Convert sheet music into robot instructions using Python libraries like music21. Define parameters such as:

- Strike velocity for percussion dynamics

- Bowing pressure for string articulation

- Sustain pedal intervals for pianos
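Once note data has been extracted (in music21, note objects expose values such as `offset`, `pitch.midi`, and `quarterLength`), the parameters above reduce to lookup tables and tempo arithmetic. This sketch takes plain tuples so it runs without music21, treats one beat as a quarter note, and uses hypothetical dynamic-to-velocity and articulation-to-pressure tables that would be tuned per instrument.

```python
from dataclasses import dataclass

# Hypothetical lookup tables; tune them per instrument.
DYNAMIC_TO_VELOCITY = {"pp": 33, "p": 49, "mp": 64, "mf": 80, "f": 96, "ff": 112}
ARTICULATION_TO_BOW_PRESSURE = {"legato": 0.5, "tenuto": 0.6, "staccato": 0.8}


@dataclass
class RobotInstruction:
    time_s: float          # onset time
    midi_note: int
    strike_velocity: int   # percussion/piano dynamics on the MIDI 1-127 scale
    bow_pressure: float    # normalized 0..1 for string articulation
    duration_s: float


def to_instructions(notes, bpm):
    """notes: iterable of (offset_beats, midi_note, duration_beats, dynamic, articulation)."""
    sec_per_beat = 60.0 / bpm
    return [
        RobotInstruction(
            time_s=offset * sec_per_beat,
            midi_note=pitch,
            strike_velocity=DYNAMIC_TO_VELOCITY[dynamic],
            bow_pressure=ARTICULATION_TO_BOW_PRESSURE.get(articulation, 0.5),
            duration_s=length * sec_per_beat,
        )
        for offset, pitch, length, dynamic, articulation in sorted(notes)
    ]


def pedal_commands(pedal_spans, bpm):
    """Turn (start_beat, end_beat) sustain-pedal spans into timed 'down'/'up' commands."""
    sec_per_beat = 60.0 / bpm
    commands = []
    for start, end in pedal_spans:
        commands.append((start * sec_per_beat, "down"))
        commands.append((end * sec_per_beat, "up"))
    # At equal times, release before pressing so a re-pedal leaves the pedal down.
    return sorted(commands, key=lambda cmd: (cmd[0], cmd[1] == "down"))
```

Pedal spans that meet exactly are ordered up-then-down, which mirrors how a pianist re-pedals on a harmony change.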

Generate MIDI files through AI tools like Google's Magenta, then translate to G-code for mechanical execution.
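The G-code step might look like the sketch below for a gantry-style player: dwell until the next onset, move over the key, strike, and lift. It assumes Marlin-style firmware (where `G4 P` is a dwell in milliseconds), a hypothetical key spacing and strike depth, and it ignores move time, so real timing would need compensation.

```python
def notes_to_gcode(notes, mm_per_semitone=13.7, lowest_note=21,
                   strike_z=-8.0, rest_z=0.0, feed=6000):
    """notes: list of (onset_s, midi_note). Returns G-code lines as strings.

    Key spacing, lowest note (A0 = 21), strike depth, and feed rate are
    hypothetical values for an imagined single-striker gantry.
    """
    lines = ["G21 ; millimetre units", "G90 ; absolute positioning"]
    prev_t = 0.0
    for t, note in sorted(notes):
        wait_ms = round((t - prev_t) * 1000)
        if wait_ms > 0:
            lines.append(f"G4 P{wait_ms} ; dwell until onset (Marlin-style: P in ms)")
        x = (note - lowest_note) * mm_per_semitone
        lines.append(f"G0 X{x:.1f}")                  # travel over the key
        lines.append(f"G1 Z{strike_z:.1f} F{feed}")   # strike
        lines.append(f"G1 Z{rest_z:.1f} F{feed}")     # lift
        prev_t = t
    return lines
```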

Stage 3: Calibration & Machine Learning

Train models using TensorFlow with audio input from USB microphones. Teach robots to recognize when a trumpet note splatters and to correct their embouchure accordingly. Reinforcement learning algorithms reward optimal tone production.

Iteratively test with spectrogram analysis until harmonic spectra match target benchmarks.
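For the calibration loop, one simple reward is the negative distance between the measured harmonic profile and a target profile, with training stopping once the distance falls below a tolerance. The sketch measures each harmonic with a single-frequency DFT and assumes a known fundamental; the tolerance and the choice of Euclidean distance are illustrative assumptions rather than a prescribed metric.

```python
import cmath
import math


def harmonic_profile(samples, sample_rate, f0, n_harmonics=6):
    """Normalized magnitudes at integer multiples of f0 (one DFT evaluation per harmonic)."""
    mags = []
    for h in range(1, n_harmonics + 1):
        freq = h * f0
        acc = sum(
            s * cmath.exp(-2j * math.pi * freq * t / sample_rate)
            for t, s in enumerate(samples)
        )
        mags.append(abs(acc))
    total = sum(mags)
    return [m / total for m in mags] if total else mags


def tone_reward(profile, target):
    """Negative Euclidean distance: 0 is a perfect match, and an RL agent maximizes it."""
    return -math.sqrt(sum((p - q) ** 2 for p, q in zip(profile, target)))


def converged(profile, target, tolerance=0.05):
    """Stop calibrating once the profile is within `tolerance` of the target."""
    return -tone_reward(profile, target) < tolerance
```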

The Art of the Uncanny: Emotional Impact of Robotic Performance

A 2023 UC Berkeley study revealed audiences consistently misattributed emotionally complex Instrumental Robot Music to humans. This "sonic uncanny valley" effect occurs when nuance exceeds expectations.

Critically, robots exhibit "imperfection intentionality": programmed variances in timing and dynamics evoke human-like expressiveness.

Projects like Yuri Suzuki's Phonomaton intentionally exaggerate vibrato to trigger nostalgic responses. This deliberate imperfection makes performances emotionally resonant rather than sterile.
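Programmed imperfection can be as simple as seeded random jitter on onset times and velocities. The standard deviations below are hypothetical starting points, and a fixed seed keeps a performance reproducible for rehearsal. Exaggerated vibrato, as in the Phonomaton example, would require pitch modulation and is not covered by this sketch.

```python
import random


def humanize(events, timing_sd_ms=8.0, velocity_sd=4.0, seed=None):
    """events: list of (time_s, velocity). Returns a jittered copy in the same order.

    Gaussian noise is added to onset times (clamped at 0 s) and velocities
    (rounded and clamped to the MIDI range 1..127).
    """
    rng = random.Random(seed)  # private generator so the global RNG is untouched
    out = []
    for t, v in events:
        jittered_t = max(0.0, t + rng.gauss(0.0, timing_sd_ms / 1000.0))
        jittered_v = min(127, max(1, round(v + rng.gauss(0.0, velocity_sd))))
        out.append((jittered_t, jittered_v))
    return out
```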

Ethical Crossroads: Creativity, Copyright, and Craft

Authorship Dilemmas

When an AI algorithm composes music played by a robot, traditional copyright models collapse. Legal precedents now recognize:

- Programmers as co-creators

- Robots as "tools of expression"

See landmark case HIT Productions v. Berklee AIR (2022).

Economic Disruption

Robots require no union fees, health insurance, or rehearsal time. Broadway's Robo-Orchestra cut production costs by 60% post-pandemic, accelerating adoption. As noted in our analysis of Musical Instrument Robots, this redefines music's creative economy.

The Future Stage: AI's Next Movements

Generative Improvisation

Berlin's Klangmaschinen uses GPT-4 architecture for real-time jazz dialogues. Robots anticipate band members' melodic choices using transformer networks and respond thematically.

Biomechanical Hybrids

MIT's prototype exoskeleton glove enhances human musicians with robotic precision. Hybrid systems preserve emotional intention while eliminating technical errors.

Such innovations validate claims detailed in From Circuits to Cadenzas, revealing how AI shatters creative barriers.

FAQs: Your Burning Questions Answered

Can robots truly express emotion through music?

Yes, through computational emotional models that associate minor keys with melancholy and crescendos with tension. In controlled trials, 78% of listeners reported feeling an emotional impact.

Do musicians consider this a threat?

It's divisive: Yo-Yo Ma endorses robots for accessibility, while Metallica's Lars Ulrich warns against "soulless replication." Most agree collaboration is inevitable.

What's the cheapest instrument to automate?

Hand percussion (cajóns, bongos). Solenoids cost under $15 each, with Raspberry Pi controllers starting at $35.

How does latency affect live performance?

5G networks reduced lag to 8ms—faster than human neural transmission (15ms). This enables seamless ensemble synchronization.