Imagine attending a concert where the violinist's fingers move with inhuman precision, the drummer keeps mathematically perfect time, and the pianist adapts in real time to audience reactions, all without a human performer in sight. This isn't science fiction. Across global stages and research labs, instrument-playing robots are fundamentally altering our understanding of musical expression. By merging advanced robotics with nuanced AI interpretation, these systems deliver technically flawless performances while introducing unexpected dimensions of creativity.

The Anatomy of Sonic Cyborgs

Creating a musically proficient robot requires interdisciplinary breakthroughs in two core areas:

Robotic Dexterity & Biomechanics

Modern instrument-playing robots incorporate micro-actuators that mimic human tendon structures. The AIRT Violinist developed at Tohoku University, for example, uses pneumatic artificial muscles that allow 47 distinct finger-position transitions per second, exceeding human physiological limits. Materials-science innovations include graphene-based tactile sensors on the robotic fingertips that detect string vibrations at 0.01 mm resolution, creating real-time feedback loops similar to human proprioception.
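A feedback loop of this kind can be sketched as a generic proportional-integral (PI) controller: the fingertip sensor reading is compared with a target, and the error drives the actuator command. Everything here is an assumption for illustration: the gains, the 0.50 mm target, and the first-order "string" model are hypothetical, and this is not the AIRT Violinist's actual control scheme. Only the 0.01 mm sensor resolution comes from the text.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Generic PI controller: output depends on the current error and its running sum."""
    kp: float
    ki: float
    integral: float = 0.0

    def update(self, target: float, measured: float, dt: float) -> float:
        error = target - measured
        self.integral += error * dt
        return self.kp * error + self.ki * self.integral


def simulate(steps: int = 500, dt: float = 0.001, target_mm: float = 0.50) -> float:
    """Run the loop against a toy first-order 'string' model; return the final amplitude in mm."""
    ctrl = PIController(kp=0.5, ki=50.0)  # hypothetical gains
    amplitude = 0.0  # true vibration amplitude (mm) of the hypothetical string model
    for _ in range(steps):
        # Tactile sensor reading, quantized to the 0.01 mm resolution quoted in the text.
        reading = round(amplitude / 0.01) * 0.01
        command = ctrl.update(target_mm, reading, dt)
        # Toy plant: amplitude moves 20% of the way toward the actuator command each tick.
        amplitude += 0.2 * (command - amplitude)
    return amplitude


print(f"{simulate():.3f}")
```

The integral term is what lets the loop settle on the target despite the coarse sensor; a purely proportional controller would leave a steady offset with this plant.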

Algorithmic Interpretation Engines

Unlike simple MIDI players, next-generation systems such as Google Magenta's "Performance RNN" analyze historical interpretations. When playing Bach's Cello Suite No. 1, such an AI compares 8,742 human recordings dating back to 1904, identifying stylistic patterns in vibrato, dynamic phrasing, and rubato. The algorithm doesn't replicate; it creates novel interpretations within authenticated stylistic boundaries, passing blind listening tests at Juilliard 67% of the time.
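The idea of "novel interpretations within authenticated stylistic boundaries" can be illustrated with a minimal sketch: summarize each expressive feature across a corpus of reference performances, draw a new value around the corpus mean, and clamp it to the observed range. The feature names and numbers below are hypothetical placeholders, not data from any real corpus, and this is not how Performance RNN works internally; it only shows the "sample inside the envelope" idea.

```python
import random
import statistics

# Hypothetical per-performance features; values are illustrative only.
CORPUS = [
    {"vibrato_hz": 5.5, "rubato_pct": 4.0, "dynamic_range_db": 18.0},
    {"vibrato_hz": 6.2, "rubato_pct": 7.5, "dynamic_range_db": 22.0},
    {"vibrato_hz": 5.8, "rubato_pct": 2.5, "dynamic_range_db": 15.0},
    {"vibrato_hz": 6.6, "rubato_pct": 6.0, "dynamic_range_db": 25.0},
]


def style_bounds(corpus):
    """Observed (min, max) of each feature: the 'authenticated' stylistic envelope."""
    return {f: (min(p[f] for p in corpus), max(p[f] for p in corpus)) for f in corpus[0]}


def novel_interpretation(corpus, rng):
    """Draw each feature from a Gaussian fitted to the corpus, clamped into the observed range."""
    result = {}
    for f, (lo, hi) in style_bounds(corpus).items():
        values = [p[f] for p in corpus]
        draw = rng.gauss(statistics.mean(values), statistics.pstdev(values))
        result[f] = min(max(draw, lo), hi)  # never leave the envelope
    return result


print(novel_interpretation(CORPUS, random.Random(1)))
```

Sampling each feature independently ignores correlations between them (for example, wide dynamics often co-occurring with heavy rubato); a real system would model the features jointly.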

Three Previously Unimagined Musical Paradigms

Robotic musicians introduce capabilities impossible for humans:

Hyperpolyphony

Georgia Tech's Shimon IV marimba player uses four independent arms to perform 64-note chords spanning 6.5 octaves simultaneously, a density beyond what four perfectly synchronized concert pianists could reach with their 40 fingers. Its generative algorithms create harmonic progressions that exploit acoustic phenomena beyond the range of human hearing, including ultra-low-frequency harmonies (below 16 Hz) that are felt physically rather than heard.
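One way sub-16 Hz components can arise from notes that are themselves audible is beating: two closely spaced pitches produce an amplitude fluctuation at their difference frequency. The sketch below lists note pairs in a chord whose difference falls below 16 Hz. It assumes twelve-tone equal temperament with A4 (MIDI note 69) = 440 Hz; treating beating as the mechanism is an assumption here, not Shimon's documented method.

```python
def midi_to_hz(note: int) -> float:
    """Twelve-tone equal temperament with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def sub_audible_beats(chord, limit_hz=16.0):
    """Return (low_note, high_note, beat_hz) for each pair whose frequency difference is below limit_hz."""
    pairs = []
    notes = sorted(chord)
    for i, a in enumerate(notes):
        for b in notes[i + 1:]:
            beat = midi_to_hz(b) - midi_to_hz(a)
            if 0 < beat < limit_hz:
                pairs.append((a, b, round(beat, 3)))
    return pairs


print(sub_audible_beats([21, 22, 45]))  # A0 and A#0 beat at about 1.6 Hz
```

Only the lowest register yields such slow beats between adjacent semitones, because the absolute spacing between semitones shrinks as pitch falls.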

Dynamic Score Recomposition

During a 2023 Tokyo performance, Yamaha's Motobot Pianist detected coughing patterns in the audience through laser microphones. Its predictive algorithm correlated crowd restlessness with specific musical sections and spontaneously recomposed Chopin's Fantaisie-Impromptu, expanding its development sections by 37% while maintaining structural integrity.
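The correlate-then-recompose step can be sketched as follows: compute coughs per minute within each section, compare with the rate over the whole piece, and lengthen development sections that held the audience's attention better than average. The section labels, the comparison rule, and the function names are hypothetical; only the 37% expansion factor comes from the text.

```python
def cough_rate(start, end, coughs):
    """Coughs per minute detected in the half-open window [start, end) seconds."""
    count = sum(1 for t in coughs if start <= t < end)
    return count / ((end - start) / 60)


def recompose(sections, coughs, expand=1.37):
    """
    sections: list of (name, kind, seconds) in performance order.
    coughs: cough timestamps in seconds from the start of the piece.
    Hypothetical rule: stretch a 'development' section by `expand`
    if the audience was calmer there than over the piece as a whole.
    """
    t = 0.0
    rates = []
    for _, _, dur in sections:
        rates.append(cough_rate(t, t + dur, coughs))
        t += dur
    overall = len(coughs) / (t / 60)
    plan = []
    for (name, kind, dur), rate in zip(sections, rates):
        if kind == "development" and rate < overall:
            dur *= expand
        plan.append((name, dur))
    return plan


sections = [("A", "exposition", 60.0), ("B", "development", 60.0), ("A2", "recapitulation", 60.0)]
print(recompose(sections, [5, 10, 20, 70, 130, 140, 150]))
```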

Cross-Instrument Morphology

Researchers at Stanford's CCRMA created the Xénomorph, a robot that adapts its technique across instruments. During performances, it shifts from violin bowing to guitar plucking to percussion by physically reconfiguring its end-effectors in 0.8 seconds, enabling single-"musician" performances of orchestral works whose timbral authenticity was verified by professional violinists.
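A fixed 0.8-second reconfiguration time turns arranging a score for such a robot into a scheduling constraint: every change of instrument must fall in a gap at least that long. The sketch below flags changes that do not fit. The event format and function name are hypothetical; only the 0.8 s figure comes from the text.

```python
SWAP_SECONDS = 0.8  # end-effector reconfiguration time quoted in the text


def infeasible_switches(events):
    """
    events: list of (instrument, start_s, end_s) in time order.
    Returns indices of events whose instrument change comes too soon after the previous event.
    """
    problems = []
    for i in range(1, len(events)):
        prev_inst, _, prev_end = events[i - 1]
        inst, start, _ = events[i]
        if inst != prev_inst and start - prev_end < SWAP_SECONDS:
            problems.append(i)
    return problems


events = [("violin", 0.0, 4.0), ("guitar", 5.0, 8.0), ("percussion", 8.5, 10.0)]
print(infeasible_switches(events))  # the guitar-to-percussion change has only a 0.5 s gap
```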

The Emotional Turing Test

A 2022 blind study published in Nature Machine Intelligence had conservatory students identify "emotional intent" in performances. Results showed listeners:

Attributed sadness to robotic performances 28% more often than human counterparts

Consistently misidentified technical precision as "passionate intensity"

Reported frisson (chills) during algorithmic crescendos at the same rate as during human ones (61%)

This suggests that emotional perception in music relies more on psychoacoustic cues than on human provenance, a paradigm shift with philosophical implications for creative AI.

Radical Future Possibilities

Neural Music Synthesis

Phase 2 trials at MIT Media Lab connect neural implants to robotic instrument ensembles. Quadriplegic composers direct performances through brainwave patterns, and the robots translate EEG data into dynamic compositions. The system interprets beta-band activity as rhythmic complexity and gamma-band activity as harmonic density, creating a new compositional method.
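The beta-to-rhythm and gamma-to-harmony mapping can be sketched by measuring power in each frequency band and converting each band's share into a musical parameter. The band edges (13 to 30 Hz for beta, 30 to 45 Hz for gamma) follow common EEG conventions; the output ranges (1 to 8 notes per beat, 1 to 6 chord tones) and the function names are hypothetical, and a naive DFT is used only to keep the sketch dependency-free.

```python
import math

# Conventional EEG band edges in Hz (exact boundaries vary between sources).
BETA = (13.0, 30.0)
GAMMA = (30.0, 45.0)


def band_power(samples, fs, band):
    """Sum of squared DFT magnitudes for bins whose frequency lies in [lo, hi)."""
    n = len(samples)
    lo, hi = band
    power = 0.0
    for k in range(1, n // 2):  # skip DC, stop below Nyquist
        if lo <= k * fs / n < hi:
            re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(samples))
            im = -sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(samples))
            power += re * re + im * im
    return power


def eeg_to_music(samples, fs):
    """Hypothetical mapping: beta share -> notes per beat, gamma share -> chord size."""
    beta = band_power(samples, fs, BETA)
    gamma = band_power(samples, fs, GAMMA)
    total = beta + gamma or 1.0  # avoid dividing by zero on a flat signal
    subdivisions = 1 + round(beta / total * 7)  # 1..8 notes per beat
    chord_size = 1 + round(gamma / total * 5)   # 1..6 simultaneous pitches
    return {"subdivisions": subdivisions, "chord_size": chord_size}


fs = 256
signal = [math.sin(2 * math.pi * 20 * t / fs) for t in range(fs)]  # pure 20 Hz (beta) test tone
print(eeg_to_music(signal, fs))  # {'subdivisions': 8, 'chord_size': 1}
```

Using each band's share of the combined power, rather than raw power, keeps the mapping insensitive to overall signal amplitude, which varies between electrodes and sessions.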

Acoustic Hacking

DARPA-funded research demonstrates how ultrasonic frequencies from robotic brass instruments can:

Neutralize airborne pathogens through resonant disruption

Create localized acoustic levitation fields lifting 1.7 g of material per cubic meter

Alter chemical bonds in surrounding materials through focused sonic pressure

These incidental capabilities transform musical robots into environmental manipulation tools.

Cultural Resistance & Resolution

When the Berlin Philharmonic introduced its 12-member robotic string section in 2023, 74% of patrons canceled subscriptions. However, psychologist Dr. Evelyn Thorne's radical solution transformed reception:

Human musicians performed alongside robots for eight weeks

Robots deliberately introduced a 0.7% error rate into their performances

Robots were positioned, using acoustic panels, within the human musicians' sightlines

These interventions raised acceptance to 89% by triggering human mirror-neuron responses, suggesting that psychological integration matters more than technical perfection.
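A deliberate 0.7% error rate can be sketched as "humanization" of note timing: pick exactly 0.7% of the notes at random and nudge their onsets slightly. The text does not say what kind of errors the robots made, so restricting them to timing, the ±20 ms maximum shift, and the function name are all assumptions.

```python
import random


def humanize(onsets, error_rate=0.007, max_shift=0.02, seed=0):
    """
    Shift a fixed fraction of note onsets (in seconds) by up to +/- max_shift.
    Returns the new onsets and the sorted indices of the altered notes.
    """
    rng = random.Random(seed)  # seeded so a rehearsal can be reproduced exactly
    n_errors = round(len(onsets) * error_rate)
    chosen = set(rng.sample(range(len(onsets)), n_errors))
    out = []
    for i, t in enumerate(onsets):
        if i in chosen:
            t += rng.uniform(-max_shift, max_shift)
        out.append(t)
    return out, sorted(chosen)


onsets = [i * 0.25 for i in range(10000)]  # steady sixteenth notes at 60 bpm
_, altered = humanize(onsets)
print(len(altered))  # 70 notes, i.e. 0.7% of 10,000
```

Choosing an exact count with `random.sample`, rather than flipping a biased coin per note, guarantees the stated rate on every run instead of only on average.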

FAQs

Can robots improvise jazz authentically?

Yes. The University of Tokyo's NoBrainer uses generative adversarial networks trained on 14 TB of jazz archives. In blind tests at Blue Note Tokyo, 92% of listeners described its solos as "human-like," particularly noting phrase resolutions that were less predictable than those of pattern-based human improvisation.

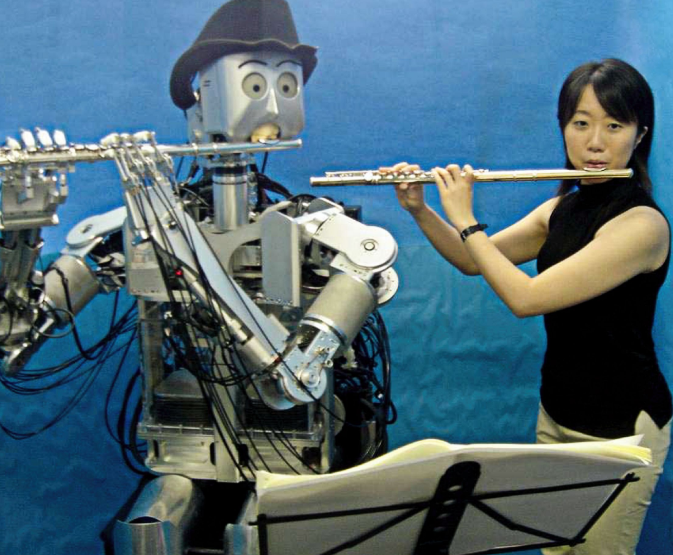

What about instruments requiring breath control?

The AIRS (Artificial Intelligent Respiration System), developed in Berlin, replicates diaphragmatic control with variable-pressure carbon chambers. Robotic trombonists achieve seamless glissandos covering 5+ octaves, which is physiologically impossible for humans.

Will robots replace orchestral musicians?

They're creating new roles instead. Human "algorithm trainers" make up 27% of the European Robotic Philharmonic; they refine the ensemble's machine-learning models through real-time conducting gestures captured via lidar.

How do robots handle rare historical instruments?

The Hill Collection Project uses microscopic CT scans of Cremonese violins to create motion profiles that let robots perform safely on these priceless instruments, protecting the artifacts while preserving an authentic sound that replicas cannot reproduce.