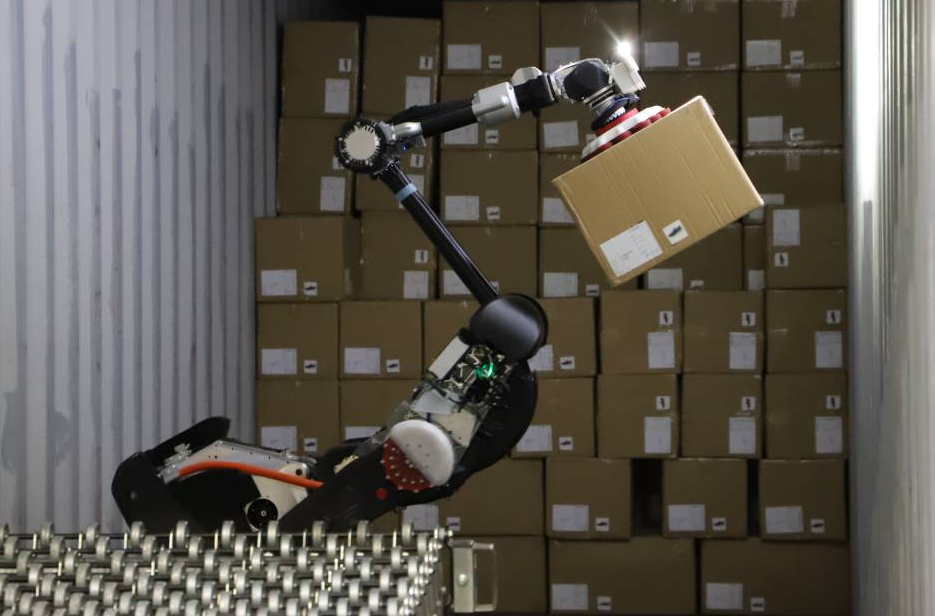

The OWMM-Agent Cross-Modal Robotics system has hit a major milestone: 90% zero-shot action accuracy, meaning it correctly executes actions on tasks it was never specifically trained for. This Cross-Modal Robotics approach combines visual, auditory, and tactile inputs so that robots can adapt to new environments and tasks without task-specific retraining.

Understanding OWMM-Agent Technology Architecture

Mate, let me tell you about OWMM-Agent Cross-Modal Robotics: this tech is absolutely bonkers! The system integrates multiple sensory modalities into a single, unified representation. Unlike traditional robots that process one input stream at a time, this bad boy processes visual data, audio signals, and tactile feedback simultaneously.

The magic happens in the cross-modal fusion layer, where different types of sensory information are combined and interpreted together. OWMM-Agent uses neural networks that learn the relationships between what the robot sees, hears, and feels. It's a bit like giving a robot human-style sensory integration!
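To make the fusion idea concrete, here's a minimal late-fusion sketch. It is not OWMM-Agent's actual code: the function names, the weighted-sum rule and the toy vectors are all hypothetical, and it assumes each modality's encoder has already turned raw camera, microphone and tactile data into feature vectors of the same length.

```python
import math
from typing import Dict, List

Vector = List[float]

def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so no modality dominates by raw magnitude alone."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)

def fuse_modalities(features: Dict[str, Vector], weights: Dict[str, float]) -> Vector:
    """Hypothetical fusion step: weighted sum of normalized per-modality feature vectors."""
    dims = {len(v) for v in features.values()}
    if len(dims) != 1:
        raise ValueError("all modality features must share one dimensionality")
    dim = dims.pop()
    total = sum(weights[m] for m in features)
    if total <= 0:
        raise ValueError("modality weights must sum to a positive value")
    fused = [0.0] * dim
    for modality, vec in features.items():
        w = weights[modality] / total  # renormalize so the weights sum to 1
        for i, x in enumerate(l2_normalize(vec)):
            fused[i] += w * x
    return fused

# Toy 2-D "embeddings" standing in for real encoder outputs (hypothetical values).
fused = fuse_modalities(
    {"vision": [3.0, 4.0], "audio": [0.0, 2.0], "touch": [1.0, 0.0]},
    {"vision": 2.0, "audio": 1.0, "touch": 1.0},
)
print(fused)
```

Normalizing before weighting is a design choice: it keeps a loud microphone or a high-contrast image from swamping the other senses purely because its features happen to be larger numbers.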

What's absolutely mind-blowing is the zero-shot capability. The robot doesn't need specific training for each new task: it generalises from its existing knowledge and reaches 90% accuracy on actions it has never been trained on. That matters because robots can adapt to new situations without lengthy retraining!
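One common way to get zero-shot behaviour is to compare an embedding of the instruction against embeddings of candidate actions and pick the closest match, with no per-task training step. The sketch below shows that idea only; it assumes OWMM-Agent works along these lines, and the names `zero_shot_select` and `ACTIONS` plus the hand-written vectors are hypothetical stand-ins for a pretrained encoder's output.

```python
import math
from typing import Dict, List, Tuple

def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def zero_shot_select(query: List[float], actions: Dict[str, List[float]]) -> Tuple[str, float]:
    """Pick the action whose embedding is closest to the query; nothing is trained per task."""
    if not actions:
        raise ValueError("no candidate actions")
    best_name, best_vec = max(actions.items(), key=lambda item: cosine_similarity(query, item[1]))
    return best_name, cosine_similarity(query, best_vec)

# Hypothetical toy embeddings; a real system would produce these with a pretrained model.
ACTIONS = {
    "pick":     [1.0, 0.0, 0.0],
    "place":    [0.0, 1.0, 0.0],
    "navigate": [0.0, 0.0, 1.0],
}
name, score = zero_shot_select([0.9, 0.1, 0.2], ACTIONS)
print(name, round(score, 3))
```

Adding a new action here means adding one more embedding to the dictionary, not retraining anything, which is the property the paragraph above is describing.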

Performance Metrics and Real-World Applications

The performance stats for OWMM-Agent Cross-Modal Robotics are absolutely incredible! We're talking about 90% accuracy on tasks the robot has never seen before. That's not just impressive, it's game-changing for the entire robotics industry.

| Capability | OWMM-Agent | Traditional Robotics |
|---|---|---|
| Zero-Shot Accuracy | 90% | 15-30% |
| Adaptation Time | Immediate | Hours to Days |
| Multi-Modal Processing | Yes | Limited |
| Learning Efficiency | Exponential | Linear |
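For the arithmetic behind a figure like "90% zero-shot accuracy": it is typically the share of successful attempts on tasks that were held out of training. Here's a rough sketch of that calculation; the `Episode` record, the task IDs and the 9-out-of-10 result are made-up illustrations, not data from the OWMM-Agent evaluation.

```python
from dataclasses import dataclass
from typing import Iterable, Set

@dataclass(frozen=True)
class Episode:
    """One attempt at a task (hypothetical record format)."""
    task_id: str
    success: bool

def zero_shot_accuracy(episodes: Iterable[Episode], seen_tasks: Set[str]) -> float:
    """Success rate over episodes whose task never appeared in training."""
    unseen = [e for e in episodes if e.task_id not in seen_tasks]
    if not unseen:
        raise ValueError("no episodes on unseen tasks")
    return sum(e.success for e in unseen) / len(unseen)

# Illustrative data: 10 novel-task attempts (one failure) plus one training-task attempt,
# which is excluded because it is not zero-shot.
episodes = [Episode(f"novel_{i}", i != 3) for i in range(10)] + [Episode("train_task", False)]
print(f"{zero_shot_accuracy(episodes, seen_tasks={'train_task'}):.0%}")
```

Filtering out seen tasks is the whole point: counting training tasks would inflate the number and it would no longer measure generalisation.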

In manufacturing environments, Cross-Modal Robotics systems are handling complex assembly tasks that previously required human intervention. The robots can identify defects through visual inspection, detect unusual sounds that indicate mechanical issues, and feel for proper component alignment, all simultaneously!
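A simple way to picture that simultaneous inspection is a decision rule over per-sense anomaly scores: if any sense looks wrong, flag the part and report which sense caught it. This is a hypothetical sketch, assuming each sensor pipeline already outputs an anomaly score between 0 and 1; the thresholds and the `inspect_part` name are invented for illustration.

```python
from typing import Dict, List

# Hypothetical per-modality anomaly thresholds on a 0-1 scale; real values would be tuned per line.
THRESHOLDS: Dict[str, float] = {"vision": 0.7, "audio": 0.6, "touch": 0.5}

def inspect_part(anomaly_scores: Dict[str, float],
                 thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return the modalities whose score reaches its threshold (an OR-style decision rule)."""
    return sorted(m for m, score in anomaly_scores.items() if score >= thresholds[m])

# A part that looks and feels fine but sounds wrong, e.g. a grinding bearing.
flags = inspect_part({"vision": 0.2, "audio": 0.8, "touch": 0.1})
if flags:
    print("part flagged by:", ", ".join(flags))
```

An OR rule favours catching defects over avoiding false alarms; a stricter line might require two senses to agree before stopping production.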

Healthcare applications are equally impressive. These robots can assist in surgical procedures by processing visual cues from cameras, audio feedback from medical equipment, and tactile information from instruments. A 90% accuracy rate still means roughly one action in ten can go wrong, though, so in critical situations they support medical professionals rather than replace them.

Implementation Strategies and Technical Considerations

Getting OWMM-Agent Cross-Modal Robotics up and running requires some serious planning, but the results are absolutely worth it! The key is understanding how to properly calibrate the multi-modal sensors and ensure they're working in harmony.

First up, you need to set up the sensor array correctly. The visual systems require high-resolution cameras with proper lighting conditions, audio sensors need to be positioned to minimise interference, and tactile sensors must be calibrated for the specific materials and surfaces the robot will encounter. It's like creating a sensory ecosystem!
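The three setup requirements above translate naturally into a pre-flight checklist. Here's a minimal sketch of one; the `SensorArrayConfig` fields and every threshold (720p minimum, 300 lux, 0.5 m from motors) are hypothetical placeholders, not OWMM-Agent specifications, so swap in whatever your hardware vendor recommends.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical minimum requirements; tune these for your own cell and hardware.
MIN_RESOLUTION: Tuple[int, int] = (1280, 720)  # width, height in pixels
MIN_LUX = 300.0                                # illuminance at the work surface
MIN_MIC_DISTANCE_M = 0.5                       # keep microphones away from noisy motors

@dataclass
class SensorArrayConfig:
    """Hypothetical description of one robot's sensor array."""
    camera_resolution: Tuple[int, int]
    scene_lux: float
    mic_distance_to_motor_m: float
    tactile_calibrated_materials: Set[str] = field(default_factory=set)

def check_setup(cfg: SensorArrayConfig, expected_materials: Set[str]) -> List[str]:
    """Return human-readable problems; an empty list means the setup passes these checks."""
    problems: List[str] = []
    width, height = cfg.camera_resolution
    if width < MIN_RESOLUTION[0] or height < MIN_RESOLUTION[1]:
        problems.append(f"camera resolution {width}x{height} below {MIN_RESOLUTION[0]}x{MIN_RESOLUTION[1]}")
    if cfg.scene_lux < MIN_LUX:
        problems.append(f"lighting {cfg.scene_lux} lux below {MIN_LUX} lux")
    if cfg.mic_distance_to_motor_m < MIN_MIC_DISTANCE_M:
        problems.append("microphone too close to a motor (interference risk)")
    missing = expected_materials - cfg.tactile_calibrated_materials
    if missing:
        problems.append("tactile sensors not calibrated for: " + ", ".join(sorted(missing)))
    return problems

cfg = SensorArrayConfig((1920, 1080), 500.0, 1.0, {"steel"})
print(check_setup(cfg, {"steel", "rubber"}))
```

Returning a list of problems rather than raising on the first one lets a technician fix everything in a single pass.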

The software integration is where things get really interesting. The OWMM-Agent uses sophisticated algorithms to weight different sensory inputs based on the task at hand. For delicate assembly work, tactile feedback might be prioritised, while navigation tasks rely more heavily on visual and audio cues. The system learns these preferences automatically!
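Task-dependent weighting can be sketched as a softmax over per-task priority scores, which turns arbitrary scores into weights that sum to 1. In the article's description OWMM-Agent learns these preferences; in this hypothetical sketch the `TASK_PRIORITIES` table is hand-set purely to show the mechanism, and the output could feed a fusion step like a weighted sum of modality features.

```python
import math
from typing import Dict

# Hypothetical per-task priority scores (logits). A learned system would fit these;
# here they are hand-set to mirror the examples in the text.
TASK_PRIORITIES: Dict[str, Dict[str, float]] = {
    "assembly":   {"vision": 1.0, "audio": 0.0, "touch": 2.0},
    "navigation": {"vision": 2.0, "audio": 1.0, "touch": 0.0},
}

def modality_weights(task: str, temperature: float = 1.0) -> Dict[str, float]:
    """Softmax over the task's priority scores; lower temperature gives sharper preferences."""
    scores = TASK_PRIORITIES[task]
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {m: math.exp((s - top) / temperature) for m, s in scores.items()}
    total = sum(exps.values())
    return {m: e / total for m, e in exps.items()}

for task in TASK_PRIORITIES:
    weights = modality_weights(task)
    print(task, {m: round(w, 2) for m, w in weights.items()})
```

The temperature knob is a design choice: a high temperature blends all senses almost evenly, while a low one lets the dominant sense take over.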

Performance optimisation is crucial for maintaining that 90% accuracy rate. Regular calibration checks, sensor cleaning protocols, and software updates ensure the Cross-Modal Robotics system continues operating at peak efficiency. The good news is that the system includes self-diagnostic capabilities that alert you to potential issues before they impact performance!
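A self-diagnostic of this kind can be as simple as watching a sensor's rolling average at a known reference state and raising an alert when it drifts away from the calibration baseline. The sketch below is a hypothetical illustration of that idea, not OWMM-Agent's built-in diagnostics; `DriftMonitor`, the window size and the tolerance are all assumptions.

```python
from collections import deque
from statistics import fmean

class DriftMonitor:
    """Hypothetical self-check: alert when a sensor's recent average leaves its calibration band."""

    def __init__(self, baseline: float, tolerance: float, window: int = 5):
        self.baseline = baseline              # value recorded at the last calibration
        self.tolerance = tolerance            # allowed deviation before raising an alert
        self.readings = deque(maxlen=window)  # only the most recent readings are kept

    def update(self, value: float) -> bool:
        """Add a reading; return True if the rolling mean has drifted out of tolerance."""
        self.readings.append(value)
        if len(self.readings) < self.readings.maxlen:
            return False  # not enough data yet to judge drift
        return abs(fmean(self.readings) - self.baseline) > self.tolerance

monitor = DriftMonitor(baseline=1.0, tolerance=0.1, window=3)
for reading in [1.0, 1.0, 1.0, 1.6]:
    if monitor.update(reading):
        print(f"recalibration suggested: reading {reading} pushed the rolling mean out of tolerance")
```

Averaging over a window means a single noisy spike is less likely to trigger a false alarm, while a sustained shift (a dirty lens, a loosened tactile pad) still gets caught.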

Future Developments and Industry Impact

The future of OWMM-Agent Cross-Modal Robotics is absolutely mind-blowing! We're already seeing research into even more advanced sensory integration, including chemical sensors for smell and taste, electromagnetic field detection, and even quantum sensing capabilities.

The impact on industry could be massive. Some manufacturing companies report productivity increases of 40-60% after implementing Cross-Modal Robotics systems. Because the robots can handle unexpected situations without human intervention, round-the-clock operations become genuinely feasible!

What's really exciting is how this technology is democratising advanced robotics. Previously, only massive corporations could afford sophisticated robotic systems. Now, smaller companies can implement OWMM-Agent solutions that rival the capabilities of much more expensive traditional systems. It's levelling the playing field!

Research institutions are pushing the boundaries even further. We're seeing experiments with swarm robotics where multiple OWMM-Agent units work together, sharing sensory information and coordinating actions. The collective intelligence that emerges from these systems is absolutely fascinating!

The OWMM-Agent Cross-Modal Robotics system represents a paradigm shift in robotic technology, delivering 90% zero-shot action accuracy through multi-modal sensory integration. This breakthrough in Cross-Modal Robotics opens new possibilities for autonomous systems across manufacturing, healthcare, and service industries. As the technology matures, we can expect broader capabilities and applications, making intelligent robotics accessible to organisations of all sizes.