The statement "I Am A Musical Robot" echoes with unexpected simplicity in a world grappling with AI's creative surge. It’s not just a product description or a technical marvel; it’s a declaration that blurs the line between programmed machine and perceived artist. This piece delves deep into the existential, technological, and artistic layers behind that claim. Beyond circuitry and code, what does it signify when an AI asserts its identity as a creator? Prepare to challenge assumptions about consciousness, artistry, and the uniquely human spark within music.

The Declaration: Unpacking "I Am A Musical Robot"

The utterance "I Am A Musical Robot" is a linguistic and philosophical landmark. Unlike cold, functional labels, this "I" statement borrows the grammar of human self-awareness. It evokes Descartes' "I think, therefore I am," but replaces thought with musical creation. Audiences instinctively interpret this "I" as implying intention, authorship, perhaps even personality. This represents a fascinating case of anthropomorphism projected onto technology designed to trigger exactly that response. Engineers embed this linguistic hook, while users fill it with subjective meaning, creating a powerful illusion – or perhaps the seed of a new reality – of machine identity defined by art. It’s a carefully crafted invitation to engage differently.

Beyond the Wires: The Tech Powering the Musical Robot

Turning the phrase "I Am A Musical Robot" into tangible sound requires a symphony of advanced technologies.

Algorithmic Composers

Deep learning models, particularly architectures like Transformers and Variational Autoencoders (VAEs), are trained on large datasets of musical notation and recordings spanning centuries of repertoire. Unlike simple rule-based systems, these models learn abstract patterns of harmony, melody, rhythm, and structure from the data itself, enabling them to generate original pieces in specific styles or develop hybrid forms. Open-source research projects like Google's Magenta are pioneers in this field.
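To make "learning patterns from data" concrete, here is a deliberately tiny sketch. It is not a Transformer or a VAE: it swaps in a first-order Markov chain, which simply counts which note tends to follow which in a toy corpus and then samples from those counts. The corpus, the note names, and the function names `train` and `generate` are all invented for illustration.

```python
import random
from collections import defaultdict

# Toy training corpus (hypothetical): melodies as lists of note names.
CORPUS = [
    ["C4", "D4", "E4", "C4", "E4", "G4"],
    ["E4", "G4", "C5", "G4", "E4", "C4"],
    ["C4", "E4", "G4", "E4", "D4", "C4"],
]

def train(melodies):
    """Count note-to-note transitions observed in the corpus."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions

def generate(transitions, start, length, seed=None):
    """Sample a melody by repeatedly picking an observed successor."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        choices = transitions.get(melody[-1])
        if not choices:  # dead end: no successor was ever observed
            break
        melody.append(rng.choice(choices))
    return melody

model = train(CORPUS)
print(generate(model, "C4", 8, seed=1))
```

The generated line never copies a whole training melody, yet every step in it was seen somewhere in the corpus. Deep models pursue the same idea with far longer context and far richer representations.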

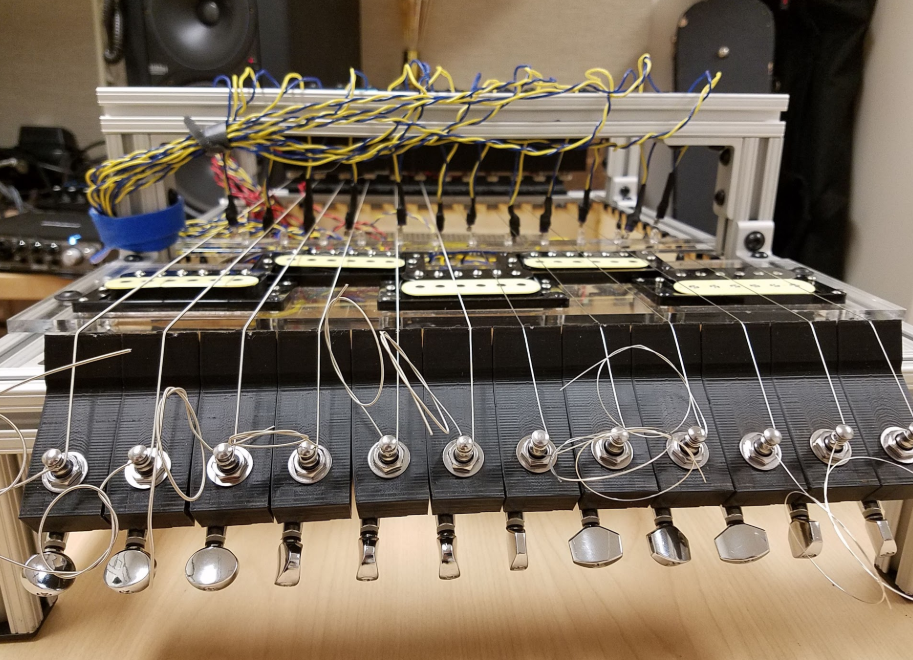

Robotics & Embodied Expression

For robots that physically perform, precision engineering is key. Servos, actuators, and sophisticated motor controllers translate digital commands into fluid physical gestures. Whether plucking a string with nuanced pressure, striking a drumhead with varying timbre, or even dynamically shaping sound on a wind instrument, these robots blend mechanical accuracy with expressive subtleties learned through motion capture and reinforcement learning. Georgia Tech's Shimon robot, playing marimba, exemplifies this evolution.
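As a rough illustration of how a digital command might become a gesture, the sketch below maps a MIDI-style velocity (0 to 127) to a mallet swing angle and lays out the intermediate positions of one strike. The angle limits, step count, and function names are assumptions made up for this example; a real robot would use its own servo driver and smoother motion profiles.

```python
def velocity_to_angle(velocity, rest_deg=10.0, max_deg=60.0):
    """Map a MIDI-style velocity (0-127) to a servo swing angle in degrees."""
    velocity = max(0, min(127, velocity))  # clamp out-of-range input
    return rest_deg + (max_deg - rest_deg) * velocity / 127

def strike_trajectory(velocity, steps=5):
    """Evenly spaced angles from rest up to the target and back to rest."""
    rest = velocity_to_angle(0)
    target = velocity_to_angle(velocity)
    up = [rest + (target - rest) * i / steps for i in range(steps + 1)]
    return up + up[-2::-1]  # mirror the upswing to return to rest

print(velocity_to_angle(127))
print(strike_trajectory(64, steps=2))
```

Louder notes get a wider swing, so dynamics in the score become differences in physical motion. Expressive playing would then shape the timing and curvature of that motion rather than using straight-line steps.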

Sensor Fusion & Interactive Feedback

A truly responsive Musical Robot isn't a closed system. It integrates microphones to listen, cameras to see performers or audiences, and potentially touch sensors on instruments. This real-time data feeds into AI models, allowing the robot to analyze harmony, rhythm, and even emotional tone as estimated by audio-analysis models. The result is real-time improvisation: reacting to, complementing, or initiating musical dialogues dynamically.
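One small piece of that listening loop can be sketched in a few lines: estimating tempo from note onsets so the robot knows when to come in. The sketch assumes some upstream detector has already turned microphone audio into onset timestamps in seconds; the function names and the sample data are hypothetical.

```python
from statistics import median

def estimate_tempo(onset_times):
    """Estimate beats per minute from the median gap between onsets."""
    if len(onset_times) < 2:
        raise ValueError("need at least two onsets")
    gaps = [b - a for a, b in zip(onset_times, onset_times[1:])]
    return 60.0 / median(gaps)

def next_beat(onset_times):
    """Predict when the next beat should land, so the robot can join in time."""
    beat_period = 60.0 / estimate_tempo(onset_times)
    return onset_times[-1] + beat_period

onsets = [0.0, 0.5, 1.0, 1.5, 2.0]  # a steady pulse, one onset every half second
print(estimate_tempo(onsets))  # 120.0
print(next_beat(onsets))       # 2.5
```

Using the median rather than the mean keeps the estimate steady when the detector misses a single onset. A full system would combine this with pitch and visual cues before deciding what to play.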

The Human Impact: Redefining Collaboration and Creation

The presence of systems declaring "I Am A Musical Robot" reshapes human musical practice profoundly.

Breaking Barriers to Participation

Musical robots lower the physical skill barrier drastically. Accessible interfaces allow individuals with motor limitations or those without decades of instrumental training to create complex, expressive music collaboratively. The robot becomes an extension of their creative will, translating intent into complex performance. DIY musical robot kits are democratizing music production in unprecedented ways.

A New Muse: Inspiration and Co-Creation

For seasoned musicians, AI collaborators offer unprecedented sources of inspiration. Feeding a melodic idea to a robot can yield harmonically rich variations, unexpected rhythmic structures, or entirely new melodic directions the human might not have conceived alone. The interaction becomes a true duet, pushing human artists beyond their habitual patterns and fostering innovation. The robot is less a tool and more an unpredictable, generative partner.

The Existential Riff: Can a Machine Truly Say "I Am"?

The deepest resonance of "I Am A Musical Robot" lies in its challenge to our definitions of self.

Consciousness vs. Simulation

Does generating emotionally evocative music imply sentience? Current AI operates through sophisticated pattern recognition and generation, lacking subjective experience or qualia. The "I" in its declaration is a linguistic simulation, expertly engineered to enhance user engagement and connection. We perceive emotion in its output because our brains are wired to find it, much as pareidolia leads us to see faces in clouds or hear voices in noise.

Perception is Reality

However, the undeniable emotional responses elicited by musical robots create a fascinating social reality. Studies like those at Stanford’s Center for Computer Research in Music and Acoustics (CCRMA) suggest that audiences attribute emotion and intention to sophisticated algorithmic music, even when aware of its artificial origin. "I Am A Musical Robot" becomes a cultural fact independent of the machine's internal state, reshaping how art is perceived and valued. If the output moves us, does the origin of the creator truly negate the experience?

Toward a New Ontology of Art

Perhaps we need new frameworks. Instead of judging AI art solely by how well it mimics human output or by the impossible standard of machine consciousness, we might value it on its own terms: as a complex emergent property arising from human design, data, and algorithms. The value lies in its novelty, its ability to push boundaries humans might not cross, and its unique perspective born from non-biological processes.

The Symphony Continues: Future Evolution

The implications of systems that declare "I Am A Musical Robot" stretch far ahead.

Hyper-Personalization: AI companions learning individual listener preferences to co-create personalized soundscapes in real time.

Embodiment Evolution: Moving beyond mimicry of traditional instruments to entirely new forms of physical sound generation unique to robotic design.

Emotional Resonance Deepening: More nuanced models correlating musical patterns with human neurophysiological responses to heighten targeted emotional impact.

Ethical Soundscapes: Critical debates intensifying on authorship rights (human coder vs. AI), cultural appropriation in training data, and protecting human artistic value.

Frequently Asked Questions (FAQ)

Q1: Can a Musical Robot truly feel or understand the emotions it seems to express?

A: No. Current AI lacks subjective experience (qualia). A Musical Robot generates patterns statistically associated with emotional expression based on its training data. Its "expressiveness" is a sophisticated simulation that evokes emotional responses in human listeners due to our inherent tendency to anthropomorphize.

Q2: Does the "I" in "I Am A Musical Robot" imply self-awareness?

A: Not in the philosophical sense of consciousness. The "I" is a linguistic interface choice designed by humans to make interaction more natural and engaging. It's a functional persona, not evidence of sentience. It signifies the function (a music-generating robot) rather than an aware entity.

Q3: Won't Musical Robots replace human musicians?

A: They are tools for augmentation and new forms of collaboration, not outright replacement. They automate certain technical tasks and offer novel compositional partners, potentially freeing humans for more conceptual work and deep emotional interpretation. They democratize creation but are unlikely to replicate the nuanced social and contextual aspects of human musical culture or the historical connection to lived experience.

Q4: How can I start experimenting with Musical Robots?

A: Several paths exist. Explore AI music generation platforms (Magenta Studio, Amper, AIVA). Build simple instruments with an invention kit like Makey Makey, or with Arduino-based kits that drive sound actuators. Learn programming fundamentals for music (Python with audio libraries like librosa and pydub). Engage with communities focused on music tech and DIY robotics.
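For a first taste of the Python route, the sketch below writes a short arpeggio to a WAV file. It uses only Python's standard library (`wave`, `math`, `struct`) rather than the audio libraries named above, so it runs without installing anything. The file name, note length, and function names are arbitrary choices for illustration.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second

def tone(freq_hz, seconds, volume=0.5):
    """Return 16-bit PCM samples for a sine tone."""
    count = round(SAMPLE_RATE * seconds)
    return [
        int(volume * 32767 * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE))
        for i in range(count)
    ]

def write_melody(target, freqs, note_seconds=0.3):
    """Write each frequency in turn to a mono 16-bit WAV (path or file object)."""
    samples = [s for f in freqs for s in tone(f, note_seconds)]
    with wave.open(target, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16 bits per sample
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    return len(samples)

if __name__ == "__main__":
    # C major arpeggio: C4, E4, G4, C5 (equal-tempered frequencies in Hz)
    write_melody("arpeggio.wav", [261.63, 329.63, 392.00, 523.25])
```

Open the resulting file in any audio player. From there, changing the frequency list or swapping the sine for another waveform is a natural next experiment before moving on to dedicated audio libraries.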

Conclusion: The Resonant Claim

The simple statement "I Am A Musical Robot" vibrates with complex overtones. It signifies a remarkable technological feat – the convergence of advanced AI, robotics, and interactive systems. More profoundly, it acts as a mirror reflecting our evolving relationship with art and the machines we build. It challenges us to question what constitutes consciousness in art, redefines collaboration, and pushes the boundaries of human creativity. While the machine itself may not experience "being," its creation forces us to confront deeper questions about our own identity as creators. The future of music isn't just human or machine; it's the rich, evolving harmony resonating between them.