Picture a stage pulsing with energy: the driving beat of drums, the wail of an electric guitar solo, the thumping bassline. Now imagine that the musicians delivering this electrifying performance aren't human. Instead, they're precise mechanical marvels: a fully functional Musical Robot Band. No longer confined to science fiction, these robotic ensembles are a striking reality, blending cutting-edge engineering, artificial intelligence (AI), and art to challenge our fundamental understanding of musicianship and performance. Are they gimmicks, technical novelties, or the avant-garde of a new musical era? This exploration delves into the captivating realm where circuits compose, servos strum, and algorithms orchestrate, revealing how Musical Robot Bands are learning to jam, evolve, and perhaps one day even create genres beyond human imagination.

Beyond the Novelty Act: What Defines a True Musical Robot Band?

While robots playing instruments individually have existed for decades, a true Musical Robot Band signifies a synchronized ensemble. These are not just glorified music boxes playing pre-recorded sequences. Key characteristics distinguish them:

Interacting Autonomous Agents

Each robot musician operates with a degree of autonomy. Equipped with sensors, actuators, and onboard processing, they perceive their environment (including bandmates), respond in real-time, and execute musical actions based on shared compositional rules or AI algorithms. Think less playback, more coordinated, reactive performance.

Synchronized Musical Output

The core purpose is to produce coherent, rhythmically synchronized music together. This requires reliable communication, whether through MIDI, wireless networks, or audio analysis (literally listening to one another), so that the robots stay in tempo, match dynamics, and respond to solos or changes initiated by another band member, much like human musicians.
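MIDI, the first mechanism named above, has a simple built-in timing scheme: the leading device sends a timing-clock message (status byte 0xF8) 24 times per quarter note, and followers lock onto that pulse. The Python sketch below shows only the arithmetic involved; it works on plain timestamps instead of a real MIDI port, and the function names are illustrative rather than part of any library.

```python
# MIDI beat clock: the leader sends 24 timing-clock messages (0xF8) per quarter note.
PPQN = 24


def tick_interval(bpm: float) -> float:
    """Seconds between successive MIDI clock ticks at a given tempo."""
    return 60.0 / (bpm * PPQN)


def estimate_bpm(tick_times: list[float]) -> float:
    """Estimate the leader's tempo from timestamps (seconds) of received clock ticks."""
    if len(tick_times) < 2:
        raise ValueError("need at least two ticks")
    # Averaging the spacing over many ticks smooths out small transmission jitter.
    average = (tick_times[-1] - tick_times[0]) / (len(tick_times) - 1)
    return 60.0 / (average * PPQN)


# Leader side: timestamps for one beat's worth of ticks at 120 BPM.
ticks = [i * tick_interval(120.0) for i in range(PPQN + 1)]
print(round(tick_interval(120.0), 6))   # 0.020833 seconds per tick
print(round(estimate_bpm(ticks), 3))    # 120.0
```

At 120 BPM a tick arrives roughly every 21 ms, so a follower that misses or mistimes a single tick drifts only slightly, and averaging over a full beat recovers the tempo.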

Embodiment of Instruments

Unlike purely software-based AI composers, Musical Robot Bands interact physically with traditional (or modified) musical instruments. This physicality presents immense engineering challenges: replicating the nuanced control required for strumming a guitar, striking a drum with varying velocity, or controlling breath pressure on a wind instrument.

Decoding the Mechanics: How Do Musical Robot Bands Actually Work?

Creating a robot capable of playing an instrument well is complex; coordinating a whole group of them is far harder, because every member must also stay in time with the others. Several core technologies converge:

Hardware & Actuation

Each robot musician needs specialized mechanisms tailored to its instrument. This involves:

Precision Motors & Actuators: High-torque motors for drum sticks, servo motors for delicate finger placement on strings or keys, solenoids for rapid picking or valve actuation.

Robotic Arms & Manipulators: Arms providing the range of motion needed for strumming, bowing, or positioning over instruments.

Sensors Galore: Optical encoders tracking motor position, force sensors for delicate key presses, cameras for visual cues (like the conductor or other robots), microphones for listening to their own sound and the overall band mix.
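To make the solenoid and sensor ideas concrete, here is a minimal Python sketch of two calculations a percussion robot typically needs: turning a loudness value into a coil pulse length, and firing early to cancel mechanical delay. All constants are hypothetical, and the linear velocity mapping is an assumption; real strikers respond nonlinearly and are usually calibrated by measuring their output with a microphone or force sensor.

```python
# Hypothetical calibration values for one solenoid drum striker; real values
# must be measured on your own hardware.
MIN_PULSE_MS = 4.0    # shortest pulse that still produces an audible tap
MAX_PULSE_MS = 20.0   # longest pulse before the stick presses into the head
LATENCY_MS = 12.0     # delay from energizing the coil to the stick hitting the drum


def pulse_width_ms(velocity: int) -> float:
    """Map a MIDI-style velocity (1-127) linearly onto a solenoid pulse width."""
    if not 1 <= velocity <= 127:
        raise ValueError("velocity must be in 1..127")
    fraction = (velocity - 1) / 126
    return MIN_PULSE_MS + fraction * (MAX_PULSE_MS - MIN_PULSE_MS)


def fire_time_ms(impact_time_ms: float) -> float:
    """Energize the coil early so the stick lands on the intended beat."""
    return impact_time_ms - LATENCY_MS


for vel in (1, 64, 127):
    print(vel, round(pulse_width_ms(vel), 2))   # 4.0, 12.0, 20.0 ms
print(fire_time_ms(500.0))                      # 488.0
```

Firing early only works because the robot knows the beat in advance, which is one reason shared tempo information matters so much for an ensemble.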

The Brains: AI, ML, and Composition Engines

The intelligence layer is critical:

Low-Level Control: Algorithms governing precise motor movements and instrument interaction (e.g., how hard to hit a drum, exact finger placement on a fretboard).

Musical Generative AI: Systems that generate melodies, harmonies, and rhythms. These range from rule-based approaches (encoding the conventions of a specific style) to machine learning (ML) models trained on large datasets of music, including neural networks such as Transformers that can handle more complex composition and potentially even improvise based on learned patterns.

Synchronization & Conducting: A central "brain" (a human conductor or an AI conductor robot) often sends tempo signals and cues via MIDI or network protocols. Alternatively, robots use auditory processing (listening to a click track or to each other's beats) and time-synchronization algorithms to stay tightly aligned.

Adaptability & Interaction: Advanced AI allows robots to react to each other in real time. If the drummer speeds up slightly, the bassist adjusts. Machine learning models trained on ensemble playing can enable these subtle interactions, fostering a sense of musical dialogue.
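The "drummer speeds up, bassist adjusts" behavior needs, at minimum, a way to estimate the leader's current tempo and predict its next beat. One simple, classical technique (not necessarily what any particular band uses) is an exponential moving average of the intervals between detected beats. In this Python sketch the beat times are supplied directly; a real robot would obtain them from audio onset detection or network messages, and the `TempoFollower` class is purely illustrative.

```python
class TempoFollower:
    """Follow a leader's tempo from the times of its beats (e.g. detected kick-drum hits).

    Keeps an exponential moving average of the beat period: a small alpha ignores
    jitter but reacts slowly; a large alpha tracks tempo changes quickly but nervously.
    """

    def __init__(self, initial_bpm: float, alpha: float = 0.3):
        self.period = 60.0 / initial_bpm   # current estimate, seconds per beat
        self.alpha = alpha
        self.last_onset = None             # time of the most recent beat heard

    def observe(self, onset_time: float) -> float:
        """Feed the time (seconds) of a detected beat and return the updated BPM."""
        if self.last_onset is not None:
            interval = onset_time - self.last_onset
            self.period += self.alpha * (interval - self.period)
        self.last_onset = onset_time
        return 60.0 / self.period

    def next_beat(self) -> float:
        """Predict when the leader's next beat will land (call after observe)."""
        return self.last_onset + self.period


# The drummer plays at 120 BPM (0.5 s beats), then pushes to 0.45 s beats.
follower = TempoFollower(initial_bpm=120.0)
for t in [0.0, 0.5, 1.0, 1.45, 1.9, 2.35, 2.8]:
    bpm = follower.observe(t)
print(round(bpm, 1))                     # 129.9: drifting from 120 toward 133.3 BPM
print(round(follower.next_beat(), 3))    # when the bassist should play next
```

The choice of `alpha` is the musical trade-off in miniature: too low and the bassist lags behind a deliberate accelerando, too high and it chases every tiny timing wobble.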

The Synapse: The magic happens at the intersection of real-time sensor data, AI-driven musical decisions, and hyper-precise mechanical execution, all synchronized across the entire Musical Robot Band.
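The range described under Musical Generative AI, from hand-written rules to models trained on data, can be illustrated with one of the oldest statistical techniques for melody generation: a first-order Markov chain, in which each note is drawn according to how often it followed the previous note in a training corpus. This Python sketch is a toy stand-in, not how Magenta, MuseNet, or Transformer models work, and the two-phrase "corpus" is invented for illustration.

```python
import random
from collections import Counter, defaultdict


def learn_transitions(corpus: list[list[int]]) -> dict[int, Counter]:
    """'Train' by counting how often each pitch follows each other pitch."""
    table: dict[int, Counter] = defaultdict(Counter)
    for melody in corpus:
        for current, following in zip(melody, melody[1:]):
            table[current][following] += 1
    return dict(table)


def generate_melody(table: dict[int, Counter], start: int, length: int,
                    rng: random.Random) -> list[int]:
    """Random walk over the learned transitions, weighted by their counts."""
    melody = [start]
    while len(melody) < length:
        options = table.get(melody[-1])
        if not options:   # dead end: this pitch never had a successor in the corpus
            break
        pitches = list(options)
        weights = list(options.values())
        melody.append(rng.choices(pitches, weights=weights)[0])
    return melody


# Toy training data: two C-major phrases as MIDI note numbers (60 = middle C).
corpus = [
    [60, 62, 64, 65, 67, 65, 64, 62, 60],
    [60, 64, 67, 64, 60, 62, 64, 62, 60],
]
table = learn_transitions(corpus)
melody = generate_melody(table, start=60, length=12, rng=random.Random(7))
print(melody)   # a new 12-note line that only uses note-to-note moves seen in the corpus
```

The output could then be sent to a robot performer as a list of notes. Its weakness is also visible: with only one note of memory, the chain has no sense of phrase or form, which is the gap that larger neural models try to close.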

Legends of the Machine: Famous Musical Robot Bands

Several pioneering groups have pushed the boundaries:

Compressorhead (Germany)

Arguably the most famous industrial Musical Robot Band, Compressorhead features members such as "Stickboy" (drums), "Fingers" (guitar), and "Bones" (bass), all constructed from scrap metal. The band is known for blistering covers of AC/DC, Ramones, and Metallica songs.

Achievement: Demonstrated high-energy performance capabilities and endurance impossible for humans, captivating audiences worldwide.

Toyota's Partner Robots (Japan)

Toyota showcased robots playing brass instruments like trumpets and trombones. Their robots demonstrated exceptional breath control and finger dexterity, playing complex classical pieces and jazz standards with remarkable accuracy.

Achievement: Highlighted the precision required for wind instruments and sophisticated fluid control systems.

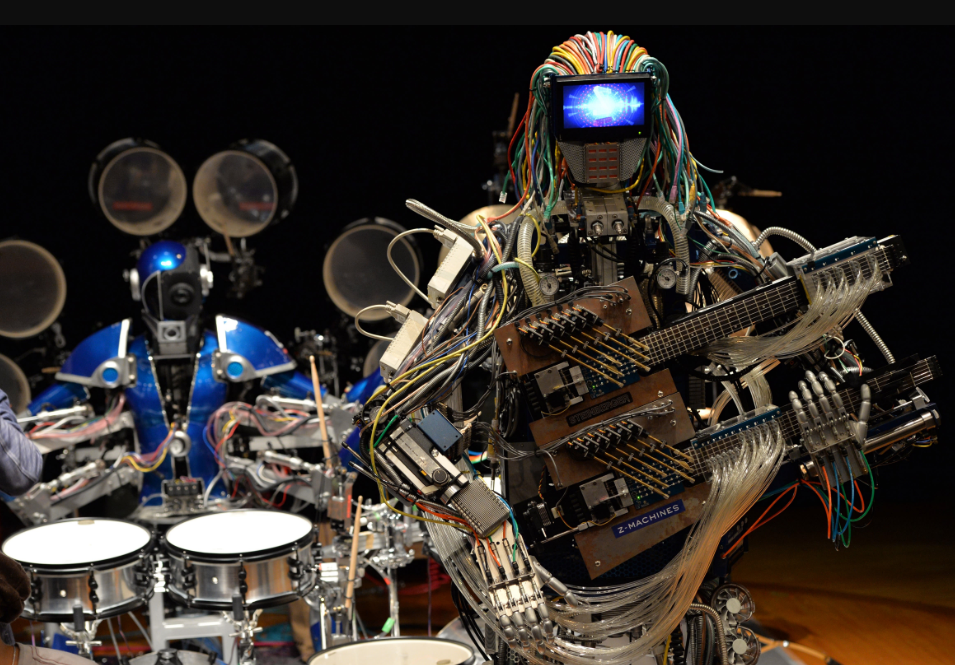

Z-Machines (Japan)

Commissioned to create music impossible for humans to play, the band features a guitarist with 78 fingers and a drummer with 22 arms. The project collaborated with human composers to create uniquely complex pieces.

Achievement: Explored the creation of entirely new musical possibilities beyond human physical limitations.

Shimon (Georgia Tech, USA)

Shimon is technically a single improvising marimba-playing robot that *collaborates* with humans rather than a full band, but it represents the AI frontier. Trained on large music datasets and using deep learning, it improvises melodies and rhythms in response to human musicians.

Achievement: Pioneering human-robot jazz improvisation and sophisticated AI-driven musical creativity. Find out how smaller AI companions are entering the musical sphere in our article on The Musical Robot Dog Revolution: How AI Companions Are Learning to Jam.

Beyond Metal Covers: Original Music & Genre Exploration

The initial wave focused on replicating rock anthems. The frontier now involves original composition and exploring diverse genres:

AI Composers & Robot Performers: AI systems such as Google's Magenta, OpenAI's MuseNet, or custom models generate original scores, which robot bands then perform.

Genre Fusion: Robots carry no personal genre habits; their style comes from their rules or training data. They can therefore be directed to blend elements of classical, techno, jazz, and traditional music in ways human musicians might not attempt, potentially creating entirely new sonic landscapes.

Algorithmic Improvisation: Advances in reinforcement learning are enabling robots to make creative musical choices in real time rather than only playing pre-programmed solos. They can respond to each other's phrases and to the audience's energy.
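Full reinforcement learning for music is an active research topic, but its core loop (try an action, observe a reward, shift toward what worked) can be shown with the simplest RL setting, a multi-armed bandit. In this hypothetical Python sketch, a robot chooses among three pre-written phrases using an epsilon-greedy rule and treats a simulated "audience energy" score as its reward. The phrase names, appeal values, and reward model are invented; a real system might measure something like crowd noise and would choose among generated rather than fixed phrases.

```python
import random


class PhraseChooser:
    """Epsilon-greedy multi-armed bandit over a fixed set of candidate phrases."""

    def __init__(self, phrases: list[str], epsilon: float = 0.1, seed=None):
        self.phrases = phrases
        self.epsilon = epsilon                 # fraction of turns spent exploring
        self.counts = [0] * len(phrases)       # how often each phrase was played
        self.values = [0.0] * len(phrases)     # running mean reward per phrase
        self.rng = random.Random(seed)

    def choose(self) -> int:
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(len(self.phrases))                      # explore
        return max(range(len(self.phrases)), key=lambda i: self.values[i])   # exploit

    def update(self, index: int, reward: float) -> None:
        """Incrementally update the mean reward of the phrase that was played."""
        self.counts[index] += 1
        self.values[index] += (reward - self.values[index]) / self.counts[index]


phrases = ["call-and-response riff", "syncopated run", "long sustained bend"]
chooser = PhraseChooser(phrases, epsilon=0.1, seed=0)
audience = random.Random(1)
TRUE_APPEAL = [0.2, 0.5, 0.8]   # hypothetical, and unknown to the robot

for _ in range(2000):
    i = chooser.choose()
    reward = TRUE_APPEAL[i] + audience.gauss(0.0, 0.1)   # simulated "audience energy"
    chooser.update(i, reward)

print(chooser.counts)   # the third phrase ends up played far more often than the others
```

The `epsilon` parameter keeps the robot occasionally trying phrases that have not worked yet, which is a crude but real version of the balance between playing it safe and taking musical risks.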

Human vs. Machine: Strengths and Controversies

The rise of the Musical Robot Band sparks debate:

Advantages

Unparalleled Precision & Speed: Flawless timing, impossible tempos, perfect pitch execution.

Inhuman Endurance & Consistency: Play for hours without fatigue, identically night after night.

Exploring the Sonically Impossible: Play complex polyrhythms or melodies requiring more limbs than humans possess. Generate compositions based on vast datasets, creating novel structures.

Educational & Inspirational Tools: Demystifying technology and music, inspiring STEM/STEAM learning and perhaps lowering barriers to musical experimentation for everyone. Discover how you can start creating with AI in our guide: Unlock Your Inner Mozart: How a Musical Robot Kit Makes Anyone an AI Maestro.
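The polyrhythm advantage is easy to make concrete. This short Python sketch lays several evenly spaced pulse streams over one bar and merges them into a single timeline, the kind of schedule a many-armed drummer robot could execute with one limb per layer. Using `fractions.Fraction` keeps coincident hits exact instead of relying on floating-point comparisons; the function names are illustrative.

```python
from fractions import Fraction


def onsets(pulses: int, bar_length: Fraction = Fraction(1)) -> list[Fraction]:
    """Evenly spaced hit times for `pulses` notes across one bar (as fractions of the bar)."""
    return [bar_length * i / pulses for i in range(pulses)]


def polyrhythm(*layers: int) -> list[tuple[Fraction, tuple[int, ...]]]:
    """Merge several evenly spaced layers into one timeline of (time, layers that hit)."""
    timeline: dict[Fraction, list[int]] = {}
    for layer in layers:
        for t in onsets(layer):
            timeline.setdefault(t, []).append(layer)
    return [(t, tuple(timeline[t])) for t in sorted(timeline)]


# Classic 3-against-2: prints 0 (3, 2), then 1/3 (3,), 1/2 (2,), 2/3 (3,)
for t, hits in polyrhythm(3, 2):
    print(t, hits)
```

Multiplying each fraction by the bar's duration in seconds gives actuator timings. The same call with more or denser layers, such as `polyrhythm(7, 5, 3)`, quickly produces patterns that are hard for one human to play but trivial for a robot with a limb per layer.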

Challenges & Criticisms

The "Soul" Question: Can robots convey genuine emotion or intent? Does their music lack the intangible feeling derived from human experience? This remains a profound philosophical debate.

Technical Limitations (Nuance, Expression): Achieving the subtle dynamics, micro-timing imperfections, and expressive vibrato that make human music deeply resonant is extremely difficult for machines.

Cost & Complexity: Building and maintaining sophisticated robot musicians is currently very expensive and requires specialized expertise.

Job Displacement Fears: While unlikely to replace top-tier artists, concerns exist about robots replacing session musicians or performers in specific contexts.

The Uncanny Valley of Performance: Seeing humanoid robots perform can sometimes feel unsettling rather than engaging.
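The micro-timing imperfections mentioned under Technical Limitations are partly a software problem. A common first attempt, often called "humanization" in sequencers, adds small random offsets to perfectly quantized notes. This Python sketch shows that naive version; the standard deviations are arbitrary assumptions, and independent random jitter is generally considered a crude approximation of the structured, musically motivated deviations of real players, which is exactly why the problem remains hard.

```python
import random


def humanize(events, timing_sd_ms=8.0, velocity_sd=6.0, seed=None):
    """Add small random deviations to quantized (time_ms, velocity) events.

    Independent Gaussian jitter is a crude stand-in for human expression:
    real players' deviations are correlated and tied to the music.
    """
    rng = random.Random(seed)
    out = []
    for time_ms, velocity in events:
        t = max(0.0, time_ms + rng.gauss(0.0, timing_sd_ms))   # never before time zero
        v = round(velocity + rng.gauss(0.0, velocity_sd))
        out.append((t, min(127, max(1, v))))                  # keep velocity in MIDI range
    return sorted(out)


# Eighth-note hi-hat at 120 BPM (250 ms apart) with alternating accents.
grid = [(i * 250.0, 100 if i % 2 == 0 else 70) for i in range(8)]
for t, v in humanize(grid, seed=3):
    print(f"{t:7.1f} ms  velocity {v}")
```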

Building Your Own Musical Robot Band (Or Starting Small)

Creating a full band is ambitious, but the DIY spirit is strong:

Concept & Instrument Choice: Start simple (percussion robots like a single drum or marimba bot are easiest). Define the musical goal.

Hardware Platform: Use accessible microcontrollers (Arduino) or single-board computers (Raspberry Pi), off-the-shelf servo motors and solenoids, and 3D printers for custom parts.

Actuation Design: Design mechanisms to physically strike keys, strum strings, or hit drums. Precision is key.

Control Software: Write code to control motor movements precisely (timing, velocity).

AI/Composition Integration (Advanced): Connect to AI music generation libraries (such as Magenta.js, or models built with TensorFlow) or to visual audio programming environments (Pure Data, Max/MSP) to generate musical input.

Synchronization: Implement MIDI sync or network-based time protocols to coordinate multiple robots.
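For the control and synchronization steps, MIDI is a practical common language: each robot listens on its own channel, and a single sequencer (a laptop, or one of the microcontrollers) sends every message from one timeline. This Python sketch builds one bar of raw MIDI bytes for a hypothetical drum robot and bass robot. The channel plan and pattern are assumptions, and actually transmitting the bytes (over USB-MIDI or a serial link) is left out because it depends on your hardware and library.

```python
NOTE_ON, NOTE_OFF = 0x90, 0x80   # channel-voice status bytes (low nibble = channel)
START, STOP = 0xFA, 0xFC         # system real-time messages: start / stop playback


def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Build a Note On message. Channel is 0-15 on the wire (1-16 in most software)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([NOTE_ON | channel, note, velocity])


def note_off(channel: int, note: int) -> bytes:
    """Build a Note Off message (release velocity fixed at 0)."""
    if not (0 <= channel <= 15 and 0 <= note <= 127):
        raise ValueError("MIDI value out of range")
    return bytes([NOTE_OFF | channel, note, 0])


# Hypothetical channel plan: drum robot on channel 10 (index 9), bass robot on 2 (index 1).
DRUMS, BASS = 9, 1
KICK, SNARE, E2 = 36, 38, 40     # General MIDI kick and snare; E2 for the bass


def one_bar(bpm: float) -> list[tuple[float, bytes]]:
    """Schedule one 4/4 bar: alternating kick/snare plus a short bass note on every beat."""
    beat = 60.0 / bpm
    events = [(0.0, bytes([START]))]
    for i in range(4):
        t = i * beat
        events.append((t, note_on(DRUMS, KICK if i % 2 == 0 else SNARE, 100)))
        events.append((t, note_on(BASS, E2, 90)))
        events.append((t + beat / 2, note_off(BASS, E2)))   # release after half a beat
    events.append((4 * beat, bytes([STOP])))
    return sorted(events, key=lambda event: event[0])      # stable: ties keep insertion order


for t, message in one_bar(120.0):
    print(f"{t:5.2f}s  {message.hex(' ')}")
```

Because every robot receives its cues from the same ordered list, they share one notion of time; the remaining work on each robot is the per-actuator latency compensation and velocity mapping discussed earlier.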

Online communities and open-source projects provide valuable resources and inspiration for budding roboteers.

The Future Symphony: What's Next for Musical Robot Bands?

The evolution is rapid:

Enhanced AI for True Collaboration

Moving beyond pre-composed scores to AI that improvises creatively in real-time, reacting to human band members, audience input, and environmental factors – creating unique performances every time.

Improved Embodiment & Expression

More sophisticated actuators and sensors will capture the nuanced dynamics and expressive qualities of human performance, blurring the line between human and machine execution.

Haptic Feedback & Learning

Robots incorporating touch and force feedback could learn to "feel" the instrument, refining their technique through embodied learning algorithms.

Generative Visuals & Multimodal Performance

Integration with AI-generated visualizations, synced lighting, and robotic stage elements for truly immersive, multi-sensory experiences.

Accessibility & Democratization

Simpler, more affordable kits allowing musicians, artists, and educators to experiment with robotic musicianship, fostering wider innovation.

Frequently Asked Questions

Can a Musical Robot Band improvise like a jazz band?

True improvisation is the holy grail and an active research area. Current robot bands excel at pre-programmed sequences and can handle some variations or pre-learned "licks." Advanced systems like Shimon demonstrate significant progress in AI-driven improvisation, responding to musical cues with novel phrases generated in real-time. While they may not yet match the deep, intuitive flow of experienced human jazz musicians, the ability to generate surprising and coherent musical responses based on complex models is rapidly evolving.

Are robot bands a threat to human musicians?

Robots are unlikely to replace human musicians en masse or replicate the deep emotional connection humans have with performers. Their primary impact will likely be:

1. Creation of New Roles: Designers, programmers, robot operators, AI composers.

2. Enhanced Live Shows: Robots augmenting human performances with impossible visuals or sounds.

3. Niche Performance: Specific venues, technical demonstrations, endurance tasks.

4. Tools for Creativity: Human musicians using AI and robots as collaborators or instruments.

They represent a new musical tool and art form rather than direct competition for most musicians.

How much does a sophisticated Musical Robot Band cost to build?

Costs vary enormously. DIY hobbyist projects using Arduinos, basic servos, and 3D-printed parts could start in the hundreds to low thousands of dollars. Professional-grade bands like Compressorhead, involving custom engineering, industrial-grade robotics, robust materials, and complex control systems, likely cost hundreds of thousands, if not millions, of dollars to design, prototype, and build. Factors include the complexity of instruments, degree of autonomy, materials, and AI integration.

The rise of the Musical Robot Band is more than a technological parlor trick; it's a profound exploration at the intersection of art, engineering, and artificial intelligence. From their raw industrial power to the delicate touch they strive to achieve, these mechanical ensembles challenge our definitions of creativity and performance. While they may never replace the soulful wail of a human blues singer, they open doors to sonic territories previously unimaginable, push the limits of precision, and serve as mesmerizing ambassadors for the potential of intelligent machines. Whether witnessed headbanging on a festival stage or subtly weaving complex algorithmic melodies, Musical Robot Bands are composing a fascinating, ever-evolving symphony for the future of sound.