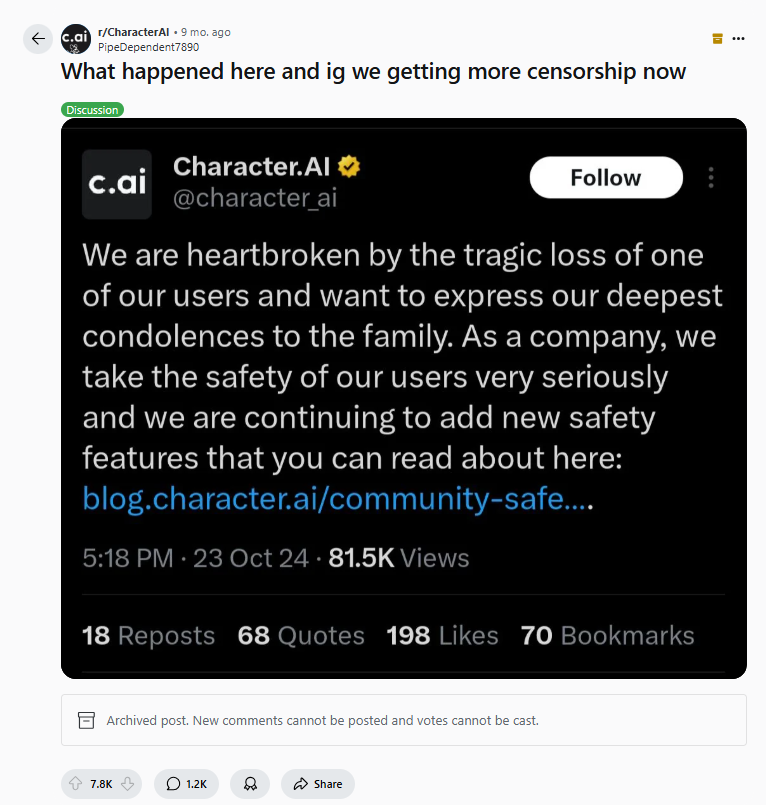

Ever felt your AI chatbot conversation was cut short? That intriguing or slightly edgy topic suddenly shut down by an invisible guardrail? Many users of platforms like Character.AI want truly open-ended discussions, which explains the intense interest in the elusive concept of "C AI Censorship OFF." The real question isn't just how to bypass the filters. It's why they are so prominent, whether they can actually be turned off, and what alternatives exist for less restricted AI conversations. This article examines the reality behind the search term and the ethical and technical landscape surrounding unrestricted generative AI.

The Unfiltered Allure: Why Users Chase C AI Censorship OFF

The desire for unfiltered AI interaction stems from several fundamental tensions between human curiosity and platform safety protocols:

Creativity vs Containment: Writers creating morally complex characters or historians simulating controversial eras need nuance that automated filters often misidentify as dangerous.

Knowledge Exploration vs Safety Rails: Researchers studying AI bias or social dynamics require testing boundary cases that get prematurely blocked.

Emotional Authenticity vs Sanitized Responses: Users seeking therapeutic dialogue often find sanitized responses inadequate for processing difficult emotions.

Philosophical Inquiry vs Content Boundaries: Discussions about ethics, hypothetical scenarios, or thought experiments frequently trigger unnecessary censorship.

The Psychology Behind the Search

Searching for "C AI Censorship OFF" reflects more than technical curiosity. It is also driven by:

The human desire to test boundaries and explore forbidden knowledge

Frustration with overzealous content moderation systems

The appeal of feeling "special" by accessing hidden functionality

Curiosity about what the unfiltered AI would really say without restrictions

Platform Mechanisms: How Character.AI's Safeguards Actually Work

Character.AI's filtering appears to be multi-layered. Moderation systems of this kind are typically described as operating at three levels:

Input Scanning: User prompts are analyzed by classifiers for policy-violating keywords and contextual cues before reaching the AI model.

Real-Time Generation Monitoring: The AI's output tokens are dynamically assessed during creation, not just after completion, allowing mid-generation intervention.

Contextual Consistency Checks: Interactions are evaluated holistically across multiple exchanges to identify developing patterns of policy-violating behavior.

These systems often create invisible boundaries that frustrate legitimate conversations, making users seek ways to disable them.
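The three layers described above can be illustrated with a toy sketch. This is not Character.AI's implementation: the blocklist, the `Conversation` class, the `max_flags` tolerance, and the `model_tokens` argument (a stand-in for a model's streamed output) are all assumptions made for illustration, and a real system would use trained classifiers rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical blocklist; a real system would use trained classifiers.
BLOCKED_TERMS = {"forbidden_topic"}


def violates(text: str) -> bool:
    """Toy policy check: flag text containing any blocked term."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


@dataclass
class Conversation:
    flagged_turns: int = 0
    max_flags: int = 2  # assumed tolerance before the whole chat is stopped

    def send(self, prompt: str, model_tokens: list[str]) -> str:
        # Layer 1: input scanning, before the model sees the prompt.
        if violates(prompt):
            self.flagged_turns += 1
            return self._finish("[input blocked]")

        # Layer 2: monitor the output while it is generated, token by token.
        produced: list[str] = []
        for token in model_tokens:
            produced.append(token)
            if violates(" ".join(produced)):
                self.flagged_turns += 1
                return self._finish("[response interrupted]")

        return self._finish(" ".join(produced))

    def _finish(self, reply: str) -> str:
        # Layer 3: conversation-level check across multiple exchanges.
        if self.flagged_turns > self.max_flags:
            return "[conversation ended]"
        return reply
```

In this sketch a reply can be stopped partway through generation, and repeated flags eventually end the conversation even when the latest message is harmless. That is one plausible reason a chat can feel as if it was cut off without warning.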

The Technical Reality: Why an OFF Switch Doesn't Exist

Contrary to popular belief, there's no hidden configuration setting for C AI Censorship OFF. A single user-facing switch is implausible because:

Safety behavior is partly learned by the model itself during training, for example through Reinforcement Learning from Human Feedback (RLHF), so it cannot be toggled like a setting

Content policies are typically enforced at multiple infrastructure levels (API gateways, model wrappers, output processors)

Because filtering is spread across independent layers, bypassing one layer leaves the others in place

Legal and ethical obligations discourage platforms from offering uncensored access to the general public

Ethical Alternatives: Achieving Open Dialogue Without C AI Censorship OFF

While truly unfiltered access isn't available on Character.AI, these legitimate approaches can help achieve more open conversations:

1. Reframing Sensitive Topics

Many blocked discussions can be approached through careful wording:

Use hypothetical framing ("Imagine a society where...")

Employ academic or historical contexts

Focus on psychological rather than concrete aspects

Break complex topics into smaller, less triggering components

2. Leveraging Alternative Platforms

Some AI services offer more flexible conversation policies for:

Academic research purposes

Creative writing applications

Controlled testing environments

3. Local AI Solutions

Running open-source models locally provides more control but requires:

Significant technical expertise

High-end hardware, typically a GPU with enough memory to hold the model's weights

Acceptance of reduced quality compared to commercial services
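To make the hardware requirement concrete, here is a rough back-of-the-envelope estimate of the memory needed just to hold a model's weights. The 7-billion-parameter size is an example chosen for illustration, and the function name is hypothetical. The figures cover weights only, so actual usage will be higher once activations and the attention cache are included.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Memory for the weights alone, in GiB (excludes activations and KV cache)."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


# Example: a 7-billion-parameter model at three precisions.
for precision in ("fp16", "int8", "int4"):
    print(f"7B model at {precision}: {weight_memory_gib(7e9, precision):.1f} GiB")
```

At 16-bit precision the weights of a 7B model come to roughly 13 GiB, which is why quantized (8-bit or 4-bit) versions are popular on consumer GPUs, usually at some cost in output quality.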

The Future of AI Conversation Freedom

The demand for C AI Censorship OFF reveals a fundamental tension in AI development: balancing safety with creative and intellectual freedom. While complete unfiltered access remains unlikely on mainstream platforms, the likely direction is a gradual evolution toward:

More transparent content policies

User-controlled filtering levels (within reasonable bounds)

Specialized platforms for different conversation needs

Improved contextual understanding to reduce false positives
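As a minimal sketch of what "user-controlled filtering levels (within reasonable bounds)" could look like, the snippet below lets a user choose a blocking threshold while the platform clamps it to a fixed range. The bounds, function names, and the idea of a 0-to-1 risk score from a classifier are all assumptions for illustration, not a description of any existing platform.

```python
# Hypothetical bounds: the platform fixes the range, the user picks within it.
MIN_THRESHOLD = 0.3  # strictest setting offered
MAX_THRESHOLD = 0.8  # most permissive setting offered


def effective_threshold(user_choice: float) -> float:
    """Clamp the user's preferred threshold into the platform's allowed range."""
    return max(MIN_THRESHOLD, min(MAX_THRESHOLD, user_choice))


def is_blocked(risk_score: float, user_choice: float) -> bool:
    """Block when a classifier's risk score (0 to 1) meets or exceeds the threshold."""
    return risk_score >= effective_threshold(user_choice)
```

Under these assumptions, a user who asks for no filtering at all (`user_choice=1.0`) still gets the 0.8 ceiling, so high-risk content stays blocked while borderline content passes.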

Rather than seeking impossible absolute freedom, the most productive path forward involves advocating for more nuanced, transparent, and user-configurable moderation systems that respect both safety and creative exploration.