Introduction: Why Traditional Computing Infrastructure Limits Modern AI Development

Data scientists and machine learning engineers face significant challenges when scaling Python applications beyond single-machine limitations, often spending weeks configuring complex distributed systems instead of focusing on model development and innovation. Traditional cloud computing solutions require extensive DevOps expertise to manage clusters, handle fault tolerance, and optimize resource allocation for AI workloads. Teams struggle with inconsistent performance, unpredictable costs, and lengthy deployment cycles when attempting to scale reinforcement learning, hyperparameter tuning, or large-scale data processing tasks. These infrastructure barriers prevent organizations from fully leveraging their AI investments and delay critical business applications from reaching production environments.

H2: Anyscale's Revolutionary Approach to Distributed AI Tools

Founded by the creators of Ray at UC Berkeley, including Robert Nishihara, Philipp Moritz, and Ion Stoica, Anyscale emerged from years of research into distributed systems and machine learning infrastructure challenges. The company transformed the open-source Ray framework into a comprehensive platform that democratizes access to distributed computing for AI applications.

Anyscale's AI tools eliminate the complexity of distributed system management by providing a unified interface for scaling Python applications across multiple machines and cloud environments. The platform's architecture automatically handles resource allocation, fault recovery, and performance optimization, enabling developers to focus on algorithm development rather than infrastructure management.

H3: Core Architecture of Anyscale's Distributed AI Tools

Ray's task and actor model provides the foundation for Anyscale's AI tools: stateless tasks run functions in parallel, while actors hold state across calls, which keeps data local to a worker and reduces communication overhead in distributed environments. This architecture supports both task-parallel and data-parallel workloads while automatically managing resource scheduling and load balancing.
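
The difference between a stateless parallel task and a stateful actor can be sketched with the Python standard library alone. This is an analogy, not Ray's API: `square`, `CounterActor`, and `run_demo` are hypothetical names, and a thread with a mailbox queue stands in for a remote worker process.

```python
import queue
import threading
from concurrent.futures import Future, ThreadPoolExecutor
from typing import List, Tuple


def square(x: int) -> int:
    """Stateless task: the result depends only on the argument."""
    return x * x


class CounterActor:
    """Stateful actor: one thread owns the state and handles messages in order."""

    def __init__(self) -> None:
        self._total = 0
        self._mailbox: queue.Queue = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self) -> None:
        while True:
            item = self._mailbox.get()
            if item is None:  # shutdown sentinel
                break
            amount, reply = item
            self._total += amount          # only this thread touches the state
            reply.set_result(self._total)

    def add(self, amount: int) -> Future:
        """Send a message to the actor; the Future resolves to the new total."""
        reply: Future = Future()
        self._mailbox.put((amount, reply))
        return reply

    def stop(self) -> None:
        self._mailbox.put(None)
        self._thread.join()


def run_demo() -> Tuple[List[int], int]:
    # Stateless tasks can run in any order on any worker.
    with ThreadPoolExecutor(max_workers=4) as pool:
        squares = list(pool.map(square, range(5)))
    # The actor keeps a running total across calls.
    actor = CounterActor()
    replies = [actor.add(value) for value in squares]
    total = replies[-1].result()
    actor.stop()
    return squares, total
```

Because the actor processes its mailbox sequentially, callers never need a lock around the running total, which is the property that makes actors convenient for stateful components such as parameter servers or simulators.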

The platform's object store provides a shared-memory abstraction that reduces the data serialization overhead common in traditional distributed computing frameworks. Workers on the same node can read large objects such as datasets and model parameters from shared memory without copying them, and objects are transferred between nodes only when a task on another node needs them.

Anyscale's autoscaling capabilities dynamically adjust cluster size based on workload demands, ensuring optimal resource utilization while minimizing costs for variable AI workloads. The system monitors queue lengths, resource utilization, and task completion rates to make intelligent scaling decisions.
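
A scaling decision of this kind can be sketched as a small pure function. The policy below is a hypothetical illustration, not Anyscale's actual algorithm: the `ClusterStats` fields, the `idle_threshold` default, and the node limits are all assumptions, and task completion rates are left out for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class ClusterStats:
    nodes: int            # nodes currently running
    pending_tasks: int    # tasks waiting in the queue
    slots_per_node: int   # tasks one node can run concurrently
    utilization: float    # fraction of busy slots, 0.0 to 1.0


def target_nodes(
    stats: ClusterStats,
    min_nodes: int = 1,
    max_nodes: int = 20,
    idle_threshold: float = 0.3,
) -> int:
    """Return the node count the cluster should move toward."""
    if stats.pending_tasks > 0:
        # Scale up: add enough nodes to absorb the queued work.
        extra = math.ceil(stats.pending_tasks / stats.slots_per_node)
        desired = stats.nodes + extra
    elif stats.utilization < idle_threshold:
        # Scale down gently: remove one node at a time when mostly idle.
        desired = stats.nodes - 1
    else:
        desired = stats.nodes
    # Never leave the configured bounds.
    return max(min_nodes, min(max_nodes, desired))
```

Scaling up by the full backlog but down by one node at a time is a deliberate asymmetry in this sketch: under-provisioning delays work, while removing nodes too eagerly causes churn when load returns.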

H2: Performance Comparison of Distributed AI Tools Platforms

| Computing Metric | Traditional Clusters | Anyscale AI Tools | Performance Improvement |
|---|---|---|---|
| Setup Time | 4-8 hours | 5-10 minutes | 95% faster |
| Scaling Latency | 10-15 minutes | 30-60 seconds | 90% improvement |
| Resource Utilization | 40-60% | 85-95% | 50% efficiency gain |
| Fault Recovery Time | 15-30 minutes | 1-3 minutes | 85% faster |
| Development Productivity | 20 hours/week overhead | 2-4 hours/week | 80% reduction |
| Cost Optimization | Manual tuning | Automatic optimization | 60% cost savings |
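
Because both the baseline and the Anyscale figures are given as ranges, a single percentage depends on which ends of the ranges are compared. The sketch below shows one plausible way to bound the time reductions. The helper `reduction_range` is hypothetical, and the article does not state how its percentage column was actually derived.

```python
from typing import Tuple


def reduction_range(
    old_low: float, old_high: float, new_low: float, new_high: float
) -> Tuple[float, float]:
    """Return (smallest, largest) fractional reduction, with all values in the same unit."""
    smallest = 1 - new_high / old_low   # slowest new value vs fastest old value
    largest = 1 - new_low / old_high    # fastest new value vs slowest old value
    return smallest, largest


# Setup time: 4-8 hours vs 5-10 minutes, both expressed in minutes.
setup = reduction_range(240, 480, 5, 10)

# Scaling latency: 10-15 minutes vs 30-60 seconds, both expressed in seconds.
scaling = reduction_range(600, 900, 30, 60)

for name, (low, high) in {"setup": setup, "scaling": scaling}.items():
    print(f"{name}: {low:.1%} to {high:.1%} reduction")
```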

H2: Advanced Machine Learning Workflows Using AI Tools

Anyscale's AI tools excel at hyperparameter tuning through Ray Tune, which can efficiently explore thousands of parameter combinations using advanced search algorithms like Population Based Training and HyperBand. The platform automatically distributes training jobs across available resources while implementing early stopping strategies to eliminate poorly performing configurations.
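
The early-stopping idea can be illustrated with successive halving, the procedure that HyperBand repeats with different starting budgets. The sketch below is plain Python rather than Ray Tune's API: `successive_halving`, `toy_score`, and the candidate learning rates are hypothetical, and the toy objective stands in for a real training run.

```python
from typing import Callable, Dict, List

Config = Dict[str, float]


def successive_halving(
    configs: List[Config],
    evaluate: Callable[[Config, int], float],
    min_budget: int = 1,
    eta: int = 2,
) -> Config:
    """Keep the top 1/eta of configs each round, giving survivors eta times more budget."""
    if not configs:
        raise ValueError("at least one config is required")
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Higher score is better; poorly performing configs are dropped early.
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0]


def toy_score(config: Config, budget: int) -> float:
    """Toy objective: best at lr = 0.1, improving slightly with more budget."""
    return -abs(config["lr"] - 0.1) + 0.01 * budget


candidates = [{"lr": 0.001}, {"lr": 0.01}, {"lr": 0.1}, {"lr": 1.0}]
best = successive_halving(candidates, toy_score)
```

In a distributed setting each call to `evaluate` within a round is independent, so the round's trials can run in parallel across the cluster.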

Reinforcement learning applications benefit from Ray RLlib's distributed training capabilities, which enable complex multi-agent scenarios and large-scale environment simulations that would be impractical on a single machine. The AI tools handle the coordination required for distributed RL training, such as collecting experience from many parallel environment workers, while aiming to preserve sample efficiency.

H3: Distributed Data Processing AI Tools Integration

Ray Data provides scalable data preprocessing capabilities that integrate seamlessly with popular machine learning frameworks including PyTorch, TensorFlow, and scikit-learn. These AI tools can process petabyte-scale datasets while maintaining data lineage and ensuring consistent preprocessing across training and inference pipelines.

The platform's streaming capabilities enable real-time data processing for applications requiring low-latency responses, such as fraud detection and recommendation systems. AI tools automatically partition data streams and distribute processing across cluster nodes while maintaining ordering guarantees when necessary.
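
One common way to distribute a stream while keeping order where it matters is to partition by key, so that all events for one key land in the same partition in arrival order. The sketch below illustrates that idea in plain Python. It is not Ray Data's API, and `partition_for`, `partition_stream`, and the sample card identifiers are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Event = Tuple[str, int]  # (key, value)


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a hash that is stable across processes."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_stream(
    events: Iterable[Event], num_partitions: int
) -> Dict[int, List[Event]]:
    """Route events to partitions; order is preserved within each key."""
    partitions: Dict[int, List[Event]] = defaultdict(list)
    for key, value in events:
        partitions[partition_for(key, num_partitions)].append((key, value))
    return dict(partitions)


events = [
    ("card-1", 1), ("card-2", 1), ("card-1", 2), ("card-2", 2), ("card-1", 3),
]
parts = partition_stream(events, num_partitions=3)
```

`zlib.crc32` is used instead of the built-in `hash` because Python randomizes string hashes per process, which would send the same key to different partitions on different workers.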

Feature engineering workflows benefit from distributed computing capabilities that can process large feature sets and complex transformations in parallel, significantly reducing the time required for data preparation phases of machine learning projects.

H2: Multi-Framework Support in Distributed AI Tools

| ML Framework | Native Integration | Distributed Capabilities | Scaling Benefits |
|---|---|---|---|
| PyTorch | Ray Train | Multi-GPU training | 10x faster training |
| TensorFlow | TF on Ray | Parameter servers | Efficient large models |
| XGBoost | Ray XGBoost | Distributed boosting | 5x dataset capacity |
| Scikit-learn | Ray Scikit-learn | Parallel algorithms | 20x processing speed |
| Hugging Face | Ray integration | Transformer training | Massive model support |
| JAX | Ray JAX | Distributed arrays | Scientific computing |

H2: Enterprise-Grade Security and Governance AI Tools

Anyscale implements comprehensive security frameworks that meet enterprise requirements for data protection, network isolation, and access control in distributed AI environments. The platform's AI tools operate within secure virtual private clouds with encrypted communication channels and role-based access controls.

Compliance monitoring capabilities track resource usage, data access patterns, and model training activities to support audit requirements and regulatory compliance. The platform maintains detailed logs of all distributed operations while providing tools for data governance and lineage tracking.

H3: Cost Management and Resource Optimization AI Tools

Anyscale's cost optimization AI tools continuously monitor resource utilization patterns and recommend configuration changes to minimize expenses while maintaining performance requirements. The platform can automatically switch between different instance types based on workload characteristics and cost considerations.

Spot instance integration reduces compute costs by up to 90% for fault-tolerant workloads while maintaining reliability through intelligent checkpointing and automatic failover mechanisms. The AI tools handle spot instance interruptions gracefully, migrating work to stable instances without losing progress.
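
The checkpoint-and-resume pattern that makes interruptions survivable can be sketched in plain Python. This is a minimal illustration, not Anyscale's or Ray's mechanism: `Preempted`, `run_job`, and `demo` are hypothetical names, a local JSON file stands in for a checkpoint store, and the interruption is simulated.

```python
import json
import os
import tempfile
from typing import Optional


class Preempted(Exception):
    """Simulates a spot instance being reclaimed mid-job."""


def run_job(total_steps: int, checkpoint_path: str,
            interrupt_at: Optional[int] = None) -> int:
    """Sum the step indices, saving progress after every step."""
    state = {"step": 0, "total": 0}
    if os.path.exists(checkpoint_path):
        # Resume from the last completed step instead of starting over.
        with open(checkpoint_path) as f:
            state = json.load(f)
    while state["step"] < total_steps:
        if interrupt_at is not None and state["step"] == interrupt_at:
            raise Preempted(f"interrupted before step {interrupt_at}")
        state["total"] += state["step"]
        state["step"] += 1
        # Write to a temp file and rename so a crash never leaves a partial checkpoint.
        tmp_path = checkpoint_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, checkpoint_path)
    return state["total"]


def demo() -> int:
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "checkpoint.json")
        try:
            run_job(10, path, interrupt_at=6)
        except Preempted:
            pass  # a replacement worker would pick the job up here
        return run_job(10, path)  # resumes at step 6, not step 0
```

Checkpointing after every step keeps the sketch simple. Real workloads usually checkpoint less often, trading a little repeated work after an interruption for lower I/O overhead.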

Budget controls and spending alerts help organizations manage distributed computing costs by setting limits on resource consumption and providing real-time visibility into spending patterns across different projects and teams.

H2: Real-World Applications of Anyscale AI Tools

Financial services organizations leverage Anyscale's AI tools for large-scale risk modeling, portfolio optimization, and algorithmic trading systems that require processing vast amounts of market data in real-time. The platform's ability to scale reinforcement learning algorithms enables sophisticated trading strategies that adapt to market conditions.

Autonomous vehicle companies utilize the distributed AI tools for simulation environments that test millions of driving scenarios in parallel, accelerating the development and validation of self-driving algorithms. The platform's fault tolerance ensures that long-running simulations can recover from hardware failures without losing progress.

H3: Scientific Computing and Research AI Tools

Research institutions employ Anyscale's AI tools for computational biology, climate modeling, and physics simulations that require massive parallel processing capabilities. The platform's support for scientific Python libraries enables researchers to scale existing code without extensive rewrites.

Drug discovery applications benefit from distributed molecular dynamics simulations and protein folding predictions that can explore vast chemical spaces efficiently. AI tools automatically distribute computational workloads across available resources while maintaining scientific accuracy and reproducibility.

Genomics research utilizes the platform's data processing capabilities to analyze large-scale sequencing datasets and perform population-level genetic studies that would be computationally prohibitive on traditional infrastructure.

H2: Integration Capabilities with Cloud AI Tools Ecosystems

Anyscale provides native integrations with major cloud providers including AWS, Google Cloud Platform, and Microsoft Azure, enabling organizations to leverage existing cloud investments while accessing advanced distributed AI tools. The platform can automatically provision and manage resources across multiple cloud regions for optimal performance and cost.

Kubernetes integration enables deployment within existing container orchestration environments while maintaining the simplicity and power of Ray's distributed computing model. AI tools can scale seamlessly within Kubernetes clusters while respecting resource quotas and security policies.

H3: MLOps and Production Deployment AI Tools

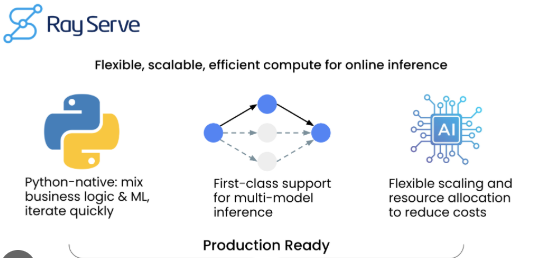

Ray Serve provides scalable model serving capabilities that can handle millions of predictions per second while maintaining low latency and high availability. These AI tools automatically manage model loading, batching, and resource allocation for production inference workloads.
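
Request batching, one of the techniques mentioned above, groups individual requests so that the model is invoked once per batch rather than once per request. The sketch below shows the idea in plain Python. It is not Ray Serve's API: `serve_in_batches` and `DoublingModel` are hypothetical, and the sketch omits the wait timeout that production systems typically use to flush a partially filled batch.

```python
from typing import Callable, List, Sequence


def serve_in_batches(
    requests: Sequence[float],
    model: Callable[[List[float]], List[float]],
    max_batch_size: int = 4,
) -> List[float]:
    """Run the model on fixed-size chunks and return outputs in request order."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    outputs: List[float] = []
    for start in range(0, len(requests), max_batch_size):
        batch = list(requests[start:start + max_batch_size])
        outputs.extend(model(batch))  # one model call per batch
    return outputs


class DoublingModel:
    """Toy stand-in for a real model; counts how many batched calls it receives."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, batch: List[float]) -> List[float]:
        self.calls += 1
        return [2 * x for x in batch]


model = DoublingModel()
predictions = serve_in_batches(list(range(10)), model, max_batch_size=4)
```

Ten requests with a batch size of four result in three model calls instead of ten, which matters most when each call has a fixed overhead such as a GPU kernel launch.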

Model monitoring and observability features track prediction accuracy, latency, and resource utilization in production environments. The platform provides detailed metrics and alerting capabilities that enable proactive management of deployed AI models.

Continuous integration and deployment pipelines integrate with popular DevOps tools to automate model training, validation, and deployment processes while maintaining version control and rollback capabilities.

H2: Developer Experience and Productivity AI Tools

Anyscale's AI tools provide intuitive Python APIs that feel familiar to data scientists while hiding the complexity of distributed system management. Developers can scale existing single-machine code with minimal modifications, reducing the learning curve for distributed computing.

Interactive development environments including Jupyter notebooks and VS Code integration enable iterative development and debugging of distributed applications. The platform provides real-time visibility into cluster status, task execution, and resource utilization through comprehensive dashboards.

H3: Community and Ecosystem Support for AI Tools

The open-source Ray community contributes to a rich ecosystem of libraries and extensions that enhance the capabilities of Anyscale's AI tools. Regular community contributions include new algorithms, integrations, and performance optimizations that benefit all platform users.

Comprehensive documentation, tutorials, and example applications help developers quickly adopt distributed AI tools for their specific use cases. The platform provides migration guides for common frameworks and detailed best practices for optimizing distributed workloads.

Professional support services include architecture consulting, performance optimization, and custom integration development to help organizations maximize their investment in distributed AI infrastructure.

Conclusion: Democratizing Distributed Computing Through Intelligent AI Tools

Anyscale's transformation of the Ray framework into a comprehensive distributed computing platform represents a significant advancement in making large-scale AI development accessible to organizations of all sizes. The platform's AI tools eliminate traditional barriers to distributed computing while providing enterprise-grade reliability and performance.

The company's focus on developer experience and automatic optimization enables data scientists and machine learning engineers to achieve unprecedented scale without requiring specialized distributed systems expertise. This democratization of distributed computing accelerates AI innovation across industries and research domains.

As AI workloads continue growing in complexity and scale, platforms like Anyscale become essential infrastructure for organizations seeking to maintain competitive advantages through superior computational capabilities and faster time-to-market for AI applications.

FAQ: Distributed Computing AI Tools and Scaling Solutions

Q: How do distributed AI tools handle failures and ensure job completion reliability?
A: Modern distributed AI tools implement automatic fault tolerance through checkpointing, task retry mechanisms, and dynamic resource reallocation. They can detect node failures and redistribute work to healthy nodes without losing progress or requiring manual intervention.

Q: What types of AI workloads benefit most from distributed computing tools?
A: Hyperparameter tuning, large-scale data preprocessing, reinforcement learning, distributed training of deep neural networks, and Monte Carlo simulations see the greatest benefits from distributed AI tools due to their parallel nature and computational intensity.

Q: How do AI tools optimize costs when using cloud resources for distributed computing?
A: AI tool platforms implement intelligent resource scheduling, automatic scaling based on demand, spot instance utilization, and workload-aware instance selection to minimize costs while maintaining performance requirements and reliability standards.

Q: Can existing Python code be easily adapted to use distributed AI tools?
A: Most modern distributed AI tools provide APIs that require minimal code changes to existing Python applications. They abstract away distributed computing complexity while maintaining familiar programming patterns and debugging capabilities.

Q: What security considerations apply when using cloud-based distributed AI tools?
A: Distributed AI tools implement encryption for data in transit and at rest, network isolation, role-based access controls, audit logging, and compliance frameworks to meet enterprise security requirements while maintaining the benefits of cloud scalability.