The Rise of AI Music Therapy—and Why Ethics Matter More Than Ever

AI music therapy is no longer a futuristic concept. It's here: it is being tested in hospitals and clinics and built into devices such as the AI music therapy pod unveiled by the AIMT Lab at WAIC 2025. These systems use biometric data (brainwaves, heart rate, and even emotional cues) to generate real-time, customized music interventions designed to reduce stress, manage pain, and support mental well-being.

Sounds groundbreaking? Absolutely.

But as this trend accelerates, ethical concerns around AI music therapy are becoming harder to ignore. While technology offers personalized healing at scale, it also brings questions about data privacy, human oversight, consent, and the authenticity of emotional engagement.

Let’s explore the ethical dilemmas surrounding AI-driven music therapy and why addressing them is essential for its long-term success.

What Is AI Music Therapy and Why Is It Growing?

AI music therapy refers to the use of artificial intelligence to design, deliver, or optimize therapeutic music interventions. Instead of a human therapist selecting songs or guiding a session, AI tools process real-time data like EEG, heart rate variability, and mood patterns to create or recommend music tailored to the user's psychological state.

Products like Endel, Lucid, and AIMT Lab’s AI Music Therapy Pod are at the forefront of this movement. These systems can:

Adjust musical compositions based on stress levels.

Create ambient soundscapes that promote sleep or focus.

Provide non-invasive support for anxiety, depression, or cognitive disorders.
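To make "adjusting music based on stress levels" concrete, here is a minimal sketch. It assumes the device streams RR intervals (the time between heartbeats, in milliseconds) and computes RMSSD, a standard heart rate variability (HRV) measure. It then applies a hypothetical rule: low HRV is treated as a stress signal, so the music slows down and thins out. The `MusicParams` type, the 20 ms threshold, and the tempo rule are illustrative assumptions, not how Endel, Lucid, or the AIMT pod actually work.

```python
import math
from dataclasses import dataclass


@dataclass
class MusicParams:
    tempo_bpm: int   # tempo for the generated piece
    texture: str     # arrangement density: "sparse" or "moderate"


def rmssd(rr_ms: list[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def choose_params(rr_ms: list[float], stress_threshold_ms: float = 20.0) -> MusicParams:
    """Hypothetical rule: low HRV is read as stress, so the music slows and thins out."""
    heart_rate_bpm = 60_000 / (sum(rr_ms) / len(rr_ms))  # mean RR interval -> beats per minute
    if rmssd(rr_ms) < stress_threshold_ms:
        # Aim slightly below the listener's heart rate, with a 60 BPM floor.
        return MusicParams(tempo_bpm=max(60, round(heart_rate_bpm * 0.9)), texture="sparse")
    return MusicParams(tempo_bpm=max(60, round(heart_rate_bpm)), texture="moderate")


print(choose_params([600, 605, 598, 602, 600]))  # MusicParams(tempo_bpm=90, texture='sparse')
```

A single fixed cutoff like this ignores each listener's personal baseline, which is one reason real systems would need validation on diverse users before making therapeutic claims.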

With demand for mental wellness tools surging—the global music therapy market is projected to reach $2.29 billion by 2027 (Allied Market Research)—AI is seen as a scalable, cost-effective supplement to traditional therapy.

The Core Ethical Concerns in AI Music Therapy

Despite these benefits, AI music therapy raises fundamental ethical questions. Here are the most pressing issues:

1. Informed Consent and User Understanding

Many users engaging with AI music therapy systems may not fully understand how their data is collected, processed, or used. This is especially problematic in therapeutic settings involving vulnerable populations such as children, the elderly, or people with mental health conditions.

Key Question: Are users being given clear, accessible explanations before using AI-powered tools?

2. Data Privacy and Biometric Surveillance

Personalized music therapy often relies on deeply intimate data—EEG patterns, emotional states, biometric signals. The potential for this data to be misused or inadequately secured is a serious risk.

Case in Point: The AIMT Pod reportedly encrypts and handles data on-site, but not every commercial app in this space (such as Brain.fm or Lucid) is equally transparent about how it collects and uses data.

Ethical Challenge: Who owns the brain data once it’s collected? And how is it stored?

3. Algorithmic Bias and Fair Access

AI systems can unintentionally reinforce bias. If training data comes predominantly from a narrow demographic, the generated music therapy may be less effective—or even emotionally dissonant—for users outside that group.

Moreover, people in low-income regions may not have access to the tech infrastructure needed for these AI therapies.

Ethical Gap: Is AI music therapy inclusive? Or is it deepening digital health divides?
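One practical check for this kind of bias is disaggregated evaluation: measuring outcomes separately for each demographic group instead of reporting a single average. The sketch below assumes hypothetical session records of `(group, stress_reduction)` pairs and flags any group whose mean improvement trails the best-served group by more than a chosen margin. The group labels, data, and 0.1 margin are illustrative only.

```python
from collections import defaultdict
from statistics import fmean


def effect_by_group(sessions: list[tuple[str, float]]) -> dict[str, float]:
    """Mean stress reduction per demographic group (higher is better)."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for group, reduction in sessions:
        buckets[group].append(reduction)
    return {group: fmean(values) for group, values in buckets.items()}


def underserved_groups(means: dict[str, float], max_gap: float = 0.1) -> list[str]:
    """Groups whose mean trails the best-served group by more than max_gap."""
    best = max(means.values())
    return sorted(group for group, mean in means.items() if best - mean > max_gap)


# Hypothetical evaluation data: (group label, fractional drop in self-reported stress)
sessions = [("A", 0.4), ("A", 0.6), ("B", 0.2), ("B", 0.3), ("C", 0.45)]
means = effect_by_group(sessions)
print(underserved_groups(means))  # ['B']
```

With small groups, a gap like this may simply be noise, so a real audit would also report sample sizes and uncertainty for each group.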

4. Authenticity and Emotional Manipulation

Music is a powerful emotional trigger. AI systems can simulate emotional responses, but do they truly understand context? There’s a difference between a human therapist choosing a calming piano piece after a patient’s difficult session, and an AI matching "sadness" to "minor key ambient."

This blurring of emotional authenticity raises philosophical and psychological concerns. Are we being manipulated by machines that simulate empathy but lack awareness?
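The context-free matching described above can be as simple as a lookup table. This hypothetical sketch shows how two very different situations collapse into the same musical output once an upstream classifier reduces them to one emotion label. The labels, styles, and tempos are illustrative assumptions, not taken from any real product.

```python
# Hypothetical label -> music-style table; real products do not publish theirs.
EMOTION_TO_STYLE = {
    "sadness": {"mode": "minor", "style": "ambient", "tempo_bpm": 60},
    "anxiety": {"mode": "major", "style": "ambient", "tempo_bpm": 65},
    "calm": {"mode": "major", "style": "acoustic", "tempo_bpm": 70},
}
DEFAULT_STYLE = {"mode": "major", "style": "ambient", "tempo_bpm": 70}


def pick_style(label: str) -> dict:
    """Return a copy of the style for a detected emotion label.

    Only the label is consulted: who the listener is, or why they feel
    this way, never reaches this function.
    """
    return dict(EMOTION_TO_STYLE.get(label, DEFAULT_STYLE))


# Two very different moments, reduced to the same label upstream.
sessions = [
    {"context": "grieving after a bereavement", "label": "sadness"},
    {"context": "tired after a long workday", "label": "sadness"},
]
for s in sessions:
    print(s["context"], "->", pick_style(s["label"]))
```

Both sessions receive identical music. A human therapist would likely respond to these two situations very differently.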

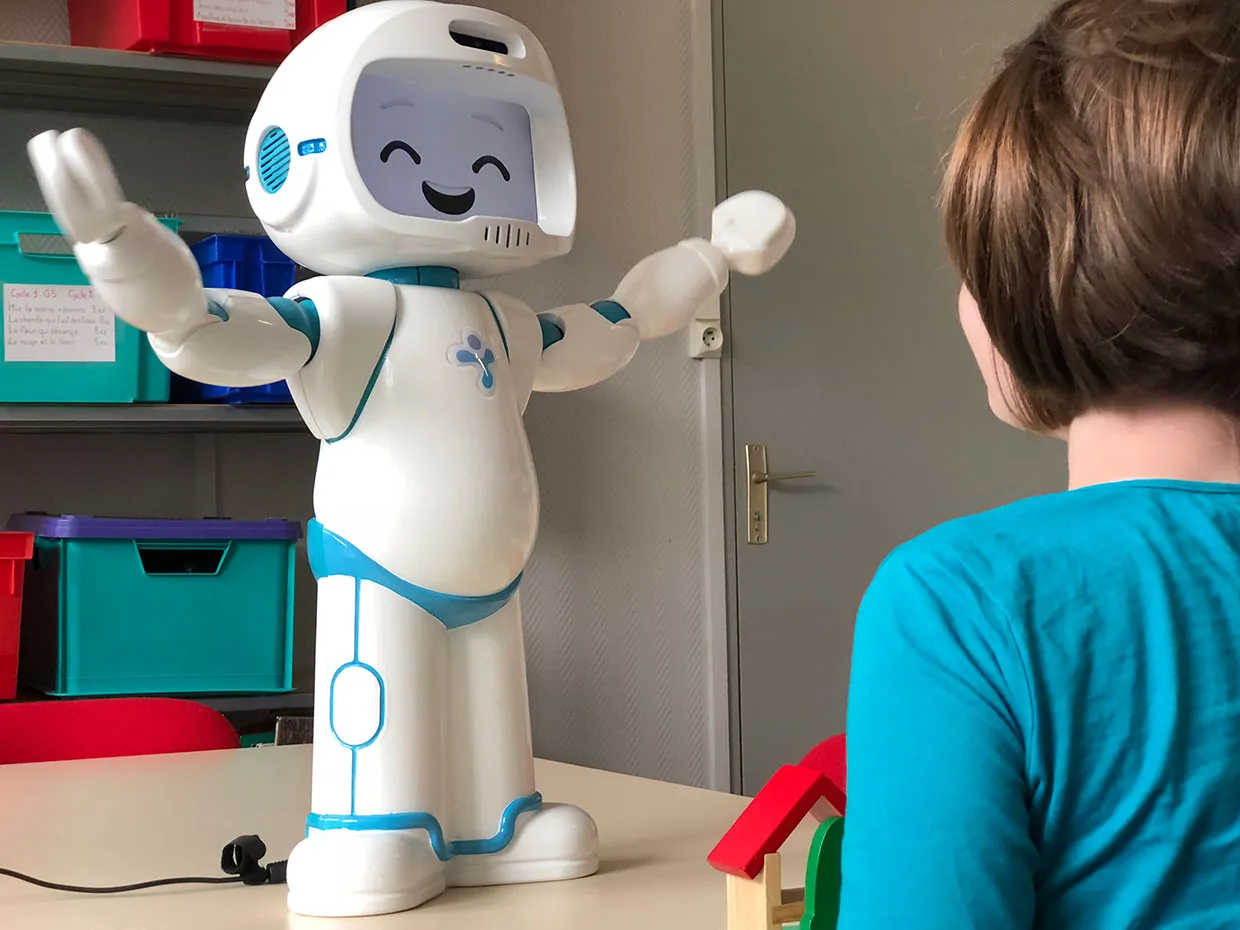

5. Loss of Human Connection in Therapy

Music therapy has long relied on the therapist-client relationship. As AI music therapy grows, the human element may fade. For some, this means losing trust, warmth, and non-verbal therapeutic cues.

Open Question: Can AI complement therapists without replacing them? Or are we inching toward fully automated healing?

Real-World Examples: Ethics in Action

AIMT Lab's AI Music Therapy Pod at WAIC 2025

At WAIC 2025, the AIMT Lab (Shanghai Conservatory of Music) showcased a pod that uses biometric sensors and AI composition models to create hyper-personalized therapy sessions. The pod promises to support cognitive rehabilitation and mental wellness—but the team emphasized user consent, real-time anonymization, and on-device processing to protect data privacy.

This sets a hopeful benchmark—but can commercial companies maintain such ethical rigor?
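On-device processing with anonymization might look roughly like the minimal sketch below. It assumes a hypothetical raw session record with `user_id`, `name`, `heart_rate_bpm`, and `eeg_raw` fields, replaces the user ID with a keyed hash (HMAC-SHA256) whose key stays on the device, and keeps only a coarse summary. Strictly speaking, this is pseudonymization rather than full anonymization, since whoever holds the key can still link records to a person. It is not the AIMT Lab's actual implementation.

```python
import hashlib
import hmac
from statistics import fmean


def summarize_on_device(raw: dict, device_key: bytes) -> dict:
    """Reduce a raw session record to a pseudonymized summary before upload.

    Assumed (hypothetical) input fields: user_id, name, heart_rate_bpm, eeg_raw.
    """
    # Keyed hash: stable for the same user and key, but cannot be
    # matched back to a user ID by anyone who lacks the key.
    subject = hmac.new(device_key, raw["user_id"].encode(), hashlib.sha256).hexdigest()
    return {
        "subject": subject,
        "mean_hr_bpm": round(fmean(raw["heart_rate_bpm"]), 1),
        "samples": len(raw["heart_rate_bpm"]),
        # "name" and "eeg_raw" are intentionally not copied, so they never leave the device.
    }
```

The key could be generated once per device with `os.urandom(32)`; rotating or discarding it breaks the link between sessions.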

Endel’s Partnerships With Healthcare Providers

Endel, an AI-powered soundscape generator, now partners with health organizations to provide mental wellness soundtracks for patients. But critics note that Endel’s closed AI model limits transparency about how emotional data is interpreted—raising concerns about accountability if an output is ineffective or distressing.

Ethical Guidelines and the Path Forward

To build trust in AI music therapy, experts and developers are pushing for the following standards:

Transparent AI design: Open documentation about how music is generated, and what data is used.

Opt-in biometric tracking: No data collection without clear, affirmative consent.

Human-in-the-loop therapy: AI as a co-pilot, not a replacement.

Cultural and demographic inclusivity: Diverse training datasets to reduce bias.

Long-term safety studies: Monitoring for unintended emotional or psychological effects.
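The opt-in principle can be enforced in code by making "no consent" the default state. In this minimal sketch, which assumes hypothetical signal names and a per-user `ConsentRecord` class, every reading passes through a filter that drops any signal the user has not explicitly granted, and a revocation takes effect on the very next reading.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Per-user consent state; starts empty, so nothing is collected by default."""
    granted: set[str] = field(default_factory=set)

    def grant(self, signal: str) -> None:
        self.granted.add(signal)

    def revoke(self, signal: str) -> None:
        self.granted.discard(signal)

    def allows(self, signal: str) -> bool:
        return signal in self.granted


def filter_reading(reading: dict[str, float], consent: ConsentRecord) -> dict[str, float]:
    """Keep only the signals the user has explicitly opted into."""
    return {name: value for name, value in reading.items() if consent.allows(name)}


consent = ConsentRecord()
reading = {"heart_rate": 72.0, "eeg_alpha": 0.31}
print(filter_reading(reading, consent))  # {} until the user opts in
consent.grant("heart_rate")
print(filter_reading(reading, consent))  # {'heart_rate': 72.0}
```

Putting the filter at the point of collection, rather than relying on later deletion, means data the user never agreed to share is never stored at all.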

International bodies such as the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems and the World Health Organization (through its guidance on the ethics and governance of AI for health) are shaping standards and policy discussions in these areas.

Conclusion: Ethics Are Not Optional

AI music therapy offers exciting potential for personalized healing—but it’s not without trade-offs. Data sensitivity, human dignity, algorithmic fairness, and emotional authenticity all hang in the balance. If these systems are to truly serve humanity, ethical concerns in AI music therapy must move to the center of innovation, not remain an afterthought.

By building technology that prioritizes transparency, consent, and empathy, we can ensure that AI in music therapy becomes a source of healing—not harm.

FAQs About Ethical Concerns in AI Music Therapy

Q1: Is AI music therapy regulated by any medical authority?

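Currently, there's no unified global regulation. However, some countries have begun approving digital therapeutics through formal pathways: Germany, for example, approves digital health applications (DiGA) under its Digital Healthcare Act, and Japan regulates therapeutic software as a medical device. Music-based tools may fall under these frameworks when they make therapeutic claims.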

Q2: What kind of data do AI music therapy tools collect?

They may collect biometric signals (heart rate, EEG), emotion recognition data, or user input related to mood and goals.

Q3: Can AI music therapy replace human therapists?

Most tools are positioned as a complement to, not a replacement for, traditional therapy. Human interpretation and emotional intelligence remain irreplaceable.

Q4: Are there open-source AI music therapy projects?

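Google's Magenta is an open-source project that offers music generation tools, and OpenAI's MuseNet demonstrated AI composition, although it was released as a demo rather than as open source. Neither is designed specifically for therapy or built around ethical safeguards.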

Q5: How can users protect their data when using these tools?

Check that your data is encrypted in transit and at rest, avoid tools without a clear privacy policy, and prefer platforms that process data locally on your device.
