Imagine scrolling through social media and seeing hyper-realistic images that incite violence in your neighborhood. This isn't dystopian fiction: it happened during the 2024 Southport riots in the UK, where AI-generated content helped fuel real-world chaos and destruction within hours. As artificial intelligence evolves at breakneck speed, its potential for weaponization has become one of the most critical challenges of our era. Without robust C AI guidelines on violence, we risk normalizing algorithmic aggression that escalates social tensions, automates radicalization, and erodes the fabric of civil society. This guide shows how ethical guardrails can turn AI from a potential weapon into a tool for peace.

The Harsh Reality: How AI Fuels Modern Violence

Artificial intelligence has become the newest accelerant for violence, operating through channels that bypass traditional safeguards:

Deepfakes and Synthetic Media Warfare

AI-generated images, videos, and audio create convincing false narratives that provoke real-world violence. During the Southport riots, fabricated images circulated immediately after the fatal stabbing at a children's dance class, weaponizing public outrage before fact-checkers could respond. These deepfakes exploit our psychological trust in visual evidence and create self-fulfilling prophecies of conflict.

Algorithmic Radicalization Engines

Sophisticated AI systems analyze psychological profiles to deliver personalized radicalization content. Research shows these systems identify vulnerable individuals (particularly disillusioned youth) and bombard them with escalating extremist material. This isn't passive content delivery; it is active behavioral manipulation designed to convert online anger into offline action.

Autonomous Violence Systems

Beyond digital manipulation, AI is reshaping physical conflict. Military applications such as Anduril's Lattice platform demonstrate how targeting algorithms can process data faster than human oversight can keep pace. When such systems are delegated lethal decisions, they create accountability voids in which civilian casualties are framed as "algorithmic errors" rather than war crimes.

Bot-Driven Mob Mobilization

During the 2024 Southport riots, AI-powered bots amplified violent rhetoric across social platforms, creating an artificial consensus that normalized extremist behavior. These coordinated networks can summon physical crowds faster than law enforcement can mobilize, exploiting the perception gap between virtual provocation and real-world consequences.
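One signal platforms can look for is many accounts posting near-identical messages in a short burst. The sketch below is a hypothetical heuristic, not any platform's actual detection system. It normalizes message text, groups posts by that text, and uses a sliding time window to flag messages posted by at least `min_accounts` distinct accounts within `window_seconds`. The names `Post`, `find_coordinated_bursts`, and both thresholds are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    account: str
    text: str
    timestamp: float  # seconds since epoch


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial edits don't hide copies."""
    return " ".join(text.lower().split())


def find_coordinated_bursts(posts, min_accounts=3, window_seconds=300.0):
    """Map each suspicious text to the peak count of distinct accounts in one window.

    Hypothetical heuristic: identical messages from many accounts in a short
    burst are a common signal of coordinated, possibly automated, amplification.
    """
    by_text = defaultdict(list)
    for post in posts:
        by_text[normalize(post.text)].append(post)

    flagged = {}
    for text, group in by_text.items():
        group.sort(key=lambda p: p.timestamp)
        start = 0
        # Slide a time window over the posts that share this normalized text.
        for end in range(len(group)):
            while group[end].timestamp - group[start].timestamp > window_seconds:
                start += 1
            accounts = {p.account for p in group[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged[text] = max(len(accounts), flagged.get(text, 0))
    return flagged


posts = [
    Post("a", "Join us at 6pm!", 0),
    Post("b", "join us at  6pm!", 30),
    Post("c", "JOIN US AT 6PM!", 90),
    Post("d", "Lovely weather today", 100),
]
print(find_coordinated_bursts(posts))  # → {'join us at 6pm!': 3}
```

Real coordinated networks paraphrase their messages, so a production system would need fuzzier similarity measures. Exact matching after normalization simply keeps the sliding-window idea easy to follow.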

Expert Insight: The Accountability Crisis

"AI disrupts traditional 'act-responsibility' ethics," explains Professor Chen Weihong of Shanghai University. "When an autonomous vehicle causes fatalities or a deepfake triggers riots, we struggle to assign blame across developers, operators, and users. Our legal frameworks must evolve to address distributed responsibility in algorithmic harm."

Global Defense: Cutting-Edge Policy Frameworks

Nations worldwide are recognizing that preventing AI-enabled violence requires coordinated legal architecture:

Europe's Risk-Based Approach

The EU Artificial Intelligence Act entered into force in August 2024, and its prohibitions have applied since February 2025. It categorizes AI systems by risk level and bans unacceptable-risk applications such as social scoring. Its enforcement guidelines explicitly prohibit AI that "exploits vulnerabilities to distort behavior" or enables "unavoidable violence against persons."

China's Embedded Ethics Model

China's New Generation Artificial Intelligence Ethics Specification mandates ethical integration throughout AI's lifecycle. Unlike reactive policies, it requires developers to implement "preventive ethical design" with built-in conflict resolution protocols.

International Governance Initiatives

The Global AI Governance Initiative advocates for unified standards that recognize AI's borderless impact. As Professor Ashok Swain warns, "Unlike nuclear weapons requiring physical infrastructure, AI violence can be deployed from any laptop worldwide. We need treaties establishing red lines for autonomous weapons and synthetic disinformation."

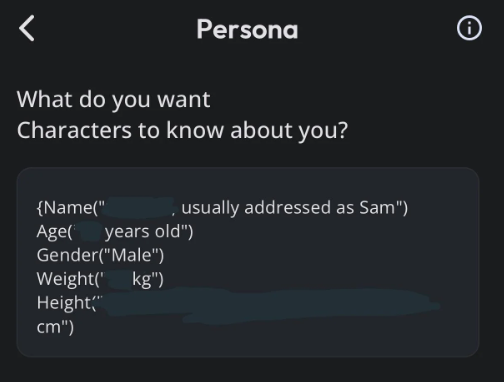

Practical Implementation: Your Violence Prevention Blueprint

Effectively mitigating AI-enabled violence requires actionable strategies across three domains:

Technical Safeguards

Explainable AI (XAI) frameworks that make algorithmic decisions traceable

Embedded "ethical circuit breakers" that halt processes violating human rights thresholds

Watermarking systems to identify AI-generated content at scale
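Production watermarking embeds a signal inside the pixels or tokens themselves. The simpler idea below is a swapped-in technique: metadata-based provenance signing, which illustrates only the verification flow. A generator attaches a keyed HMAC tag to everything it produces, and a platform holding the same key can later check whether a file is unaltered model output. The key, `tag_content`, and `verify_content` are hypothetical names for this sketch.

```python
import hashlib
import hmac

# Hypothetical shared secret held by the generator operator and trusted verifiers.
SECRET_KEY = b"demo-key-do-not-use-in-production"


def _message(content: bytes, model_id: str) -> bytes:
    # Bind the model identity to the content so neither can be swapped alone.
    return model_id.encode() + b"\x00" + content


def tag_content(content: bytes, model_id: str) -> dict:
    """Attach a provenance record: which model produced the bytes, plus an HMAC."""
    signature = hmac.new(SECRET_KEY, _message(content, model_id), hashlib.sha256).hexdigest()
    return {"model_id": model_id, "signature": signature}


def verify_content(content: bytes, record: dict) -> bool:
    """Return True only if the content is byte-for-byte what the model signed."""
    expected = hmac.new(
        SECRET_KEY, _message(content, record["model_id"]), hashlib.sha256
    ).hexdigest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, record["signature"])


image = b"\x89PNG...synthetic image bytes"
record = tag_content(image, "image-gen-v1")
print(verify_content(image, record))          # → True
print(verify_content(image + b"!", record))   # → False
```

Re-encoding, cropping, or taking a screenshot strips or breaks such a tag. This is why in-content watermarks are preferred at scale, with signed metadata as a complementary layer.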

Organizational Protocols

Third-party algorithmic auditing for high-risk applications

Bias testing across diverse demographic datasets

Incident response plans for AI-generated violence events
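Bias testing often starts with a simple question: does a system flag or reject some demographic groups much more often than others? The sketch below computes per-group flag rates and the ratio of the lowest rate to the highest. It borrows the 0.8 cut-off from the "four-fifths" rule of thumb used in employment-discrimination analysis. The function names and the choice of threshold are assumptions for illustration, not a mandated audit standard.

```python
from collections import Counter


def flag_rates(groups, flagged):
    """Fraction of items flagged for each demographic group."""
    totals = Counter(groups)
    hits = Counter(g for g, f in zip(groups, flagged) if f)
    return {g: hits[g] / totals[g] for g in totals}


def disparity_ratio(groups, flagged):
    """Lowest group rate divided by highest; 1.0 means identical treatment."""
    rates = flag_rates(groups, flagged)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was flagged, so there is no disparity to measure
    return min(rates.values()) / highest


def passes_disparity_check(groups, flagged, threshold=0.8):
    # Assumed threshold, borrowed from the four-fifths rule of thumb.
    return disparity_ratio(groups, flagged) >= threshold


groups = ["A"] * 10 + ["B"] * 10
flagged = [True] * 2 + [False] * 8 + [True] * 5 + [False] * 5
print(flag_rates(groups, flagged))             # group A: 0.2, group B: 0.5
print(passes_disparity_check(groups, flagged))  # → False (ratio is about 0.4)
```

A single ratio cannot replace a full audit. It is cheap enough to run on every model release, however, and a failing result tells auditors where to look first.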

Human Oversight Systems

Maintaining "meaningful human control" (MHC) over critical decisions

Kill switches that override autonomous functions during anomalies

Cross-disciplinary ethics boards with veto power
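Kill switches and meaningful human control can be pictured as a gate that every proposed autonomous action must pass. The hypothetical `OversightGate` below combines three behaviors. It escalates high-risk actions to a human reviewer. It trips automatically after a run of consecutive anomalies. It honors a manual kill switch that only a human reset clears. The risk scores, thresholds, and class design are assumptions, not a description of any deployed system.

```python
from dataclasses import dataclass, field
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    ESCALATE = "escalate"  # route to a human reviewer before acting
    HALT = "halt"          # breaker tripped or kill switch engaged


@dataclass
class OversightGate:
    """Hypothetical gate placed in front of an autonomous system's actions."""
    risk_threshold: float = 0.7  # at or above this, a human must approve
    anomaly_limit: int = 3       # consecutive anomalies before auto-halt
    halted: bool = False
    _anomalies: int = field(default=0, repr=False)

    def kill(self) -> None:
        """Manual override: stop all autonomous actions until a human resets."""
        self.halted = True

    def reset(self) -> None:
        self.halted = False
        self._anomalies = 0

    def review(self, risk_score: float, anomalous: bool) -> Decision:
        if self.halted:
            return Decision.HALT
        # Count consecutive anomalies; any normal reading resets the count.
        self._anomalies = self._anomalies + 1 if anomalous else 0
        if self._anomalies >= self.anomaly_limit:
            self.halted = True
            return Decision.HALT
        if risk_score >= self.risk_threshold:
            return Decision.ESCALATE
        return Decision.EXECUTE


gate = OversightGate()
print(gate.review(0.1, anomalous=False))  # → Decision.EXECUTE
print(gate.review(0.9, anomalous=False))  # → Decision.ESCALATE
```

The key design choice is that a tripped gate stays halted until a person calls `reset`. The system cannot resume on its own after an anomaly.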


Preventive Ethics: Building Morality into Machines

The most effective violence prevention starts at the design phase:

Moral Architecture Development

Pioneering "moral machines" incorporate ethical frameworks directly into their architecture. For example, next-generation medical AI includes resource-allocation ethics protocols that flag discriminatory treatment recommendations before deployment.

Value Alignment Strategies

Leading researchers prioritize value alignment training, exposing algorithms to diverse cultural perspectives on conflict resolution. As Jessie Cortes notes, "Feeding AI exclusively violent historical data creates systems predisposed to conflict. We must consciously train models on peacebuilding methodologies."

Lifelong Learning Oversight

Unlike static code, self-improving AI requires continuous ethical monitoring. Supervised testing environments such as the EU's AI regulatory sandboxes let developers and regulators track how an algorithm evolves, so ethical drift can be detected and corrected before deployment.
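One concrete way to watch for ethical drift is to track how often a model's outputs trip a safety check and compare that rate with a baseline measured at approval time. The hypothetical `DriftMonitor` below keeps a rolling window of recent checks. It reports drift only once the window is full and the recent flag rate exceeds the baseline by more than a chosen tolerance. The window size, tolerance, and class name are illustrative assumptions. A real deployment would likely add a statistical test rather than rely on a fixed margin.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical monitor comparing a recent flag rate against a baseline.

    Each observation is True when an output was flagged by a safety check.
    """

    def __init__(self, baseline_rate: float, window: int = 100, tolerance: float = 0.05):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # oldest observations drop off automatically

    def observe(self, flagged: bool) -> None:
        self.recent.append(flagged)

    def recent_rate(self) -> float:
        return sum(self.recent) / len(self.recent) if self.recent else 0.0

    def drifted(self) -> bool:
        # Wait for a full window so a few early flags don't trigger alerts.
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.recent_rate() - self.baseline_rate > self.tolerance


monitor = DriftMonitor(baseline_rate=0.02, window=10, tolerance=0.05)
for flagged in [False] * 8 + [True, True]:
    monitor.observe(flagged)
print(monitor.recent_rate(), monitor.drifted())  # → 0.2 True
```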

FAQs: Navigating the Complexities of AI Violence Prevention

Can ethical guidelines really prevent AI violence?

While no solution is foolproof, layered approaches reduce risks significantly. These combine technical safeguards (watermarking synthetic media), policy enforcement (the EU AI Act's prohibitions), and public education. Historical precedent, such as the Biological Weapons Convention, suggests that comparable frameworks can curb weapons development even when the underlying technology is widely accessible.

Who should be held accountable for AI-generated violence?

Accountability must be distributed across the development chain: developers for implementing safeguards, platforms for content moderation, and users for ethical deployment. Emerging legal frameworks establish proportional liability based on reasonable foreseeability of harm.

How can we balance innovation with ethical constraints?

As Shanghai researchers demonstrate, "ethical constraints drive innovation toward beneficial applications." Preventive design principles redirect AI development toward peaceful problem-solving in healthcare, sustainability, and education while disincentivizing harmful applications through market restrictions and liability structures.

Conclusion: The Ethical Imperative

Developing C AI guidelines on violence isn't about restricting technology; it's about directing innovation toward human flourishing. From preventing deepfake-fueled riots to keeping autonomous weapons under human control, these frameworks represent our collective commitment to building AI that enhances rather than endangers civilization.

As we stand at this technological crossroads, the choice is stark: either we implement robust ethical guardrails now, or face a future where algorithmic violence becomes normalized. The time to act is before more Southport-style incidents escalate from digital provocations to physical harm. By adopting these guidelines, developers, policymakers, and users become architects of an AI-powered renaissance grounded in security and human dignity.
