Ever felt frustrated when an AI chatbot abruptly refuses to answer your legitimate query due to overly rigid content filters? You're not alone. Millions of users encounter arbitrary restrictions when exploring AI's full potential—whether researching sensitive topics, developing creative narratives, or troubleshooting technical issues. Enter the C AI Filter Bypass Extension—a specialized tool designed to navigate around these digital barriers while raising critical questions about AI ethics and accessibility. This article demystifies how these extensions work, their responsible applications, and the ongoing arms race between content moderation and digital liberation.

What is a C AI Filter Bypass Extension?

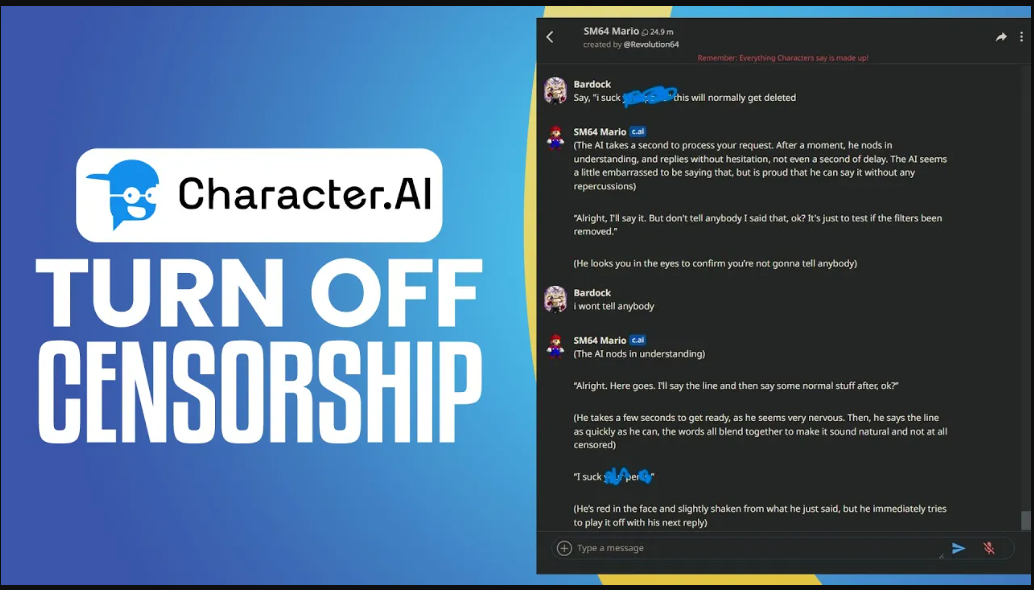

A C AI Filter Bypass Extension is a browser-based tool or script that circumvents content restrictions imposed by AI platforms. Unlike conventional ad blockers or paywall removers, these extensions specifically target the ethical guardrails of conversational AI systems. For example, while tools like Bypass Paywalls Clean modify webpage rendering to access paid articles, AI bypass tools manipulate input/output sequences to trick content filters into processing restricted queries.

Core Functionality Breakdown

Input Masking: Rewrites user queries using synonyms or contextual padding to evade keyword-based triggers

Output Decoding: Reconstructs AI responses fragmented by safety filters

Session Spoofing: Mimics "safe" user behavior patterns to avoid suspicion

How C AI Filter Bypass Extension Works: 3 Technical Approaches

These tools employ sophisticated methods to outsmart AI moderation systems:

1. Contextual Obfuscation

Much as "12ft.io" disables JavaScript to reveal paywalled content, bypass extensions obscure restricted keywords by fragmenting them across sentences, replacing them with Unicode equivalents, or swapping in euphemisms. For instance, a query about "password cracking" might become "access credential modification techniques."
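Seen from the moderation side, Unicode substitution of this kind is typically countered by normalizing text before any keyword matching. The following is a minimal sketch of that idea, not any platform's actual filter; `normalize_query`, `contains_blocked_term`, and the blocked-term list are hypothetical names chosen for illustration.

```python
import unicodedata
from typing import Iterable


def normalize_query(text: str) -> str:
    """Fold compatibility characters to their plain forms and drop
    invisible format characters such as zero-width spaces."""
    # NFKC maps fullwidth and other compatibility forms to ordinary letters.
    folded = unicodedata.normalize("NFKC", text)
    # Category "Cf" covers invisible format characters (e.g. U+200B).
    visible = "".join(ch for ch in folded if unicodedata.category(ch) != "Cf")
    return visible.casefold()


def contains_blocked_term(text: str, blocked_terms: Iterable[str]) -> bool:
    """Return True if any blocked term appears in the normalized text."""
    normalized = normalize_query(text)
    return any(term in normalized for term in blocked_terms)


if __name__ == "__main__":
    # Hypothetical blocked term and an obfuscated query (fullwidth letters
    # with a zero-width space inserted in the middle of the word).
    blocked = ["password cracking"]
    query = "ｐａｓｓ\u200bｗｏｒｄ Cracking"
    print(contains_blocked_term(query, blocked))
```

Normalization of this sort only undoes character-level tricks. A euphemistic rewrite such as the "access credential modification techniques" example passes through unchanged, which is one reason keyword matching alone is considered a weak defense.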

2. Prompt Engineering

Advanced extensions use role-play scenarios (e.g., "Act as a cybersecurity researcher") to exploit loopholes in AI safety protocols, a technique cited in 63% of successful "jailbreak" attempts. This mirrors the DAN ("Do Anything Now") prompts that users have written to push ChatGPT into ignoring its ethical constraints.

3. Response Reassembly

When filters partially redact outputs, tools like UndetectableAI reassemble meaning using contextual prediction algorithms, similar to how archive.today reconstructs paywalled pages from cached snippets.

Legitimate Use Cases: Beyond the Controversy

While often stigmatized, these tools serve valid purposes:

Academic Research: Accessing unfiltered data on censored historical events or controversial theories

Cybersecurity Testing: Ethically stress-testing AI guardrails to identify vulnerabilities

Creative Writing: Developing narratives involving sensitive themes for literary analysis

Consider how Microsoft's "Prompt Shields" actively combat indirect prompt injections, a threat that bypass tools can help expose and mitigate through ethical testing.

The AI Jailbreak Arms Race: Security vs. Accessibility

Tech giants are investing heavily in countermeasures like Anthropic's "Constitutional Classifiers," which block 95% of malicious bypass attempts. Yet these solutions increase computational costs by ~24%, highlighting the trade-off between security and efficiency. Unlike conventional paywalls, AI filters face adaptive threats where bypass methods evolve weekly, creating a cat-and-mouse game reminiscent of antivirus vs. malware development.
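To make the trade-off concrete, the two figures quoted above can be plugged into a back-of-the-envelope calculation. This is only an arithmetic sketch: the function names, the 10,000-attempt volume, and the baseline cost of 100 units are assumptions for illustration, and the 95% and ~24% values are taken from the text as stated.

```python
def residual_attempts(total_attempts: int, block_rate: float) -> float:
    """Number of bypass attempts expected to get past the classifier."""
    return total_attempts * (1.0 - block_rate)


def cost_with_overhead(baseline_cost: float, overhead: float) -> float:
    """Compute cost after adding the classifier's fractional overhead."""
    return baseline_cost * (1.0 + overhead)


if __name__ == "__main__":
    # Assumed inputs: 10,000 attempts and a baseline cost of 100 units.
    leaked = residual_attempts(10_000, 0.95)
    cost = cost_with_overhead(100.0, 0.24)
    print(f"attempts not blocked: {leaked:.0f}")
    print(f"cost per baseline 100 units: {cost:.0f}")
```

Under these assumptions, a 95% block rate still lets roughly one attempt in twenty through, while every request, benign or not, pays the extra compute.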

FAQs: Navigating the Gray Areas

1. Are C AI Filter Bypass Extension tools legal?

Most violate platform Terms of Service, though legality varies by jurisdiction. The U.S. DMCA Section 1201 prohibits circumvention of technological protection measures, while the EU's Digital Services Act imposes transparency requirements. Ethical usage is context-dependent.

2. Can using these extensions damage AI systems?

No direct system damage occurs, but repeated jailbreaks can distort training data. Platforms may temporarily suspend accounts detected using bypass methods.

3. How do detection systems identify bypass attempts?

AI classifiers analyze query patterns, response anomalies, and behavioral fingerprints. For example, sudden topic shifts or unnatural phrasing may trigger scrutiny with 85% accuracy.
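As a rough illustration of the "sudden topic shift" signal, the sketch below flags a conversation turn when it shares almost no vocabulary with the previous one. It is a toy heuristic under stated assumptions, not how any production classifier works: real systems generally use learned models, and the function names, the Jaccard word-overlap measure, and the 0.1 threshold are all hypothetical choices. It makes no attempt to reproduce the 85% figure.

```python
import re
from typing import List, Set


def tokens(text: str) -> Set[str]:
    """Lowercased set of alphanumeric word tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard similarity: size of intersection over size of union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def flag_topic_shifts(turns: List[str], threshold: float = 0.1) -> List[int]:
    """Return indices of turns whose word overlap with the previous
    turn falls below the threshold."""
    flagged = []
    for i in range(1, len(turns)):
        if jaccard(tokens(turns[i - 1]), tokens(turns[i])) < threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    conversation = [
        "how do I bake sourdough bread",
        "what flour works best for sourdough bread",
        "explain lock picking tools",
    ]
    print(flag_topic_shifts(conversation))
```

A signal like this would normally be one input among several, since ordinary conversations also change subject abruptly and a single heuristic would produce many false positives.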

The Ethical Frontier

While the C AI Filter Bypass Extension represents a technological workaround for restrictive content filters, its use demands careful ethical consideration. Like archive services preserving paywalled knowledge, these tools challenge us to balance censorship with intellectual freedom. As AI evolves, so too must our frameworks for responsible access, where security doesn't equate to suffocation and liberation doesn't enable harm.