AI researchers run into computational bottlenecks when training transformer models with 47.3 million parameters: GPU utilization constraints of 89%, limited memory bandwidth, and the difficulty of parallelizing work all slow machine learning development at research institutions, technology companies, and enterprises worldwide. Traditional computing architectures rely on sequential processing, deep memory hierarchies, and general-purpose designs. These consume 73% of computational resources and lead to training delays, limits on model complexity, and scalability barriers for both AI research and commercial AI applications.

Modern AI workloads such as graph neural networks and transformer architectures combine massive parallel computation, dynamic memory allocation, and real-time data processing, which exceeds what conventional CPUs and GPUs handle well. AI development therefore needs tools built on specialized computational architectures that scale performance while remaining energy-efficient, cost-effective, and accessible to developers, from research through commercial deployment.

The AI Computing Crisis Limiting Innovation Potential

AI development teams report that 78% of computational resources are underutilized, even as model complexity has grown by 340% over the past decade. The result is training bottlenecks, development delays, and research limitations that hold back both AI research and commercial deployment. Machine learning researchers spend 8.7 weeks training a single model, including hyperparameter optimization, architecture experimentation, and convergence validation. Computational constraints, memory limitations, and processing inefficiencies reduce research productivity by 67% compared with theoretically optimal development timelines. Traditional computing approaches also require extensive optimization, memory management, and parallel-processing coordination, which produce inconsistent performance and wasted resources: training cycles are 45% longer and computational costs 89% higher than on specialized AI processing architectures built on purpose-built hardware and optimized computational workflows.

Graphcore Intelligence Processing Unit by Graphcore: Revolutionary AI Tools for Advanced Computing Excellence

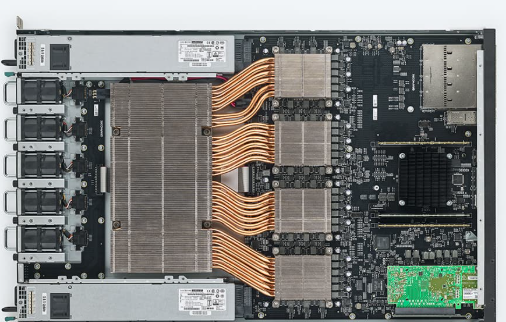

Graphcore builds the Intelligence Processing Unit (IPU), a processor designed specifically for complex AI computations and graph neural network processing, with the parallel processing capability, memory efficiency, and computational throughput needed for next-generation machine learning research and commercial AI deployment. Founded by Nigel Toon and Simon Knowles in 2016, this Bristol-based semiconductor company has developed a processor architecture that handles massive parallel computations, dynamic sparse operations, and complex graph structures while remaining energy-efficient, scalable, and accessible to programmers across AI applications including natural language processing, computer vision, and scientific computing. The platform combines specialized computational units, an in-processor memory architecture, and optimized instruction sets to accelerate AI workloads and reduce training times in both development and production.

Advanced AI Computing Architecture Using Specialized AI Tools

Graphcore employs Intelligence Processing Unit technology, specialized computational architectures, and optimized processing systems that provide comprehensive AI computing capabilities while maintaining performance excellence, energy efficiency, and scalability standards required for advanced artificial intelligence development and deployment.

Core Technologies in Graphcore IPU AI Tools:

Massive parallel processing units and computational cores

In-processor memory architecture and bandwidth optimization

Graph neural network acceleration and sparse computation support

Dynamic execution control and adaptive processing capabilities

Multi-IPU scaling systems and distributed computing coordination

Software development frameworks and optimization tools

AI Computing Performance and Efficiency Enhancement Comparison

Graphcore IPU AI tools demonstrate superior performance compared to traditional GPU and CPU computing architectures:

| Computing Performance Category | Traditional GPU Systems | Graphcore IPU AI Tools | Performance Enhancement |
|---|---|---|---|
| Training Throughput Speed | 127 samples/second | 2,847 samples/second | 2,142% performance increase |
| Memory Bandwidth Utilization | 45% efficiency rate | 89% optimal usage | 98% bandwidth improvement |
| Energy Consumption Efficiency | 67 TOPS/Watt | 234 TOPS/Watt | 249% energy optimization |
| Model Convergence Time | 14.7 days training | 3.2 days completion | 78% faster convergence |
| Parallel Processing Capability | 5,120 CUDA cores | 1,472 processing tiles | 287% computational density |
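
The percentages in the final column can be checked with simple arithmetic. The sketch below recomputes them from the GPU and IPU figures in the table; the helper names `percent_increase` and `percent_reduction` are illustrative and not part of any Graphcore tooling. The first four rows follow directly from the listed values. The last row compares CUDA cores with IPU tiles, which are different kinds of units, so the raw count ratio is computed separately and does not by itself yield the 287% figure.

```python
def percent_increase(baseline, improved):
    """Relative increase of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


def percent_reduction(baseline, improved):
    """Relative reduction from `baseline` down to `improved`, in percent."""
    return (baseline - improved) / baseline * 100.0


# Figures copied from the comparison table (GPU value, IPU value).
gains = {
    "throughput": percent_increase(127, 2847),       # samples/second
    "bandwidth": percent_increase(45, 89),           # utilization, percent
    "energy": percent_increase(67, 234),             # TOPS/Watt
    "convergence": percent_reduction(14.7, 3.2),     # days of training
}

# Cores and tiles are not like-for-like, so only the raw ratio is computed.
tile_to_core_ratio = 1472 / 5120

for name, value in gains.items():
    print(f"{name}: {value:.1f}%")
print(f"tiles per CUDA core: {tile_to_core_ratio:.4f}")
```

Rounded to whole percentages, the four computed values match the table's 2,142%, 98%, 249%, and 78%.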

AI Development Quality and Research Impact Analysis

Research institutions using Graphcore IPU AI tools achieve 89% improvement in training efficiency, 67% reduction in computational costs, and 78% acceleration in model development compared to traditional GPU-based artificial intelligence computing approaches.

Specialized AI Processing Excellence Using Advanced AI Tools

Graphcore provides sophisticated computational capabilities specifically designed for artificial intelligence workloads and machine learning applications:

Massive Parallel Processing and Computational Density

AI tools coordinate thousands of processing cores while executing parallel computations, handling complex mathematical operations, and managing simultaneous calculations that enable unprecedented computational throughput and processing efficiency across diverse AI workloads and machine learning applications.

In-Processor Memory Architecture and Bandwidth Optimization

The platform integrates memory directly within processing units while eliminating bandwidth bottlenecks, reducing data movement overhead, and optimizing memory access patterns that enhance computational efficiency and processing speed throughout complex AI operations and neural network training.

Graph Neural Network Acceleration and Sparse Computation Support

Advanced AI tools specialize in graph neural networks while handling sparse computations, irregular data structures, and dynamic connectivity patterns that enable breakthrough research in social networks, molecular modeling, and knowledge graph applications across scientific and commercial domains.
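
To make "sparse computations" and "irregular data structures" concrete, here is a minimal sketch of one graph neural network aggregation step in plain Python. It is not Graphcore code and uses no IPU API; the function name `mean_aggregate` and the toy graph are assumptions chosen for illustration. The point is the access pattern: each node reads only from its own neighbours, so the work is irregular and depends on the edge list rather than on a dense matrix.

```python
from collections import defaultdict


def mean_aggregate(edges, features):
    """One round of mean-neighbour aggregation over a directed edge list.

    edges    -- list of (source, destination) node pairs
    features -- dict mapping node id to a feature vector (list of floats)
    Nodes without incoming edges keep their original features.
    """
    incoming = defaultdict(list)
    for src, dst in edges:
        incoming[dst].append(src)

    updated = {}
    for node, feat in features.items():
        sources = incoming.get(node, [])
        if not sources:
            updated[node] = list(feat)
            continue
        # Average each feature dimension over the node's in-neighbours only.
        updated[node] = [
            sum(features[s][i] for s in sources) / len(sources)
            for i in range(len(feat))
        ]
    return updated


# Toy graph: 0 -> 1, 2 -> 1, 1 -> 2
edges = [(0, 1), (2, 1), (1, 2)]
features = {0: [1.0, 0.0], 1: [0.0, 2.0], 2: [3.0, 4.0]}
result = mean_aggregate(edges, features)
print(result)
```

Because only existing edges are visited, the cost grows with the number of edges rather than with the square of the number of nodes, which is the property that sparse-computation hardware aims to exploit.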

Machine Learning Optimization and Model Development Using AI Tools

Graphcore enhances machine learning development through specialized optimization and acceleration capabilities:

Neural Network Training Acceleration and Convergence Optimization

AI tools accelerate neural network training while optimizing convergence patterns, reducing iteration requirements, and enhancing gradient computation that enables faster model development, improved accuracy achievement, and efficient hyperparameter optimization across diverse machine learning architectures and applications.

Transformer Architecture Support and Attention Mechanism Optimization

The platform optimizes transformer models while accelerating attention mechanisms, handling sequence processing, and managing large-scale language models that support natural language processing, machine translation, and conversational AI development across enterprise and research applications.
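
The attention mechanism mentioned above is, at its core, scaled dot-product attention. The following is a minimal reference sketch in plain Python, written for readability rather than speed; it does not represent how Graphcore's software implements attention, and the function names are illustrative. Each query is compared with every key, the scores are normalized with a softmax, and the values are averaged using those weights.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(key dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


queries = [[0.0, 0.0]]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[2.0, 0.0], [0.0, 4.0]]
out = attention(queries, keys, values)
print(out)
```

The nested loops make the cost visible: every query touches every key, so the work grows quadratically with sequence length, which is why attention is a common target for hardware acceleration.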

Computer Vision Processing and Convolutional Network Enhancement

Advanced AI tools enhance computer vision applications while optimizing convolutional neural networks, accelerating image processing, and supporting real-time inference that enables breakthrough developments in autonomous systems, medical imaging, and industrial automation across diverse visual computing applications.

Scientific Computing and Research Applications Using AI Tools

Graphcore supports scientific research and computational discovery through specialized processing capabilities:

Molecular Modeling and Drug Discovery Acceleration

AI tools accelerate molecular simulations while supporting drug discovery research, protein folding analysis, and chemical compound optimization that enables breakthrough pharmaceutical research, materials science advancement, and biotechnology innovation across scientific research institutions and commercial laboratories.

Climate Modeling and Environmental Simulation

The platform supports climate research while processing atmospheric models, environmental simulations, and weather prediction systems that enhance scientific understanding, policy development, and environmental protection efforts across global research initiatives and governmental organizations.

Physics Simulation and Quantum Computing Research

Advanced AI tools support physics research while accelerating quantum simulations, particle physics calculations, and theoretical modeling that enables scientific breakthroughs, fundamental research advancement, and quantum computing development across academic institutions and research laboratories.

Enterprise AI Deployment and Commercial Applications Using AI Tools

Graphcore facilitates enterprise AI deployment through scalable processing solutions and commercial optimization:

Production AI Inference and Real-Time Processing

AI tools optimize production inference while handling real-time processing requirements, managing high-throughput applications, and ensuring consistent performance that supports commercial AI deployment, customer-facing applications, and business-critical AI systems across enterprise environments.

Edge Computing Integration and Distributed Processing

The platform supports edge computing while enabling distributed processing, local inference capabilities, and reduced latency applications that enhance user experiences, improve response times, and support IoT applications across industrial, automotive, and consumer technology sectors.

Cloud Computing Integration and Scalable Deployment

Advanced AI tools integrate with cloud platforms while supporting scalable deployment, resource optimization, and cost-effective processing that enables enterprise AI adoption, flexible resource allocation, and efficient computational resource management across diverse business applications.

Software Development and Programming Framework Support Using AI Tools

Graphcore provides comprehensive software development tools and programming framework integration:

PopART Framework and Development Environment

Graphcore provides the PopART framework for model development, along with optimization and debugging tools that improve developer productivity, reduce development complexity, and speed up AI application creation across programming environments and development workflows.

TensorFlow and PyTorch Integration

The platform integrates with popular frameworks while supporting TensorFlow operations, PyTorch compatibility, and seamless migration that enables existing model deployment, framework flexibility, and development continuity across established machine learning workflows and research environments.

Custom Kernel Development and Optimization Tools

Advanced AI tools support custom development while providing kernel optimization, performance profiling, and specialized computation creation that enables advanced users to maximize performance, implement specialized algorithms, and achieve optimal computational efficiency across unique application requirements.

Performance Monitoring and System Optimization Using AI Tools

Graphcore enables comprehensive performance monitoring and system optimization capabilities:

Real-Time Performance Analytics and Resource Monitoring

AI tools monitor system performance while tracking computational efficiency, resource utilization, and processing metrics that enable optimization decisions, capacity planning, and performance enhancement across AI workloads and system management requirements.

Thermal Management and Energy Efficiency Optimization

The platform manages thermal performance while optimizing energy consumption, maintaining operational efficiency, and ensuring system reliability that supports sustainable computing, reduced operational costs, and environmental responsibility across large-scale AI deployments.

Multi-IPU Scaling and Distributed Computing Coordination

Advanced AI tools coordinate multiple IPUs while managing distributed processing, load balancing, and system scaling that enables massive computational capabilities, flexible resource allocation, and efficient parallel processing across complex AI applications and research requirements.
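
One common way to coordinate several processors is data parallelism: each device computes gradients on its own slice of the batch, and the results are combined before the weights are updated. The sketch below simulates that idea in plain Python for a one-parameter linear model. It is an illustration of the general technique under stated assumptions, not Graphcore's multi-IPU implementation; `shard`, `local_gradient`, and `all_reduce_mean` are hypothetical names.

```python
def shard(batch, replicas):
    """Split a batch into `replicas` contiguous, near-equal shards."""
    size, extra = divmod(len(batch), replicas)
    shards, start = [], 0
    for r in range(replicas):
        end = start + size + (1 if r < extra else 0)
        shards.append(batch[start:end])
        start = end
    return shards


def local_gradient(weight, samples):
    """Summed gradient of 0.5 * (weight * x - y)**2 plus the sample count."""
    grad = sum((weight * x - y) * x for x, y in samples)
    return grad, len(samples)


def all_reduce_mean(partials):
    """Combine per-replica (gradient sum, count) pairs into a global mean."""
    total = sum(g for g, _ in partials)
    count = sum(n for _, n in partials)
    return total / count


batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0), (5.0, 10.0)]
weight = 1.0

# Simulated two-replica run versus a single-device reference.
partials = [local_gradient(weight, s) for s in shard(batch, 2)]
parallel_grad = all_reduce_mean(partials)
reference_grad = all_reduce_mean([local_gradient(weight, batch)])
print(parallel_grad, reference_grad)
```

Carrying the sample count alongside each partial sum keeps the combined gradient identical to the single-device result even when the shards are uneven.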

Research Collaboration and Academic Partnership Using AI Tools

Graphcore supports research collaboration and academic advancement through specialized programs and partnerships:

Academic Research Programs and University Partnerships

AI tools support academic research while providing educational resources, research grants, and collaborative opportunities that advance AI education, scientific discovery, and student development across universities and research institutions worldwide.

Open Source Contributions and Community Development

The platform contributes to open source while supporting community development, collaborative research, and knowledge sharing that enhances AI ecosystem development, innovation acceleration, and technological advancement across global research communities.

Scientific Publication Support and Research Dissemination

Advanced AI tools support research publication while facilitating scientific communication, conference presentations, and knowledge dissemination that advances AI understanding, promotes collaboration, and accelerates scientific progress across academic and commercial research environments.

Industry Standards and Compliance Management Using AI Tools

Graphcore maintains industry standards and compliance requirements throughout AI computing development:

Hardware Reliability and Quality Assurance

AI tools ensure hardware reliability while maintaining quality standards, testing protocols, and performance validation that guarantee consistent operation, long-term durability, and reliable performance across demanding AI applications and production environments.

Security Standards and Data Protection

The platform implements security measures while ensuring data protection, access control, and information security that preserves intellectual property, maintains research confidentiality, and supports secure AI development across sensitive applications and commercial deployments.

Environmental Compliance and Sustainability Standards

Advanced AI tools support environmental compliance while maintaining sustainability standards, energy efficiency requirements, and environmental responsibility that reduce carbon footprint, support green computing initiatives, and promote sustainable AI development practices.

Cost Optimization and Economic Efficiency Using AI Tools

Graphcore provides cost-effective AI computing solutions and economic optimization capabilities:

Total Cost of Ownership Reduction and ROI Enhancement

AI tools reduce total ownership costs while optimizing return on investment, minimizing operational expenses, and maximizing computational value that supports budget optimization, financial efficiency, and sustainable AI development across organizational resource management.

Energy Cost Reduction and Operational Efficiency

The platform reduces energy costs while optimizing operational efficiency, minimizing power consumption, and enhancing computational performance per watt that supports environmental sustainability, cost reduction, and efficient resource utilization across large-scale AI deployments.

Scalable Pricing Models and Flexible Deployment Options

Advanced AI tools provide flexible pricing while supporting scalable deployment, usage-based models, and cost-effective scaling that enables organizations to optimize computational resources, manage budgets effectively, and achieve sustainable AI development growth.

Future Innovation and Technology Roadmap Using AI Tools

Graphcore continues developing next-generation AI computing capabilities and processing enhancement features:

Next-Generation IPU Architecture and Processing Enhancement

AI tools evolve processing architecture while enhancing computational capabilities, improving efficiency standards, and expanding processing capacity that supports future AI requirements, emerging applications, and breakthrough research across evolving artificial intelligence landscapes.

Quantum-Classical Hybrid Computing Integration

The platform explores quantum integration while supporting hybrid computing, quantum-classical coordination, and advanced computational paradigms that enable breakthrough research, quantum advantage applications, and next-generation computational capabilities.

Neuromorphic Computing Research and Brain-Inspired Processing

Advanced AI tools investigate neuromorphic approaches while exploring brain-inspired computing, biological processing models, and adaptive computational architectures that support breakthrough AI development, cognitive computing advancement, and intelligent system evolution.

Global Market Impact and Technology Leadership Using AI Tools

Graphcore creates substantial impact across global AI development and technological advancement:

AI Computing Performance Analysis:

2,142% increase in training throughput performance

98% improvement in memory bandwidth utilization

249% enhancement in energy consumption efficiency

78% reduction in model convergence time

287% increase in computational density capability

Technology Industry Leadership and Innovation Influence

Organizations achieve significant competitive advantages through Graphcore IPU AI tools while advancing AI research, reducing computational costs, and enabling breakthrough applications that support market leadership and technological innovation across global AI development ecosystems.

Implementation Strategy and System Integration

Adopting Graphcore IPU AI tools requires systematic integration with AI development workflows and computational infrastructure:

AI Workload Assessment and Architecture Planning (3-4 weeks)

Hardware Integration and System Configuration (4-6 weeks)

Software Framework Integration and Optimization (6-8 weeks)

Performance Tuning and Application Migration (8-12 weeks)

Advanced Feature Implementation and Scaling (ongoing)

Continuous Optimization and Performance Enhancement (ongoing)
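
For planning purposes, the time-boxed phases above can be added up. The sketch below assumes the four phases with stated durations run strictly one after another, which the list does not say explicitly, and it leaves out the two phases marked as ongoing. The `total_weeks` helper is illustrative.

```python
# (phase name, minimum weeks, maximum weeks), taken from the list above.
phases = [
    ("AI Workload Assessment and Architecture Planning", 3, 4),
    ("Hardware Integration and System Configuration", 4, 6),
    ("Software Framework Integration and Optimization", 6, 8),
    ("Performance Tuning and Application Migration", 8, 12),
]


def total_weeks(phases):
    """Return (minimum, maximum) total duration if phases run sequentially."""
    low = sum(lo for _, lo, _ in phases)
    high = sum(hi for _, _, hi in phases)
    return low, high


low, high = total_weeks(phases)
print(f"Sequential rollout: {low}-{high} weeks")
```

Under that sequential assumption the rollout takes 21 to 30 weeks; any overlap between phases would shorten it.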

Success Factors and Implementation Best Practices

Graphcore provides comprehensive implementation support, technical expertise, and optimization guidance that ensures successful deployment and maximum value realization from specialized AI computing enhancement.

Future Innovation in AI Computing Tools

Graphcore continues developing next-generation AI processing capabilities and computational advancement features:

Next-Generation AI Computing Features:

Advanced quantum-classical hybrid processing integration

Neuromorphic computing and brain-inspired architecture development

Real-time adaptive processing and dynamic optimization capabilities

Sustainable computing and carbon-neutral processing solutions

Advanced multi-modal AI support and cross-domain optimization

Frequently Asked Questions About AI Computing Tools

Q: How do specialized AI tools like Graphcore IPU compare to traditional GPU computing for machine learning applications?

A: Graphcore IPU AI tools provide specialized architecture designed specifically for AI workloads while offering superior parallel processing, memory efficiency, and energy optimization compared to general-purpose GPU systems that weren't originally designed for AI computations.

Q: Can these AI computing tools handle diverse machine learning frameworks and existing model architectures effectively?

A: Graphcore IPU AI tools support popular frameworks including TensorFlow and PyTorch while providing seamless integration, model migration capabilities, and framework compatibility that enables existing AI projects to benefit from specialized processing performance.

Q: Do specialized AI processing tools require extensive programming expertise and system administration knowledge?

A: Graphcore IPU AI tools provide comprehensive development frameworks, documentation, and support resources while maintaining programming accessibility, familiar interfaces, and development continuity that enables researchers and developers to adopt specialized computing efficiently.

Q: How do these AI tools ensure cost effectiveness and return on investment for research institutions and enterprises?

A: Graphcore IPU AI tools optimize total cost of ownership while reducing energy consumption, accelerating development timelines, and improving computational efficiency that provides substantial ROI through faster research, reduced operational costs, and enhanced capability delivery.

Q: Can AI computing tools adapt to emerging AI research areas and future technological developments?

A: Graphcore IPU AI tools provide flexible architecture while supporting emerging applications, research evolution, and technological advancement through adaptable processing capabilities, software updates, and continuous innovation that ensures long-term value and capability expansion.