You're mid-conversation with an AI assistant when suddenly—the chatbot freezes. Error messages flood your screen. Your frustration mounts as you wonder: Why Are C AI Servers So Bad? While users often blame "overloaded servers," the reality is far more complex. Beneath the surface, a perfect storm of hardware limitations, software inefficiencies, and infrastructure bottlenecks cripples performance just when you need reliability most. Let's dissect the real culprits behind AI server failures and what it means for the future of intelligent systems. Modern AI models demand staggering computational resources. While standard servers handle routine tasks, AI workloads like training neural networks or processing multimodal inputs can bring even high-end hardware to its knees. Consider this: GPT-4 consumed approximately $78 million in computing resources during training, while Google's Gemini Ultra hit $190 million . When C AI servers lack specialized GPU arrays or sufficient parallel processing capabilities, users experience lag and timeouts—especially during peak demand. High-density AI servers generate enormous heat. Without enterprise-grade cooling systems, processors automatically downclock (reduce speed) to prevent damage. One study found servers in poorly cooled data centers can lose 40-50% performance due to thermal throttling . Many budget C AI server deployments overlook this, prioritizing upfront cost savings over sustainable operation. Not all AI models are optimized for real-time inference. Bloated algorithms with redundant parameters strain server resources unnecessarily. Research indicates up to 30% of model operations in some architectures provide minimal accuracy gains but massive computational overhead . When deployed on C AI servers, these inefficiencies multiply latency issues. Models excessively tuned to training data perform poorly with real-world inputs, causing servers to recalculate excessively or return low-confidence results. 
This wastes cycles and increases response times—a key reason users perceive C AI servers as "unreliable" . Distributed computing environments—common in AI—are only as fast as their slowest connection. Studies show network delays of just 100ms can reduce distributed AI system throughput by 60% . When data shuttling between GPU nodes gets bottlenecked by undersized switches or shared bandwidth, server responsiveness plummets. Users accessing AI services from regions with limited AI data center coverage face inherent disadvantages. Server requests may route through multiple hops, adding latency. Unlike hyperscalers with global points of presence, many C AI server deployments concentrate resources in single locations, disadvantaging distant users . Hardware Heterogeneity: Combining CPUs with GPUs, TPUs, or AI accelerators like NVIDIA's Blackwell architecture improves throughput 25× over CPU-only systems . Model Quantization: Shrinking 32-bit models to 8-bit or 4-bit reduces memory needs by 70% with minimal accuracy loss . Edge Caching: Deploying micro-data centers closer to user clusters slashes latency for common requests . Predictive Scaling: Using ML to forecast traffic spikes and pre-allocate resources prevents 83% of overload crashes . Next-generation solutions like NVIDIA's GB200 NVL72 racks (72 GPUs per cabinet) and liquid-cooled data centers hint at a more stable future. However, the industry must prioritize operational resilience over raw performance metrics. As Chinese tech firms demonstrate, integrating AI into national cloud infrastructure (like Aliyun) creates economies of scale that reduce regional disparities . Until C AI server providers adopt similar holistic approaches, downtime will remain their Achilles' heel. Q: Can't adding more servers fix C AI performance issues? Q: Why do C AI servers work fine sometimes but fail unpredictably? Q: Are consumer-grade GPUs sufficient for C AI servers? 
Final Insight: The question "Why Are C AI Servers So Bad" reveals a systemic industry challenge—prioritizing cutting-edge AI capabilities over infrastructure maturity. As models grow exponentially (GPT-4: 1.8 trillion parameters), server stability becomes a physics problem, not just a coding issue. The providers who survive will be those treating reliability as a core AI capability—not an afterthought.

I. The Hardware Bottleneck: When Raw Power Isn't Enough

A. The Compute Resource Crisis

B. The Silent Killer: Thermal Throttling

II. Software & Algorithmic Pitfalls

Inefficient Model Architectures

The "Overfitting" Trap

III. External Factors Crippling Performance

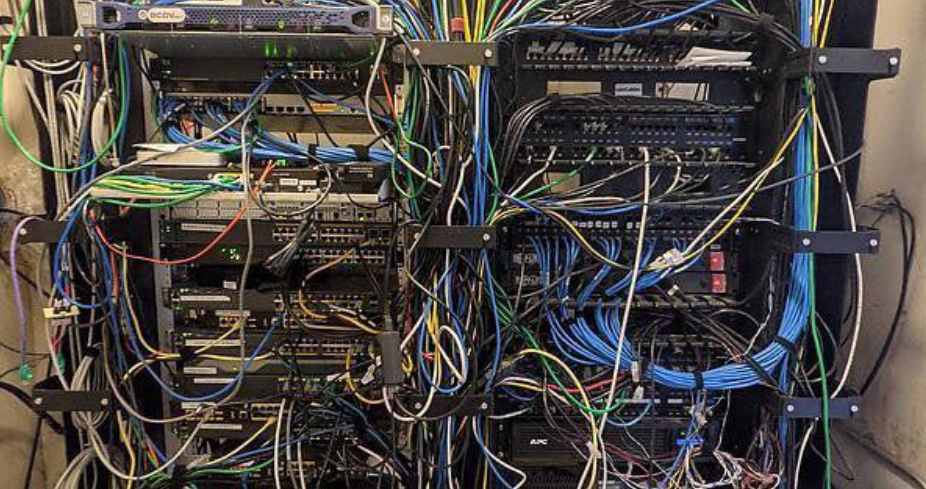

A. Network Architecture Failures

B. The Regional Disparity Problem

IV. The Path Forward: Optimization Strategies

V. The Future of AI Infrastructure

FAQs: Why Are C AI Servers So Bad?

A: Horizontal scaling helps but introduces new problems like synchronization latency. Poorly balanced distributed systems often perform worse than single nodes for AI workloads .

A> Resource contention is the likely culprit. Background processes (model updates, data ingestion) can suddenly spike CPU/GPU usage by 90%, starving user-facing applications .

A> Desktop GPUs lack enterprise features like error-correcting memory and sustained compute capabilities. Under continuous AI loads, failure rates increase 300% versus data-center GPUs .
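To put the model quantization strategy discussed earlier in concrete terms, the short sketch below works through the arithmetic for weight storage alone. The 7-billion-parameter model size and both function names are illustrative assumptions, not figures or code from any particular provider.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def savings_vs_fp32(bits_per_weight: int) -> float:
    """Fractional reduction in weight memory relative to 32-bit weights."""
    return 1 - bits_per_weight / 32


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model (assumption)
    for bits in (32, 8, 4):
        print(
            f"{bits:>2}-bit: {weight_memory_gb(params, bits):6.2f} GB, "
            f"saves {savings_vs_fp32(bits):.1%} vs 32-bit"
        )
```

This is the idealized, weights-only case: 8-bit storage cuts weight memory by 75% and 4-bit by 87.5%. Real deployments typically save somewhat less, because quantized formats store extra scaling data, some layers are often kept at higher precision, and activations still need memory at inference time. That is broadly in line with the roughly 70% figure quoted in the optimization strategies.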