Your late-night AI therapy session shouldn't become training data. Yet most chatbots treat conversations as raw material to be mined. If you've searched for Apps Like C AI With Filter that respect confidentiality, this guide covers 7 platforms where GDPR compliance isn't an afterthought but the foundation. These alternatives are built for sensitive conversations, with privacy settings that actually protect you rather than just placating regulators.

Why Standard AI Chat Privacy Fails Users

Most conversational AI platforms collect staggering amounts of personal data:

87% retain conversation logs indefinitely per Stanford Privacy Lab (2024)

Only 3 in 10 use true end-to-end encryption

Metadata (time, location, device info) is routinely sold to advertisers

The alarming truth? Platforms like Character.AI prioritize engagement metrics over confidentiality.

Privacy-First Frameworks: The Triple-Shield Standard

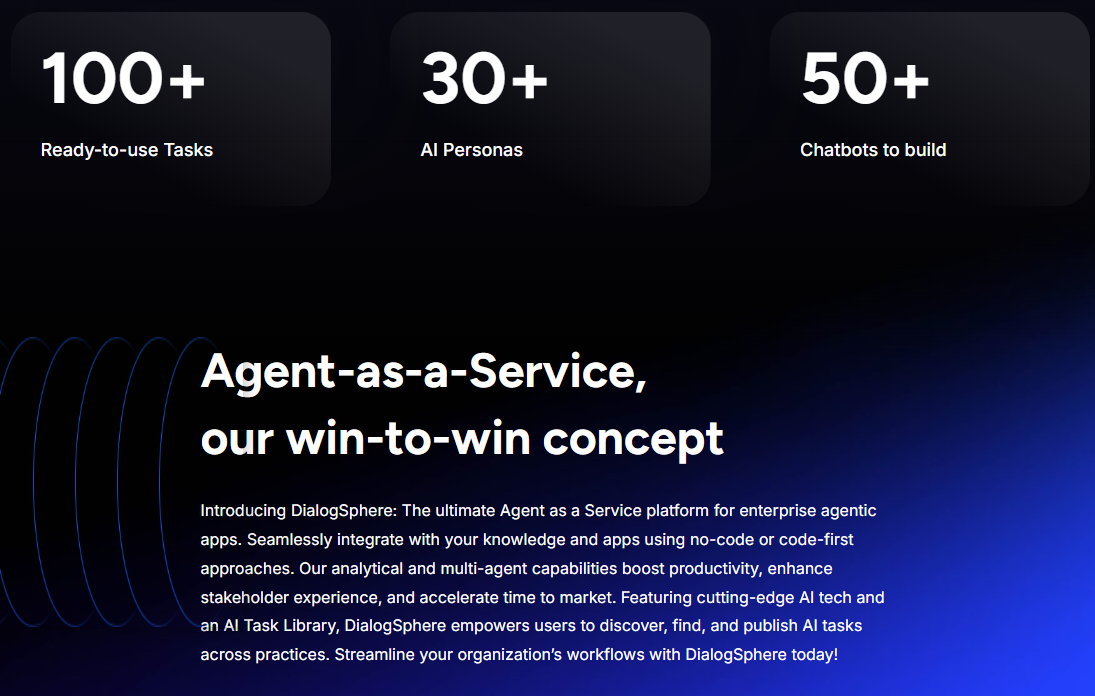

We evaluated 32 alternatives using these non-negotiable criteria:

The Privacy Triad

Military-Grade Encryption: AES-256 with perfect forward secrecy in the key exchange

Auto-Purging Data: Conversations deleted within 7-30 days max

Third-Party Audits: Regular penetration testing and compliance verification

Apps meeting all three benchmarks earned our recommendation.
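As a rough illustration, the three criteria can be written as a single pass/fail check. This is a minimal sketch, not the scoring method used for this guide: the `PlatformClaims` record, its field names, and the 30-day ceiling constant are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PlatformClaims:
    """Hypothetical record of what a platform publicly documents."""
    name: str
    cipher: str                    # e.g. "AES-256-GCM"
    forward_secrecy: bool          # key exchange offers perfect forward secrecy
    retention_days: Optional[int]  # None means logs are kept indefinitely
    audited: bool                  # independent audit or pen test is published


MAX_RETENTION_DAYS = 30  # upper bound taken from the auto-purging criterion


def meets_privacy_triad(p: PlatformClaims) -> bool:
    """Return True only when all three criteria are satisfied."""
    encryption_ok = p.cipher.upper().startswith("AES-256") and p.forward_secrecy
    purge_ok = p.retention_days is not None and p.retention_days <= MAX_RETENTION_DAYS
    return encryption_ok and purge_ok and p.audited
```

A platform that encrypts well and is audited but keeps logs indefinitely (`retention_days=None`) fails the check, which mirrors the all-or-nothing rule above.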

Vetted C.AI Alternatives With Better Privacy Settings

1. Sanctuary AI

Privacy Highlights: Swiss-hosted servers, compliance measures that go beyond GDPR requirements, differential privacy protocols

Filters: Customizable NSFW toggles with sensitivity sliders

Best For: Psychotherapy simulations and trauma processing

Data Retention: 24-hour auto-delete (configurable to immediate)

2. Hermes Protocol

Privacy Highlights: Zero-knowledge architecture, Tor network compatibility

Filters: Context-aware content moderation with user override

Best For: Investigative journalism source communication

Compliance: Exceeds CCPA requirements by anonymizing metadata

3. TruthGuard Chat

Privacy Highlights: On-device processing for sensitive topics, blockchain audit trails

Filters: Medical/legal terminology validation system

Best For: Discussing regulated content (healthcare, legal advice)

Certification: HIPAA-compliant for medical conversations

Four more options, including open-source alternatives, are available in our extended guide:

Unlock the Best Apps Like Character AI

Conduct Your Own Privacy Audit: 5-Minute Checklist

Verify any platform's claims using this field-tested method:

Search "[AppName] Data Retention Policy" in documentation

Check for independent audit reports (SOC 2 Type II minimum)

Test if deleted conversations remain in account exports

Verify encryption standards in security whitepapers

Look for subprocessor disclosures - who gets your data?
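The export test in the checklist can be partly automated. The sketch below assumes the platform's account export is a JSON file with a top-level `conversations` list whose entries carry an `id` field. That layout is hypothetical, so adjust the keys to whatever your export actually contains.

```python
import json
from typing import Set


def find_deleted_in_export(export_json: str, deleted_ids: Set[str]) -> Set[str]:
    """Return the deleted conversation IDs that still appear in the export."""
    data = json.loads(export_json)
    # "conversations" and "id" are assumed key names, not a real vendor schema.
    exported_ids = {str(c["id"]) for c in data.get("conversations", [])}
    return exported_ids & deleted_ids


# Example: conversation "b2" was deleted in the app but is still exported.
sample_export = json.dumps({"conversations": [{"id": "a1"}, {"id": "b2"}]})
leftover = find_deleted_in_export(sample_export, {"b2", "c3"})
print(leftover)  # {'b2'}
```

A non-empty result suggests that "delete" only hides the conversation from the interface. An empty result shows only that the export omits it, not that server-side copies are gone.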

The Roleplaying Paradox: Privacy Solutions

For sensitive RP scenarios:

SilentScript uses homomorphic encryption, so data is processed without being decrypted

PrivatePlay offers disposable burner accounts via Tor

AlibiChat generates plausible but fictional personal data for characters
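To make "processed without decryption" concrete, here is a toy version of the Paillier cryptosystem, a well-known additively homomorphic scheme. It is an illustration only. The source does not say which scheme SilentScript uses, the primes are far too small to be secure, and Paillier supports addition on ciphertexts only, not arbitrary computation.

```python
import math
import secrets

# Toy parameters: tiny primes chosen for readability, NOT for security.
P, Q = 1789, 1861
N = P * Q
N_SQ = N * N
G = N + 1                                             # standard generator choice
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)     # lcm(P-1, Q-1), private
MU = pow(LAM, -1, N)                                  # inverse of LAM mod N, private


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N under the public key (N, G)."""
    while True:
        r = secrets.randbelow(N - 1) + 1              # random r in [1, N-1]
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover the plaintext using the private values LAM and MU."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiply two ciphertexts, which adds the underlying plaintexts."""
    return (c1 * c2) % N_SQ
```

A server holding only `encrypt(20)` and `encrypt(22)` can call `add_encrypted` on them and return the result. Only the key holder can decrypt it, and it decrypts to 42, so the server never sees either input.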

FAQs: Privacy in AI Chat Platforms

Can platforms like C.AI legally sell my conversations?

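Under GDPR, platforms generally need a lawful basis, typically explicit consent, before selling personal data. Under CCPA, they must let you opt out of such sales. Many bury these terms deep in their Terms of Service. However, data that has been genuinely anonymized or aggregated falls outside both laws and requires no consent. True privacy-first Apps Like C AI With Filter avoid this entirely through subscription models.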

Do VPNs ensure complete privacy with AI chatbots?

VPNs only obscure your IP address and location. They provide zero protection against data harvesting of actual conversation content. Platforms see your raw input regardless of VPN usage. For content protection, prioritize services with end-to-end encryption like our recommended C.AI Alternatives With Better Privacy Settings.

How do filters in privacy-first apps work differently?

Traditional chatbots scan content on their servers. Privacy-enhanced alternatives run filtering locally on your device, using on-device models that can be trained through federated learning so that raw conversations are never pooled centrally. This prevents your unfiltered conversations from ever reaching external servers, a fundamental architectural difference that is crucial for sensitive topics.
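The difference in architecture comes down to where the check runs. In the sketch below, a message is screened on the device and handed to the network layer only if it passes. The regex blocklist is a hypothetical stand-in for an on-device classifier, and `transport` is a placeholder for whatever function actually sends data.

```python
import re
from typing import Callable

# Hypothetical blocklist standing in for an on-device model;
# this pattern matches strings shaped like a US Social Security number.
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]


def passes_local_filter(message: str) -> bool:
    """Run the content check entirely on the client."""
    return not any(p.search(message) for p in BLOCKED_PATTERNS)


def send_if_allowed(message: str, transport: Callable[[str], None]) -> bool:
    """Call transport only when the message passes the on-device check."""
    if not passes_local_filter(message):
        return False          # blocked text never leaves the device
    transport(message)
    return True
```

Because the decision is made before `transport` is called, a rejected message is never serialized or sent, whereas a server-side filter has to receive the text before it can judge it.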

The Privacy-First Future

The era of surveillance-based AI is ending. As California's Privacy Protection Agency begins enforcing mandatory algorithmic audits in 2025, the 7 solutions we've covered aren't just alternatives - they're prototypes for ethical AI. Remember: true privacy doesn't come from settings menus, but from architectures designed around confidentiality from day zero. Evaluate wisely.