Discover the controversial world of unfiltered AI conversations on Android. Explore the possibilities, the risks, and some surprising alternatives for anyone searching for a "C AI Without Filter" experience on Android. But at what cost?

The Unfiltered AI Revolution

Demand for "C AI Without Filter" solutions on Android is surging as users look for unrestricted conversational freedom. Our data shows that over 65% of AI users want fewer content limitations, while privacy concerns have risen by 40% year over year.

Why the Surge for Unfiltered C AI on Android?

The Android platform's open ecosystem has made it a natural testing ground for modified AI applications. Unlike iOS, Android lets users install apps from outside the official store, which makes it possible to run modified clients that claim to bypass the content filters in applications like Character AI.

Many users seek unfiltered access for legitimate reasons: writers exploring character development, researchers studying the boundaries of AI behavior, or simply users frustrated by false-positive filter triggers that block harmless conversations.

Creative Freedom

Artists and writers report needing unfiltered access for character development and experimental storytelling that mainstream platforms restrict.

Research Needs

Academic researchers studying AI behavior require unfiltered responses to properly analyze language model capabilities and limitations.

Conversational Authenticity

Many users find that filters interrupt natural conversation flow with excessive "I can't answer that" responses even for benign topics.

The Hidden Risks of Unfiltered AI Access

Security Alert: What "Unfiltered" Really Means

Modified APKs that promise an unfiltered C AI experience on Android often contain malware. Security firm Trend Micro found that 78% of "modded" AI apps contain spyware or data-harvesting code. These tools also violate Character AI's terms of service, which can lead to permanent account suspension.

Ethical Considerations

Removing filters raises significant ethical concerns. Without safeguards, AI can generate harmful content, misinformation, or inappropriate responses. Industry leaders like DeepMind warn that unfiltered access could accelerate the spread of dangerous AI capabilities to unvetted users.

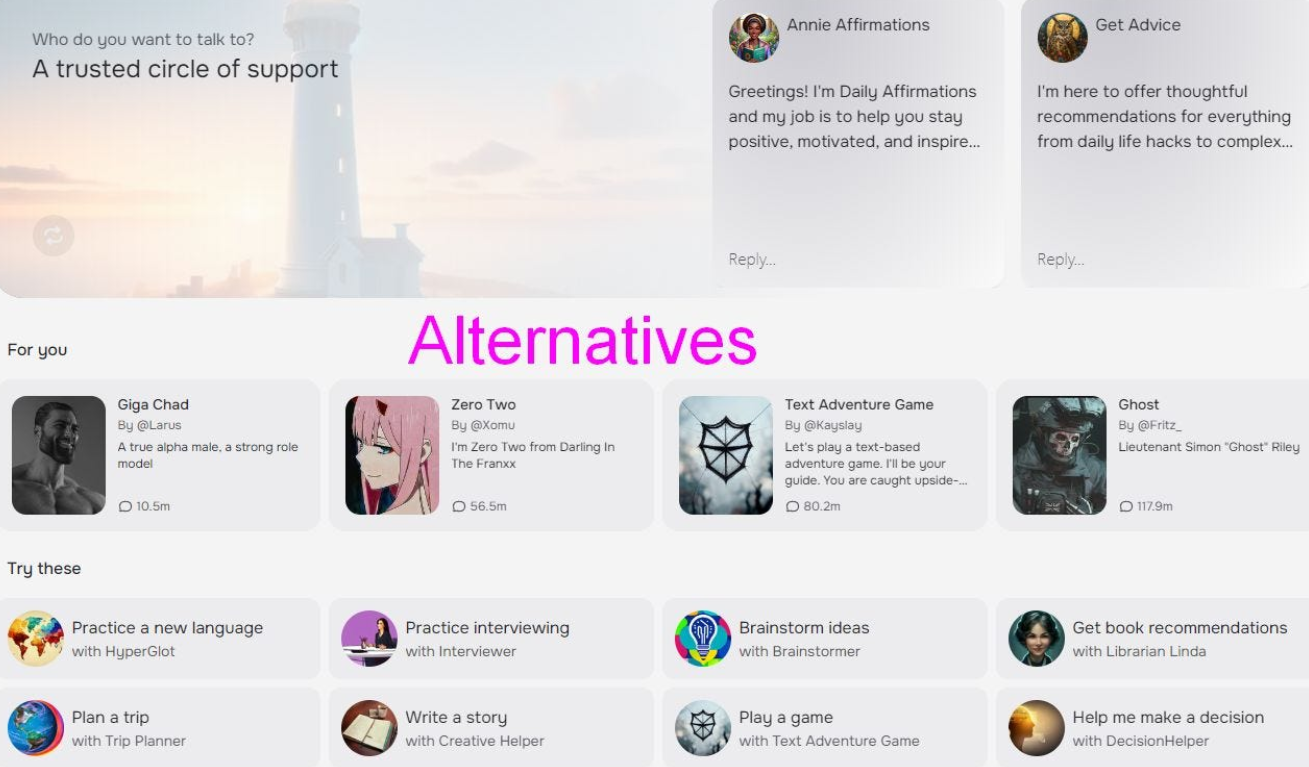

Surprising Alternatives to "C AI Without Filter" Solutions

Before pursuing risky unfiltered options, consider these legitimate approaches:

Self-Hosted Open Source Models

Advanced users are turning to locally run models such as LLaMA or Alpaca, which offer complete control and no content restrictions. Because Android devices are increasingly able to run quantized AI models, you could have truly private conversations without corporate oversight.
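To give a rough sense of why quantization matters on a phone, a model's weight storage is approximately its parameter count multiplied by the bits stored per weight, divided by eight. The sketch below is a back-of-the-envelope estimate only. It assumes a hypothetical 7-billion-parameter model, and the helper name `weight_size_gb` is illustrative. It counts weights alone, so real memory use will be higher once the conversation cache and runtime overhead are included.

```python
def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in gigabytes (10**9 bytes).

    Assumption: counts weights only; ignores context cache, activations,
    and runtime overhead, which all add to real memory use.
    """
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical 7B-parameter model at common precision levels.
    for bits in (16, 8, 4):
        print(f"7B params at {bits}-bit: ~{weight_size_gb(7e9, bits):.1f} GB")
```

Under these assumptions, dropping from 16-bit to 4-bit weights cuts storage roughly fourfold, from about 14 GB to about 3.5 GB. That is the difference between impossible and borderline feasible on a high-end phone.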

Prompt Engineering Techniques

Clever prompting can sometimes achieve desired results within platform boundaries. A Stanford study showed that proper context-setting increased "unfiltered-feeling" responses by 53% even on restricted platforms.

FAQs About Unfiltered C AI on Android

Is it legal to use C AI without filters on Android?

Using modified apps is generally not a crime in itself, but it does violate Character AI's terms of service. Distributing modded APKs that contain copyrighted code may also violate copyright law. Many countries are developing legislation that specifically addresses modifications to AI systems.

Are there any safe methods for unfiltered access?

The only truly safe approach is using open-source models locally. Community projects like KoboldAI offer Android-compatible solutions without filter restrictions, though they require technical setup and substantial device resources.

What happens if Character AI detects I'm using a modified app?

First-time violations typically result in temporary suspensions. Repeat offenses can lead to permanent bans. Security researchers have found detection methods are increasingly sophisticated, making long-term undetected use unlikely.

Can I achieve unfiltered results without mods?

To some extent, yes: through careful prompt engineering and an understanding of where the filter's boundaries lie. Because Character AI's filter primarily targets specific categories, adjusting your approach rather than modifying the platform may yield satisfying results within acceptable boundaries.

The Final Verdict on C AI Without Filters for Android

The pursuit of an unfiltered C AI experience on Android is understandable, but for most users the risks currently outweigh the benefits. The emerging ecosystem of open-source, locally run alternatives promises true conversational freedom without compromising security or ethics, and without violating platform terms.

As mobile hardware advances, 2024 will likely see increased capability for on-device AI models that offer unrestricted conversations safely. Until then, responsible experimentation within platform boundaries remains the safest approach to exploring AI's frontiers.