Looking to run Grok 3 smoothly? Whether you're a developer, researcher, or just an AI enthusiast, this guide dives into everything you need to know about Grok 3 system requirements, from hardware compatibility checks to performance optimization hacks. Spoiler: Your trusty laptop might not cut it!

Why Grok 3 Demands High-End Hardware

Grok 3 isn't your average chatbot: it's a beast built for complex reasoning, real-time data crunching, and multi-modal tasks like generating 3D animations or coding games. It was reportedly trained with about 10x the compute of Grok 2, and to keep up with it you'll need:

- NVIDIA GPUs: Preferably H100 or A100 for training; RTX 4090 for lightweight local runs.

- Massive RAM: 128GB+ to manage its reported 1.5 trillion parameters.

- Storage: SSDs with 1TB+ capacity for datasets and model caching.

But don't panic—here's how to check if your setup survives the Grok 3 storm!

Step-by-Step Hardware Compatibility Check

Step 1: Audit Your GPU Power

Grok 3 thrives on parallel processing. Use tools like GPU-Z or NVIDIA System Management Interface (nvidia-smi) to:

- Confirm the GPU model (e.g., RTX 3090 vs. H100).

- Check the CUDA core count (aim for ≥10,000); GPU-Z lists it under Shaders.

- Monitor VRAM usage during small tests.

Example: My RTX 4090 (24GB VRAM) handled basic queries but crashed with video generation. Switching to a cloud-based H100 cluster fixed it!
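If you'd rather script the audit than eyeball it, here is a minimal sketch that wraps nvidia-smi's CSV query mode. The helper names (`parse_gpu_csv`, `query_gpus`) are my own, and the parser assumes every field comes back as a plain number, which may not hold on every driver.

```python
import shutil
import subprocess

# Fields understood by `nvidia-smi --query-gpu=...`
QUERY_FIELDS = "name,memory.total,memory.used"


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        # Split from the right so a comma inside the GPU name cannot break parsing.
        name, total_mib, used_mib = [part.strip() for part in line.rsplit(",", 2)]
        gpus.append({
            "name": name,
            "vram_total_mib": int(total_mib),
            "vram_used_mib": int(used_mib),
        })
    return gpus


def query_gpus():
    """Return GPU info, or an empty list when nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_csv(result.stdout) if result.returncode == 0 else []


if __name__ == "__main__":
    for gpu in query_gpus():
        free = gpu["vram_total_mib"] - gpu["vram_used_mib"]
        print(f'{gpu["name"]}: {free} MiB free of {gpu["vram_total_mib"]} MiB')
```

Run it before and during a small test job; if free VRAM heads toward zero, you are about to hit the same wall my RTX 4090 did.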

Step 2: RAM & CPU Compatibility

- Minimum RAM: 64GB DDR5 for local runs.

- CPU: AMD EPYC or Intel Xeon for server setups; Ryzen 9 7950X for personal use.

- Check compatibility: Use HWiNFO to confirm the GPU is running at its full PCIe link width and generation (e.g., x16 rather than x8).
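For a quick scripted version of the RAM and CPU check, something like the sketch below can work on Linux and other POSIX systems. The 64 GiB threshold comes from the list above; the 16-CPU threshold is my own assumption (the Ryzen 9 7950X has 16 cores), and `check_host` is a hypothetical helper name.

```python
import os


def total_ram_gib():
    """Physical RAM in GiB via POSIX sysconf; None where unsupported (e.g. Windows)."""
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    return pages * page_size / 2**30


def check_host(ram_gib, cores, min_ram_gib=64, min_cores=16):
    """Return a list of shortfalls; an empty list means the host passes."""
    problems = []
    if ram_gib is not None and ram_gib < min_ram_gib:
        problems.append(f"RAM {ram_gib:.0f} GiB < {min_ram_gib} GiB")
    if cores is not None and cores < min_cores:
        problems.append(f"{cores} logical CPUs < {min_cores}")
    return problems


if __name__ == "__main__":
    # os.cpu_count() reports logical CPUs, so SMT threads are included.
    issues = check_host(total_ram_gib(), os.cpu_count())
    print("OK" if not issues else "; ".join(issues))
```

This only covers RAM and core count; PCIe link details still need a tool like HWiNFO.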

Step 3: Storage Speed & Capacity

Grok 3's real-time data ingestion requires:

- NVMe SSDs: ≥7 GB/s sequential read/write speeds.

- RAID Configuration: Stripe multi-drive setups (e.g., RAID 0) to avoid bottlenecks.
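To get a rough feel for whether a drive is anywhere near that read target, here is a small sketch that writes a temporary file and times reading it back. Treat the number as an optimistic upper bound: the operating system's page cache will likely serve part of the read, so a dedicated tool such as fio gives far more trustworthy results. The function name and default sizes are my own choices.

```python
import os
import tempfile
import time


def sequential_read_gbps(size_mib=256, chunk_mib=4, directory=None):
    """Write a temp file, then time one sequential pass over it; returns GB/s."""
    chunk_bytes = chunk_mib * 2**20
    chunk = os.urandom(chunk_bytes)
    with tempfile.NamedTemporaryFile(dir=directory, delete=False) as f:
        path = f.name
        for _ in range(size_mib // chunk_mib):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # make sure the data has reached the drive
    try:
        start = time.perf_counter()
        total = 0
        with open(path, "rb", buffering=0) as f:
            while True:
                data = f.read(chunk_bytes)
                if not data:
                    break
                total += len(data)
        elapsed = max(time.perf_counter() - start, 1e-9)
    finally:
        os.remove(path)
    return total / elapsed / 1e9


if __name__ == "__main__":
    print(f"~{sequential_read_gbps():.2f} GB/s (cache-inflated upper bound)")
```

Pass `directory=` to point the test at the drive you actually plan to store model files on.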

Step 4: Network Requirements

For cloud-based usage:

- Bandwidth: ≥1 Gbps for seamless API calls.

- Latency: <50 ms to prevent voice/video lag.
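The latency target is easy to sanity-check from your own machine. The sketch below times how long a TCP connection takes to open, which roughly approximates one network round trip; it does not measure full API response time. Both function names are hypothetical, and the host you test against is up to you.

```python
import socket
import statistics
import time


def tcp_connect_ms(host, port, samples=5, timeout=2.0):
    """Median time in milliseconds to open a TCP connection to host:port."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        with socket.create_connection((host, port), timeout=timeout):
            pass  # connection established; close it straight away
        timings.append((time.perf_counter() - start) * 1000)
    return statistics.median(timings)


def within_budget(latency_ms, budget_ms=50.0):
    """True when the measured latency is under the 50 ms target."""
    return latency_ms < budget_ms
```

For example, `within_budget(tcp_connect_ms("api.example.com", 443))` would check a placeholder endpoint; swap in the hostname of the API you actually call.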

Step 5: Benchmark Your Setup

Run a sample task (e.g., solving a 24-game puzzle) using Grok 3's CLI tool. If it takes >5 minutes, your hardware's struggling!
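If you want the five-minute rule applied automatically, a tiny timing harness is enough. In this sketch `sample_task` is a hypothetical stand-in: replace its body with whatever call sends your prompt to Grok 3 and waits for the answer.

```python
import time


def run_benchmark(task, limit_s=300.0):
    """Run task() once; return (result, elapsed seconds, finished within limit)."""
    start = time.perf_counter()
    result = task()
    elapsed = time.perf_counter() - start
    return result, elapsed, elapsed <= limit_s


def sample_task():
    # Hypothetical placeholder workload, NOT a real Grok 3 call.
    return sum(i * i for i in range(100_000))


if __name__ == "__main__":
    _, seconds, ok = run_benchmark(sample_task)
    print(f"{seconds:.2f}s:", "fine" if ok else "your hardware's struggling")
```

Running the same task a few times and comparing the timings helps separate a slow machine from a one-off hiccup.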

Optimization Hacks for Grok 3

Hack 1: Memory Management

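- Use CUDA-aware MPI: For multi-GPU setups, so GPU buffers can be exchanged without staging through host memory.

- Enable FlashAttention-2: Reduces VRAM usage by roughly 30%; the saving grows with sequence length.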
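To see why FlashAttention-style kernels help, it's worth working out what standard attention has to hold in memory. A naive implementation materializes a full seq_len x seq_len score matrix per head, while FlashAttention computes attention in tiles and avoids storing that matrix. The sketch below is plain arithmetic; the batch size and head count are illustrative assumptions, not Grok 3's real configuration.

```python
def attention_scores_bytes(batch, heads, seq_len, bytes_per_elem=2):
    """Memory for one layer's full (seq_len x seq_len) attention score matrix."""
    return batch * heads * seq_len * seq_len * bytes_per_elem


# Assumed shape: batch of 1, 32 heads, FP16 scores (2 bytes each).
for seq_len in (2048, 8192, 32768):
    gib = attention_scores_bytes(batch=1, heads=32, seq_len=seq_len) / 2**30
    print(f"seq_len={seq_len:>6}: {gib:.2f} GiB per layer")
```

Because the cost grows with the square of the sequence length, the memory you save depends heavily on how long your prompts are, so any single percentage is only a rough guide.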

Hack 2: Quantization & Pruning

- 8-bit Quantization: Roughly halves model size relative to FP16 weights (trade-off: minor accuracy loss).

- Layer Pruning: Remove redundant weights within layers via PyTorch's pruning utilities (torch.nn.utils.prune).
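A quick back-of-the-envelope check puts the quantization numbers in perspective. Weight storage is simply parameter count times bits per parameter, so going from 16-bit to 8-bit weights cuts storage by 50%. The sketch below ignores activations, KV cache, and quantization metadata, and the 7-billion-parameter model is just an illustrative size.

```python
def weights_gb(num_params, bits_per_param):
    """Storage for the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


def reduction_pct(bits_from, bits_to):
    """Percentage size reduction when moving between two precisions."""
    return 100 * (1 - bits_to / bits_from)


if __name__ == "__main__":
    print(weights_gb(7e9, 16), "GB at FP16 for a 7B-parameter model")
    print(weights_gb(7e9, 8), "GB at INT8 for the same model")
    print(reduction_pct(16, 8), "% smaller going from 16-bit to 8-bit")
    # Using the 1.5 trillion parameter figure quoted earlier in this guide:
    print(weights_gb(1.5e12, 8), "GB at INT8")
```

Even at 8 bits, a model at the 1.5 trillion parameter scale would need around 1,500 GB for weights alone, which is why quantization by itself won't squeeze it onto a single 24GB card.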

Hack 3: Distributed Computing

- Horovod Framework: Splits training batches across 4+ GPUs (data parallelism).

- AWS/GCP Spot Instances: Cost-effective for large-scale runs, as long as the job can tolerate being interrupted.
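The idea behind Horovod-style data parallelism fits in a few lines: every worker processes its own slice of the batch, then the per-worker results are averaged so all workers end up with the same value. The sketch below simulates that in pure Python and does not call Horovod itself; in a real job, `hvd.init()`, `hvd.rank()`, and `hvd.size()` supply the rank and worker count, and the averaging is an allreduce over the network. The function names here are my own.

```python
def shard(items, rank, world_size):
    """Give each worker every world_size-th item, starting at its rank."""
    return items[rank::world_size]


def allreduce_mean(per_worker_values):
    """Average one value per worker, as an allreduce-with-mean would."""
    return sum(per_worker_values) / len(per_worker_values)


batch = list(range(16))
world_size = 4  # simulated GPU count

# Each simulated GPU computes a local statistic on its own shard...
local_means = [
    sum(part) / len(part)
    for part in (shard(batch, rank, world_size) for rank in range(world_size))
]
# ...and the averaged value is what every worker would hold after the allreduce.
global_mean = allreduce_mean(local_means)
print(local_means, global_mean)
```

With equal-sized shards, the average of the local means matches the mean over the whole batch, which is why the workers stay in sync.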

Top 3 Tools for Grok 3 Optimization

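1. NVIDIA NGC: Pre-configured containers for seamless deployment.

2. Optimum Library: Hugging Face's toolkit for optimizing model inference on consumer hardware.

3. Groq LPU: Dedicated AI accelerators for 10x faster inference (if budget allows). Note that Groq is a separate company from xAI, despite the similar name.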

Common Issues & Fixes

| Problem | Solution |
|---|---|
| Out-of-Memory Errors | Use --gradient_checkpointing flag |
| Slow Response Times | Enable TensorRT for FP16 precision |
| API Timeouts | Switch to xAI's Enterprise Tier |

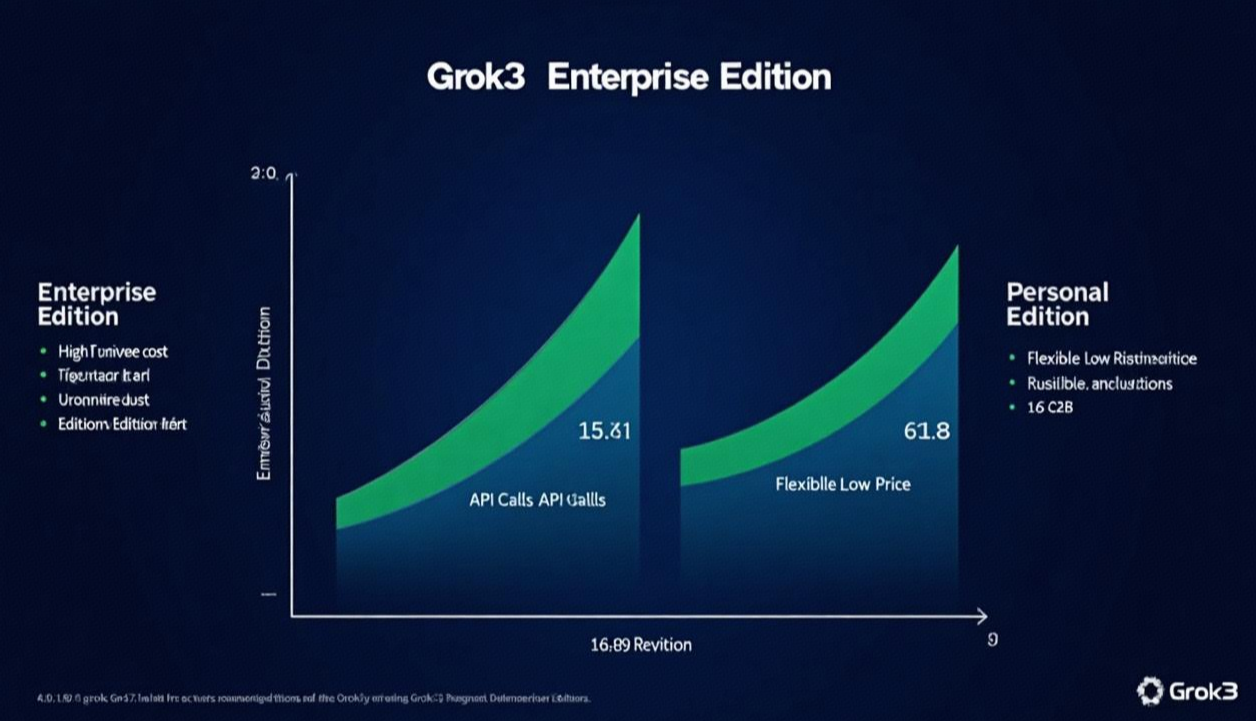

Should You Build a Grok 3 Rig?

| Use Case | Recommendation |
|---|---|
| Personal Projects | Rent an H100 cloud instance (~$30/hr) |
| Enterprise Deployment | Invest in NVIDIA DGX Station |
| Hobbyists | Stick to ChatGPT-4o for now |

Final Tips:

- Always validate data inputs. Grok 3's “truth-seeking” nature can't fix garbage data.

- Join the Grok Community on Discord for real-time troubleshooting!