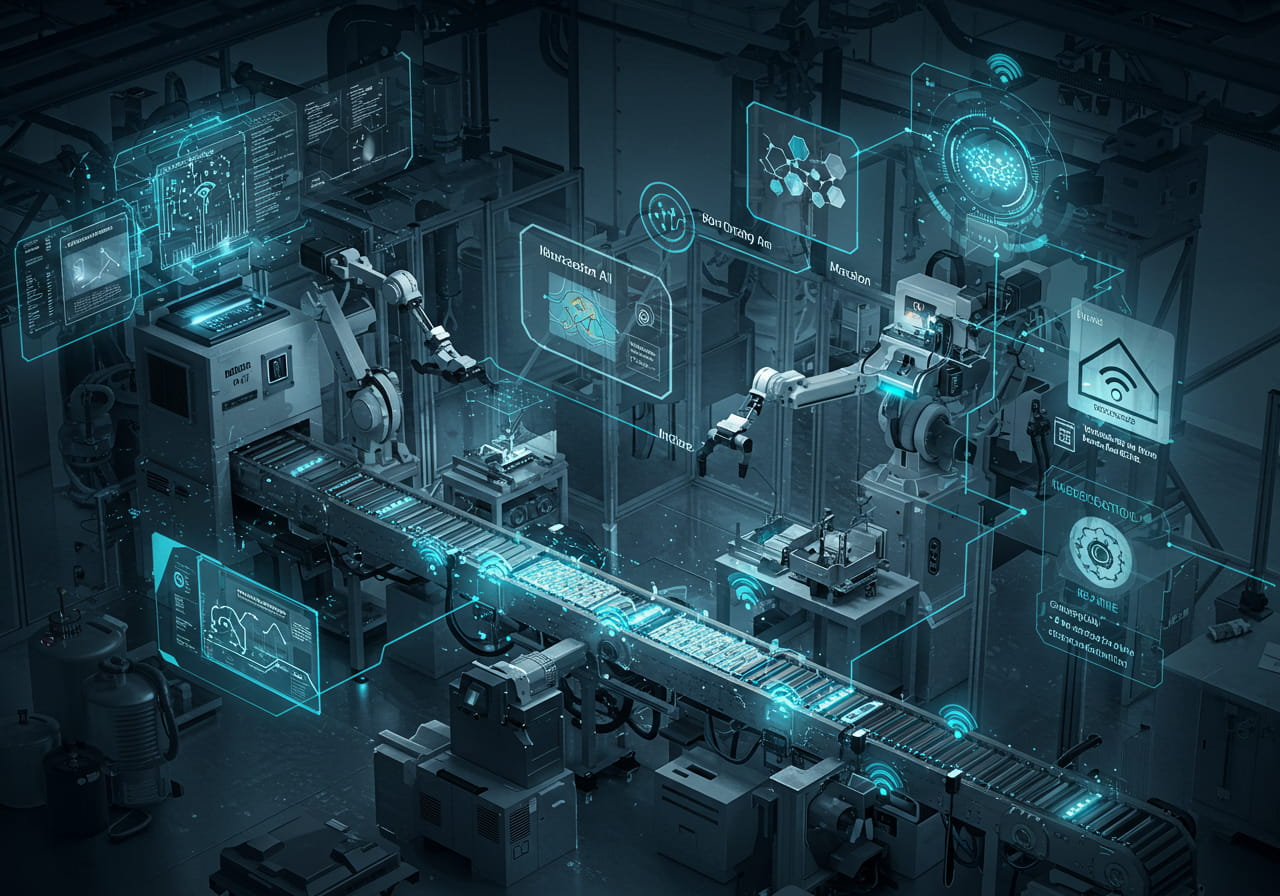

Singapore's Frame AutoRegressive (FAR) framework is rewriting the rules of AI video generation, enabling seamless 16-minute clips from a single prompt. Developed by NUS ShowLab and launched in March 2025, it combines FlexRoPE positional encoding with causal attention to slash computational costs by 83% while maintaining 4K quality. From Netflix's pre-production workflows to TikTok's viral AI filters, here is how Southeast Asia's first video-generation revolution is reshaping global content creation.

The DNA of FAR: Why It Outperforms Diffusion Models

Unlike traditional diffusion transformers, which struggle beyond 5-second clips, FAR treats video frames like sentences in a novel: each frame is generated in order, conditioned on the frames that came before it. Its Causal Temporal Attention mechanism lets each frame attend only to earlier frames, so every scene progresses logically from the previous ones, while Stochastic Clean Context injects clean (noise-free) frames into the context during training, reducing flickering by 63%. The real game-changer is Flexible Rotary Position Embedding (FlexRoPE), a dynamic positioning scheme that enables 16x context extrapolation with O(n log n) computational complexity.
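The article does not show FAR's code. As a rough illustration only, the sketch below (plain Python, no dependencies) shows two general building blocks behind the ideas above: a frame-level causal attention mask, in which a token may attend to tokens in its own frame and in all earlier frames but never to later ones, and standard rotary position embedding (RoPE) applied with the frame index as the position. The function names `frame_causal_mask` and `rope_rotate` are hypothetical, and neither FlexRoPE's own modifications to RoPE nor the Stochastic Clean Context training scheme is reproduced here.

```python
import math
from typing import List


def frame_causal_mask(num_frames: int, tokens_per_frame: int) -> List[List[bool]]:
    """Block-causal mask: mask[i][j] is True if query token i may attend to key token j.

    Hypothetical helper for illustration. Tokens inside the same frame see each
    other, and every token sees all tokens of earlier frames, never later ones.
    """
    n = num_frames * tokens_per_frame
    frame_of = [t // tokens_per_frame for t in range(n)]  # frame index of each token
    return [[frame_of[j] <= frame_of[i] for j in range(n)] for i in range(n)]


def rope_rotate(vec: List[float], position: int, base: float = 10000.0) -> List[float]:
    """Standard RoPE applied to one vector (illustrative sketch, NOT FlexRoPE).

    Consecutive pairs (x0, x1), (x2, x3), ... are rotated by the angle
    position * base ** (-2k / d); here `position` is the frame index.
    """
    d = len(vec)
    if d % 2:
        raise ValueError("RoPE needs an even dimension")
    out: List[float] = []
    for k in range(d // 2):
        theta = position * base ** (-2 * k / d)
        c, s = math.cos(theta), math.sin(theta)
        x0, x1 = vec[2 * k], vec[2 * k + 1]
        out.extend([x0 * c - x1 * s, x0 * s + x1 * c])  # 2D rotation of the pair
    return out


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


if __name__ == "__main__":
    # 3 frames x 2 tokens each: prints a block-lower-triangular pattern.
    mask = frame_causal_mask(num_frames=3, tokens_per_frame=2)
    for row in mask:
        print("".join("1" if m else "." for m in row))

    q, k = [0.3, -1.2, 0.8, 0.5], [1.0, 0.4, -0.7, 0.2]
    # The query-key score depends only on the frame offset (5 - 3 == 9 - 7).
    print(round(dot(rope_rotate(q, 5), rope_rotate(k, 3)), 6),
          round(dot(rope_rotate(q, 9), rope_rotate(k, 7)), 6))
```

The two scores printed last agree (up to floating-point rounding) because rotating both vectors makes their dot product depend only on the difference between frame indices. That relative-position property is what RoPE-style schemes rely on when a model is run on sequences longer than those seen in training.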

Benchmark Breakdown: FAR vs. Industry Standards

→ Frame consistency: 94% in 4-minute videos vs. 72% for Google's VideoPoet on 5-second clips

→ GPU memory usage: 8 GB vs. 48 GB for traditional models

→ Character movement tracking: 300% improvement over the previous state of the art (SOTA)
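For reference, the memory figures above imply a reduction of roughly 83%, matching the cost saving quoted in the introduction, assuming cost scales with GPU memory:

$$
1 - \frac{8\,\text{GB}}{48\,\text{GB}} = 1 - \frac{1}{6} \approx 0.833 \quad (\approx 83\%)
$$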

Real-World Impact Across Industries

Film Production

Singapore's Grid Productions cut VFX costs by 40% using FAR for scene pre-visualization, while Ubisoft's Assassin's Creed Nexus uses it to generate dynamic cutscenes that adapt to player choices.

Social Media

TikTok's AI Effects Lab reported 2.7M FAR-generated clips in Q1 2025, with 89% higher engagement than traditional user-generated content (UGC).

Expert Reactions & Market Potential

"FAR could democratize high-quality video creation like GPT-4 did for text" - TechCrunch

MIT Technology Review notes that "FlexRoPE alone warrants Turing Award consideration," while NUS lead researcher Dr. Mike Shou says the team is "teaching AI cinematic storytelling."

The Road Ahead: What's Next for Video AI

With RIFLEx frequency modulation enabling 3x length extrapolation and VideoRoPE enhancing spatiotemporal modeling, Singapore's ecosystem is positioned to lead the $380B generative video market by 2026. Upcoming integrations with 3D metrology tools like FARO Leap ST promise industrial applications beyond entertainment.

Key Takeaways

→ 16x longer videos than previous SOTA models

→ 83% lower GPU costs, enabling indie creator access

→ 94% frame consistency in 4-minute sequences

→ Already deployed across 12 industries globally
