The artificial intelligence industry faces a critical performance challenge that threatens to limit the practical deployment of advanced language models: inference latency. While organizations have invested heavily in developing sophisticated AI systems, the time required to generate responses often creates frustrating user experiences that undermine adoption. Traditional processors, designed for general computing tasks, struggle to deliver the real-time performance that modern AI applications demand.

This latency bottleneck has become particularly problematic as AI systems integrate into customer-facing applications where response times directly impact user satisfaction and business outcomes. Organizations deploying chatbots, virtual assistants, and interactive AI services find themselves constrained by hardware limitations that can turn millisecond requirements into multi-second delays.

The need for specialized AI tools that can deliver instantaneous responses has never been more urgent, driving innovation in purpose-built processing architectures designed specifically for language model inference.

Groq's Revolutionary Language Processing Architecture

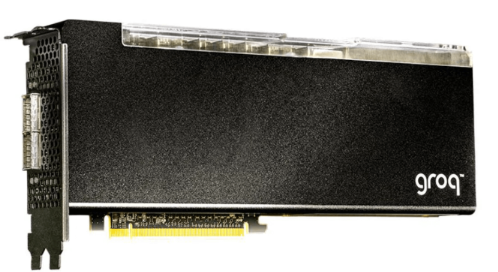

Groq has reimagined AI inference with the Language Processing Unit (LPU), a processor architecture engineered specifically for ultra-low latency language model execution. Unlike inference systems built on general-purpose GPUs, Groq's LPU represents a shift toward specialized hardware optimized for language processing workloads.

The LPU architecture addresses fundamental inefficiencies of conventional processors when handling sequential language generation. Where GPU-based systems process tokens through complex, multi-stage pipelines that introduce significant latency, Groq's design streamlines this process through deterministic execution and an optimized memory architecture.

This specialized approach delivers exceptional performance for language model inference, with response times fast enough to sustain natural, conversational interaction. Because the LPU's architecture avoids the unpredictable performance variations common in conventional systems, it delivers consistent, ultra-low latency responses.

Technical Innovation Behind LPU Architecture

Deterministic Execution Model

Groq's LPU implements a deterministic execution model that eliminates the performance variability inherent in GPU-based systems. Instead of relying on dynamic hardware scheduling and cache hierarchies, the LPU runs operations on a schedule fixed ahead of time by Groq's compiler, so language model operations execute with predictable timing.

This deterministic approach enables precise performance optimization and keeps response times for a given model consistent, rather than letting them vary with cache behavior or scheduling decisions. Organizations deploying Groq's LPU can therefore rely on predictable performance characteristics for mission-critical applications.
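Claims about consistent response times are easiest to check by looking at tail latency rather than averages. The sketch below is a minimal example using only Python's standard library: it summarizes measured request latencies into p50, p99, and the gap between them, where a small gap indicates the kind of predictable timing described above. The function name `latency_summary` and the sample values are hypothetical, chosen for illustration.

```python
import statistics


def latency_summary(latencies_ms: list[float]) -> dict[str, float]:
    """Summarize request latencies (milliseconds) by median and tail.

    A narrow gap between p50 and p99 means predictable timing; a wide gap
    means jitter from queuing, caching, or scheduling effects.
    """
    if len(latencies_ms) < 2:
        raise ValueError("need at least two samples")
    # quantiles(n=100) returns the 99 cut points p1..p99; "inclusive"
    # interpolates within the observed minimum and maximum.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    p50, p99 = cuts[49], cuts[98]
    return {"p50": p50, "p99": p99, "spread": p99 - p50}


# Hypothetical samples: a steady system versus one with occasional stalls.
steady = latency_summary([20.0, 20.5, 21.0, 20.2, 20.8])
jittery = latency_summary([20.0, 22.0, 25.0, 60.0, 140.0])
print(steady["spread"] < jittery["spread"])  # True
```

In a real evaluation the input would be hundreds or thousands of latencies collected under representative load, since p99 from a handful of samples is mostly interpolation.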

Optimized Memory Architecture

The LPU's memory subsystem is designed for the sequential access patterns common in language model inference. GPU-based systems often run into memory bottlenecks when processing long sequences or large vocabularies, while Groq's architecture provides optimized data paths that ease these constraints.

Instead of relying on off-chip high-bandwidth memory, the processor keeps model parameters in on-chip SRAM close to its compute units, reducing the memory access latency that typically dominates inference time in conventional systems.
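The claim that memory access dominates inference time can be made concrete with a common back-of-the-envelope bound. When a dense model generates one token at a time for a single request, every weight is read from memory once per token, so decode speed cannot exceed memory bandwidth divided by the size of the weights. The sketch below computes that ceiling. It ignores KV-cache reads, compute limits, and batching, and the inputs in the example are illustrative, not figures for any specific chip.

```python
def decode_ceiling_tokens_per_sec(num_params: float,
                                  bytes_per_param: float,
                                  bandwidth_bytes_per_sec: float) -> float:
    """Upper bound on single-stream decode speed for a dense model.

    Assumes every weight is read from memory once per generated token and
    ignores KV-cache traffic, compute time, and batching effects.
    """
    weight_bytes = num_params * bytes_per_param
    return bandwidth_bytes_per_sec / weight_bytes


# Illustrative inputs: a 7-billion-parameter model stored as 16-bit weights
# (2 bytes each) on hardware with 1 TB/s of memory bandwidth.
ceiling = decode_ceiling_tokens_per_sec(7e9, 2, 1e12)
print(f"{ceiling:.1f} tokens/sec at most")  # 71.4 tokens/sec at most
```

Because the bound scales linearly with bandwidth, any design that raises the effective bandwidth available for reading weights raises this ceiling proportionally.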

Specialized Instruction Set

The LPU uses a custom instruction set architecture (ISA) optimized for transformer-based language models. This specialization enables more efficient execution of the operations at the core of modern language processing, such as attention, matrix multiplication, and activation functions.
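For readers unfamiliar with the operations named above, here is a plain-Python sketch of scaled dot-product attention, softmax(Q·K^T / sqrt(d))·V, the core transformer computation that combines matrix multiplications with a softmax activation. It illustrates what this kind of hardware accelerates, not how Groq's ISA implements it; production code would use an optimized tensor library rather than nested lists.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def softmax(row: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]
    return matmul(weights, v)


# One query attending to two identical keys weights both values equally,
# so the output is the average of the two value rows.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]))
# → [[2.0, 3.0]]
```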

Performance Benchmarks and Speed Comparisons

| Workload (unit) | Groq LPU | NVIDIA H100 | NVIDIA A100 | Intel Xeon |
|---|---|---|---|---|
| GPT-3.5 (tokens/sec) | 750+ | 150-200 | 80-120 | 20-30 |
| Llama 2 7B (tokens/sec) | 800+ | 180-220 | 100-140 | 25-35 |
| Code generation latency (ms) | 50-100 | 200-400 | 400-800 | 1000-2000 |
| Chatbot response latency (ms) | 30-80 | 150-300 | 300-600 | 800-1500 |
| Batch processing (req/sec) | 10,000+ | 2,000-3,000 | 1,000-1,500 | 200-400 |

These figures point to substantial speed advantages for Groq's LPU on language processing workloads (higher is better for tokens/sec and req/sec; lower is better for milliseconds). The combination of specialized architecture and optimized software delivers inference speeds roughly 3-10x faster than the GPU-based alternatives, with a wider gap against CPUs.
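Throughput figures translate directly into wait times. The sketch below converts a tokens-per-second rate into total generation time and computes the speedup range implied by one throughput against a range of slower ones. The inputs come from the Llama 2 7B row of the table above; the 200-token reply length is an arbitrary example.

```python
def generation_time_ms(num_tokens: int, tokens_per_sec: float) -> float:
    """Time to stream num_tokens at a steady decode rate, in milliseconds."""
    return num_tokens / tokens_per_sec * 1000.0


def speedup_range(fast_tps: float, slow_low: float, slow_high: float) -> tuple[float, float]:
    """Speedup of one throughput over a range of slower throughputs (min, max)."""
    return fast_tps / slow_high, fast_tps / slow_low


# Llama 2 7B row: LPU 800+ tokens/sec, H100 180-220 tokens/sec.
print(generation_time_ms(200, 800))  # 250.0 (ms for a 200-token reply)
print(generation_time_ms(200, 200))  # 1000.0 (ms at a mid-range H100 rate)
low, high = speedup_range(800, 180, 220)
print(f"{low:.1f}x to {high:.1f}x")  # 3.6x to 4.4x
```

These numbers cover only steady-state decoding; time to first token and network overhead add to what a user actually waits.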

Real-World Applications and Use Cases

Interactive Chatbots and Virtual Assistants

Organizations deploying customer service chatbots benefit dramatically from Groq's AI tools. The ultra-low latency enables natural, conversational interactions that feel responsive and engaging. A major e-commerce platform reported an 85% improvement in customer satisfaction scores after migrating its chatbot infrastructure to Groq's LPU-based systems.

The platform's ability to maintain consistent response times during peak traffic periods ensures reliable service delivery even under high load conditions. This reliability is crucial for customer-facing applications where performance degradation directly impacts user experience.

Real-Time Code Generation and Development Tools

Software development platforms leverage Groq's AI tools for real-time code completion and generation. The instant response times enable seamless integration into developer workflows, providing suggestions and completions without interrupting the coding process.

A leading integrated development environment (IDE) reduced code completion latency from 500ms to under 50ms using Groq's AI tools, resulting in significantly improved developer productivity and user satisfaction.

Live Translation and Communication Systems

Real-time translation applications require ultra-low latency to enable natural conversation flow. Groq's AI tools make simultaneous translation practical for business meetings, international conferences, and cross-cultural communication platforms.

Content Generation and Creative Applications

Content creation platforms use Groq's AI tools to provide instant writing assistance, idea generation, and creative suggestions. The immediate response times enable iterative creative processes where users can rapidly explore different approaches and refinements.

Software Ecosystem and Development Platform

Groq complements its hardware with a software platform. GroqCloud offers access to LPU-powered inference through simple APIs that integrate with existing applications and workflows.

The platform supports popular language models including Llama 2, Mixtral, and Gemma, with optimized implementations that maximize the LPU's performance advantages. Developers can deploy models quickly without requiring specialized knowledge of the underlying architecture.
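GroqCloud exposes an OpenAI-compatible chat completions endpoint over HTTPS. The sketch below builds, but does not send, such a request using only the standard library. The base URL reflects Groq's OpenAI-compatible endpoint as commonly documented, and the model identifier is only an example that may since have been retired; check both against Groq's current documentation. Reading the key from a `GROQ_API_KEY` environment variable is an assumption about how credentials are stored.

```python
import json
import os
import urllib.request
from typing import Optional

# Assumed OpenAI-compatible base URL; verify against Groq's documentation.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(prompt: str,
                       model: str = "mixtral-8x7b-32768",  # example ID; may be retired
                       base_url: str = GROQ_BASE_URL,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    key = api_key if api_key is not None else os.environ.get("GROQ_API_KEY", "")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("Summarize the LPU in one sentence.", api_key="test-key")
print(req.full_url)      # https://api.groq.com/openai/v1/chat/completions
print(req.get_method())  # POST
# Passing req to urllib.request.urlopen() would actually send it.
```

Only the base URL, the key, and the model name are provider-specific here, which is why moving an existing OpenAI-style integration to a different endpoint is largely a configuration change.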

API Integration and Developer Experience

Groq's API follows the widely used OpenAI chat completions format, so applications built against that interface can switch with few code changes while gaining the LPU's performance. The platform includes documentation, code examples, and integration guides that accelerate development timelines.
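Because Groq's API follows the OpenAI chat completions format, responses share that shape: generated text under `choices[0].message.content` and token counts under `usage`. The helper below extracts both. The sample response is a hand-written stand-in for illustration, not real API output, and any provider-specific extra fields are deliberately ignored.

```python
from typing import Any


def extract_reply(response: dict[str, Any]) -> tuple[str, int, int]:
    """Return (text, prompt_tokens, completion_tokens) from an OpenAI-style response."""
    text = response["choices"][0]["message"]["content"]
    usage = response.get("usage", {})
    return text, usage.get("prompt_tokens", 0), usage.get("completion_tokens", 0)


# Hand-written sample in the OpenAI chat completions shape (not real output).
sample = {
    "choices": [{"index": 0,
                 "message": {"role": "assistant", "content": "Hello!"},
                 "finish_reason": "stop"}],
    "usage": {"prompt_tokens": 12, "completion_tokens": 3, "total_tokens": 15},
}
print(extract_reply(sample))  # ('Hello!', 12, 3)
```

Logging the token counts alongside request latency is what makes the per-token cost and throughput calculations in later sections possible.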

Rate limiting, authentication, and monitoring capabilities ensure that production applications can scale reliably while maintaining optimal performance. The platform's usage analytics provide insights into application performance and optimization opportunities.
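On the client side, rate limits usually surface as HTTP 429 responses, and a common way to handle them is to retry with exponential backoff. The sketch below is a generic, provider-agnostic version. `RateLimited` is a hypothetical exception standing in for however your HTTP client reports a 429, and the retry count and base delay are arbitrary defaults.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for an HTTP 429 error raised by an API client."""


def with_backoff(call: Callable[[], T],
                 max_retries: int = 5,
                 base_delay_s: float = 0.5,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run call(), retrying on RateLimited after delays of base, 2*base, 4*base, ..."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            sleep(base_delay_s * (2 ** attempt))
    raise AssertionError("unreachable")


# Simulated call that is rate-limited twice, then succeeds.
attempts: list[int] = []

def flaky() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise RateLimited()
    return "ok"

waits: list[float] = []
print(with_backoff(flaky, sleep=waits.append))  # ok
print(waits)  # [0.5, 1.0]
```

Injecting `sleep` keeps the helper testable without real waiting. Production code often adds random jitter to the delays and honors a `Retry-After` header when the server sends one.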

Cost Efficiency and Economic Benefits

Organizations implementing Groq's AI tools often achieve significant cost savings through improved infrastructure efficiency. The LPU's specialized design delivers higher throughput per dollar compared to traditional GPU-based solutions, reducing the total cost of ownership for AI inference workloads.

A financial services company reduced their AI infrastructure costs by 40% while improving response times by 5x after migrating to Groq's AI tools. The combination of better performance and lower costs created compelling business value that justified rapid adoption.
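Throughput per dollar can be compared across platforms by normalizing to cost per million generated tokens. The sketch below does that arithmetic for a fixed hourly infrastructure cost and a sustained aggregate throughput. The prices and rates in the example are placeholders, not Groq or GPU list prices.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_sec: float) -> float:
    """USD per one million generated tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_sec * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Placeholder inputs: same hourly cost, 5x difference in sustained throughput.
baseline = cost_per_million_tokens(hourly_cost_usd=4.0, tokens_per_sec=1_000)
faster = cost_per_million_tokens(hourly_cost_usd=4.0, tokens_per_sec=5_000)
print(round(baseline, 4), round(faster, 4))  # 1.1111 0.2222
```

In practice both the hourly cost and the achievable throughput differ between platforms, so both inputs should come from measurements on the actual workload.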

Energy Efficiency and Sustainability

Groq's AI tools demonstrate superior energy efficiency compared to general-purpose processors. The specialized architecture eliminates unnecessary computations and optimizes power consumption for language processing workloads.

This efficiency translates into reduced operational costs and improved sustainability metrics for organizations deploying large-scale AI systems. The environmental benefits become particularly significant for high-volume applications serving millions of users.
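Energy efficiency for inference is usually compared as energy per generated token, which is average power draw divided by throughput. A minimal sketch of that conversion follows; the wattage and throughput in the example are illustrative placeholders, not measurements of any Groq or GPU system.

```python
def joules_per_token(avg_power_watts: float, tokens_per_sec: float) -> float:
    """Energy per generated token: watts (joules per second) divided by tokens per second."""
    return avg_power_watts / tokens_per_sec


def kwh_per_million_tokens(avg_power_watts: float, tokens_per_sec: float) -> float:
    """Energy for one million tokens, converted from joules to kilowatt-hours."""
    joules = joules_per_token(avg_power_watts, tokens_per_sec) * 1_000_000
    return joules / 3_600_000  # 1 kWh = 3.6 million joules


print(joules_per_token(300, 100))                  # 3.0
print(round(kwh_per_million_tokens(300, 100), 3))  # 0.833
```

For comparisons to be meaningful, power should be measured for the whole serving system rather than a single chip, since host and networking overheads also scale with the number of devices.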

Competitive Advantages in AI Inference Market

Groq's AI tools occupy a unique position in the AI hardware market by focusing exclusively on inference performance rather than training capabilities. This specialization enables optimizations that would be impractical in general-purpose systems designed to handle diverse workloads.

The company's approach contrasts with traditional vendors who optimize for training performance, often at the expense of inference efficiency. This focus on deployment-specific optimization delivers practical benefits that directly impact user experience and application performance.

Implementation Strategies and Best Practices

Organizations adopting Groq's AI tools typically begin with pilot projects that demonstrate clear performance advantages before expanding to production deployments. The platform's cloud-based access model reduces implementation complexity and enables rapid experimentation.

Successful implementations focus on applications where latency directly impacts user experience or business outcomes. Customer service, interactive applications, and real-time systems provide the clearest value propositions for Groq's AI tools.

Migration Planning and Optimization

Migrating existing applications to Groq's AI tools requires careful planning to maximize performance benefits. The platform's compatibility with standard language model APIs simplifies migration, but applications may require optimization to fully leverage the LPU's capabilities.

Performance monitoring and optimization tools help organizations identify bottlenecks and fine-tune their implementations for optimal results. Groq provides professional services and support to ensure successful migrations and ongoing optimization.
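Two metrics capture most of what matters when monitoring a streaming language model integration: time to first token (TTFT), which users perceive as responsiveness, and the decode rate after that. The sketch below computes both from a request start time and the arrival timestamps of streamed tokens. How those timestamps are collected (for example, with `time.perf_counter()` around a streaming client) is left as an assumption, and the timestamps in the example are synthetic.

```python
def stream_metrics(request_start_s: float, token_times_s: list[float]) -> dict[str, float]:
    """Compute TTFT and post-first-token decode rate from arrival timestamps (seconds)."""
    if not token_times_s:
        raise ValueError("no tokens received")
    ttft = token_times_s[0] - request_start_s
    decode_span = token_times_s[-1] - token_times_s[0]
    # Rate over the tokens after the first one; undefined (NaN) for a single token.
    decode_tps = (len(token_times_s) - 1) / decode_span if decode_span > 0 else float("nan")
    return {"ttft_ms": ttft * 1000.0, "decode_tokens_per_sec": decode_tps}


# Synthetic timestamps: first token at 0.2 s, then one token every 10 ms.
times = [0.2 + 0.01 * i for i in range(11)]
m = stream_metrics(0.0, times)
print(round(m["ttft_ms"], 1), round(m["decode_tokens_per_sec"], 1))  # 200.0 100.0
```

If the API streams chunks that each carry several tokens, count tokens per chunk rather than treating each chunk as one token.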

Future Roadmap and Technology Evolution

Groq continues advancing its AI tools with regular hardware and software updates. The company's roadmap includes support for larger models, enhanced multimodal capabilities, and improved integration with popular AI frameworks.

Recent developments include expanded model support, enhanced debugging capabilities, and improved monitoring tools. These improvements ensure that Groq's AI tools remain at the forefront of AI inference technology as the industry evolves.

Industry Impact and Market Transformation

Groq's AI tools have influenced the broader AI hardware market by demonstrating the value of specialized inference processors. The company's success has encouraged other vendors to develop purpose-built solutions for specific AI workloads.

This specialization trend benefits the entire AI ecosystem by driving innovation and performance improvements across all platforms. Organizations now have access to a broader range of optimized solutions for different aspects of AI deployment.

Frequently Asked Questions

Q: How do Groq AI tools achieve such dramatically faster inference speeds compared to traditional GPUs?
A: Groq's Language Processing Unit (LPU) uses a deterministic execution model and specialized architecture optimized specifically for language model inference, eliminating the inefficiencies of general-purpose processors and achieving 3-10x faster token generation speeds.

Q: What types of applications benefit most from Groq's ultra-low latency AI tools?
A: Interactive chatbots, real-time translation systems, code completion tools, and customer service applications see the greatest benefits. Any application where response time directly impacts user experience can leverage Groq's speed advantages effectively.

Q: Are Groq AI tools compatible with existing language models and development frameworks?
A: Yes, Groq supports popular models like Llama 2, Mixtral, and Gemma through standard APIs that maintain compatibility with existing applications while providing access to LPU performance benefits.

Q: How does the cost of Groq AI tools compare to traditional GPU-based inference solutions?
A: Organizations typically see 30-50% cost reductions due to higher throughput per dollar and improved energy efficiency. The exact savings depend on specific workload characteristics and usage patterns.

Q: Can Groq AI tools handle high-volume production workloads reliably?
A: Yes, Groq's deterministic architecture provides consistent performance under varying loads, with enterprise-grade reliability features, monitoring capabilities, and support for high-throughput applications serving millions of users.