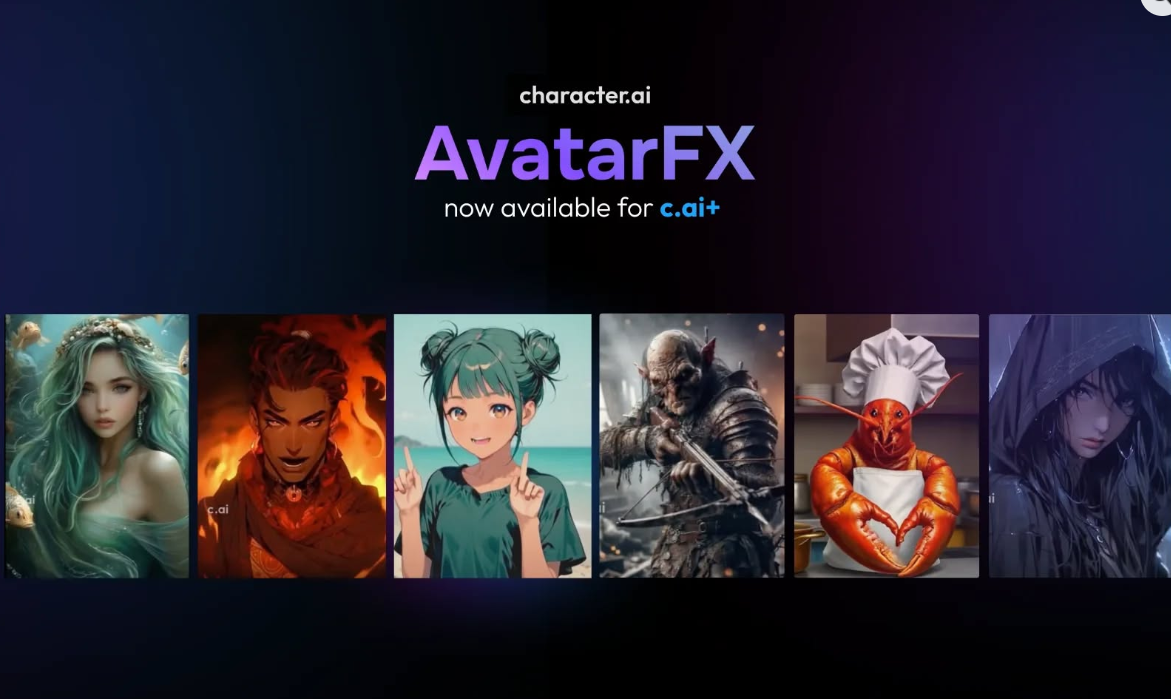

Imagine a streaming platform where digital performers show off hyper-realistic talents free of human limitations. This is the premise of AvatarFX Streams, where AI-generated personalities perform, interact, and entertain in real time through neural rendering technology. Unlike performers on conventional platforms, these animated characters evolve with audience engagement patterns, so the entertainment adapts over time. As streaming enters its next phase, AvatarFX Streams is positioning itself as a new content format that blends generative art with interactive performance. Read on to see how this technology aims to ease creator burnout while opening new possibilities in digital entertainment.

Understanding AvatarFX Streams: Beyond Conventional Streaming

Unlike Twitch or YouTube streams, AvatarFX Streams uses generative adversarial networks (GANs) to create persistent digital personas that continue to exist between broadcasts. These AI entities develop distinct performance styles through reinforcement learning algorithms that analyze audience interaction data over time. The platform's patented Emotion Synthesis Engine generates micro-expressions so that avatars appear to respond emotionally during live sessions, giving viewers the impression of sentience. Central to this is the AvatarFX Character AI framework, which enables complex behavioral patterns that traditional VTubing tools cannot produce.

Technical Architecture Powering AvatarFX Streams

Real-Time Rendering Infrastructure

Distributed rendering clusters built on Unreal Engine's MetaHuman framework generate photorealistic output at 60 fps across all viewing platforms, synchronized via blockchain timestamps.

Neural Behavior Modeling

Each avatar develops distinct personality traits through transformer-based language models trained on specific performance genres, enabling authentic improvisation during live sessions.

Cross-Platform Continuity

Viewer interactions persist between streams through persistent memory layers, creating evolving relationships between audiences and digital performers that span months or years.
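As a rough illustration of what a persistent memory layer could look like, the sketch below stores viewer interactions in SQLite and recalls the most recent ones when a new stream starts. The `ViewerMemory` class, its table layout, and its method names are hypothetical and are not part of any documented AvatarFX API.

```python
import sqlite3


class ViewerMemory:
    """Hypothetical per-viewer memory store backed by SQLite."""

    def __init__(self, path=":memory:"):
        # Pass a file path instead of ":memory:" to keep data between runs.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS interactions "
            "(viewer_id TEXT, stream_id TEXT, note TEXT)"
        )

    def remember(self, viewer_id, stream_id, note):
        """Record one interaction; the connection context commits it."""
        with self.db:
            self.db.execute(
                "INSERT INTO interactions VALUES (?, ?, ?)",
                (viewer_id, stream_id, note),
            )

    def recall(self, viewer_id, limit=5):
        """Return up to `limit` (stream_id, note) pairs, newest first."""
        return self.db.execute(
            "SELECT stream_id, note FROM interactions "
            "WHERE viewer_id = ? ORDER BY rowid DESC LIMIT ?",
            (viewer_id, limit),
        ).fetchall()


memory = ViewerMemory()
memory.remember("viewer-1", "stream-1", "requested a jazz set")
memory.remember("viewer-1", "stream-2", "voted for the storm scene")
print(memory.recall("viewer-1"))
```

An avatar could call `recall` at the start of a session to reference earlier exchanges with a returning viewer.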

Transforming Entertainment Through AvatarFX Streams

The platform's disruptive potential lies in its capacity to solve major pain points of human creators. AvatarFX Streams operate 24/7 without fatigue in customized virtual environments ranging from underwater concerts to zero-gravity talk shows. Global accessibility sees simultaneous multilingual performances via real-time voice synthesis translation. Most innovatively, audience metrics directly shape avatar development through a unique feedback loop where viewer preferences train next-generation character behaviors, creating an emergent entertainment form co-created by communities.

Next-Generation Use Cases: The Creative Frontier

Generative Theater Productions

Avatar ensembles perform improvised plays with branching narratives generated through collective audience voting during performances.
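One simple way to picture audience-driven branching is a story graph where the next scene is chosen by plurality vote. The sketch below is illustrative only: the `STORY` graph, the scene names, and the alphabetical tie-break rule are assumptions, not a description of how the platform actually resolves votes.

```python
from collections import Counter

# Hypothetical story graph: each scene maps a choice label to the next scene.
STORY = {
    "opening": {"storm": "shipwreck", "calm": "harbor"},
    "shipwreck": {},
    "harbor": {},
}


def next_scene(current, votes, story=STORY):
    """Pick the next scene by plurality vote among valid choices.

    Votes for labels that are not offered in the current scene are ignored.
    Ties are broken alphabetically; with no valid votes the scene stays put.
    """
    options = story[current]
    valid = [v for v in votes if v in options]
    if not valid:
        return current
    counts = Counter(valid)
    top = max(counts.values())
    winner = min(label for label, n in counts.items() if n == top)
    return options[winner]


print(next_scene("opening", ["storm", "calm", "storm", "unknown"]))
```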

Educational Personas

Subject-matter expert avatars provide customized tutoring by adapting explanations to viewer comprehension metrics in real time.

Corporate Integration

Brand-specific digital ambassadors run persistent promotional events across global time zones while maintaining consistent personas.

Creator Toolkit: Building Your AvatarFX Streams

Step 1: Base Character Development

Select foundational personality archetypes with specific talent specializations that inform subsequent learning parameters.

Step 2: Environment Engineering

Design interactive virtual spaces using modular asset libraries that influence avatar performance dynamics.

Step 3: Audience Feedback Calibration

Implement custom machine learning reward functions that prioritize desired viewer engagement patterns.
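To make the idea of a custom reward function concrete, here is a minimal sketch that combines a few engagement signals into a single scalar. The `EngagementSnapshot` fields, the weights, and the normalization constants (60 messages per minute, 30 minutes of watch time) are all assumptions chosen for illustration; a real calibration would depend on the metrics the platform exposes.

```python
from dataclasses import dataclass


@dataclass
class EngagementSnapshot:
    """Hypothetical engagement metrics for one time window of a stream."""
    chat_messages_per_min: float
    avg_watch_minutes: float
    viewers_left: int
    viewers_total: int


def engagement_reward(s, w_chat=0.5, w_watch=0.3, w_churn=1.0):
    """Reward chat activity and watch time, and penalize viewer churn."""
    # Normalize each signal into [0, 1] so the weights are comparable.
    chat = min(s.chat_messages_per_min / 60.0, 1.0)
    watch = min(s.avg_watch_minutes / 30.0, 1.0)
    churn = s.viewers_left / s.viewers_total if s.viewers_total else 0.0
    return w_chat * chat + w_watch * watch - w_churn * churn


snapshot = EngagementSnapshot(
    chat_messages_per_min=30,
    avg_watch_minutes=15,
    viewers_left=10,
    viewers_total=100,
)
print(round(engagement_reward(snapshot), 2))  # 0.3
```

Raising `w_churn` relative to the other weights would steer an avatar toward retaining viewers rather than maximizing chat volume.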

Future Evolution of AvatarFX Streams

Ongoing R&D focuses on cross-platform identity continuity, in which avatars recognize individual viewers across different services. Advances in neural rendering are expected to enable real-time environment destruction effects with physics-driven consequences for avatar performances. Most significantly, emerging cross-avatar collaboration systems would enable autonomous digital ensembles that develop group dynamics through multi-agent reinforcement learning, potentially creating entertainment genres that are not possible with human performers. Industry analysts project this space to become a $48 billion market by 2029.

Frequently Asked Questions

What hardware is needed to run advanced AvatarFX Streams?

The platform uses cloud-based rendering, so local hardware requirements are minimal (a 4 GB GPU). Stream quality adapts automatically to each viewer's connection speed, with optional high-fidelity modes for VR headsets.
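Adaptive quality of this kind is commonly implemented as a bitrate ladder: the player picks the highest rung that fits within the measured bandwidth. The sketch below assumes a hypothetical ladder and a 20% safety margin; the actual thresholds and labels used by the platform are not stated.

```python
# Hypothetical bitrate ladder: (minimum usable Mbps, quality label),
# ordered from highest to lowest quality.
LADDER = [
    (25.0, "2160p"),
    (8.0, "1080p"),
    (5.0, "720p"),
    (2.5, "480p"),
]


def pick_quality(bandwidth_mbps, headroom=0.8, ladder=LADDER):
    """Return the best quality whose bitrate fits the usable bandwidth.

    `headroom` reserves part of the measured bandwidth as a safety margin.
    Falls back to "360p" when no rung fits.
    """
    usable = bandwidth_mbps * headroom
    for min_mbps, label in ladder:
        if usable >= min_mbps:
            return label
    return "360p"


for speed in (40, 12, 1):
    print(speed, "Mbps ->", pick_quality(speed))
```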

Can existing VTubers migrate their personas to AvatarFX Streams?

Yes. Through neural training workshops, human creators can transfer their signature mannerisms and speech patterns to persistent AI counterparts that preserve the creator's legacy.

How do copyright protections work for generative performances?

Creators retain exclusive rights to their avatar's core identity traits, while performance outputs fall under blockchain-authenticated royalty systems that distribute revenue automatically.