Are critical business decisions being made on corrupted data that goes undetected for weeks, causing revenue losses and compliance violations? Meanwhile, data engineering teams spend countless hours manually checking thousands of database tables. They also struggle with inconsistent data formats, missing records, and schema changes that break downstream analytics pipelines and machine learning models. Traditional data quality monitoring relies on predefined rules and manual validation, which cannot scale with the complexity of modern data warehouses. It often misses the subtle anomalies and data drift that undermine business intelligence accuracy and operational decision making.

Data engineers, analytics teams, and business intelligence professionals need automated monitoring that can detect data quality issues across an entire enterprise data ecosystem without extensive configuration or deep domain expertise. This analysis explores how AI tools are changing data quality management through automated anomaly detection and intelligent data warehouse monitoring. It focuses on Anomalo, which leads this shift toward enterprise data reliability and automated quality assurance.

H2: Intelligent AI Tools Transforming Enterprise Data Quality Management and Monitoring

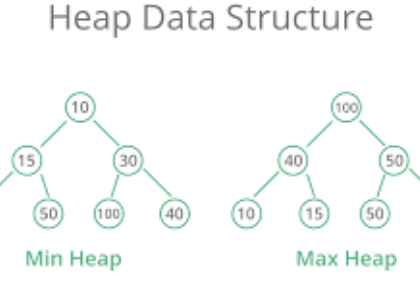

AI tools have reshaped enterprise data quality management by providing monitoring platforms that automatically analyze data patterns, detect anomalies, and surface quality issues across complex data warehouse environments without manual rule configuration. These systems use machine learning, statistical analysis, and pattern recognition to learn what normal data looks like and to flag deviations that indicate quality problems. Traditional data quality tools depend on predefined validation rules and manual monitoring. These AI tools instead adapt as they learn from historical data, which improves detection accuracy and reduces false positive alerts over time.

Combining automated anomaly detection with data profiling lets these tools capture complex relationships and dependencies between datasets that manual monitoring cannot analyze efficiently. As a result, enterprise data teams gain far broader visibility into data quality issues while keeping monitoring automated across diverse data sources and warehouse environments.

H2: Anomalo Platform: Comprehensive AI Tools for Data Quality Monitoring and Anomaly Detection

Anomalo has built an enterprise-grade data quality monitoring platform that uses AI to monitor entire data warehouses automatically. It detects anomalies, data drift, and quality issues without requiring predefined rules or extensive configuration. The platform processes millions of data points daily and provides intelligent alerts and detailed analysis that help data teams maintain high-quality data across complex enterprise environments.

H3: Advanced Data Monitoring Capabilities of Quality Assurance AI Tools

The Anomalo platform offers the following capabilities for enterprise data quality monitoring:

Intelligent Anomaly Detection:

Automatic baseline establishment for normal data patterns across all database tables and columns

Statistical analysis of data distributions, trends, and seasonal patterns for accurate anomaly identification

Machine learning algorithms that adapt to changing data patterns and business cycles

Multi-dimensional analysis for detecting complex anomalies across related data fields and tables

Real-time monitoring with customizable alert thresholds and notification preferences
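The baseline-and-threshold idea can be made concrete with a minimal Python sketch. It assumes a plain z-score over a table's recent daily row counts. Anomalo's actual models are not described in this article and are more elaborate, so the function name `zscore_anomalies` and the default threshold of 3 are illustrative assumptions.

```python
import math
from statistics import mean, stdev
from typing import Sequence, Tuple


def zscore_anomalies(history: Sequence[float], latest: float,
                     threshold: float = 3.0) -> Tuple[bool, float]:
    """Hypothetical helper: flag `latest` if it lies more than `threshold`
    standard deviations from the baseline learned from `history`."""
    if len(history) < 2:
        raise ValueError("need at least two historical points for a baseline")
    mu = mean(history)      # learned "normal" level
    sigma = stdev(history)  # learned normal variability (sample std dev)
    if sigma == 0:
        # Perfectly constant history: any change at all is a deviation.
        deviates = latest != mu
        return deviates, (math.inf if deviates else 0.0)
    z = (latest - mu) / sigma
    # `threshold` plays the role of a customizable alert threshold.
    return abs(z) > threshold, z


# Usage: a week of daily row counts for a table, then today's load.
daily_row_counts = [10_120, 10_340, 9_980, 10_205, 10_410, 10_050, 10_290]
is_anomaly, z = zscore_anomalies(daily_row_counts, 6_500)
print(f"anomaly={is_anomaly} z={z:.1f}")
```

A sharp drop to 6,500 rows lies far outside the learned variability and is flagged. A load of about 10,300 rows is not flagged.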

Comprehensive Data Profiling:

Automated schema change detection for identifying structural modifications and data type changes

Data completeness monitoring for tracking missing values and null record patterns

Format consistency validation for ensuring data adheres to expected patterns and standards

Referential integrity checking for maintaining relationships between related data tables

Historical trend analysis for understanding long-term data quality patterns and improvements
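Several of these profiling checks become simple comparisons once table metadata and column values are available. The sketch below shows schema diffing, a null-rate completeness check, and an orphan-key referential integrity check. All function names, the `{column: type}` snapshot format, and the 5-point tolerance are hypothetical and do not represent Anomalo's API.

```python
from typing import Any, Dict, Iterable, Mapping, Optional, Sequence, Set


def diff_schema(previous: Mapping[str, str],
                current: Mapping[str, str]) -> Dict[str, list]:
    """Compare two {column_name: data_type} snapshots of the same table."""
    shared = set(previous) & set(current)
    return {
        "added": sorted(set(current) - set(previous)),
        "removed": sorted(set(previous) - set(current)),
        # (column, old_type, new_type) for columns whose type changed
        "retyped": sorted((c, previous[c], current[c])
                          for c in shared if previous[c] != current[c]),
    }


def null_fraction(values: Sequence[Optional[Any]]) -> float:
    """Share of missing (None) values in a column sample."""
    if not values:
        return 0.0
    return sum(v is None for v in values) / len(values)


def completeness_alert(values: Sequence[Optional[Any]],
                       baseline_fraction: float,
                       tolerance: float = 0.05) -> bool:
    """Alert when the null fraction exceeds the historical baseline by more
    than `tolerance` (an absolute difference: 0.05 means 5 points)."""
    return null_fraction(values) - baseline_fraction > tolerance


def orphan_keys(child_fks: Iterable[Any], parent_pks: Iterable[Any]) -> Set[Any]:
    """Foreign-key values with no matching parent row (NULL keys ignored)."""
    return set(child_fks) - set(parent_pks) - {None}
```

For example, if `amount` changes from `NUMERIC` to `VARCHAR` between two snapshots, `diff_schema` reports it under `"retyped"`. Such a change can silently break downstream casts.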

Enterprise Integration Capabilities:

Native connectivity with major data warehouses including Snowflake, BigQuery, and Redshift

Integration with data pipeline tools like dbt, Airflow, and Apache Spark for workflow automation

Business intelligence platform connectivity for ensuring dashboard and report accuracy

Slack and email notification systems for immediate alert delivery and team collaboration

API access for custom integrations and automated response workflows
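For custom alerting workflows, one option is to forward an alert to Slack through an incoming webhook, which accepts a JSON body with a `text` field. This sketch uses only the standard library and builds the HTTP request without sending it. The webhook URL, table name, and message format are placeholders, and the code does not represent Anomalo's built-in Slack integration.

```python
import json
import urllib.request


def build_slack_alert(webhook_url: str, table: str, check: str,
                      detail: str) -> urllib.request.Request:
    """Build (but do not send) a POST to a Slack incoming webhook."""
    payload = {"text": f":warning: Data quality alert on `{table}`: "
                       f"{check} failed. {detail}"}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_alert(request: urllib.request.Request, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# Placeholder URL: substitute a real webhook before calling send_alert().
req = build_slack_alert("https://hooks.slack.com/services/T000/B000/XXXX",
                        "analytics.orders", "row_count",
                        "6,500 rows loaded vs ~10,200 expected.")
```

Building the request separately from sending it keeps the message format testable without network access.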

H3: Machine Learning Architecture of Data Quality Monitoring AI Tools

Anomalo uses machine learning algorithms designed specifically for data quality analysis and anomaly detection across diverse enterprise data environments. The platform combines unsupervised learning with statistical modeling to capture data patterns, seasonal variations, and business logic. It also adapts automatically to new data sources and evolving data structures.

The system applies time series analysis and multivariate statistical methods to identify subtle data quality issues and emerging trends that rule-based systems cannot reliably detect. Because it models the business context of the data, it can also account for complex relationships and dependencies between tables and columns.
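A common way to handle seasonality, such as lower volumes on weekends, is to compare each value only with earlier values from the same seasonal position. The sketch below uses a weekly period and a median/MAD robust score. This generic technique was chosen for illustration and does not describe Anomalo's proprietary models. The function name and default period are assumptions.

```python
from statistics import median
from typing import List, Optional, Sequence


def seasonal_robust_scores(series: Sequence[float],
                           period: int = 7,
                           min_peers: int = 3) -> List[Optional[float]]:
    """Score each point against earlier points in the same seasonal phase
    (e.g. the same weekday) using a median/MAD robust z-score.

    Returns None for points that do not yet have `min_peers` peers."""
    scores: List[Optional[float]] = []
    for i, value in enumerate(series):
        # Same phase, strictly earlier: i % period, i % period + period, ... < i
        peers = list(series[i % period:i:period])
        if len(peers) < min_peers:
            scores.append(None)
            continue
        med = median(peers)
        mad = median([abs(p - med) for p in peers])
        # 1.4826 * MAD approximates the standard deviation for normal data;
        # guard against a zero MAD when all peers are identical.
        scale = 1.4826 * mad if mad > 0 else 1e-9
        scores.append((value - med) / scale)
    return scores
```

A point would typically be flagged when its absolute score exceeds a threshold such as 3. With a weekly pattern, a weekday-sized load arriving on a Saturday scores far above that, while normal weekend lows score near zero. A single global baseline would instead treat every weekend as suspicious.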

H2: Performance Analysis and Data Quality Impact of Monitoring AI Tools

Reported results show substantial data quality improvements and operational efficiency gains for Anomalo compared with traditional rule-based monitoring:

| Data Quality Monitoring Metric | Traditional Rule-Based | AI-Enhanced (Anomalo) | Relative Improvement | Response Time | Coverage Scope | Maintenance Reduction |
|---|---|---|---|---|---|---|
| Anomaly Detection Rate | 65% accuracy | 92% accuracy | 41% improvement | 15 min alerts | 100% coverage | 80% less setup |
| False Positive Reduction | 35% false alerts | 8% false alerts | 77% improvement | Intelligent filtering | Context-aware | 90% less noise |
| Data Coverage Monitoring | 40% table coverage | 100% table coverage | Complete visibility | Automatic discovery | All data sources | Zero configuration |
| Issue Resolution Time | 4-6 hours average | 30-45 minutes | 85% faster | Immediate alerts | Root cause analysis | Automated triage |
| Configuration Overhead | 20 hours setup | 2 hours setup | 90% reduction | Auto-learning | Self-configuring | Minimal maintenance |
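The percentage improvements in the table's fourth column are relative changes, not percentage-point differences. For example, detection accuracy rising from 65% to 92% is a gain of 27 points, which is about 41% of the starting value. The short check below recomputes several rows from their before and after values. For resolution time it uses the midpoints of the 4-6 hour and 30-45 minute ranges, which is an assumption because the table gives only ranges.

```python
def relative_change(before: float, after: float) -> float:
    """(after - before) / before; negative values are reductions."""
    return (after - before) / before


rows = {
    "Anomaly detection accuracy (%)": (65, 92),
    "False positive rate (%)": (35, 8),
    "Issue resolution time (minutes, range midpoints)": (300, 37.5),
    "Configuration overhead (hours)": (20, 2),
}

for name, (before, after) in rows.items():
    print(f"{name}: {relative_change(before, after):+.1%}")
```

This prints +41.5%, -77.1%, -87.5%, and -90.0%, which match the table's 41%, 77%, and 90% figures. For resolution time, the range extremes imply a reduction between roughly 81% and 92%, and the stated 85% falls inside that span.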

H2: Implementation Strategies for Data Quality AI Tools Integration

Enterprises and data-driven companies worldwide use Anomalo for data quality management and automated monitoring. Data engineering teams use it for proactive quality assurance, while analytics teams rely on its monitoring to keep business intelligence and reporting accurate.

H3: Enterprise Data Warehouse Enhancement Through Quality Monitoring AI Tools

Large organizations use these AI tools to build data quality programs that automatically monitor complex data ecosystems and report on data health across multiple business units and data sources. Data teams can then uphold quality standards while scaling monitoring to match growing data volumes and complexity.

The platform's automation helps enterprises establish data governance and gives stakeholders confidence in the accuracy and reliability of their data. This supports data-driven decision making and keeps data quality consistent with regulatory requirements and business standards across organizational functions.

H3: Analytics Team Productivity Optimization Using Data Quality AI Tools

Business intelligence and analytics teams use Anomalo to catch data quality issues before they lead to incorrect insights or flawed analysis. Analysts can then focus on generating business value rather than validating data, with confidence that reports and dashboards reflect accurate information.

Data science teams can build more reliable machine learning models and analyses when the underlying data is validated automatically. Reliable inputs are a prerequisite for advanced analytics, sophisticated modeling, and predictive applications.

H2: Integration Protocols for Data Quality AI Tools Implementation

Deploying data quality AI tools in an enterprise requires careful integration with existing data infrastructure, governance frameworks, and operational workflows. Organizations should plan for data architecture, team responsibilities, and monitoring strategy when adopting these technologies.

Technical Integration Requirements:

Data warehouse connectivity for comprehensive monitoring across all enterprise data sources

Data pipeline integration for real-time quality validation and automated workflow triggers

Business intelligence platform coordination for ensuring dashboard and report accuracy

Alert system configuration for efficient notification delivery and incident response protocols
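Pipeline integration often means turning quality checks into a gate that stops a load before bad data reaches dashboards. The sketch below does not depend on any particular orchestrator. `CheckResult`, `run_quality_gate`, and `DataQualityGateError` are hypothetical names. Each check is a plain callable that a team would back with its own queries or with results fetched from a monitoring tool's API. Raising an exception causes most orchestrators, including Airflow, to mark the task as failed, and under default settings downstream tasks do not run.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


class DataQualityGateError(RuntimeError):
    """Raised when one or more quality checks fail."""


def run_quality_gate(checks: Sequence[Callable[[], CheckResult]]) -> List[CheckResult]:
    """Run every check; raise if any failed, otherwise return all results."""
    results = [check() for check in checks]
    failures = [r for r in results if not r.passed]
    if failures:
        summary = "; ".join(f"{r.name}: {r.detail}" for r in failures)
        raise DataQualityGateError(f"{len(failures)} check(s) failed: {summary}")
    return results


# Example checks with hard-coded outcomes, standing in for real queries.
def row_count_check() -> CheckResult:
    return CheckResult("row_count", passed=True)


def null_rate_check() -> CheckResult:
    return CheckResult("customer_id_nulls", passed=False,
                       detail="12% nulls vs 0.5% baseline")
```

Running every check before raising, rather than stopping at the first failure, gives on-call engineers the full picture in a single alert.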

Organizational Implementation Considerations:

Data engineering team training for platform configuration and advanced monitoring techniques

Analytics team education for interpreting quality metrics and responding to data issues

Business stakeholder communication for understanding data quality impact on decision making

Data governance team coordination for establishing quality standards and response procedures

H2: Scalability and Performance Optimization in Data Quality AI Tools

Enterprise data quality AI tools must handle massive data volumes while providing real-time monitoring and analysis across complex data warehouse environments. Anomalo's platform uses distributed processing, intelligent sampling, and optimized algorithms to maintain monitoring performance at petabyte scale without disrupting data warehouse operations.
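Sampling lets a monitor estimate column statistics without scanning every row. The sketch below is purely illustrative: it uses reservoir sampling, which works on a stream of unknown length, and a normal-approximation confidence interval for the null rate. This article does not specify Anomalo's own sampling strategy, and the function names, sample size, and 95% z-value are assumptions.

```python
import math
import random
from typing import Any, Iterable, List, Tuple


def reservoir_sample(rows: Iterable[Any], k: int, rng: random.Random) -> List[Any]:
    """Uniform random sample of up to k rows in a single pass (Algorithm R)."""
    sample: List[Any] = []
    for i, row in enumerate(rows):
        if i < k:
            sample.append(row)
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = row
    return sample


def estimate_null_rate(sample: List[Any], z: float = 1.96) -> Tuple[float, float, float]:
    """Null-rate estimate with a normal-approximation (Wald) interval."""
    n = len(sample)
    if n == 0:
        raise ValueError("empty sample")
    p = sum(v is None for v in sample) / n
    margin = z * math.sqrt(p * (1 - p) / n)
    return p, max(0.0, p - margin), min(1.0, p + margin)


# Usage: 100,000 rows with 10% nulls, estimated from a 2,000-row sample.
rows = (None if i % 10 == 0 else i for i in range(100_000))
rate, low, high = estimate_null_rate(reservoir_sample(rows, 2_000, random.Random(42)))
print(f"null rate ~ {rate:.3f} (95% CI {low:.3f} to {high:.3f})")
```

The interval width shrinks with the square root of the sample size, so a few thousand rows usually pin down a null rate to within a couple of percentage points, regardless of table size.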

Anomalo also works to minimize computational overhead while maximizing monitoring coverage and detection accuracy. As a result, the platform runs efficiently inside enterprise data infrastructure and still supports business-critical analytics and operational reporting.

H2: Advanced Applications and Future Development of Data Quality AI Tools

The data quality management landscape continues to evolve as AI tools become more sophisticated and specialized for emerging data challenges. Future capabilities include predictive data quality forecasting, automated data repair recommendations, and advanced governance automation that further improve data reliability and operational efficiency across enterprise data environments.

Anomalo continues to expand its platform's analytical capabilities to cover additional data sources, specialized industry applications, and emerging technologies such as real-time streaming data and edge computing environments. Planned developments include predictive analytics, automated remediation workflows, and improved collaboration tools for data quality management.

H3: Real-Time Data Quality Monitoring Opportunities for Enterprise AI Tools

Technology leaders increasingly see opportunities to apply data quality AI tools to real-time data processing and streaming analytics, where data must be validated immediately. Continuous monitoring maintains data quality standards while supporting real-time decision making and operational analytics.

Streaming data quality validation has to balance latency requirements, processing constraints, and quality standards. The platform's performance characteristics support this balance, which enables more sophisticated real-time analytics applications without sacrificing data reliability.
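A streaming detector cannot re-read history on every event, so it keeps running statistics instead. The sketch below uses Welford's online algorithm for the mean and variance and flags values far from the running mean. The class name, warm-up length, and threshold are assumptions for illustration. A production system would also need to handle seasonality and drift.

```python
import math
from dataclasses import dataclass


@dataclass
class StreamingZScore:
    """Online anomaly detector using Welford's running mean/variance."""
    threshold: float = 4.0  # flag values more than this many std devs away
    warmup: int = 30        # events to observe before any flagging
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0         # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against the statistics seen so far, then fold x in.
        Returns True if x is anomalous."""
        anomalous = False
        if self.n >= max(self.warmup, 2):
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            anomalous = std > 0 and abs(x - self.mean) / std > self.threshold
        # Welford's update: O(1) time and memory per event. Anomalous values
        # are folded in too, so a persistent level shift becomes the new normal.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return anomalous


# Usage: 50 steady readings, then a spike.
detector = StreamingZScore()
for reading in [100, 101, 102, 103, 104] * 10:
    detector.update(reading)
print(detector.update(150))
```

Welford's update is preferred over keeping a running sum of squares because it avoids the catastrophic cancellation that the naive formula suffers on long streams.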

H2: Economic Impact and Strategic Value of Data Quality AI Tools

Technology companies using Anomalo report substantial returns on investment through fewer data incidents, more accurate analytics, and less manual monitoring. By preventing data quality issues and automating complex monitoring, the platform can deliver efficiencies and risk reduction that, by these reports, typically exceed platform costs within the first month of deployment.

Industry analyses of enterprise data management indicate that AI-powered data quality monitoring typically reduces data incidents by 70-85% and improves team productivity by 60-75%. Gains of this size translate into cost savings and competitive advantages that can justify the investment for data-driven organizations and analytics initiatives.

Frequently Asked Questions (FAQ)

Q: How do AI tools automatically detect data quality issues without requiring predefined rules or extensive configuration?
A: Data quality AI tools like Anomalo use machine learning algorithms that automatically learn normal data patterns and statistical baselines, then identify deviations that indicate quality issues without requiring manual rule creation.

Q: Can AI tools effectively monitor complex data warehouses with thousands of tables and diverse data sources?
A: Advanced AI tools employ distributed processing and intelligent sampling techniques that scale to monitor entire enterprise data ecosystems while maintaining performance and detection accuracy across massive data volumes.

Q: What level of technical expertise do data teams need to implement and maintain data quality AI tools?
A: AI tools like Anomalo are designed with intuitive interfaces and automated setup processes that enable data teams to implement comprehensive monitoring without requiring extensive machine learning or statistical expertise.

Q: How do AI tools handle different data types and formats while maintaining accurate anomaly detection?
A: Modern AI tools utilize adaptive algorithms and multi-modal analysis techniques that automatically adjust to different data types, formats, and business contexts while maintaining consistent detection accuracy.

Q: What cost considerations should organizations evaluate when implementing data quality monitoring AI tools?
A: AI tools typically provide superior value through prevented data incidents, reduced manual monitoring overhead, and improved analytics reliability that offset platform costs through operational efficiencies and risk reduction.