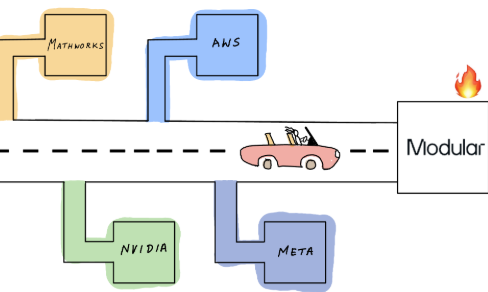

AI developers often contend with fragmented technology stacks: Python for prototyping, C++ for performance-critical code, CUDA for GPU acceleration, and assorted specialized tools for deployment. Each additional language and framework adds integration work, performance bottlenecks at the boundaries between components, and maintenance overhead, and it tends to create knowledge silos within teams. This analysis examines how Modular, co-founded by Chris Lattner (the creator of LLVM and the Swift programming language, who later worked on TensorFlow at Google), aims to remove that fragmentation with a unified programming language called Mojo and an accompanying AI engine, and what performance and productivity gains its tools are claimed to deliver.

Revolutionary AI Tools for Unified Development Infrastructure

Modular aims to consolidate the AI technology stack into a single, high-performance platform. Co-founded by Chris Lattner, whose earlier work includes LLVM, Swift, and TensorFlow at Google, the company targets a long-standing infrastructure problem in AI development: the gap between the flexibility research requires and the performance production demands.

Its central tool is the Mojo programming language, which pairs Python-like syntax with systems-level performance and can call into existing Python libraries through its Python interoperability. The intent is that developers write, optimize, and deploy models with one language and toolchain instead of several, with claimed speedups of 10x to 1000x over pure Python; actual gains depend heavily on the workload and on how much code is rewritten to use Mojo's typed, lower-level features.

Advanced Mojo Programming Language AI Tools

High-Performance Python-Compatible Development Environment

Modular's tools center on Mojo, which can import and call existing Python modules while its compiler applies aggressive optimizations and generates hardware-specific code. The goal is to let developers keep using Python libraries and frameworks while reaching performance that previously required writing C++ or CUDA.

Mojo's design pairs Python's accessibility with the performance characteristics of systems languages through progressive optimization. Developers can start with familiar Python-style code and incrementally add type annotations and lower-level features, such as explicit SIMD types, where profiling shows they matter. A research prototype can then evolve toward a production implementation without a wholesale rewrite in a different language.

Intelligent Compiler Optimization and Hardware Acceleration

Mojo is built on the MLIR compiler infrastructure and targets CPUs, GPUs, and other AI accelerators. The compiler applies standard optimizations automatically, and Mojo also exposes vectorization, parallelization, and control over memory layout directly in the language and standard library (for example, its `vectorize` and `parallelize` helpers), so developers can apply these optimizations in Mojo instead of dropping down to C++ or CUDA.
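
A vectorizing compiler typically strip-mines a loop: it splits the iteration space into fixed-width chunks that map onto SIMD lanes and adds a scalar loop for the remainder. The minimal Python sketch below shows that transformation on a `saxpy` kernel (`out[i] = a * x[i] + y[i]`). The lane width of 4 and the function names are illustrative assumptions, and pure Python only emulates the lanes, so the sketch shows the loop structure rather than any speedup.

```python
from typing import List

SIMD_WIDTH = 4  # assumed lane count, e.g. four float32 values in a 128-bit register


def saxpy_scalar(a: float, x: List[float], y: List[float]) -> List[float]:
    """Reference scalar loop: out[i] = a * x[i] + y[i]."""
    return [a * xi + yi for xi, yi in zip(x, y)]


def saxpy_strip_mined(a: float, x: List[float], y: List[float]) -> List[float]:
    """Same computation, restructured the way a vectorizer would.

    The main loop handles SIMD_WIDTH elements per iteration (one emulated
    vector operation per step); a scalar tail loop handles the leftovers.
    """
    n = len(x)
    out = [0.0] * n
    main_end = n - (n % SIMD_WIDTH)
    for i in range(0, main_end, SIMD_WIDTH):
        # Each slice stands in for one SIMD register's worth of lanes.
        xs = x[i:i + SIMD_WIDTH]
        ys = y[i:i + SIMD_WIDTH]
        out[i:i + SIMD_WIDTH] = [a * xv + yv for xv, yv in zip(xs, ys)]
    for i in range(main_end, n):
        # Remainder elements that do not fill a whole vector.
        out[i] = a * x[i] + y[i]
    return out
```

In this sketch the restructured loop produces the same result as the scalar one; in a compiled language, each chunk of the main loop becomes a single vector instruction.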

At the graph level, described capabilities include automatic differentiation, graph optimization, and kernel fusion, aimed at bottlenecks common in traditional AI frameworks such as redundant memory traffic between small operations. The intended result is strong performance across diverse hardware from a single portable codebase, instead of separate implementations for each deployment target.
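
Automatic differentiation is the most algorithmic item in that list. The sketch below is a generic, minimal reverse-mode implementation for scalars in Python, not Modular's API: each operation records its local derivatives, and `backward` propagates gradients through the graph in reverse topological order using the chain rule. The `Var` class and its methods are hypothetical names chosen for illustration.

```python
import math
from typing import List, Tuple


class Var:
    """A scalar node in a computation graph for reverse-mode autodiff (illustrative)."""

    def __init__(self, value: float, parents: Tuple[Tuple["Var", float], ...] = ()):
        self.value = value
        self.parents = parents  # pairs of (parent node, d(self)/d(parent))
        self.grad = 0.0

    def __add__(self, other: "Var") -> "Var":
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other: "Var") -> "Var":
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def tanh(self) -> "Var":
        t = math.tanh(self.value)
        return Var(t, ((self, 1.0 - t * t),))

    def backward(self) -> None:
        # Topologically order the graph so each node's gradient is complete
        # before it is pushed to the node's parents.
        order: List["Var"] = []
        seen = set()

        def visit(node: "Var") -> None:
            if id(node) in seen:
                return
            seen.add(id(node))
            for parent, _ in node.parents:
                visit(parent)
            order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += node.grad * local  # chain rule, accumulated over paths
```

For `f = x * y + x` with `x = Var(3.0)` and `y = Var(4.0)`, calling `f.backward()` leaves `x.grad == 5.0` and `y.grad == 3.0`, matching the analytic derivatives `y + 1` and `x`.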

| Development Capability | Traditional AI Stack | Modular AI Tools | Stated Benefit | Development Impact |
|---|---|---|---|---|
| Language requirements | Python + C++ + CUDA | Mojo, with Python interop | Single primary language | Fewer languages to learn and maintain |
| Performance optimization | Manual tuning | Compiler optimizations plus explicit SIMD and parallelism | Claimed 10-1000x over pure Python | Less low-level hand-tuning |
| Hardware compatibility | Platform-specific code | One codebase for CPUs, GPUs, and accelerators | Broad hardware coverage | Greater code portability |
| Framework integration | Multiple dependencies | Unified platform | Interoperates with Python libraries | Simpler maintenance |
| Overall development | Fragmented workflow | Unified toolchain | Workload-dependent acceleration | Streamlined productivity |

Comprehensive AI Engine Architecture Tools

Unified Runtime and Execution Environment

Modular presents its execution engine as a single runtime for model training, inference, and deployment, optimized for modern AI workloads. The aim is to replace the separate frameworks, runtimes, and deployment targets that teams otherwise manage, while improving performance across development phases.

Runtime capabilities include dynamic graph execution, automatic memory management, and coordination of distributed computation. By exposing consistent APIs and optimization strategies from research experiments through production deployment, the engine is meant to let developers spend their time on algorithms rather than infrastructure.
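
Automatic memory management in a graph runtime often relies on liveness analysis: once the last consumer of an intermediate value has run, its buffer can be released. The Python sketch below shows that idea on a hypothetical dictionary-based graph format, with scalars standing in for tensors and the count of live values standing in for memory use. It illustrates the general technique, not how Modular's engine is implemented.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical graph format: node name -> (function, list of input names).
Graph = Dict[str, Tuple[Callable[..., float], List[str]]]


def run_graph(graph: Graph, inputs: Dict[str, float], output: str) -> Tuple[float, int]:
    """Execute nodes in dependency order, freeing values after their last use.

    Returns the output value and the peak number of simultaneously live
    values, a stand-in for peak memory usage.
    """
    # Topological order via depth-first search from the requested output.
    order: List[str] = []
    visited = set()

    def visit(name: str) -> None:
        if name in visited or name in inputs:
            return
        visited.add(name)
        for dep in graph[name][1]:
            visit(dep)
        order.append(name)

    visit(output)

    # Liveness: the step index of the last node that reads each value.
    last_use: Dict[str, int] = {}
    for step, name in enumerate(order):
        for dep in graph[name][1]:
            last_use[dep] = step

    live: Dict[str, float] = dict(inputs)
    peak = len(live)
    for step, name in enumerate(order):
        fn, deps = graph[name]
        live[name] = fn(*(live[d] for d in deps))
        peak = max(peak, len(live))
        for dep in set(deps):  # set() so a repeated input is freed only once
            if last_use.get(dep) == step and dep != output:
                del live[dep]  # nothing reads this value again
    return live[output], peak
```

For a chain `c = a + b`, `d = 2 * c`, `e = d + 1` with `a = 1` and `b = 2`, `run_graph` returns `(7, 3)`: at most three values are alive at once, versus five if no intermediate were ever freed.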

Advanced Model Optimization and Deployment

The platform also includes model optimization tools that aim to improve inference speed and reduce memory requirements through compiler techniques and hardware-specific optimizations, so that models can be deployed across diverse hardware with less manual tuning.

Optimization features include quantization, pruning, and kernel fusion. Quantization and pruning trade a small, usually measurable loss of accuracy for smaller models and faster execution, so their results should be validated against an accuracy budget; kernel fusion, by contrast, is designed to leave the model's results essentially unchanged. The tools are described as choosing optimization strategies based on the target hardware and performance requirements.
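
A small example makes the accuracy trade-off concrete. The sketch below uses symmetric per-tensor int8 quantization and magnitude pruning on a plain Python list of weights; the specific scheme (one scale per tensor, round-to-nearest, a keep ratio) is an assumption chosen for illustration, not a description of Modular's implementation.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # round() rounds to nearest (ties to even); clamp guards against overflow.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [scale * v for v in q]


def magnitude_prune(weights: List[float], keep_ratio: float) -> List[float]:
    """Zero out all but the largest-magnitude fraction of the weights."""
    k = max(0, min(len(weights), round(len(weights) * keep_ratio)))
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]
```

Each dequantized weight differs from the original by at most half a quantization step (`scale / 2`), which is the source of the small accuracy loss; pruning instead removes the smallest weights outright.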

Sophisticated Performance Acceleration AI Tools

Intelligent Hardware Utilization and Resource Management

Modular's tools aim to maximize hardware performance with resource management that distributes computation across available processors, memory hierarchies, and specialized accelerators, reducing how much developers need to know about low-level hardware characteristics.

Resource management covers workload distribution, memory optimization, and minimizing communication, all of which target common performance bottlenecks. The tools are designed to adapt to the hardware available so that performance stays more consistent across deployment environments.

Advanced Parallel Computing and Distributed Processing

For larger workloads, the platform provides parallel computing capabilities that scale AI workloads across multiple processors, nodes, and accelerators, distributing work and optimizing communication so developers do not have to build distributed computing infrastructure themselves.

Parallel processing features include data parallelism, model parallelism, and pipeline parallelism, which increase computational throughput while keeping communication overhead in check. The goal is to hide most of the complexity of distributed training and inference behind a simple, unified programming interface.
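
Data parallelism is the simplest of the three strategies to illustrate: each worker computes gradients on its own shard of the batch, and an all-reduce averages them so every replica applies the same update. The Python sketch below simulates this serially for a one-parameter linear model with mean-squared-error loss; the model, the equal-size shards, and the function names are assumptions chosen so the result can be checked against the full-batch gradient.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair for the toy model y ~ w * x


def mse_grad(w: float, batch: List[Sample]) -> float:
    """Gradient of mean((w*x - y)^2) with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def shard(batch: List[Sample], num_workers: int) -> List[List[Sample]]:
    """Split a batch into equal-size contiguous shards, one per simulated worker."""
    if len(batch) % num_workers:
        raise ValueError("batch size must be divisible by num_workers")
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]


def all_reduce_mean(values: List[float]) -> float:
    """Stand-in for a collective all-reduce: every worker receives the mean."""
    return sum(values) / len(values)


def data_parallel_step(w: float, batch: List[Sample], num_workers: int, lr: float) -> float:
    local_grads = [mse_grad(w, s) for s in shard(batch, num_workers)]  # one per worker
    return w - lr * all_reduce_mean(local_grads)  # identical update on every replica
```

With equal-size shards, the average of the shard gradients equals the full-batch gradient, which is why data-parallel training matches single-device training mathematically (up to floating-point reduction order).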

| Performance Area | Traditional Frameworks | Modular AI Tools | Claimed Improvement | Resource Efficiency |
|---|---|---|---|---|
| CPU utilization | Manual optimization | Vectorization and parallelization | 5-20x faster | Better use of cores and SIMD units |
| GPU acceleration | CUDA programming | Unified acceleration | 10-100x speedup | Simpler development |
| Memory management | Manual allocation | Compiler- and runtime-managed optimization | 50% lower memory use | Less manual bookkeeping |
| Distributed computing | Complex setup | Simplified scaling | Near-linear scaling | Less parallelism boilerplate |
| Overall performance | Manual complexity | Compiler-driven optimization | Workload-dependent | Higher hardware utilization |

Advanced Development Productivity AI Tools

Seamless Python Ecosystem Integration

Mojo interoperates with the existing Python ecosystem rather than replacing it: Mojo code can import Python modules and call libraries such as NumPy, so developers keep familiar libraries and tools while moving performance-critical code into Mojo.

Python code called from Mojo still runs in the CPython interpreter, so existing workflows keep working but at Python speed; performance gains come from the parts rewritten in Mojo. Migration can therefore be incremental: start by calling existing Python code, then port the hot paths that profiling identifies.
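
On the Python side, a common way to keep a codebase working during an incremental port is to route a hot function through an optional accelerated implementation and fall back to the original Python code when that implementation is unavailable. In the sketch below, the module name `fast_kernels` is hypothetical, standing in for whatever compiled extension a team produces; the pattern itself uses only standard Python.

```python
import importlib
from typing import Callable, List

DotFn = Callable[[List[float], List[float]], float]


def dot_python(a: List[float], b: List[float]) -> float:
    """Original pure-Python implementation, kept as the reference and fallback."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def load_dot(module_name: str = "fast_kernels") -> DotFn:
    """Return an accelerated `dot` if the (hypothetical) module provides one.

    Callers always use the module-level `dot`, so swapping implementations
    does not require changing any call sites.
    """
    try:
        module = importlib.import_module(module_name)
        return getattr(module, "dot")
    except (ImportError, AttributeError):
        return dot_python


dot = load_dot()
```

Keeping the pure-Python version around also gives a reference implementation to test the ported code against.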

Intelligent Development Environment and Tooling

Modular's development environment is described as providing code completion, performance profiling, and debugging tailored to AI development workflows, with the goal of shortening development cycles and making performance work more systematic.

Described features include real-time performance analysis, optimization suggestions, and integrated debugging, intended to help developers find and fix performance issues quickly by showing how code behaves, how resources are used, and where optimization opportunities lie.
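
Whatever tooling is used, performance work starts with measurement. As a Python-side illustration (not Modular's profiler), the standard library's `cProfile` and `pstats` modules can show which functions dominate runtime before any of them is ported. The helper name `profile_top` and the `slow_sum` workload are hypothetical examples.

```python
import cProfile
import io
import pstats
from typing import Any, Callable, Tuple


def slow_sum(n: int) -> int:
    """Deliberately loop-heavy function to profile."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_top(func: Callable[..., Any], *args: Any, limit: int = 5) -> Tuple[Any, str]:
    """Run func(*args) under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    stream = io.StringIO()
    stats = pstats.Stats(profiler, stream=stream).sort_stats("cumulative")
    stats.print_stats(limit)  # only the `limit` most expensive entries
    return result, stream.getvalue()
```

Calling `profile_top(slow_sum, 1_000_000)` returns the sum together with a report ranking functions by cumulative time, which is the list of candidates for porting.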

Comprehensive Model Development AI Tools

Unified Training and Inference Framework

Modular describes a single framework that handles both model training and inference through consistent APIs and optimization strategies, removing the need to maintain separate training and deployment pipelines.

Framework capabilities include automatic mixed precision training, dynamic batching, and inference optimization. These features are intended to adapt to different workload characteristics and hardware configurations, reducing, though not necessarily eliminating, the need for manual tuning.
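
Dynamic batching is easy to misread, so a sketch helps: a server holds incoming requests briefly and dispatches a batch either when it reaches a maximum size or when the oldest waiting request has waited a maximum time. The Python simulation below uses hypothetical parameters `max_batch_size` and `max_wait_ms` and precomputed arrival times so its behavior is deterministic; a real server applies the same rule with live clocks and queues.

```python
from typing import List


def form_batches(arrivals_ms: List[float], max_batch_size: int, max_wait_ms: float) -> List[List[int]]:
    """Group request indices into batches (parameter names are illustrative).

    A batch is dispatched when it holds max_batch_size requests, or when the
    next request arrives after the oldest queued request's deadline.
    Arrival times are assumed to be sorted in ascending order.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    batches: List[List[int]] = []
    current: List[int] = []
    deadline = 0.0
    for i, t in enumerate(arrivals_ms):
        if current and t > deadline:
            batches.append(current)  # oldest request timed out before t arrived
            current = []
        if not current:
            deadline = t + max_wait_ms  # the first request in a batch sets its deadline
        current.append(i)
        if len(current) == max_batch_size:
            batches.append(current)  # batch is full
            current = []
    if current:
        batches.append(current)  # flush whatever is left at the end
    return batches
```

The two parameters trade latency for throughput: larger batches use the hardware more efficiently, while a shorter wait bounds how long any single request can be delayed.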

Advanced Model Architecture Support

The platform supports diverse model architectures, including transformers, convolutional networks, recurrent networks, and newer designs, through flexible programming interfaces, so developers can implement recent research and still deploy it with production-grade performance.

Architecture support relies on graph optimization, memory layout optimization, and computation fusion, which aim to keep performance high as model complexity and structure vary. This lets researchers and developers explore new approaches without giving up a practical path to deployment.
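
Computation (or operator) fusion is clearest side by side. In the unfused Python version below, each operation makes a full pass over the data and materializes an intermediate list; the fused version computes the same `relu(x * w + b)` in one pass with no intermediates, which is the memory-traffic saving a fusing compiler targets. The element-wise layer is a hypothetical example, not Modular's graph API.

```python
from typing import List


def unfused(x: List[float], w: float, b: float) -> List[float]:
    scaled = [xi * w for xi in x]          # pass 1: writes an intermediate list
    shifted = [si + b for si in scaled]    # pass 2: reads it, writes another
    return [max(0.0, v) for v in shifted]  # pass 3: ReLU activation


def fused(x: List[float], w: float, b: float) -> List[float]:
    # One pass: multiply, add, and ReLU per element, with no intermediate lists.
    return [max(0.0, xi * w + b) for xi in x]
```

Both functions return identical results; in compiled code, the fused form reads the input once and writes the output once instead of making three round trips through memory.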

Enterprise-Grade AI Tools for Production Deployment

Scalable Infrastructure and Resource Management

For large-scale deployments, Modular's tools provide enterprise infrastructure features such as resource management, automatic scaling, and performance monitoring, aiming to handle complex deployment requirements without sacrificing development simplicity or operational reliability.

Infrastructure features include container orchestration, load balancing, and fault tolerance for reliable AI service delivery, along with automated resource allocation and system monitoring that give visibility into system behavior and performance.
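
Load balancing and fault tolerance often combine in a simple rule: rotate requests across replicas and skip any replica currently marked unhealthy. The round-robin balancer below is a generic Python sketch with hypothetical replica names; real deployments typically get this behavior from the orchestration layer (for example, a Kubernetes Service whose readiness probes remove failing pods) rather than from application code.

```python
from typing import List, Set


class RoundRobinBalancer:
    """Round-robin over replicas, skipping ones marked unhealthy (illustrative)."""

    def __init__(self, replicas: List[str]):
        if not replicas:
            raise ValueError("need at least one replica")
        self.replicas = list(replicas)
        self.unhealthy: Set[str] = set()
        self._next = 0  # index of the next replica to try

    def mark_down(self, replica: str) -> None:
        self.unhealthy.add(replica)  # e.g. after a failed health check

    def mark_up(self, replica: str) -> None:
        self.unhealthy.discard(replica)  # e.g. after a successful health check

    def pick(self) -> str:
        # Try each replica at most once per call, continuing the rotation.
        for _ in range(len(self.replicas)):
            replica = self.replicas[self._next]
            self._next = (self._next + 1) % len(self.replicas)
            if replica not in self.unhealthy:
                return replica
        raise RuntimeError("no healthy replicas available")
```

With replicas `a`, `b`, and `c`, successive picks cycle through all three; after `b` is marked down, traffic alternates between `a` and `c` until `b` is marked up again.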

Comprehensive Security and Governance

The platform's enterprise security capabilities are intended to protect intellectual property, preserve data privacy, and support regulatory compliance through security frameworks and access controls, without slowing development.

Security capabilities include encryption, access controls, and audit logging aligned with enterprise security standards, together with governance features that balance innovation against risk management and help AI applications meet organizational security and compliance requirements.
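
Audit logs are more useful when they are tamper-evident. One common technique, sketched below in Python with only the standard library, chains each entry to the previous one with a SHA-256 hash, so that editing or deleting any past record breaks verification. The record fields and function names are hypothetical; this illustrates the technique, not Modular's logging format.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    payload = prev_hash + json.dumps(record, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"record": record, "prev": prev, "hash": _digest(prev, record)})


def verify_log(log: List[Dict[str, Any]]) -> bool:
    """Recompute the chain; any edited, reordered, or deleted entry fails."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["record"]):
            return False
        prev = entry["hash"]
    return True
```

Because each hash covers the previous one, an attacker who alters an old entry would have to recompute every later hash, which is detectable if the latest hash is also stored somewhere outside the log.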

Advanced Research and Innovation AI Tools

Cutting-Edge Algorithm Development Support

For research, Modular's tools offer flexible programming interfaces and automatic optimization, supporting both established techniques and emerging research directions while keeping a path to production-grade performance.

Research capabilities include automatic differentiation, custom operator development, and support for experimental frameworks, which shorten research cycles and narrow the gap between new ideas and practical implementations.

Collaborative Research and Development Environment

Modular also describes collaborative development environments that support team research and knowledge sharing through integrated version control, experiment tracking, and result sharing, while leaving individual researchers room for independent exploration.

Collaboration features include shared development environments, experiment reproducibility, and result comparison, supporting both individual work and large team efforts so that research results are shared and reused across the organization.
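
Experiment reproducibility usually rests on two habits: seeding every source of randomness and recording a stable fingerprint of the configuration alongside the results. The Python sketch below shows both with the standard library; the config keys (`seed`, `noise`, `n`) and the toy experiment are hypothetical placeholders.

```python
import hashlib
import json
import random
from typing import Any, Dict


def config_fingerprint(config: Dict[str, Any]) -> str:
    """Stable short hash of a JSON-serializable config; key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


def run_experiment(config: Dict[str, Any]) -> Dict[str, Any]:
    # A dedicated RNG seeded from the config avoids hidden global random state.
    rng = random.Random(config["seed"])
    samples = [rng.gauss(0.0, config["noise"]) for _ in range(config["n"])]
    score = sum(samples) / len(samples)
    return {"fingerprint": config_fingerprint(config), "score": score}
```

Two runs with the same configuration produce identical results and fingerprints, so results from different team members can be compared by fingerprint before comparing scores.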

Future Innovation in AI Development Tools

Modular continues to develop the platform. Areas of future work include enhanced compiler optimizations, expanded hardware support, and further development automation. More speculative directions, such as quantum computing integration and support for neuromorphic hardware, remain longer-term possibilities rather than current features.

These developments are intended to strengthen the platform's core proposition for unified AI development: one simpler toolchain that keeps the performance advantages the platform is built around.

Frequently Asked Questions

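Q: How do Modular's AI tools maintain Python compatibility while achieving superior performance?
A: Mojo uses Python-like syntax and can import and call existing Python modules, which run in CPython as before. The performance gains come from code written in Mojo itself, where type information and explicit features such as SIMD and parallelism let the compiler generate efficient machine code. Reaching C++-level performance generally requires adapting hot code paths, not just recompiling unchanged Python.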

Q: Can existing Python AI projects easily migrate to Modular's platform?
A: Migration can be incremental. Existing Python code and libraries can be called from Mojo, so a project keeps working while performance-critical components are ported to Mojo one at a time, avoiding a disruptive all-at-once rewrite. Mojo is not a drop-in replacement for the Python interpreter, however, so the components that need to run faster do require porting effort.

Q: How do the AI tools handle different hardware platforms and accelerators?
A: The platform includes intelligent compiler technology that automatically optimizes code for specific hardware architectures including CPUs, GPUs, and AI accelerators while maintaining code portability and development simplicity.

Q: What performance improvements can developers expect from Modular's AI tools?
A: Claimed improvements range from roughly 10x to 1000x over pure Python implementations, but results depend heavily on workload characteristics, the target hardware, and how much performance-critical code is written in optimized Mojo. Benchmarking representative workloads is the only reliable way to estimate the gain for a specific project.

Q: How do the AI tools support both research experimentation and production deployment?
A: Modular provides a unified platform that supports research flexibility through Python interoperability while delivering production performance through compiler optimization, so code can move from research to deployment through incremental optimization rather than a rewrite in another language.