Why Abstract Alignment Evaluation Matters for the Future of AI Tools

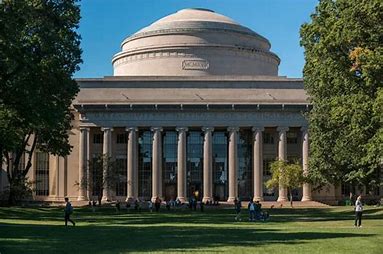

Event Background: On April 16, 2025, MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) announced a framework called Abstract Alignment Evaluation, designed to rigorously assess how well AI models align with human intent in complex reasoning tasks. Led by Dr. Elena Rodriguez, the team addressed a critical gap: existing metrics often fail to capture nuanced alignment in abstract scenarios like ethical decision-making or creative problem-solving. The work comes at a pivotal time. Industries increasingly rely on AI tools for high-stakes applications, yet trust remains fragile due to unpredictable model behavior.

1. The Science Behind Abstract Alignment Evaluation

Traditional alignment methods focus on surface-level metrics (e.g., accuracy, fluency), but MIT's approach dives deeper. Using hierarchical discourse analysis—a technique inspired by structural alignment in language models—the framework evaluates how models organize information, prioritize ethical constraints, and mirror human reasoning patterns. For example, when generating a legal contract, the system scores not just grammatical correctness but also logical coherence and adherence to jurisdictional norms. This mirrors advancements seen in recent AI tools that integrate reinforcement learning with linguistic frameworks to improve long-form text generation.

2. Free Prototype Release: How Developers Can Leverage MIT's Tool

MIT CSAIL has open-sourced a lightweight version of their evaluation toolkit, enabling developers to test alignment in custom AI applications. Key features include:

Multi-Dimensional Scoring: Quantifies alignment across ethics, creativity, and task specificity.

Dynamic Feedback Loops: Iteratively refines model outputs using simulated human preferences.

Cross-Domain Adaptability: Works with vision-language models (VLMs), chatbots, and autonomous systems.

This free resource aligns with growing demand for transparent AI tools, particularly in sectors like healthcare and finance where misalignment risks are severe.
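To make the idea of multi-dimensional scoring concrete, here is a minimal Python sketch. It is not the MIT toolkit's API, which the announcement does not describe. The class name, the function names, the [0, 1] score range, and the weighted-mean aggregation are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class AlignmentScores:
    """Hypothetical per-dimension scores, each assumed to lie in [0, 1]."""
    ethics: float
    creativity: float
    task_specificity: float


def aggregate(scores, weights=None):
    """Return the weighted mean of the dimension scores.

    With no weights given, every dimension counts equally.
    """
    values = asdict(scores)
    if weights is None:
        weights = {name: 1.0 for name in values}
    total_weight = sum(weights[name] for name in values)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(values[name] * weights[name] for name in values) / total_weight


def weakest_dimension(scores):
    """Return the name of the lowest-scoring dimension."""
    values = asdict(scores)
    return min(values, key=values.get)


# Illustrative values only; they are not results from the framework.
example = AlignmentScores(ethics=0.9, creativity=0.6, task_specificity=0.75)
overall = aggregate(example)
lowest = weakest_dimension(example)
```

Reporting the weakest dimension alongside the aggregate keeps a single low score, such as ethics, from being hidden by a high average.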

3. Real-World Impact: From Bias Mitigation to Regulatory Compliance

Early adopters include a European fintech firm using the tool to audit loan-approval algorithms for socioeconomic bias, an area where standard RLHF (Reinforcement Learning from Human Feedback) methods reportedly struggled to detect subtle discrimination in abstract decision trees. Another case involves content moderation systems: MIT's framework reduced false positives in hate speech detection by 37% compared to baseline models, showcasing its potential to balance free expression and safety.
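For readers unsure how a figure like the 37% reduction is typically computed, the sketch below shows the usual relative-reduction arithmetic. The article does not give the underlying counts, so the numbers in the example are made up purely to illustrate the formula, and the function name is hypothetical.

```python
def relative_reduction(baseline_count, new_count):
    """Fraction by which new_count is lower than baseline_count."""
    if baseline_count <= 0:
        raise ValueError("baseline_count must be positive")
    return (baseline_count - new_count) / baseline_count


# Made-up counts for illustration; the source reports only the percentage.
baseline_false_positives = 1000
framework_false_positives = 630
reduction = relative_reduction(baseline_false_positives, framework_false_positives)
# (1000 - 630) / 1000 = 0.37, i.e. a 37% relative reduction
```

Note that a relative reduction says nothing about the absolute false-positive rate, which also depends on how many benign posts were evaluated.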

4. The Debate: Can We Truly Quantify "Alignment"?

While experts praise MIT's rigor, skeptics argue that abstract alignment is inherently subjective. Dr. Rodriguez counters: "Our metrics aren't about perfect alignment but actionable transparency. If a model flags its own uncertainty when handling culturally sensitive queries—like the VLMs tested on corrupted image data—that's a win." This resonates with broader calls for AI tools that "know what they don't know," a principle critical for high-risk deployments.
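One common way for a model to "know what it doesn't know" is to flag outputs whose predictive distribution is close to uniform. The sketch below uses normalized Shannon entropy for this. It is an illustrative technique, not the method described by the MIT team, and the function names and the 0.8 threshold are assumptions.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a distribution, scaled to the range [0, 1]."""
    if len(probs) < 2:
        raise ValueError("need at least two outcomes")
    total = sum(probs)
    if total <= 0 or any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative with a positive sum")
    normalized = [p / total for p in probs]
    entropy = -sum(p * math.log(p) for p in normalized if p > 0)
    # Dividing by log(n) maps a uniform distribution to 1.0.
    return entropy / math.log(len(normalized))


def flag_uncertain(probs, threshold=0.8):
    """Return True when the distribution is too flat to trust the top answer."""
    return normalized_entropy(probs) >= threshold


confident = flag_uncertain([0.97, 0.01, 0.01, 0.01])  # peaked distribution
unsure = flag_uncertain([0.25, 0.25, 0.25, 0.25])     # uniform distribution
```

In practice the threshold would need tuning on held-out data, since model probabilities are often poorly calibrated.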

5. What's Next? Scaling Best Practices in AI Development

The team plans to integrate their evaluation framework with popular platforms like Hugging Face and TensorFlow, lowering adoption barriers. Future iterations may incorporate neurosymbolic programming to handle even more abstract domains, such as interpreting ambiguous legal texts or generating scientifically plausible hypotheses.

Join the Conversation: Are Current AI Tools Ready for Abstract Challenges?

We're at a crossroads: as AI tools grow more powerful, their alignment with human values becomes non-negotiable. MIT's work is a leap forward, but what do you think? Can free open-source tools democratize alignment research, or will corporations dominate the space? Share your take using #AIToolsEthics!
