Over the past few years, artificial intelligence has dramatically changed the way we create music. From lyric writing to mastering, AI is now capable of handling tasks that used to require entire production teams. But one question keeps surfacing: What is the OpenAI music generation model? And more importantly, how does it actually work?

Whether you're a musician, a developer, or just curious about the technology behind AI-generated music, this article dives deep into OpenAI’s music model—explaining what it is, how it was developed, what it can do, and why it's generating so much buzz in the music and tech industries in 2025.

What Is the OpenAI Music Generation Model?

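The OpenAI music generation model usually refers to Jukebox, a deep learning system OpenAI released in 2020 that generates raw audio, including rudimentary singing. It works much like text models such as GPT-4 in that it predicts sequences of tokens, but its tokens represent compressed audio rather than words. Jukebox does not take free-form natural language prompts; it is conditioned on genre, artist, and optionally lyrics. Prompt-driven generation is the direction newer systems are heading.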

As of 2025, the only music model OpenAI has publicly released is Jukebox. Reports have circulated about a newer, more capable OpenAI music model, but OpenAI has not released or documented one. Such a model is sometimes called "Lyria" online, but that name belongs to Google DeepMind's music generation model, not to any OpenAI system.

In short, the model aims to generate complete songs, including:

Vocals sung to supplied lyrics

Instrumentals and harmonies

Genre-specific structure

Realistic performance dynamics

The model’s goal is to allow humans to guide music creation using simple language, lowering the barrier to entry for anyone who wants to produce original audio.

A Brief Timeline: OpenAI’s Journey in AI Music

OpenAI’s work in music generation didn’t start overnight. Here's a quick breakdown of its evolution:

2020: Jukebox Released

OpenAI launched Jukebox, a model that could generate raw audio samples with vocals, instruments, and genre- and artist-specific elements. It was groundbreaking but computationally expensive: OpenAI noted that fully rendering one minute of audio took about nine hours.

2021–2023: Silent Period with Experiments

OpenAI released little new music work during this time, while transformer-based architectures for long-context audio generation continued to mature across the field.

Late 2023–2024: Reports of a Successor

According to unconfirmed reports and demos, a more scalable OpenAI music model has been in testing, able to take a prompt like “a sad indie folk song about distance” and output a complete, high-quality song in less than a minute. OpenAI has not confirmed this. The name “Lyria” that is sometimes attached to it actually belongs to Google DeepMind's music model, announced in late 2023.

2024–2025: Integration with ChatGPT

With the release of ChatGPT voice mode and Sora for video, OpenAI has reportedly been experimenting with generating music and soundtrack elements through prompt-based interaction.

How the OpenAI Music Model Works

To understand what the OpenAI music generation model actually does under the hood, let's break it into key components.

1. Audio Tokenization

Before music can be generated, it needs to be translated into a format the model can understand. This is done using audio tokenization, which transforms sound into discrete tokens—similar to how words are tokenized in language models.

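Jukebox does this with a hierarchical VQ-VAE (vector-quantized variational autoencoder), which compresses raw audio into discrete codes at three levels of temporal resolution: the top level captures long-range structure, and the lower levels preserve finer detail. Many newer audio models use neural codecs such as EnCodec, developed by Meta AI, for the same job; which approach any newer OpenAI model uses has not been published.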
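The core of any such tokenizer is vector quantization: each chunk of audio is replaced by the index of its nearest entry in a codebook. The sketch below shows only that step, assuming a hand-picked codebook and fixed 4-sample frames; a real VQ-VAE like Jukebox's learns its encoder, codebook, and decoder from data, and the names here (`tokenize`, `CODEBOOK`) are illustrative, not from any library.

```python
# Toy vector quantization: turn audio samples into discrete token ids.
# Assumption: a hand-picked codebook and fixed-size frames stand in for the
# encoder and codebook that a real VQ-VAE would learn from data.

def frame_signal(samples, frame_size):
    """Split a 1-D signal into non-overlapping frames, dropping any remainder."""
    n_frames = len(samples) // frame_size
    return [samples[i * frame_size:(i + 1) * frame_size] for i in range(n_frames)]


def nearest_code(frame, codebook):
    """Index of the codebook vector closest to `frame` (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda idx: sum((a - b) ** 2 for a, b in zip(frame, codebook[idx])),
    )


def tokenize(samples, codebook, frame_size):
    """Encode audio as a sequence of token ids, one per frame."""
    return [nearest_code(f, codebook) for f in frame_signal(samples, frame_size)]


def detokenize(tokens, codebook):
    """Decode token ids back to approximate audio by looking up each code."""
    return [x for t in tokens for x in codebook[t]]


CODEBOOK = [
    [0.0, 0.0, 0.0, 0.0],      # 0: silence
    [0.5, 0.5, 0.5, 0.5],      # 1: positive plateau
    [-0.5, -0.5, -0.5, -0.5],  # 2: negative plateau
    [0.0, 0.5, 0.0, -0.5],     # 3: one oscillation
]

signal = [0.1, 0.0, -0.1, 0.0, 0.4, 0.6, 0.5, 0.45, 0.0, 0.4, 0.1, -0.6]
tokens = tokenize(signal, CODEBOOK, frame_size=4)
print(tokens)  # [0, 1, 3]
reconstruction = detokenize(tokens, CODEBOOK)  # lossy: 12 samples rebuilt from 3 ids
```

Twelve samples become three tokens, and decoding gives back a close but not exact signal. That trade of fidelity for a much shorter sequence is what makes generating long audio tractable.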

2. Transformer-Based Architecture

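Just as GPT-4 generates text by predicting the next token, Jukebox predicts the next audio token. It uses transformer models in a hierarchy: a top-level prior generates the coarsest codes, and upsampling models fill in finer detail conditioned on the level above. By training on large amounts of music, the model learns musical structure, rhythm, chord progressions, and vocal phrasing.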
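The generation loop itself is simple: predict a distribution over the next token, sample one, append it, and repeat. In the sketch below a bigram count table stands in for the transformer (an assumption for brevity); a real transformer looks at the whole context rather than only the last token, but the sampling loop has the same shape. All function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

# Assumption: a bigram count table stands in for the transformer. A real
# transformer conditions on the whole context; this toy looks only at the
# last token, but the sampling loop is the same shape.


def train_bigram(sequences):
    """Count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_probs(counts, context):
    """Estimate P(next token | context) from the counts."""
    follow = counts.get(context[-1], Counter())
    total = sum(follow.values())
    return {tok: c / total for tok, c in follow.items()}


def generate(counts, prompt, n_new, rng):
    """Autoregressive sampling: predict a token, append it, repeat."""
    seq = list(prompt)
    for _ in range(n_new):
        probs = next_token_probs(counts, seq)
        if not probs:  # no known continuation for this token
            break
        tokens, weights = zip(*probs.items())
        seq.append(rng.choices(tokens, weights=weights, k=1)[0])
    return seq


# Toy "training data": audio token ids from the tokenizer stage.
training = [[0, 1, 2, 3, 0, 1, 2, 3], [0, 1, 2, 3]]
model = train_bigram(training)
song = generate(model, prompt=[0], n_new=7, rng=random.Random(0))
print(song)  # [0, 1, 2, 3, 0, 1, 2, 3]: each token has one observed successor
```

Because every token in this toy data has exactly one observed successor, the output is deterministic; with richer data, the sampled continuations would vary from run to run.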

3. Multi-Modal Conditioning

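Jukebox conditions its output on artist and genre labels and, optionally, lyrics. Prompt-based text-to-music systems go further: they accept text such as “an emotional piano ballad with female vocals” and condition the audio generation on attributes like: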

Genre

Emotion

Instrument preference

Vocal style (male/female/rap/instrumental)

This gives users high-level creative control while keeping the process intuitive, though not control over individual notes.
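One simple way to condition a token model is to prepend condition tokens to the audio sequence, so every predicted audio token can attend to them. The sketch below assumes a small fixed label vocabulary (`CONDITION_VOCAB` is hypothetical); Jukebox instead learns embeddings for artist and genre and attends to lyrics separately, and mapping a free-text prompt onto labels like these is not shown.

```python
from dataclasses import dataclass

# Hypothetical condition vocabulary; real systems learn embeddings for such
# labels or encode free-form text with a separate text encoder.
CONDITION_VOCAB = {
    "genre:folk": 0, "genre:pop": 1, "genre:jazz": 2,
    "mood:sad": 3, "mood:upbeat": 4,
    "vocals:female": 5, "vocals:male": 6, "vocals:none": 7,
}
AUDIO_TOKEN_OFFSET = len(CONDITION_VOCAB)  # audio ids start after condition ids


@dataclass
class Conditions:
    genre: str
    mood: str
    vocals: str


def condition_prefix(c: Conditions) -> list[int]:
    """Map condition labels to their token ids, rejecting unknown labels."""
    keys = [f"genre:{c.genre}", f"mood:{c.mood}", f"vocals:{c.vocals}"]
    missing = [k for k in keys if k not in CONDITION_VOCAB]
    if missing:
        raise KeyError(f"unknown condition(s): {missing}")
    return [CONDITION_VOCAB[k] for k in keys]


def build_model_input(c: Conditions, audio_tokens: list[int]) -> list[int]:
    """Prepend condition ids, shifting audio ids so the two ranges never collide."""
    return condition_prefix(c) + [t + AUDIO_TOKEN_OFFSET for t in audio_tokens]


seq = build_model_input(Conditions("folk", "sad", "female"), [0, 1, 3])
print(seq)  # [0, 3, 5, 8, 9, 11]
```

Shifting the audio ids keeps one shared vocabulary, which lets a single transformer read conditions and audio as one sequence.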

4. Latent Diffusion & Audio Quality

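Jukebox itself is purely autoregressive and does not use diffusion. Many newer audio models, such as Stable Audio, instead use latent diffusion (a relative of the diffusion methods behind DALL·E 2), which generates a compressed representation of the audio by starting from noise and removing it step by step. Whether an OpenAI successor uses this technique has not been confirmed, but it is one common way to keep long-form audio clear and realistic.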
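The idea behind diffusion is easiest to see from its forward half: a clean latent is blended with Gaussian noise according to a schedule, and a network is trained to undo that blending. The sketch below implements the DDPM-style forward process with a linear schedule from 1e-4 to 0.02 over 1,000 steps, a common textbook choice assumed here, not a confirmed detail of any OpenAI model. The reverse (denoising) network is not shown.

```python
import math
import random

# Forward ("noising") half of a DDPM-style diffusion process. In latent
# diffusion this runs on an autoencoder's compressed latent, not raw audio.
# Assumption: linear beta schedule from 1e-4 to 0.02 over 1000 steps.


def linear_betas(n_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly."""
    step = (beta_end - beta_start) / (n_steps - 1)
    return [beta_start + i * step for i in range(n_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): how much signal survives to step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in one shot."""
    a = alpha_bar[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


alpha_bar = cumulative_alphas(linear_betas(1000))
latent = [1.0] * 8  # stand-in for an encoded audio latent
rng = random.Random(0)
barely_noisy = q_sample(latent, 0, alpha_bar, rng)    # almost identical to latent
nearly_noise = q_sample(latent, 999, alpha_bar, rng)  # almost pure Gaussian noise
```

Generation runs this in reverse: a trained denoiser, conditioned on the prompt, starts from pure noise and removes a little at each step until a clean latent remains, which a decoder turns back into audio.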

Real-World Applications of OpenAI’s Music Generation Model

So why does this matter? Because music generation models like this are not just a novelty: they point toward tools that are already reshaping how music is made across industries.

Here’s how people are already using AI-generated music:

Content Creators: Generate unique tracks for YouTube, TikTok, podcasts, and video games

Indie Artists: Prototype ideas without needing access to studios or session musicians

Film & Game Composers: Quickly mock up ambient soundtracks or character themes

Educators & Students: Use AI for learning music theory or experimenting with composition styles

How Does It Compare to Other AI Music Tools?

Let’s look at how the OpenAI music generation model compares with popular tools in the AI music space:

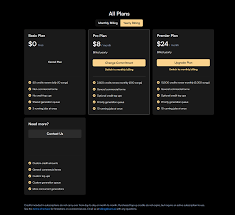

| Tool | Model | Generates Vocals? | Text-to-Music? | Free Access? |
|---|---|---|---|---|
| OpenAI Jukebox | VQ-VAE + transformers (open weights) | Yes | No (genre, artist, and lyrics conditioning) | Yes (code and weights) |
| Suno AI | Custom | Yes | Yes | Yes |
| Udio | Proprietary | Yes | Yes | Yes |
| SOUNDRAW | Template-based | No | Partial | Trial |
| AIVA | Symbolic (MIDI) | No | No | Yes |

Udio and Suno turn text prompts into finished songs quickly and are easy to access, though with fewer fine-grained customization options. OpenAI's work stands out mainly for its research depth and its potential multi-modal synergy with other OpenAI tools like Sora and DALL·E.

Is OpenAI’s Music Generation Model Open Source?

Jukebox is open source: OpenAI published its code and pre-trained weights, with the code on GitHub (github.com/openai/jukebox), so researchers can inspect the architecture and run the model themselves. Any newer OpenAI music model, by contrast, has not been released publicly.

Likely reasons include:

Ethical concerns (e.g., deepfake vocals of real artists)

Computational cost

Copyright complications

OpenAI has stated its commitment to aligning powerful models with responsible use, especially in creative industries.

Limitations of OpenAI’s Music Model

While impressive, the model does have limitations:

No real-time editing (yet): You can’t tweak individual notes or mix layers manually

Hard to control lyrics word-for-word

High computational demand means it’s not available for casual, widespread use

Limited access to current models beyond research demos or internal use
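To see why the computational demand is so high, count the tokens. The Jukebox paper describes 44.1 kHz audio compressed with hop lengths of 8, 32, and 128 samples per token at its bottom, middle, and top levels. The back-of-envelope sketch below assumes naive sampling where every token costs one sequential model step; real sampling uses windowing and caching, so treat the totals as an order of magnitude, not a benchmark.

```python
# Back-of-envelope sequence lengths for Jukebox-style hierarchical tokens.
# Figures from the Jukebox paper: 44.1 kHz audio, hop lengths of 8, 32 and
# 128 samples per token at the bottom, middle and top levels.

SAMPLE_RATE_HZ = 44_100
HOP_LENGTHS = {"bottom": 8, "middle": 32, "top": 128}


def tokens_for(seconds, hop_length):
    """Number of whole tokens needed to cover `seconds` of audio at one level."""
    return seconds * SAMPLE_RATE_HZ // hop_length


def report(seconds):
    """Print and return per-level token counts for a clip of `seconds`."""
    counts = {level: tokens_for(seconds, hop) for level, hop in HOP_LENGTHS.items()}
    for level, n in counts.items():
        print(f"{level:>6}: {n:,} tokens")
    # Assumption: naive autoregressive sampling, one model step per token.
    print(f" total: {sum(counts.values()):,} sequential steps")
    return counts


counts = report(60)  # one minute of audio
```

One minute works out to 330,750 bottom-level tokens alone, and 434,108 sequential steps across all three levels, each a full transformer forward pass. That is why sampling took hours rather than seconds.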

Still, ongoing improvements suggest that many of these gaps will be closed in future releases.

Conclusion: Why OpenAI’s Music Generation Model Is a Game-Changer

If you’ve been asking what the OpenAI music generation model is, the answer is this: OpenAI's released work, Jukebox, is a research system that generates complete songs as raw audio, vocals included, conditioned on genre, artist, and lyrics, and prompt-driven successors are where the field is heading. From emotional ballads to genre-bending audio experiments, this line of work is changing how music is composed, prototyped, and shared.

As OpenAI continues refining its approach—potentially integrating tools like DALL·E, Sora, and ChatGPT—expect music generation to become even more immersive, personalized, and accessible. While public access is still limited, the direction is clear: AI music is no longer a distant dream—it’s here, and it’s changing the industry one prompt at a time.

Frequently Asked Questions (FAQs)

Q1: Can I try OpenAI’s music generation model myself?

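You can experiment with Jukebox: samples are at openai.com/research/jukebox, and the code and weights are at github.com/openai/jukebox (running it requires a powerful GPU). No newer OpenAI music model is publicly available.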

Q2: Does it create lyrics too?

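Partly. Jukebox sings lyrics that you supply and learns a rough alignment between words and vocals, but it does not write the lyrics itself, and the sung words are often hard to make out. For a full vocal song, the lyrics have to come from you or another tool.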

Q3: Is OpenAI’s model better than Suno or Udio?

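There is no public, like-for-like comparison. Jukebox (2020) predates both tools and its output is generally noisier, and no newer OpenAI model is available to test. Suno and Udio are also far more accessible to general users as of 2025.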

Q4: What genres can it generate?

Nearly all major genres: pop, rock, EDM, classical, jazz, lo-fi, hip hop, ambient, and more.

Q5: Is this replacing human musicians?

Not entirely. It’s a tool for co-creation. Many artists use it to brainstorm, prototype, or enhance existing ideas—not to fully replace creativity.
