AI music is having a moment, and Suno AI is at the center of it. If you’ve ever come across a surprisingly catchy, fully produced song that was generated from a text prompt, there’s a good chance it came from Suno. But beyond the viral tracks and sleek interface, many creators are asking: how does Suno AI work?

In this article, we’ll break down the technology, model architecture, workflow, and user experience behind Suno—without the fluff. Whether you're an artist, developer, or AI enthusiast, this guide offers a detailed look at how Suno AI works and why it’s reshaping the way we think about songwriting.

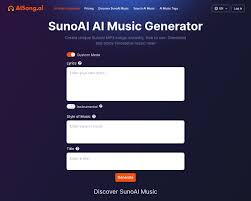

Suno AI is an AI-powered music creation platform that turns simple text prompts into fully composed, vocalized songs. You don’t need to play an instrument, understand music theory, or use a digital audio workstation (DAW). Just describe what you want—like “a pop song about space love” or “a jazzy birthday tune”—and within seconds, Suno generates a full-length track complete with instruments, vocals, structure, and mixing.

As of 2025, Suno has released V3 of its model, which significantly improves quality, vocal realism, and style adaptability.

The process behind Suno’s AI-generated music may seem magical, but it’s built on a combination of large-scale language models, music synthesis engines, and proprietary training techniques.

Let’s walk through the stages of how Suno AI works, from input to output.

When you type something into Suno—like:

“Create a synthpop song about a robot falling in love”

Suno’s system first uses natural language processing (NLP) to interpret your creative intent. It doesn’t just look at keywords like “synthpop” and “robot,” but tries to extract context:

Mood (e.g., upbeat, melancholic)

Genre and subgenre (e.g., synthpop, electro-pop)

Theme or story (e.g., AI romance)

Style references (if provided, like “in the style of The Weeknd”)

This input is parsed and formatted into a structured set of musical directives, similar to what a human producer might use when composing.
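To make the idea of "structured musical directives" concrete, here is a minimal sketch in Python. It is not Suno's parser, which is proprietary and presumably model-based; the `Directives` class, the `GENRES` and `MOODS` keyword tables, and the `parse_prompt` function are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical keyword tables; a real system would rely on a learned model.
GENRES = ("synthpop", "jazz", "punk", "r&b", "pop")
MOODS = {"love": "romantic", "missing": "melancholic", "birthday": "upbeat"}


@dataclass
class Directives:
    """Structured view of a free-text prompt (illustrative only)."""
    genre: str = "unspecified"
    mood: str = "neutral"
    theme: str = ""
    style_refs: list = field(default_factory=list)


def parse_prompt(prompt: str) -> Directives:
    text = prompt.lower()
    d = Directives()
    # Pick the first known genre mentioned in the prompt.
    d.genre = next((g for g in GENRES if g in text), d.genre)
    # Infer a mood from the first matching cue word.
    d.mood = next((m for cue, m in MOODS.items() if cue in text), d.mood)
    # Treat the text after "about" as the theme, stopping at a style clause.
    if " about " in text:
        d.theme = text.split(" about ", 1)[1].split(", in the style of")[0].strip()
    # Record an explicit style reference, if the prompt has one.
    marker = "in the style of "
    if marker in text:
        d.style_refs.append(text.split(marker, 1)[1].strip())
    return d


result = parse_prompt("Create a synthpop song about a robot falling in love")
print(result)
```

Keyword matching like this breaks down quickly on real prompts, which is why a production system would need an actual language model for this step. The useful part of the sketch is the output shape: a small record of genre, mood, theme, and style references that later stages can consume.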

Once the prompt is parsed, the model constructs the song structure, which often includes:

Intro

Verse

Chorus

Bridge

Outro

Suno’s system intelligently assigns chord progressions, tempo, and key based on the genre and mood. For example, a sad ballad might use a minor key and slower tempo, while a punk track would lean toward fast BPM and aggressive chords.

This part of the process is governed by transformer-based models trained on millions of real-world songs, allowing the AI to learn patterns in harmonic rhythm, melody phrasing, and genre-specific arrangements.
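The genre-and-mood mapping described above can be pictured as a lookup table, even though a trained model learns these tendencies statistically rather than from hand-written rules. The sketch below is a rule-based stand-in, not Suno's method; `STYLE_DEFAULTS`, `SongPlan`, `plan_song`, and the tempo values are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# A common pop layout built from the section types listed above.
SECTIONS = ["intro", "verse", "chorus", "verse", "chorus", "bridge", "chorus", "outro"]

# Hypothetical defaults per genre: (mode, tempo in beats per minute).
STYLE_DEFAULTS = {
    "ballad": ("minor", 70),
    "punk": ("major", 180),
    "synthpop": ("major", 118),
}


@dataclass
class SongPlan:
    sections: list
    mode: str
    bpm: int


def plan_song(genre: str, mood: str) -> SongPlan:
    # Fall back to a neutral mid-tempo major key for unknown genres.
    mode, bpm = STYLE_DEFAULTS.get(genre, ("major", 100))
    # A sad mood pushes the plan toward a minor key and a slower tempo.
    if mood == "sad":
        mode = "minor"
        bpm = min(bpm, 80)
    return SongPlan(list(SECTIONS), mode, bpm)


plan = plan_song("ballad", "sad")
print(plan.mode, plan.bpm, plan.sections)
```

A learned model replaces the table with probabilities, so it can produce a slow punk song or a major-key ballad when the prompt asks for one.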

Here’s where it gets impressive: Suno not only composes music—it also writes and performs lyrics.

Lyric Generation: Based on your prompt and song structure, Suno generates original lyrics using a fine-tuned language model, much like ChatGPT.

Vocal Synthesis: Then it uses a neural singing synthesis system, capable of mimicking different vocal timbres, accents, and emotions.

The vocal engine is based on diffusion models, a class of generative model that produces audio by starting from noise and refining it step by step into a natural-sounding voice. You might notice slight robotic undertones, but Suno’s V3 voices are remarkably expressive.

Suno uses a multi-track audio generation system to build instrumental layers. These are not pieced-together samples; they are synthesized by generative models trained on how instrumentation varies by genre.

Each layer—drums, bass, guitar, synth, strings—is generated to complement the others. The system automatically handles:

Instrument balance

Stereo placement (panning)

Reverb, EQ, and compression

Loudness normalization

In other words, Suno acts as composer, arranger, performer, and producer—all in one shot.
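For readers curious what the mixing steps above involve, here is a small pure-Python sketch of three of them: per-layer gain (balance), stereo panning, and level normalization. It is a generic illustration, not Suno's pipeline. The function names are hypothetical, and it uses simple peak normalization as a stand-in for true loudness normalization, which is normally measured in LUFS.

```python
import math


def pan(sample: float, position: float) -> tuple:
    """Constant-power pan. position runs from -1.0 (left) to 1.0 (right)."""
    angle = (position + 1.0) * math.pi / 4.0
    return sample * math.cos(angle), sample * math.sin(angle)


def mix(layers: list) -> list:
    """Sum layers into stereo frames. Each layer is (samples, gain, pan_position)."""
    length = max(len(samples) for samples, _, _ in layers)
    out = [[0.0, 0.0] for _ in range(length)]
    for samples, gain, position in layers:
        for i, x in enumerate(samples):
            left, right = pan(x * gain, position)
            out[i][0] += left
            out[i][1] += right
    return out


def peak_normalize(frames: list, target: float = 0.9) -> list:
    """Scale all frames so the largest absolute sample equals target."""
    peak = max(abs(v) for frame in frames for v in frame)
    if peak == 0:
        return frames
    scale = target / peak
    return [[v * scale for v in frame] for frame in frames]


# Toy mono layers: drums centered, bass panned halfway left.
drums = [1.0, -1.0, 0.5]
bass = [0.5, 0.5, 0.5]
mixed = peak_normalize(mix([(drums, 0.8, 0.0), (bass, 1.0, -0.5)]))
print(mixed)
```

Real mixes also apply EQ, compression, and reverb, which are filters rather than simple gains, but the same pattern holds: process each layer, sum them, then bring the result to a target level.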

Once everything is generated, the backend renders the track as high-quality audio (usually MP3 or WAV). Rendering is not tied to playback time: the system produces the finished file faster than real time, so a two-minute song does not take two minutes to generate.
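"Faster than real time" is often expressed as a real-time factor (RTF): generation time divided by audio duration. The numbers below are made up to show the arithmetic and are not measured Suno timings; `real_time_factor` is a hypothetical helper.

```python
def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Return generation time divided by audio length. Below 1.0 is faster than playback."""
    return generation_seconds / audio_seconds


# Illustrative figures only: a 120-second song generated in 30 seconds.
rtf = real_time_factor(generation_seconds=30.0, audio_seconds=120.0)
print(f"RTF = {rtf}")
```

An RTF of 0.25 would mean the system needs a quarter of the song's running time to produce it.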

Suno typically generates:

A 30-second sample (free-tier users)

A full 1–2 minute song (premium users)

Lyrics displayed in browser

Option to generate matching visuals or video (experimental)

This seamless user experience is powered by cloud GPU infrastructure, enabling rapid model inference and song delivery.

Suno isn’t the first AI music generator, but it is arguably one of the most complete available today. Here's how it compares:

| Feature | Suno AI | AIVA | Udio | Amper Music |
|---|---|---|---|---|
| Text-to-full-song | Yes | No | Yes | No |
| Vocal synthesis | Yes (multi-voice) | Limited | Yes | No vocals |
| Lyric generation | Yes | No | Yes | No |
| Real-time generation | Yes | Slower | Yes | Yes |
| User input flexibility | High | Low | High | Medium |

Suno’s biggest advantage? Fully integrated creativity from prompt to production.

Suno is widely used by:

Indie musicians testing ideas

Social media creators looking for quick audio

Educators teaching songwriting

Hobbyists without instruments

Brands creating custom audio

YouTubers needing fast, royalty-free music

As of May 2025, Suno has over 12 million registered users, with 1.5 million monthly active creators.

Prompt:

“Make a 90s-style R&B song about missing someone on a rainy day, in the style of Boyz II Men”

What Suno AI does:

Generates lyrics about longing and emotional vulnerability

Builds slow-jam chord progressions in a minor key

Uses soulful male vocals with vibrato and melisma

Adds soft drums, electric piano, and string pads

Mixes the track with lush reverb and a fade-out

The result? A surprisingly emotional AI song that feels nostalgic and personal.

While powerful, Suno does have limits:

No fine-grained MIDI editing

Vocals still not on par with real singers

Lyrics can sometimes feel generic

Not customizable beyond text prompts

However, with each version, these limitations are shrinking. The leap from V2 to V3 saw a significant upgrade in vocal quality and genre fidelity.

Knowing how Suno AI works gives you more creative control. By understanding what the system interprets from your prompt and how it processes each stage—from structure to vocal performance—you can craft better songs, experiment with genres, and even blend human creativity with machine precision.

As the tech evolves, expect Suno to integrate more interactivity, more voices, and even DAW-like editing tools. But even now, Suno represents a groundbreaking shift in how songs are written, produced, and shared—democratizing music creation in a way never seen before.

Q1: Is Suno free to use?

Yes, Suno offers a free tier with limited generations. Paid tiers allow more song credits and full-length tracks.

Q2: Can I download my Suno songs?

Yes, both audio and lyrics can be downloaded. Full MP3 quality is available even in the free plan.

Q3: Can I use Suno songs commercially?

Only with proper licensing. Songs made on the free tier are for non-commercial use by default, while Suno's paid plans include commercial use rights. Contact their team for business licensing beyond that.

Q4: Does Suno generate in multiple languages?

Currently, English is the primary language, though support for others may be in development.

Q5: Can I remix or edit Suno songs in a DAW?

Yes, you can import the MP3 into tools like FL Studio or Ableton, but there’s no MIDI or stem export yet.
