Introduction: Why Diffusion Models Are Changing AI Music Forever

The landscape of AI music is rapidly evolving, and diffusion models are leading this transformation. If autoregressive models were the workhorses of early AI music—predicting one note at a time—diffusion models are the modern architects, crafting entire songs with more realism, flexibility, and style control.

Creating AI music with diffusion models means using generative models that learn to "denoise" sound from randomness, gradually forming detailed, expressive music. This approach underpins tools such as Suno AI, Stable Audio, and Riffusion.

In this guide, you'll learn how these models work, which platforms to use, how to create music with them, and what their strengths and limitations are. If you're looking to stay ahead of the curve in music tech, this is where the future is headed.

What Are Diffusion Models in AI Music?

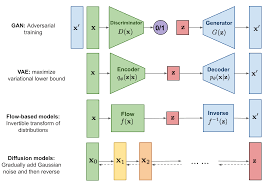

Diffusion models work by starting with noise—literally random audio or spectrograms—and iteratively refining it into structured sound. They’re trained to reverse the process of noise corruption, learning how to recreate meaningful patterns like beats, harmonies, and melodies from scratch.
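To make the noising and denoising idea concrete, here is a minimal toy sketch in plain Python. It is not how any production music model is implemented: the cosine noise schedule, the 64-sample sine wave standing in for audio, and the `oracle` denoiser (a hypothetical placeholder for a trained neural network that simply returns the target) are all assumptions made for illustration.

```python
import math
import random


def alpha_bar(t, steps):
    """Share of the clean signal left at step t (cosine schedule, an assumption)."""
    return math.cos(0.5 * math.pi * t / steps) ** 2


def add_noise(clean, t, steps, rng):
    """Forward process: blend the clean signal with Gaussian noise."""
    a = alpha_bar(t, steps)
    return [math.sqrt(a) * x + math.sqrt(1 - a) * rng.gauss(0, 1) for x in clean]


def reverse_diffusion(x, steps, denoiser):
    """Deterministic (DDIM-style) reverse process driven by a denoiser."""
    for t in range(steps, 0, -1):
        a_t, a_prev = alpha_bar(t, steps), alpha_bar(t - 1, steps)
        x0_hat = denoiser(x, t)  # the model's guess at the clean signal
        # Noise implied by that guess, then re-noise to the next (lower) level.
        scale = math.sqrt(max(1 - a_t, 1e-12))
        eps_hat = [(xi - math.sqrt(a_t) * x0) / scale for xi, x0 in zip(x, x0_hat)]
        x = [math.sqrt(a_prev) * x0 + math.sqrt(1 - a_prev) * e
             for x0, e in zip(x0_hat, eps_hat)]
    return x


# Toy "music": a short sine wave standing in for an audio clip.
clean = [math.sin(2 * math.pi * 4 * i / 64) for i in range(64)]
rng = random.Random(0)
noisy = add_noise(clean, t=50, steps=50, rng=rng)

# Hypothetical stand-in for a trained network: it simply returns the target.
oracle = lambda x, t: clean
restored = reverse_diffusion(noisy, steps=50, denoiser=oracle)

error = max(abs(a - b) for a, b in zip(restored, clean))
print(f"max error after denoising: {error:.2e}")
```

In a real system the denoiser is a large network that has never seen the target clip and is steered by a text prompt, so the result is new audio rather than a reconstruction. The loop structure (start from noise, repeatedly estimate the clean signal, step to a lower noise level) is the part that carries over.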

Key to their power is their ability to generate high-quality audio with fine control over tempo, genre, emotion, and even lyrics (in multimodal models).

Key Features of Diffusion-Based Music Generators

High-Fidelity Audio Generation

Models like Stable Audio and Suno AI output finished audio that already sounds mixed and mastered, rather than notes or stems that still need production work.

Text-to-Music Control

You can input text prompts like “dark cinematic ambient with strings” and receive music that matches the description.

Supports dynamic control over genre, mood, tempo, and instrumentation.

Fast Inference Time (for Music)

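Autoregressive models generate audio one token at a time, so the number of sequential steps grows with the length of the track. Diffusion models instead refine the whole clip in parallel over a fixed number of denoising steps.

Generation time therefore depends less on track length, and early mistakes are less likely to compound into loops or drift.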
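The difference in sequential work can be sketched with rough arithmetic. The figures below (50 audio tokens per second for the autoregressive model, 100 denoising steps for the diffusion model) are illustrative assumptions, not measurements from any specific tool.

```python
def autoregressive_passes(duration_s, tokens_per_second):
    """One sequential model pass per generated token."""
    return round(duration_s * tokens_per_second)


def diffusion_passes(denoising_steps):
    """One pass per denoising step, regardless of clip length."""
    return denoising_steps


TOKENS_PER_SECOND = 50   # assumed token rate
DENOISING_STEPS = 100    # assumed sampler setting

for seconds in (10, 30, 90):
    ar = autoregressive_passes(seconds, TOKENS_PER_SECOND)
    diff = diffusion_passes(DENOISING_STEPS)
    print(f"{seconds:>3}s clip: {ar} sequential passes vs {diff} denoising passes")
```

Each denoising pass processes the whole clip at once, so a single pass costs more than a single token prediction. Fewer passes does not automatically mean less total compute, only less work that has to happen strictly one step after another.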

Multimodal Inputs

Some models allow combining audio and text input or even visual references (spectrograms) to influence output.

Open-Source and Commercial Options

Models like Riffusion are open-source.

Tools like Suno AI and Stability AI’s Stable Audio offer polished, user-friendly platforms.

Popular Diffusion Models That Can Create AI Music

1. Stable Audio (by Stability AI)

Converts text prompts into high-quality audio.

Generated tracks of up to 90 seconds at launch, with longer durations in later versions.

Handles genres like EDM, cinematic, ambient, jazz, and more.

Great for creators needing royalty-free music quickly.

2. Suno AI

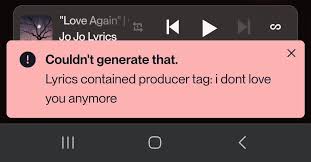

Text-to-music and lyric-to-song generation.

Accepts lyrics, genre, tempo, mood as inputs.

Known for full-song generation with realistic vocals.

Excellent for creators without music production experience.

3. Riffusion

Converts text prompts into music using spectrogram diffusion.

Free and open-source.

Generates short musical loops—great for beatmakers.
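For readers unfamiliar with spectrograms, the sketch below shows what one is: a grid of frequency strengths over time, which is the kind of "image" a spectrogram-based model learns to generate. This is a plain-Python illustration, not Riffusion's code; the frame size, hop length, and 8 kHz test tone are assumptions chosen to keep the example small.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return one list of frequency magnitudes per overlapping frame."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        # A Hann window softens the frame edges before the transform.
        windowed = [s * (0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size))
                    for n, s in enumerate(frame)]
        mags = []
        for k in range(frame_size // 2 + 1):
            # Direct discrete Fourier transform for frequency bin k.
            acc = sum(w * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n, w in enumerate(windowed))
            mags.append(abs(acc))
        frames.append(mags)
    return frames


SAMPLE_RATE = 8000  # assumed sample rate for the toy signal
tone = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(256)]
spec = spectrogram(tone)

# The loudest bin in each frame, converted back to a frequency in Hz.
peak_bin = max(range(len(spec[0])), key=spec[0].__getitem__)
print("frames:", len(spec), "bins per frame:", len(spec[0]))
print("peak frequency (Hz):", peak_bin * SAMPLE_RATE / 64)
```

A spectrogram-based generator produces a grid like `spec` and then needs a separate step to turn it back into a waveform, which is one reason this approach suits short loops.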

4. Dance Diffusion (Harmonai)

Focused on electronic and dance music.

Runs diffusion directly on raw audio waveforms rather than on spectrograms.

Still experimental but promising for loop producers and DJs.

Pros and Cons of Diffusion Models for AI Music Creation

| Pros | Cons |
|---|---|
| High-quality audio output | Large model sizes require powerful hardware |
| Fast and parallel generation | May lack fine-grained note-level editing |
| Multimodal input support (text, audio, lyrics) | Outputs can be unpredictable without prompt tuning |
| Scalable and adaptable | Fewer tools for live, real-time generation |
| Royalty-free output on many platforms | Editing generated audio can be harder than MIDI |

Use Cases: Who Should Use Diffusion Models?

Content Creators

Generate cinematic background music or catchy theme tunes in minutes.

Musicians and Producers

Use as a starting point for loops, melodies, or even vocal hooks.

Filmmakers and Game Developers

Generate scoring elements tailored to scenes or moods with descriptive prompts.

Podcasters and Streamers

Create intro/outro music that fits your brand style without hiring composers.

Educators and Students

Use AI music as a tool to explore sound design, genre structure, and prompt engineering.

How to Create AI Music with Diffusion Models

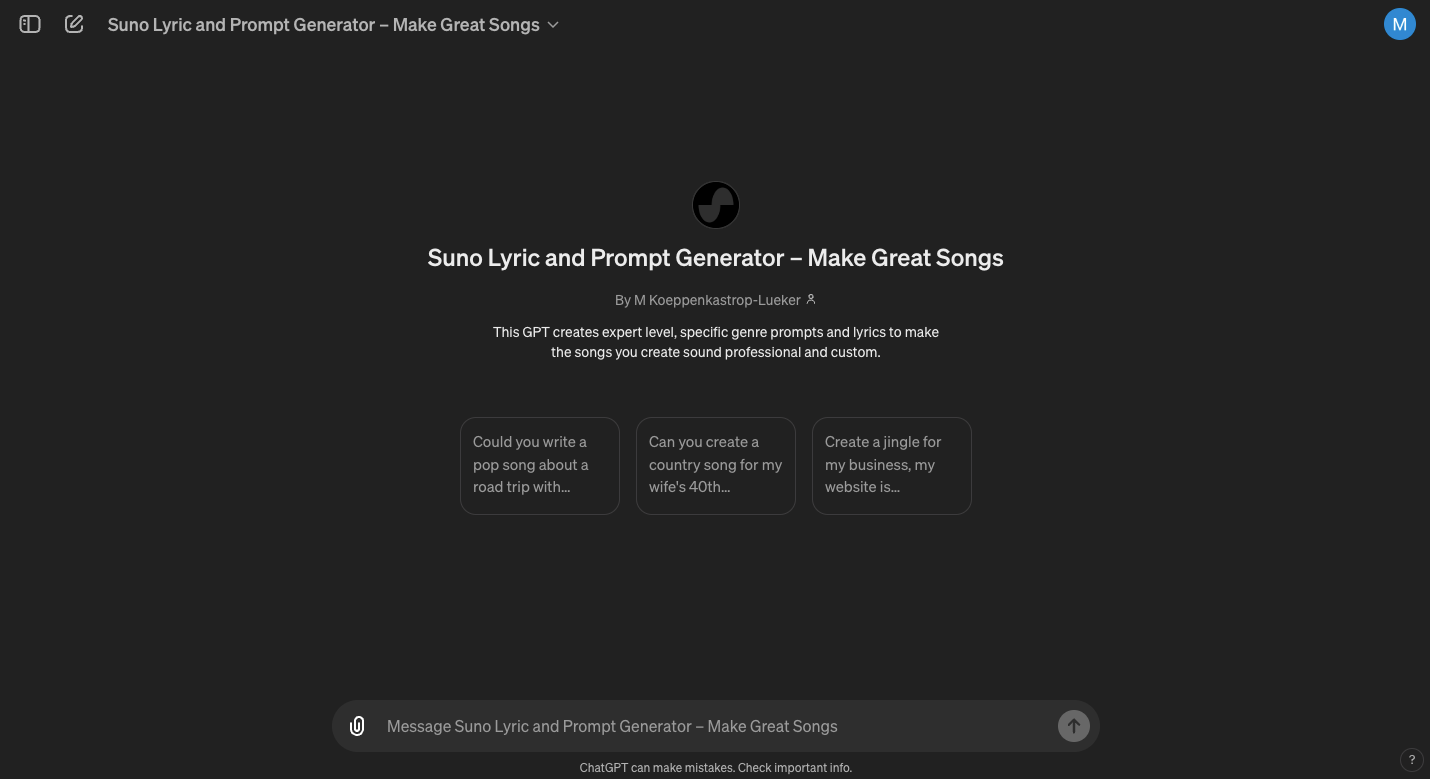

Step 1: Choose Your Platform

For professional quality and simplicity:

Suno AI (https://suno.ai) or Stable Audio (https://www.stableaudio.com)

For open-source exploration:

Riffusion (https://www.riffusion.com)

Step 2: Write Your Prompt

Good prompts are key to quality. Be specific about genre, mood, and instrumentation.

Examples:

“Dreamy lofi hip hop beat with vinyl crackle and soft piano”

“High-energy 80s synthwave with male vocals”

“Dark ambient cinematic track with drones and strings”
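If you generate many variations, it can help to assemble prompts from named parts so each one covers mood, genre, and instrumentation. The helper below is a hypothetical convenience function, not part of any platform's API; it only builds the text you would paste into a prompt box.

```python
def join_items(items):
    """Join words as 'a', 'a and b', or 'a, b and c'."""
    items = list(items)
    if len(items) <= 1:
        return "".join(items)
    return ", ".join(items[:-1]) + " and " + items[-1]


def build_prompt(genre, mood=None, details=()):
    """Compose a prompt in the form '<Mood> <genre> with <details>'."""
    text = f"{mood} {genre}" if mood else genre
    if details:
        text += " with " + join_items(details)
    # Capitalize only the first character; leave the rest untouched.
    return text[0].upper() + text[1:]


print(build_prompt("lofi hip hop beat", mood="dreamy",
                   details=["vinyl crackle", "soft piano"]))
print(build_prompt("80s synthwave", mood="high-energy",
                   details=["male vocals"]))
```

Swapping a single part, such as the mood, while keeping the rest fixed makes it easier to hear what each word in the prompt actually changes.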

Step 3: Adjust Parameters

Depending on the platform, you can specify:

Track length

BPM (beats per minute)

Genre

Instruments

Mood or emotion
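Track length and BPM interact: if you want a loop that ends cleanly on a bar line, the length should be a whole number of bars. The sketch below shows that arithmetic. The `TrackSettings` class, its field names, and the 40 to 240 BPM sanity range are assumptions for illustration and do not mirror any platform's real settings.

```python
from dataclasses import dataclass


@dataclass
class TrackSettings:
    """Hypothetical container for the parameters listed above."""
    prompt: str
    length_seconds: float = 30.0
    bpm: int = 120
    beats_per_bar: int = 4

    def __post_init__(self):
        if self.length_seconds <= 0:
            raise ValueError("length_seconds must be positive")
        if not 40 <= self.bpm <= 240:  # assumed sanity range
            raise ValueError("bpm outside the assumed 40-240 range")

    def bars(self):
        """How many bars fit into the requested length."""
        beats = self.length_seconds * self.bpm / 60
        return beats / self.beats_per_bar


def seconds_for_bars(bars, bpm, beats_per_bar=4):
    """Length in seconds needed for a whole number of bars."""
    return bars * beats_per_bar * 60 / bpm


settings = TrackSettings("Dreamy lofi hip hop beat", length_seconds=30, bpm=120)
print("bars in 30 s at 120 BPM:", settings.bars())
print("seconds for 16 bars at 90 BPM:", round(seconds_for_bars(16, 90), 2))
```

Not every platform exposes BPM directly. Where it does not, stating the tempo in the prompt and trimming to a bar boundary afterwards is a common workaround.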

Step 4: Generate and Review

Listen to your AI-generated music. Most platforms allow you to regenerate if the result isn’t quite right.

Step 5: Download and Edit

Export your music file (usually MP3 or WAV). You can further tweak it in a DAW such as FL Studio or Logic Pro, or in an audio editor such as Audacity.
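Before importing a download into your editor, it can be useful to check its sample rate, channel count, and length. The sketch below uses Python's standard `wave` module, which reads uncompressed PCM WAV files only (not MP3). The `write_tone` helper and the file names are assumptions, included just so the example has a file to inspect.

```python
import math
import struct
import wave


def write_tone(path, seconds=1.0, sample_rate=44100, freq=440.0):
    """Write a mono 16-bit sine tone, used here only as a stand-in for an export."""
    n_frames = int(seconds * sample_rate)
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        frames = b"".join(
            struct.pack("<h", int(12000 * math.sin(2 * math.pi * freq * i / sample_rate)))
            for i in range(n_frames)
        )
        wav.writeframes(frames)


def describe_wav(path):
    """Return basic properties of a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_rate": rate,
            "bit_depth": wav.getsampwidth() * 8,
            "duration_seconds": wav.getnframes() / rate,
        }
```

Calling `describe_wav("my_track.wav")` on a downloaded file (the name is a placeholder) returns a small dictionary you can compare against your project settings, for example to spot a sample-rate mismatch before you start editing.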

Comparison Table: Diffusion vs Autoregressive Models in AI Music

| Feature | Diffusion Models | Autoregressive Models |
|---|---|---|
| Output Style | Full waveform or spectrogram | Symbolic (MIDI) or waveform |
| Generation Method | Parallel, iterative denoising | Sequential prediction |
| Speed | Fast; a fixed number of denoising steps | Slower for long outputs |
| Quality | High-fidelity, finished-sounding audio | Depends on model and token length |
| Input | Text prompts, audio, spectrogram | Notes, chords, lyrics, genre |
| Best For | Realistic audio tracks, sound design | Editable music, theory-based outputs |

FAQ: Diffusion Models in AI Music

Q: Are AI-generated songs using diffusion models royalty-free?

Often, yes. Platforms such as Stable Audio and Riffusion allow royalty-free use, but the terms vary by platform and plan, so always check the specific license.

Q: Can diffusion models create full songs with vocals?

Yes. Tools like Suno AI can generate complete songs, including lyrics and vocal performances.

Q: Do I need to know music theory to use these models?

Not at all. Just describe what you want, and the AI handles the rest. However, a musical ear helps in refining prompts and editing.

Q: Can I use these tools commercially?

Most platforms offer commercial licenses or royalty-free use. Review the terms of use before publishing your music for sale or distribution.

Q: How is the quality compared to real human composers?

For background, mood-based, or loop music—very close. For complex orchestration or nuanced dynamics, human composers still hold the edge.

Conclusion: Why You Should Try Creating Music with Diffusion Models Today

To create AI music with diffusion models is to enter the next generation of digital sound creation. These tools offer unmatched convenience, high-quality audio, and wide creative freedom—perfect for creators who need music on demand without compromise.

While they may not replace traditional composers, they empower artists, developers, and hobbyists to explore musical ideas in ways never before possible. Whether you're building a game, producing YouTube content, or just experimenting, diffusion models make professional music generation accessible to all.
