As AI music tools evolve, creators are no longer restricted to proprietary platforms or limited outputs. Enter Meta MusicGen, an open-source AI model released by Meta (formerly Facebook) that generates music from text prompts. Unlike closed tools like Google’s MusicLM or premium generators like AIVA, MusicGen is freely accessible, customizable, and usable on your own machine or in the cloud.

But is it really powerful enough to be useful for musicians, producers, or AI enthusiasts? This Meta MusicGen review takes a close look at its performance, features, use cases, and how it compares with top contenders like Suno, Google MusicLM, and AIVA.

What is Meta MusicGen?

Meta MusicGen is a transformer-based AI music generation model that takes in text (or text + melody) and outputs high-fidelity musical audio. It was introduced in June 2023 by Meta’s FAIR (Fundamental AI Research) team as part of the AudioCraft library. The code is released under the MIT license and the pretrained weights under CC-BY-NC 4.0. That openness makes it one of the most developer-friendly tools in the AI music ecosystem, although the official weights are restricted to non-commercial use.

The model is trained on 20,000 hours of licensed music, drawn from an internal dataset and from the Shutterstock and Pond5 music libraries, and is designed to handle natural language descriptions, such as:

“Upbeat tropical dance music with synths and steel drums.”

It generates clips of up to 30 seconds in a single pass and supports both text-only and melody-conditioned generation (the latter through the melody variant).
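The text-only workflow can be tried through the Hugging Face transformers port of MusicGen. The sketch below is a minimal example, not Meta’s reference code: it assumes transformers, torch, and scipy are installed and that the facebook/musicgen-small checkpoint can be downloaded. The helpers seconds_to_tokens and text_to_music are hypothetical, and the 50-tokens-per-second conversion is an assumption based on 32 kHz audio and a 640-sample EnCodec hop, so clip lengths are approximate.

```python
FRAME_RATE_HZ = 50   # assumption: 32 kHz audio / 640-sample EnCodec hop = 50 frames per second
MAX_SECONDS = 30.0   # single-pass limit described in this review


def seconds_to_tokens(seconds: float) -> int:
    """Convert a target clip length into a max_new_tokens budget (hypothetical helper)."""
    if not 0 < seconds <= MAX_SECONDS:
        raise ValueError(f"seconds must be in (0, {MAX_SECONDS}], got {seconds}")
    return int(round(seconds * FRAME_RATE_HZ))


def text_to_music(prompt: str, seconds: float = 8.0,
                  checkpoint: str = "facebook/musicgen-small",
                  out_path: str = "musicgen_out.wav") -> str:
    """Generate one clip from a text prompt with Hugging Face transformers."""
    max_new_tokens = seconds_to_tokens(seconds)  # validate before any download

    # Deferred imports: only needed when audio is actually generated.
    import scipy.io.wavfile
    from transformers import AutoProcessor, MusicgenForConditionalGeneration

    processor = AutoProcessor.from_pretrained(checkpoint)
    model = MusicgenForConditionalGeneration.from_pretrained(checkpoint)

    inputs = processor(text=[prompt], padding=True, return_tensors="pt")
    # Output shape: [batch, channels, samples]
    audio = model.generate(**inputs, do_sample=True, guidance_scale=3,
                           max_new_tokens=max_new_tokens)

    rate = model.config.audio_encoder.sampling_rate
    scipy.io.wavfile.write(out_path, rate=rate, data=audio[0, 0].cpu().numpy())
    return out_path
```

For example, text_to_music("Upbeat tropical dance music with synths and steel drums.", seconds=10) should write roughly ten seconds of audio to musicgen_out.wav. The model imports are deferred so the length check runs before any large download.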


Core Features of Meta MusicGen

Text-to-Music Generation: Enter descriptive prompts to generate musical clips.

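Melody Conditioning: Supply a reference melody as an audio file (e.g., a .wav) to guide the generation. MusicGen extracts a chromagram from the audio, so MIDI files are not used directly.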

Open-Source: Part of Meta’s AudioCraft repository on GitHub and built on PyTorch; it can be fine-tuned or self-hosted.

Four Model Variants:

melody: Supports both text and melody inputs (about 1.5B parameters).

large: Best quality, largest parameter count (about 3.3B).

medium: Mid-size model (about 1.5B parameters) for faster generation.

small: Lightweight model (about 300M parameters) for local testing or quick prototyping.

High Audio Quality: 32 kHz sample rate, suitable for demos and production testing.
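Melody conditioning with a chosen variant can be sketched with Meta’s AudioCraft Python API. This is a minimal sketch, assuming audiocraft and torchaudio are installed; MusicGen.get_pretrained, set_generation_params, and generate_with_chroma are AudioCraft calls, while VARIANTS, checkpoint_for, and generate_with_melody are hypothetical helpers written for this review. The melody is passed as audio, not MIDI.

```python
from pathlib import Path
from typing import Union

# Checkpoint ids for the four original variants (approximate parameter counts).
VARIANTS = {
    "small": "facebook/musicgen-small",    # ~300M
    "medium": "facebook/musicgen-medium",  # ~1.5B
    "large": "facebook/musicgen-large",    # ~3.3B
    "melody": "facebook/musicgen-melody",  # ~1.5B, accepts text + melody
}


def checkpoint_for(variant: str) -> str:
    """Map a short variant name to its pretrained checkpoint id."""
    if variant not in VARIANTS:
        raise ValueError(f"unknown variant {variant!r}; expected one of {sorted(VARIANTS)}")
    return VARIANTS[variant]


def generate_with_melody(prompt: str, melody_path: Union[str, Path],
                         duration: float = 8.0):
    """Text + melody generation with the melody variant.

    Returns (waveform tensor [channels, samples], sample_rate).
    """
    # Deferred imports so the helpers above work without AudioCraft installed.
    import torchaudio
    from audiocraft.models import MusicGen

    model = MusicGen.get_pretrained(checkpoint_for("melody"))
    model.set_generation_params(duration=duration)

    # Reference melody as audio (e.g. .wav): [channels, samples]
    melody, sr = torchaudio.load(str(melody_path))
    # generate_with_chroma expects a batch dimension: [batch, channels, samples]
    wav = model.generate_with_chroma([prompt], melody[None], sr)
    return wav[0].cpu(), model.sample_rate
```

Swapping checkpoint_for("melody") for another variant only works with text-only generate calls, since the other three checkpoints do not take a melody.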

Meta MusicGen vs Competitors

| Feature | Meta MusicGen | Suno AI | Google MusicLM | AIVA |
|---|---|---|---|---|
| Open Source | Yes | No | No | No |
| Prompt Type | Text, Melody | Text | Text | Templates + Edits |
| Output Length | Up to 30 sec per pass | 1–2 mins | 2 mins | Unlimited (manual) |
| Audio Quality | High (32 kHz) | Medium-High | Very High | High (manual export) |
| Commercial Use | No (weights CC-BY-NC 4.0; code MIT) | Pro plans | Research only | With license |
| Interface | Python API, Gradio demo | Web GUI | Experimental App | Web GUI + Editor |

What makes Meta MusicGen stand out is its transparency: users can read the model card, see which data sources it was trained on, and even fine-tune it on their own music datasets. That flexibility is rare in a field increasingly dominated by closed systems.

Pros of Meta MusicGen

Open-source code (MIT license) and freely downloadable pretrained weights.

Accepts both text and melody inputs, enabling creative control.

Allows for offline generation on personal hardware (with enough compute).

Integrates with developer workflows and other AI pipelines through its Python API, and its WAV output can be imported into any DAW.

Smaller variants such as small generate quickly, which suits rapid iteration.

Cons of Meta MusicGen

Requires technical setup: Python and PyTorch, with a GPU strongly recommended.

No polished GUI; non-coders rely on the bundled Gradio demo or hosted apps (like Hugging Face Spaces).

A single generation pass is capped at 30 seconds; longer clips need windowed continuation, which limits full-track creation.

No vocals; instrumental generation only.
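The 30-second cap applies to a single pass. AudioCraft’s set_generation_params also accepts an extend_stride argument for longer durations: the model slides its 30-second window forward and keeps the tail of the previous window as context. The sketch below assumes AudioCraft is installed; generate_long and estimated_passes are hypothetical helpers, and the pass count is a back-of-the-envelope estimate of extra compute, not an AudioCraft API.

```python
import math


def estimated_passes(duration: float, max_window: float = 30.0,
                     extend_stride: float = 18.0) -> int:
    """Rough number of model passes needed for a clip of `duration` seconds.

    Assumes the first pass yields max_window seconds and every later pass
    adds extend_stride new seconds, reusing the rest of the window as context.
    """
    if duration <= 0:
        raise ValueError("duration must be positive")
    if not 0 < extend_stride < max_window:
        raise ValueError("extend_stride must be between 0 and max_window")
    if duration <= max_window:
        return 1
    return 1 + math.ceil((duration - max_window) / extend_stride)


def generate_long(prompt: str, duration: float = 60.0, extend_stride: float = 18.0):
    """Generate beyond 30 s using AudioCraft's windowed continuation."""
    estimated_passes(duration, extend_stride=extend_stride)  # validate inputs first
    from audiocraft.models import MusicGen  # deferred: heavy dependency

    model = MusicGen.get_pretrained("facebook/musicgen-small")
    # extend_stride sets how many new seconds each extra window contributes.
    model.set_generation_params(duration=duration, extend_stride=extend_stride)
    wav = model.generate([prompt])  # [batch, channels, samples]
    return wav[0].cpu(), model.sample_rate
```

Under these assumptions a 60-second clip needs about three passes, so it costs roughly three times the compute of a single 30-second clip.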

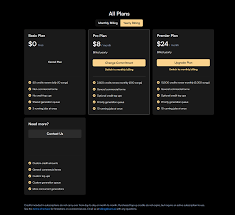

Pricing: What Does Meta MusicGen Cost?

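Meta MusicGen is free to download and run. There are:

No subscriptions.

No usage caps.

No license fees.

The code is MIT-licensed, but the pretrained weights are released under CC-BY-NC 4.0, which restricts them to non-commercial use. Check those terms before using MusicGen for commercial music projects or monetized YouTube background tracks. You can also train the model on your own datasets, provided you credit any third-party datasets you use.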

However, cloud-based platforms hosting MusicGen (like Hugging Face) may charge for compute usage or API integration.

Frequently Asked Questions

Is Meta MusicGen suitable for beginners?

Not entirely. It is developer-focused, but online demos (like Hugging Face Spaces) let non-technical users try it easily.

Can I generate vocals with Meta MusicGen?

No. MusicGen is instrumental only. It doesn’t support lyrics, singing, or vocal synthesis.

How long does it take to generate music?

On a GPU, a short clip typically takes 10–20 seconds, depending on clip length, model size, and hardware. On a CPU, it can take several minutes.
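Because timings vary so much by hardware, it is worth measuring on your own machine. A minimal sketch, assuming AudioCraft is installed: MusicGen.get_pretrained places the model on a GPU when one is available and falls back to CPU otherwise. benchmark and real_time_factor are hypothetical helpers, and model loading is deliberately excluded from the timing.

```python
import time


def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Seconds of compute per second of audio (below 1.0 means faster than real time)."""
    if audio_seconds <= 0:
        raise ValueError("audio_seconds must be positive")
    return generation_seconds / audio_seconds


def benchmark(checkpoint: str = "facebook/musicgen-small", duration: float = 10.0):
    """Time one generation; returns (elapsed seconds, real-time factor)."""
    from audiocraft.models import MusicGen  # deferred heavy import

    model = MusicGen.get_pretrained(checkpoint)  # loading is not timed
    model.set_generation_params(duration=duration)

    start = time.perf_counter()
    # .cpu() forces any pending GPU work to finish before the clock stops
    model.generate(["Upbeat tropical dance music with synths and steel drums."]).cpu()
    elapsed = time.perf_counter() - start
    return elapsed, real_time_factor(elapsed, duration)
```

Running benchmark() once per variant gives a like-for-like comparison of small, medium, and large on the same machine.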

Can I host MusicGen locally?

Yes. If you have a modern GPU and some technical knowledge, you can install AudioCraft (via pip or by cloning the GitHub repo) and generate music locally.
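Local text-to-music generation with AudioCraft can be sketched as follows, assuming PyTorch and audiocraft are already installed. MusicGen.get_pretrained, set_generation_params, generate, and audio_write are AudioCraft functions; generate_locally, safe_stem, and the outputs directory name are hypothetical choices for this example.

```python
from pathlib import Path
from typing import List, Sequence


def safe_stem(prompt: str, index: int, max_len: int = 40) -> str:
    """Build a filesystem-friendly file stem from a prompt (hypothetical helper)."""
    slug = "".join(c.lower() if c.isalnum() else "_" for c in prompt)
    slug = "_".join(part for part in slug.split("_") if part)  # collapse underscores
    return f"{index:02d}_{slug[:max_len] or 'clip'}"


def generate_locally(prompts: Sequence[str], duration: float = 8.0,
                     checkpoint: str = "facebook/musicgen-small",
                     out_dir: str = "outputs") -> List[Path]:
    """Text-to-music with AudioCraft, writing one loudness-normalized WAV per prompt."""
    from audiocraft.data.audio import audio_write
    from audiocraft.models import MusicGen

    model = MusicGen.get_pretrained(checkpoint)
    model.set_generation_params(duration=duration)
    wavs = model.generate(list(prompts))  # [batch, channels, samples]

    Path(out_dir).mkdir(parents=True, exist_ok=True)
    paths = []
    for i, (prompt, wav) in enumerate(zip(prompts, wavs)):
        stem = Path(out_dir) / safe_stem(prompt, i)
        # audio_write appends the .wav suffix to the stem by default
        audio_write(str(stem), wav.cpu(), model.sample_rate, strategy="loudness")
        paths.append(stem.with_suffix(".wav"))
    return paths
```

Batching several prompts into one generate call is usually more efficient on a GPU than looping over them one at a time.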

Is MusicGen better than Suno or AIVA?

It depends on your use case:

Choose Suno for easy vocal track generation.

Choose AIVA for classical composition workflows.

Choose MusicGen for open access, full control, and experimentation.

Conclusion: Should You Use Meta MusicGen in 2025?

If you’re an AI enthusiast, developer, or experimental musician, Meta MusicGen is a must-try tool. Its open-source philosophy, flexible input support, and surprisingly good audio quality make it one of the most accessible and transparent music AI tools available in 2025.

While it lacks a polished UI or long-form generation capabilities, its strength lies in how much control and freedom it gives the user. Whether you're building a music app or experimenting with new genres, MusicGen is a serious contender in the AI music space.
