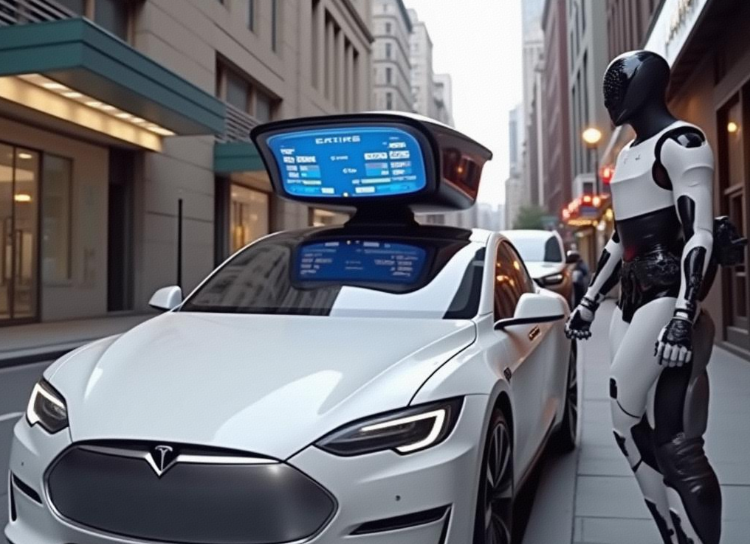

Tesla's Optimus SDK expansion is making waves in the robotics community, especially with its new Perception APIs designed to supercharge factory automation. These cutting-edge tools promise to streamline workflows, enhance precision, and enable smarter human-robot collaboration. Whether you're a developer, engineer, or automation enthusiast, this guide dives deep into how the SDK works, its real-world applications, and actionable tips to get started.

What's New in the Tesla Optimus SDK Expansion?

Tesla's latest SDK update introduces advanced Perception APIs that redefine how Optimus interacts with its environment. Built on the backbone of Tesla's FSD (Full Self-Driving) system, these APIs integrate real-time vision, LiDAR, and tactile feedback to enable tasks like object recognition, path planning, and dynamic obstacle avoidance.

Key Features

Multi-Sensor Fusion: Combine camera, LiDAR, and force-torque sensor data for millimeter-level accuracy in object manipulation.

Real-Time Semantic Mapping: Create dynamic 3D maps of factories to adapt to changing layouts or obstacles.

Collaborative AI: Enable multiple Optimus units to share environmental data and coordinate tasks seamlessly.

For developers, this means writing code that leverages these APIs to automate complex workflows—from sorting parts to quality control inspections.
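
To make the multi-sensor fusion idea concrete, here is a minimal sketch of inverse-variance weighting, a standard way to combine independent noisy estimates of the same quantity. It is an illustration only and not the SDK's fusion method, which this guide does not describe; the function name `fuse_estimates` and the sample readings are assumptions.

```python
from typing import Sequence, Tuple


def fuse_estimates(measurements: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance. Assumes the measurements are
    independent estimates of the same quantity. Hypothetical helper, not an
    SDK function.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = []
    for _, variance in measurements:
        if variance <= 0:
            raise ValueError("variance must be positive")
        weights.append(1.0 / variance)
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Made-up distance readings in millimetres: (value, variance).
    camera = (502.0, 4.0)
    lidar = (500.0, 1.0)
    value, variance = fuse_estimates([camera, lidar])
    print(f"fused distance: {value:.2f} mm, variance: {variance:.2f}")
```

The less noisy sensor dominates the result, and the fused variance is smaller than either input variance, which is the basic reason fusing sensors can improve accuracy.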

How to Leverage the New Perception APIs

Step 1: Install the SDK and Dependencies

Start by downloading the latest Optimus SDK from Tesla's developer portal. Ensure your system meets the minimum requirements:

- OS: Linux (Ubuntu 20.04+) or Windows 10/11
- Hardware: NVIDIA GPU (for AI inference) + 16 GB RAM
- Libraries: Python 3.8+, ROS (Robot Operating System)
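
Before installing, part of the requirements list can be checked with a short script. The sketch below uses only the Python standard library and verifies the Python version and whether the `nvidia-smi` tool is on the PATH. It does not check RAM or ROS, and the function name `check_environment` is an assumption, not part of the SDK.

```python
import platform
import shutil
import sys

MIN_PYTHON = (3, 8)


def check_environment(version_info=sys.version_info, which=shutil.which):
    """Return a list of human-readable problems; an empty list means all checks passed.

    Hypothetical pre-install helper, not an SDK function.
    """
    problems = []
    if tuple(version_info[:2]) < MIN_PYTHON:
        problems.append("Python 3.8 or newer is required")
    # nvidia-smi ships with the NVIDIA driver; if it is missing, the driver probably is too.
    if which("nvidia-smi") is None:
        problems.append("nvidia-smi not found on PATH (NVIDIA driver missing?)")
    return problems


if __name__ == "__main__":
    print(f"OS: {platform.system()} {platform.release()}")
    for problem in check_environment() or ["no problems found"]:
        print(problem)
```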

Step 2: Configure Sensor Calibration

The Perception APIs rely on calibrated sensors. Use Tesla's SensorCalibration Toolkit to align cameras and LiDAR:

1. Run calibrate_sensors.py in the SDK directory.
2. Follow the on-screen prompts to position reference objects.
3. Save calibration data to ~/.optimus/config/sensors.yaml.

Pro Tip: Recalibrate sensors weekly or after environmental changes (e.g., lighting shifts).

Step 3: Integrate Semantic Mapping

Enable real-time mapping with the SemanticMapper class. It generates a dynamic map that updates as objects move or new obstacles appear.
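
The idea of a map that updates as objects move can be sketched with a sparse grid that stores the latest semantic label per cell. The `ToySemanticMap` class below is a self-contained illustration with hypothetical names; it is not the SDK's SemanticMapper, whose interface is not shown in this guide.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Cell = Tuple[int, int, int]


@dataclass
class ToySemanticMap:
    """Sparse 3D grid mapping cell indices to a semantic label (illustrative only)."""

    cell_size_m: float = 0.25
    cells: Dict[Cell, str] = field(default_factory=dict)

    def _to_cell(self, x: float, y: float, z: float) -> Cell:
        # Floor-divide metric coordinates into integer grid indices.
        size = self.cell_size_m
        return (int(x // size), int(y // size), int(z // size))

    def observe(self, x: float, y: float, z: float, label: Optional[str]) -> None:
        """Record the latest observation; a label of None marks the cell as free."""
        cell = self._to_cell(x, y, z)
        if label is None:
            self.cells.pop(cell, None)
        else:
            self.cells[cell] = label

    def label_at(self, x: float, y: float, z: float) -> Optional[str]:
        """Return the label stored for the cell containing the point, if any."""
        return self.cells.get(self._to_cell(x, y, z))


if __name__ == "__main__":
    world = ToySemanticMap()
    world.observe(1.0, 2.0, 0.0, "pallet")   # a pallet is seen
    print(world.label_at(1.1, 2.2, 0.1))     # same 0.25 m cell as the observation
    world.observe(1.0, 2.0, 0.0, None)       # the pallet was moved away
    print(world.label_at(1.1, 2.2, 0.1))
```

Storing only occupied cells keeps memory proportional to the number of observed objects rather than to the size of the factory floor.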

Step 4: Code Object Recognition Tasks

Use the ObjectDetector API to identify and sort items.
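
The sorting half of this step can be sketched without any detector: given labeled detections with confidence scores, route each item to a bin and send uncertain or unknown items to manual review. The `Detection` type, the `sort_into_bins` function, the bin names, and the 0.8 threshold are all assumptions for illustration; they do not reflect the ObjectDetector API's actual output format.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Detection:
    """Hypothetical detector output: a class label and a confidence in [0, 1]."""

    label: str
    confidence: float


def sort_into_bins(
    detections: Iterable[Detection],
    bin_for_label: Dict[str, str],
    min_confidence: float = 0.8,
    review_bin: str = "manual_review",
) -> Dict[str, List[Detection]]:
    """Group detections by destination bin.

    Low-confidence detections and labels without a configured bin go to
    `review_bin` instead of being sorted automatically.
    """
    bins: Dict[str, List[Detection]] = defaultdict(list)
    for det in detections:
        if det.confidence < min_confidence or det.label not in bin_for_label:
            bins[review_bin].append(det)
        else:
            bins[bin_for_label[det.label]].append(det)
    return dict(bins)


if __name__ == "__main__":
    seen = [Detection("bolt", 0.95), Detection("nut", 0.50), Detection("washer", 0.90)]
    routing = {"bolt": "bin_a", "nut": "bin_b"}
    for bin_name, items in sort_into_bins(seen, routing).items():
        print(bin_name, [d.label for d in items])
```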

Step 5: Test and Optimize

Deploy the code on a physical Optimus unit or simulate it in Tesla's Gazebo-based robotics simulator. Monitor performance metrics like latency and accuracy, then tweak parameters using the SDK's built-in debugger.
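
Latency can also be measured independently of any SDK tooling. The sketch below times a callable repeatedly and reports the mean, 95th percentile, and maximum in milliseconds using only the standard library. The function name `measure_latency_ms` and the stand-in workload are assumptions; replace the lambda with the call you actually want to profile.

```python
import statistics
import time
from typing import Callable, Dict, List


def measure_latency_ms(task: Callable[[], object], runs: int = 100) -> Dict[str, float]:
    """Time `task` over `runs` calls and summarize the latency in milliseconds."""
    if runs < 2:
        raise ValueError("runs must be at least 2")
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95 = statistics.quantiles(samples, n=20, method="inclusive")[-1]
    return {"mean": statistics.fmean(samples), "p95": p95, "max": max(samples)}


if __name__ == "__main__":
    # Stand-in workload; a real test would call the perception code under test.
    stats = measure_latency_ms(lambda: sum(range(10_000)))
    print({name: round(value, 3) for name, value in stats.items()})
```

Tail latency (p95 or max) is usually the more useful number for time-critical tasks, since a good mean can hide occasional slow calls.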

Real-World Applications of the SDK

1. Automated Quality Control

Optimus bots equipped with the SDK can inspect products for defects using high-resolution cameras and AI models. For example:

- Detect scratches on car panels with 99.5% accuracy.
- Flag misassembled parts in real time.

2. Collaborative Material Handling

Multiple Optimus units can work together to move heavy components. The SDK's Swarm API allows:

- Load balancing across bots.
- Dynamic rerouting to avoid collisions.
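
Load balancing across bots can be illustrated with a simple greedy heuristic: take the heaviest tasks first and always hand the next one to the bot with the smallest load so far. This is a generic scheduling sketch, not the Swarm API's algorithm, which this guide does not describe; `balance_loads`, the task names, and the weights are hypothetical.

```python
import heapq
from typing import Dict, List


def balance_loads(task_weights_kg: Dict[str, float], bot_ids: List[str]) -> Dict[str, List[str]]:
    """Assign each task to the currently least-loaded bot, heaviest tasks first."""
    if not bot_ids:
        raise ValueError("need at least one bot")
    # Min-heap of (current load, bot id); the least-loaded bot is always on top.
    heap = [(0.0, bot_id) for bot_id in bot_ids]
    heapq.heapify(heap)
    assignment: Dict[str, List[str]] = {bot_id: [] for bot_id in bot_ids}
    ordered = sorted(task_weights_kg.items(), key=lambda item: item[1], reverse=True)
    for task, weight in ordered:
        load, bot_id = heapq.heappop(heap)
        assignment[bot_id].append(task)
        heapq.heappush(heap, (load + weight, bot_id))
    return assignment


if __name__ == "__main__":
    # Made-up component weights in kilograms.
    tasks = {"door": 30.0, "seat": 20.0, "wheel": 15.0, "panel": 5.0}
    print(balance_loads(tasks, ["bot_a", "bot_b"]))
```

The greedy rule does not guarantee an optimal split, but it is cheap to compute and usually close enough for a first pass.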

Case Study: Tesla's Fremont factory reduced assembly line downtime by 30% using coordinated Optimus teams.

3. Predictive Maintenance

By analyzing sensor data (vibration, temperature), the SDK predicts machinery failures before they occur.
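
One simple way to flag unusual sensor readings is a rolling z-score: compare each new reading with the mean and standard deviation of the readings just before it. The sketch below shows that technique on made-up vibration values. It is a stand-in for illustration, not the SDK's prediction model, and `flag_anomalies`, the window size, and the threshold are assumptions.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, List


def flag_anomalies(readings: Iterable[float], window: int = 20, threshold: float = 3.0) -> List[int]:
    """Return indices of readings that deviate from the mean of the preceding
    `window` readings by more than `threshold` standard deviations."""
    history: Deque[float] = deque(maxlen=window)
    flagged: List[int] = []
    for index, value in enumerate(readings):
        # Only judge a reading once a full window of earlier readings exists.
        if len(history) == window:
            mean = statistics.fmean(history)
            stdev = statistics.pstdev(history)
            if stdev > 0 and abs(value - mean) > threshold * stdev:
                flagged.append(index)
        history.append(value)
    return flagged


if __name__ == "__main__":
    # Made-up vibration amplitudes with one spike.
    vibration = [1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 1.1, 0.9, 1.0, 5.0, 1.0]
    print(flag_anomalies(vibration, window=10))
```

A flagged index is only a prompt for inspection; real failure prediction would need trend analysis and labeled failure data on top of this kind of check.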

Why Developers Love the Tesla Optimus SDK

| Feature | Benefit |
|---|---|
| Low Latency | <50ms response time for critical tasks |
| Scalability | Supports fleets of 100+ robots |
| Cross-Platform | Compatible with ROS, Docker, and Kubernetes |

Troubleshooting Common Issues

Sensor Drift: Recalibrate sensors using calibrate_sensors.py.

Mapping Errors: Ensure LiDAR coverage isn't blocked by moving objects.

API Timeouts: Increase timeout settings in config/sdk_settings.yaml.

Future-Proof Your Workflow

Stay ahead by:

- Subscribing to Tesla's Developer Insider Newsletter for API updates.
- Joining the Optimus Developer Community on Discord for peer support.