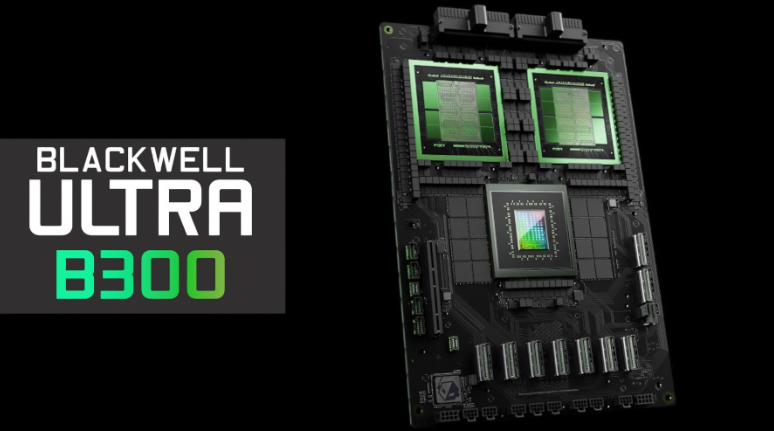

The NVIDIA B300 Superchip is fabricated on TSMC's custom 4NP process. Using dynamic power distribution, it delivers a substantial jump in performance: compared with the previous-generation B200, its FP4 compute throughput is 50% higher, reaching 15 peta-operations per second.

The core innovations of the B300 include the following aspects:

NVLink 4.0 Interconnect Technology: Bandwidth has been increased to 141GB/s, supporting a unified memory space across a 72-GPU cluster and easing the bottleneck in multi-GPU collaboration.

LPCAMM2 Memory Architecture: It replaces traditional LPDDR5x, with 2x the memory bandwidth and 40% lower latency.

Modular Design: It adopts the SXM Puck sub-board solution, allowing customers to choose their own combination of Grace CPU and HMC chips.

The B300's 12-layer (12-Hi) stacked HBM3E memory compares with the B200's as follows:

| Parameter | B300 | B200 | Improvement |
|---|---|---|---|
| Memory Capacity | 288GB | 192GB | 50% |
| Stacking Layers | 12-Hi | 8-Hi | 50% |
| Memory Bandwidth | 8TB/s | 8TB/s | Unchanged |
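The improvement column can be re-derived from the two spec columns. The following is a minimal sketch of that arithmetic; the dictionary layout and the helper name `improvement_pct` are illustrative assumptions, and the numbers are simply copied from the table.

```python
# Spec values copied from the comparison table: (B300, B200).
# The structure and names here are illustrative, not from any NVIDIA tool.
specs = {
    "Memory Capacity (GB)": (288, 192),
    "Stacking Layers (Hi)": (12, 8),
    "Memory Bandwidth (TB/s)": (8, 8),
}


def improvement_pct(new, old):
    """Relative gain of `new` over `old`, expressed in percent."""
    return (new - old) / old * 100


# Compute the relative improvement for every row of the table.
improvements = {
    name: improvement_pct(b300, b200) for name, (b300, b200) in specs.items()
}

for name, pct in improvements.items():
    label = "Unchanged" if pct == 0 else f"{pct:.0f}%"
    print(f"{name}: {label}")
```

Both the capacity and the stack height grow by the same ratio (1.5x), which is consistent with the extra capacity coming from taller stacks rather than from more stacks.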

This design enables the GB300 Superchip, in the NVL72 configuration, to run long chain-of-thought reasoning with up to 100,000 tokens, at one third the cost of the H200. As Quantumbit analyzed: "This is the only hardware solution for the real-time inference of OpenAI o3 models."

The B300 Superchip has restructured data centers through three major technological innovations:

Liquid Cooling Revolution: With a fully water-cooled design, heat dissipation efficiency is 3x higher, and power density reaches 1.4 kW/cm².

Network Upgrade: The 800G ConnectX-8 SuperNIC provides double the bandwidth and supports 1.6T optical modules.

Energy-Efficiency Breakthrough: Performance per watt is 30% higher than on the Ampere architecture, and the same multimillion-dollar electricity budget can support model training at twice the scale.

The B300 Superchip has the following application scenarios in different industries:

Autonomous Driving: It can process 8 streams of 8K camera data in real time, with latency below 20ms.

Drug Research and Development: Molecular dynamics simulations run 10x faster, and the training cost of AlphaFold 3 is reduced by 40%.

Intelligent Manufacturing: Digital twin systems can achieve microsecond-level predictive maintenance of industrial equipment.

In the face of the challenge from AMD's MI350X, the B300 Superchip maintains its leading position with three major advantages:

Ecosystem Barrier: The CUDA ecosystem covers 90% of AI frameworks, while the MI350X only supports PyTorch and JAX.

Supply Chain Control: It holds an exclusive HBM3E supply period of up to 9 months, with Samsung excluded.

Customization Capability: The modular design supports quick adaptation by more than 20 server manufacturers.

The commercialization plan of the B300 Superchip is as follows:

Production Plan: Trial production starts in the second quarter of 2025, with official deliveries in the third quarter. First-year production capacity is locked at 1.5 million units.

Pricing Strategy: The price of a single card is set between $35,000 and $40,000, which is a 25% premium over the H200.

Customer Cases: Microsoft Azure has deployed the first batch of GB300 clusters, with training efficiency improved 2.8x.
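The pricing figures above also imply a reference price for the H200. The sketch below works backward from the quoted range, assuming the 25% premium applies uniformly to both ends of it; the function name `implied_base_price` is hypothetical, and the result is an inference from the article's numbers, not a published H200 price.

```python
def implied_base_price(price, premium_pct):
    """Price of the baseline product, given a price that includes a premium.

    If price = base * (1 + premium), then base = price / (1 + premium).
    """
    return price / (1 + premium_pct / 100)


# Quoted B300 single-card price range in USD, and the stated premium.
b300_low, b300_high = 35_000, 40_000
premium_pct = 25

# Assumption: the same 25% premium holds at both ends of the range.
h200_low = implied_base_price(b300_low, premium_pct)
h200_high = implied_base_price(b300_high, premium_pct)

print(f"Implied H200 price range: ${h200_low:,.0f} to ${h200_high:,.0f}")
```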

NVIDIA has revealed that the next-generation architecture will focus on the following aspects:

Photonic Computing Integration: Achieving optical-electrical signal conversion at the chip level, with bandwidth exceeding 20TB/s.

Compute-in-Memory Design: The spacing between the 3D-stacked HBM4 and computing units is shortened to 10nm.

Quantum Collaborative Computing: Connecting quantum processors through NVLink to solve combinatorial optimization problems.

According to the Gartner report, the B300 Superchip will drive the following changes in the industry:

AI Computing Cost: By 2026, the cost of training models with hundreds of billions of parameters will be reduced from $50 million to $8 million.

Energy-Efficiency Standards: The PUE value will be optimized from 1.2 to 0.8, saving more than $1.2 billion in electricity costs per year.

Employment Structure: It will create emerging occupations such as "AI System Architect", with demand reaching 1.2 million people by 2027.
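To put the AI computing cost projection above in relative terms, the quoted drop from $50 million to $8 million can be expressed as a percentage reduction and as a cost ratio. This is a minimal sketch of that arithmetic only; the variable and function names are illustrative assumptions, and the dollar figures are the ones cited in the text.

```python
def reduction_pct(before, after):
    """Percentage by which `after` is lower than `before`."""
    return (before - after) / before * 100


def cost_ratio(before, after):
    """How many times cheaper `after` is compared with `before`."""
    return before / after


# Projected training cost for a model with hundreds of billions of
# parameters, in millions of USD, as cited in the text.
cost_before_musd = 50
cost_after_musd = 8

pct = reduction_pct(cost_before_musd, cost_after_musd)
ratio = cost_ratio(cost_before_musd, cost_after_musd)

print(f"Reduction: {pct:.0f}% ({ratio:.2f}x cheaper)")
```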