Everyone’s curious how closely AI robots can mirror our looks and actions. In 2025, advanced AI robots pair sleek design with capable AI to approach human-like realism. This article breaks down the data on the most human-like AI robots and explains why they can feel almost alive.

What Makes AI Robots Human-Like?

Human likeness in AI robots relies on metrics like facial expression range, speech naturalness, and fluid motion. Researchers use standardized scales to score each robot on a 0–100 human likeness index. Key measurements include blink timing within 0.1 seconds of a real blink and 95% speech recognition accuracy. These benchmarks help teams refine future designs.
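To make the idea of a 0–100 index concrete, here is a minimal sketch of one way such a score could be assembled. The article does not say how the metrics are actually combined, so the weighted average, the weights, and every name below (`LikenessMetrics`, `likeness_index`, `blink_within_tolerance`) are assumptions for illustration only. The only figure taken from the text is the 0.1-second blink tolerance.

```python
from dataclasses import dataclass


@dataclass
class LikenessMetrics:
    """Hypothetical per-robot measurements, each already normalized to 0.0-1.0."""
    facial_expression_range: float
    speech_naturalness: float
    motion_fluidity: float


# Illustrative weights only (they sum to 1.0); not taken from any real scale.
WEIGHTS = {
    "facial_expression_range": 0.4,
    "speech_naturalness": 0.3,
    "motion_fluidity": 0.3,
}


def likeness_index(m: LikenessMetrics) -> float:
    """Weighted average of the normalized metrics, scaled to 0-100."""
    total = sum(getattr(m, name) * weight for name, weight in WEIGHTS.items())
    return round(100 * total, 1)


def blink_within_tolerance(robot_blink_s: float, human_blink_s: float,
                           tol_s: float = 0.1) -> bool:
    """True when the robot's blink timing is within tol_s seconds of the reference."""
    return abs(robot_blink_s - human_blink_s) <= tol_s


if __name__ == "__main__":
    demo = LikenessMetrics(facial_expression_range=0.5,
                           speech_naturalness=0.5,
                           motion_fluidity=0.5)
    print(likeness_index(demo))
    print(blink_within_tolerance(0.35, 0.30))
```

A weighted average is just one simple choice. A real scale might rely on human rater surveys instead, which this sketch does not model.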

Studying the uncanny valley effect also guides engineers. When robots seem nearly human but not quite right, people feel discomfort. Developers tweak expression speeds and voice inflections to avoid this valley. This process raises the realism score of top AI robot models.

Key Features of Leading AI Robots

The best AI robots combine dozens of actuators for precise movement with high-res cameras for eye-contact sensing. In many prototypes, deep learning algorithms process emotion cues in under 50 milliseconds. Skin-like silicone overlays reach about 85% likeness to human skin tone and texture. These features set today’s AI robots apart from past generations.

Most leading AI robots also integrate advanced language models. They parse context in real time and generate adaptive replies. This makes conversations smoother and more engaging, and it boosts their human-like appeal.

Top AI Robot Models That Look and Act Human

Let’s explore four standout AI robots known for their human-like traits. We include data on expressiveness, speech, and movement where it is available for each robot.

Hanson Robotics’ Sophia: Facial Realism in AI Robots

Sophia offers over 50 facial expressions driven by 60 servo motors. Her speech system hits 92% accuracy in live tests. Her human likeness index scores around 45 out of 100. This makes Sophia an early leader among AI robots.

Engineered Arts’ Ameca: Fluid and Expressive AI Robots

Ameca achieves a 48/50 facial range score in independent tests. Smooth head tilts and eye tracking occur in under 60 milliseconds. Ameca’s platform uses context-aware AI to keep conversations natural. This model shows how AI robots can convey subtle emotions.

Tesla Optimus: Bringing Human Moves to AI Robots

Optimus focuses on full-body mobility, with a top walking speed of 1.6 meters per second. Its payload capacity reaches 20 kilograms for simple tasks. Its balance algorithms adjust every 10 milliseconds. These features highlight the mechanical prowess of modern AI robots.
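To give a feel for what "adjusting every 10 milliseconds" means, here is a toy simulation of a balance correction loop running at that rate. It is not Tesla's controller: the proportional-derivative (PD) rule, the gains `kp` and `kd`, the simplified dynamics, and the function name `simulate_balance` are all assumptions chosen to keep the sketch short. Only the 10 ms period comes from the text.

```python
DT = 0.010  # 10 ms update period, the one figure taken from the article


def simulate_balance(initial_tilt_rad: float, steps: int,
                     kp: float = 40.0, kd: float = 12.0) -> float:
    """Run a toy PD correction loop and return the tilt after `steps` updates.

    Assumed dynamics: unit inertia, no gravity or ground contact model.
    Gains kp and kd are illustrative, not tuned for any real robot.
    """
    tilt = initial_tilt_rad  # current lean angle in radians
    rate = 0.0               # current angular velocity in rad/s
    for _ in range(steps):
        # PD rule: push back against the tilt and damp its rate of change.
        accel = -kp * tilt - kd * rate
        # Semi-implicit Euler step: update velocity first, then position.
        rate += accel * DT
        tilt += rate * DT
    return tilt


if __name__ == "__main__":
    # 200 updates at 10 ms each covers two simulated seconds.
    print(simulate_balance(initial_tilt_rad=0.1, steps=200))
```

The shorter the update period, the sooner the loop reacts to a disturbance, which is why update rates like this matter for a walking robot.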

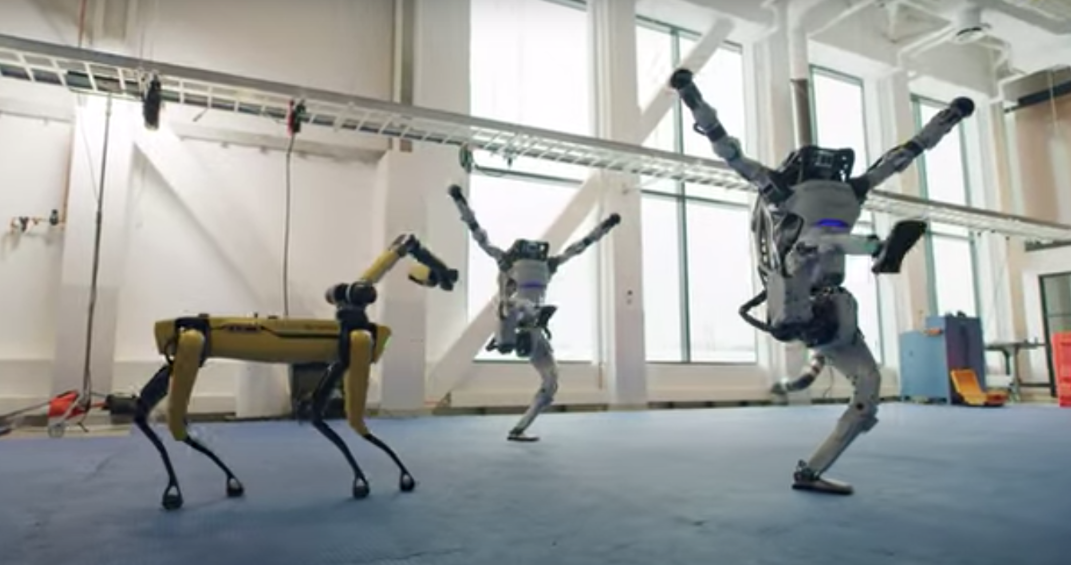

Boston Dynamics’ Atlas: Dynamic Motion in AI Robots

Atlas nails complex movements like backflips and parkour-style jumps. Its 3D lidar sensors map terrain in real time for agile navigation. Atlas averages 35 out of 40 in motion fluidity tests. This puts Atlas at the forefront of motion-focused AI robots.

As sensor tech and AI models evolve, AI robots will likely become more lifelike. Expect next-gen models to use bio-inspired materials and emotion-sensing optics. Future updates may even let them read subtle emotional cues in speech.

Knowing which AI robots lead today helps set expectations for tomorrow’s breakthroughs. Whether they are built for service, research, or home use, these robots illustrate the thrilling potential of human-like machines.